Dan Crisan

Generative Modelling of Stochastic Rotating Shallow Water Noise

Mar 15, 2024

Abstract: In recent work, the authors have developed a generic methodology for calibrating the noise in fluid dynamics stochastic partial differential equations where the stochasticity was introduced to parametrize subgrid-scale processes. The stochastic parametrization of subgrid-scale processes is required in the estimation of uncertainty in weather and climate predictions, to represent systematic model errors arising from subgrid-scale fluctuations. The previous methodology used a principal component analysis (PCA) technique based on the ansatz that the increments of the stochastic parametrization are normally distributed. In this paper, the PCA technique is replaced by a generative model technique. This enables us to avoid imposing additional constraints on the increments. The methodology is tested on a stochastic rotating shallow water model with the elevation variable of the model used as input data. The numerical simulations show that the noise is indeed non-Gaussian. The generative modelling technique gives good RMSE, CRPS and forecast rank histogram results.
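
The three verification measures named in the abstract can be illustrated with a minimal sketch. The abstract does not say how the authors compute them; the code below assumes the standard empirical ensemble definitions, and the function names `rmse`, `ensemble_crps` and `observation_rank` are hypothetical.

```python
import numpy as np

def rmse(forecast_mean, truth):
    """Root-mean-square error between a forecast (e.g. ensemble mean) and the truth."""
    forecast_mean = np.asarray(forecast_mean, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return float(np.sqrt(np.mean((forecast_mean - truth) ** 2)))

def ensemble_crps(ensemble, observation):
    """Empirical CRPS for a scalar observation: E|X - y| - 0.5 * E|X - X'|."""
    ensemble = np.asarray(ensemble, dtype=float)
    accuracy = np.mean(np.abs(ensemble - observation))
    # Mean absolute difference over all ordered pairs of ensemble members.
    spread = np.mean(np.abs(ensemble[:, None] - ensemble[None, :]))
    return float(accuracy - 0.5 * spread)

def observation_rank(ensemble, observation):
    """Number of ensemble members strictly below the observation.

    Collecting this rank over many forecasts gives the rank histogram;
    a roughly flat histogram suggests a well-calibrated ensemble.
    """
    return int(np.sum(np.asarray(ensemble, dtype=float) < observation))
```

Lower RMSE and CRPS indicate a better forecast; the CRPS additionally rewards an ensemble whose spread matches its error.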

An application of the splitting-up method for the computation of a neural network representation for the solution for the filtering equations

Jan 10, 2022

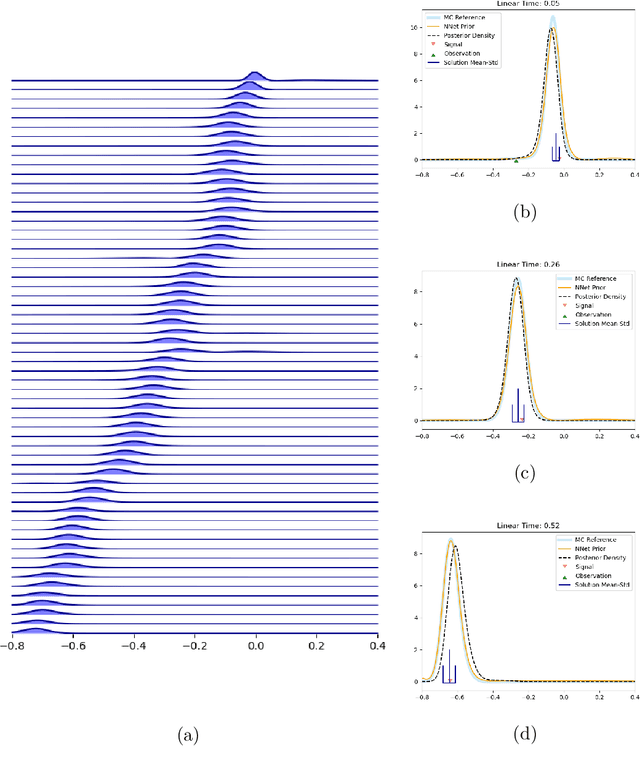

Abstract: The filtering equations govern the evolution of the conditional distribution of a signal process given partial, and possibly noisy, observations arriving sequentially in time. Their numerical approximation plays a central role in many real-life applications, including numerical weather prediction, finance and engineering. One of the classical approaches to approximate the solution of the filtering equations is to use a PDE-inspired method, called the splitting-up method, initiated by Gyöngy, Krylov and Le Gland, among other contributors. This method, and other PDE-based approaches, have particular applicability for solving low-dimensional problems. In this work we combine this method with a neural network representation. The new methodology is used to produce an approximation of the unnormalised conditional distribution of the signal process. We further develop a recursive normalisation procedure to recover the normalised conditional distribution of the signal process. The new scheme can be iterated over multiple time steps whilst keeping its asymptotic unbiasedness property intact. We test the neural network approximations with numerical approximation results for the Kalman and Benes filter.
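
The predict, correct and normalise structure described in the abstract can be illustrated on a one-dimensional grid. This is only a sketch under strong assumptions, not the authors' scheme: it uses a discrete-time linear-Gaussian model, replaces the PDE solve by an exact Gaussian transition kernel, and contains no neural network. The model parameters `a`, `q`, `r` and all function names are hypothetical. A Kalman filter on the same model serves as the reference, mirroring the benchmark mentioned above.

```python
import numpy as np

def gaussian_pdf(x, mean, var):
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)

def grid_filter_means(observations, grid, a=0.9, q=0.5, r=0.4):
    """Grid filter for X_{k+1} = a X_k + N(0, q), Y_k = X_k + N(0, r), X_0 ~ N(0, 1)."""
    dx = grid[1] - grid[0]
    density = gaussian_pdf(grid, 0.0, 1.0)
    # kernel[i, j] = transition density from grid[j] to grid[i].
    kernel = gaussian_pdf(grid[:, None], a * grid[None, :], q)
    means = []
    for y in observations:
        # Prediction step: push the density through the signal dynamics.
        predicted = kernel @ density * dx
        # Correction step: multiply by the likelihood (unnormalised density).
        unnormalised = predicted * np.exp(-0.5 * (y - grid) ** 2 / r)
        # Normalisation step: recover a probability density on the grid.
        density = unnormalised / (unnormalised.sum() * dx)
        means.append(float((grid * density).sum() * dx))
    return means

def kalman_means(observations, a=0.9, q=0.5, r=0.4):
    """Exact conditional means for the same model, used as a reference."""
    m, p = 0.0, 1.0
    means = []
    for y in observations:
        m_pred, p_pred = a * m, a * a * p + q
        gain = p_pred / (p_pred + r)
        m = m_pred + gain * (y - m_pred)
        p = (1.0 - gain) * p_pred
        means.append(m)
    return means
```

On a sufficiently fine and wide grid, for example `np.linspace(-10, 10, 401)`, the two functions should return nearly identical means. The grid cost grows quickly with dimension, which is consistent with the abstract's remark that PDE-based approaches suit low-dimensional problems.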

Parallel sequential Monte Carlo for stochastic optimization

Nov 23, 2018

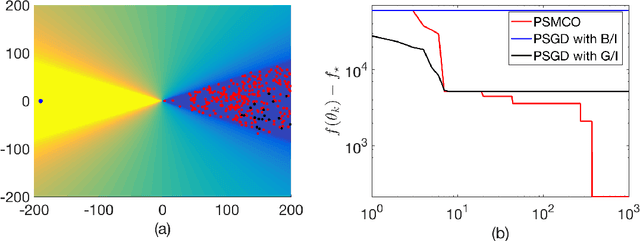

Abstract: We propose a parallel sequential Monte Carlo optimization method to minimize cost functions which are computed as the sum of many component functions. The proposed scheme is a stochastic zeroth-order optimization algorithm which uses only evaluations of small subsets of component functions to collect information from the problem. The algorithm consists of a bank of samplers and generates particle approximations of several sequences of probability measures. These measures are constructed in such a way that they have associated probability density functions whose global maxima coincide with the global minima of the cost function. The algorithm selects the best-performing sampler and uses it to approximate a global minimum of the cost function. We prove analytically that the resulting estimator converges to a global minimum of the cost function almost surely as the number of Monte Carlo samples tends to infinity. We show that the algorithm can tackle cost functions with multiple minima or with wide flat regions.
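
The general idea of the abstract (particles reweighted by mini-batch cost evaluations, several samplers run side by side, and the best one selected) can be sketched as follows. This is a simplified illustration and not the authors' algorithm: the weighting `exp(-cost)`, the Gaussian jitter, the multinomial resampling, the selection by full cost, and all names and default parameters are assumptions made for the example.

```python
import numpy as np

def smc_sampler(components, rng, n_particles=200, n_steps=100,
                batch_size=5, jitter=0.3):
    """One sampler: gradient-free search for the minimiser of sum_i components[i](x)."""
    particles = rng.uniform(-10.0, 10.0, size=n_particles)
    for _ in range(n_steps):
        # Evaluate only a small random subset of the component functions.
        batch = rng.choice(len(components), size=batch_size, replace=False)
        cost = sum(components[i](particles) for i in batch)
        # Low cost means high weight; subtract the minimum for numerical stability.
        weights = np.exp(-(cost - cost.min()))
        weights /= weights.sum()
        # Multinomial resampling followed by a random-walk jitter.
        idx = rng.choice(n_particles, size=n_particles, p=weights)
        particles = particles[idx] + jitter * rng.normal(size=n_particles)
    return float(particles.mean())

def parallel_smc_minimise(components, n_samplers=4, seed=0):
    """Run a bank of independent samplers and keep the best estimate.

    For simplicity the samplers run in a loop and are compared on the
    full cost; both choices are assumptions of this sketch.
    """
    def full_cost(x):
        return sum(f(x) for f in components)

    estimates = [smc_sampler(components, np.random.default_rng(seed + k))
                 for k in range(n_samplers)]
    return min(estimates, key=full_cost)

# Usage: a sum of 20 quadratics whose global minimum lies at x = 1.
centres = np.linspace(0.5, 1.5, 20)
components = [lambda x, c=c: (x - c) ** 2 for c in centres]
estimate = parallel_smc_minimise(components)
```

Because only function evaluations are used, the same sketch applies to non-differentiable components; the mini-batch noise means the estimate fluctuates around the minimiser rather than converging exactly for a fixed number of particles.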
