Damien Coyle

Towards improving Alzheimer's intervention: a machine learning approach for biomarker detection through combining MEG and MRI pipelines

Aug 09, 2024

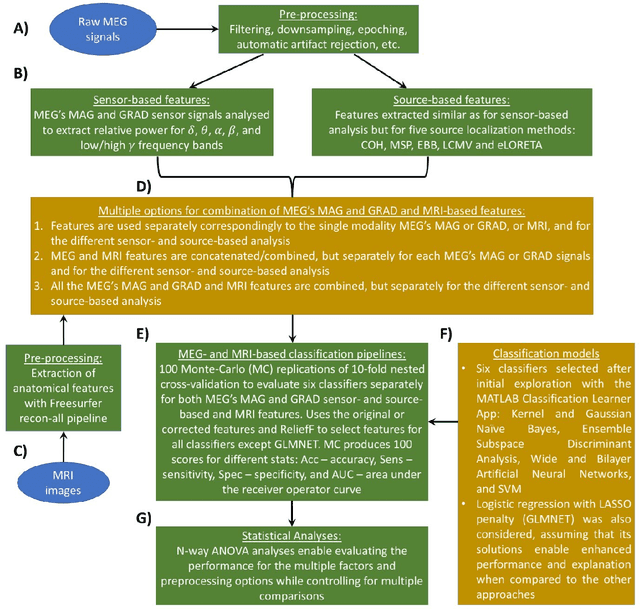

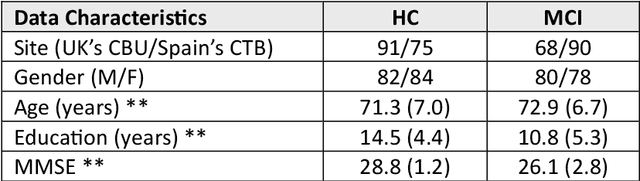

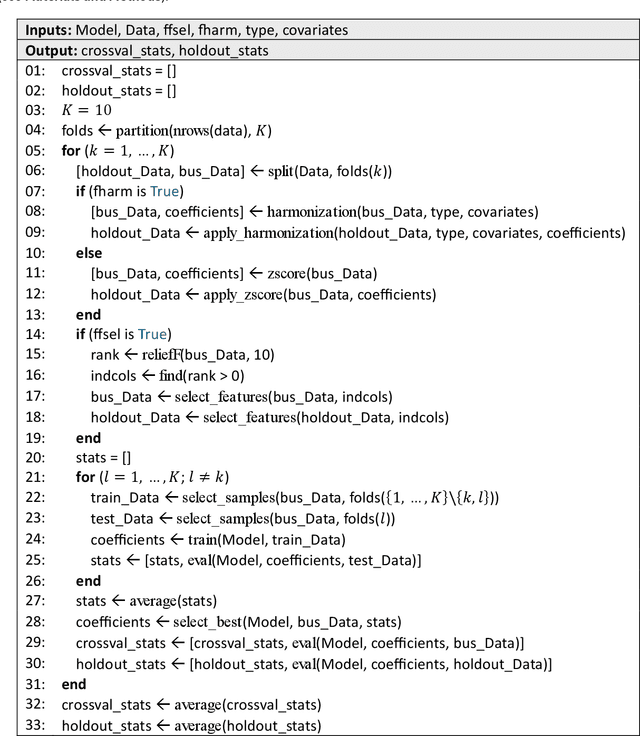

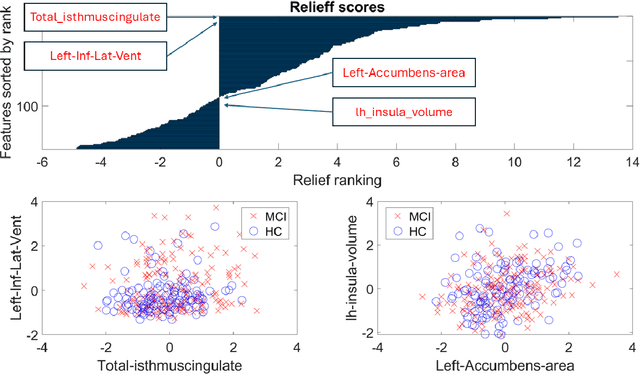

Abstract: Magnetoencephalography (MEG) is a non-invasive neuroimaging technique with excellent temporal and spatial resolution, crucial for studying brain function in dementia and Alzheimer's disease. It can identify changes in brain activity at various stages of Alzheimer's disease, including the preclinical and prodromal phases. MEG may detect pathological changes before clinical symptoms appear, offering potential biomarkers for intervention. This study evaluates classification techniques using MEG features to distinguish between healthy controls (HC) and participants with mild cognitive impairment (MCI) from the BioFIND study. We compare MEG-based biomarkers with MRI-based anatomical features, both independently and combined. We used 3 Tesla MRI and MEG data from 324 BioFIND participants: 158 MCI and 166 HC. Analyses were performed using MATLAB with the SPM12 and OSL toolboxes. Machine learning analyses, including 100 Monte Carlo replications of 10-fold cross-validation, were conducted in sensor and source space. Combining MRI with MEG features achieved the best performance: an accuracy of 0.76 and an AUC of 0.82 for GLMNET using LCMV source-based MEG. MEG-only analyses using LCMV and eLORETA also performed well, suggesting that combining uncorrected MEG with z-score-corrected MRI features is optimal.
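The evaluation protocol named in the abstract, repeated (Monte Carlo) replications of 10-fold cross-validation, can be illustrated with a small sketch. This is not the authors' MATLAB pipeline: the synthetic two-class data, the nearest-centroid classifier, and all function names below are assumptions chosen only to keep the example self-contained.

```python
import random
from statistics import mean


def make_toy_data(n_per_class=60, shift=1.5, seed=1):
    """Two Gaussian classes in 2-D (synthetic stand-in, not BioFIND data)."""
    rng = random.Random(seed)
    X, y = [], []
    for label in (0, 1):
        for _ in range(n_per_class):
            X.append([rng.gauss(label * shift, 1.0), rng.gauss(label * shift, 1.0)])
            y.append(label)
    return X, y


def kfold_indices(n, k, rng):
    """Shuffle 0..n-1 and split the indices into k nearly equal folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_centroids(X, y):
    """Nearest-centroid 'training': the mean feature vector of each class."""
    centroids = {}
    for label in set(y):
        rows = [x for x, t in zip(X, y) if t == label]
        centroids[label] = [mean(col) for col in zip(*rows)]
    return centroids


def predict(centroids, x):
    """Assign x to the class whose centroid is closest (squared Euclidean)."""
    def dist2(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda label: dist2(centroids[label]))


def repeated_kfold_accuracy(X, y, k=10, replications=100, seed=0):
    """Return one pooled accuracy per replication of k-fold cross-validation."""
    rng = random.Random(seed)
    scores = []
    for _ in range(replications):
        correct = 0
        # Each replication draws a fresh random partition into k folds.
        for test_idx in kfold_indices(len(X), k, rng):
            held_out = set(test_idx)
            train = [i for i in range(len(X)) if i not in held_out]
            model = fit_centroids([X[i] for i in train], [y[i] for i in train])
            correct += sum(predict(model, X[i]) == y[i] for i in test_idx)
        scores.append(correct / len(X))
    return scores


X, y = make_toy_data()
scores = repeated_kfold_accuracy(X, y, k=10, replications=100)
print(f"mean accuracy over {len(scores)} replications: {mean(scores):.3f}")
```

Reporting the mean (and spread) of the per-replication scores reduces the dependence of the estimate on any single random partition of the data.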

R2 Indicator and Deep Reinforcement Learning Enhanced Adaptive Multi-Objective Evolutionary Algorithm

Apr 11, 2024

Abstract: Choosing an appropriate optimization algorithm is essential to achieving success in optimization challenges. Here we present a new evolutionary algorithm structure that utilizes a reinforcement learning-based agent aimed at addressing these issues. The agent employs a double deep Q-network to choose a specific evolutionary operator based on feedback it receives from the environment during optimization. The algorithm's structure contains five single-objective evolutionary algorithm operators. This single-objective structure is transformed into a multi-objective one using the R2 indicator. This indicator serves two purposes within our structure: first, it renders the algorithm multi-objective, and second, it provides a means to evaluate each algorithm's performance in each generation to facilitate constructing the reinforcement learning-based reward function. The proposed R2-reinforcement learning multi-objective evolutionary algorithm (R2-RLMOEA) is compared with six other multi-objective algorithms that are based on R2 indicators. These six algorithms include the operators used in R2-RLMOEA as well as an R2 indicator-based algorithm that randomly selects operators during optimization. We benchmark performance using the CEC09 functions, with performance measured by inverted generational distance and spacing. The R2-RLMOEA algorithm outperforms all other algorithms with strong statistical significance (p<0.001) when compared on the average spacing metric across all ten benchmarks.
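For readers unfamiliar with the R2 indicator, the sketch below computes its common unary form: the average, over a set of weight vectors, of the best weighted Tchebycheff distance from a solution set to an ideal point (lower is better). The abstract does not state which R2 variant, weight set, or reference point R2-RLMOEA uses, so the evenly spaced 2-D weights, the ideal point, and the function names here are assumptions for illustration.

```python
def uniform_weights_2d(n):
    """n evenly spaced weight vectors (w, 1 - w) for a two-objective problem."""
    return [(i / (n - 1), 1.0 - i / (n - 1)) for i in range(n)]


def r2_indicator(front, weights, ideal):
    """Unary R2 indicator with the weighted Tchebycheff utility.

    front   : list of objective vectors (minimization assumed)
    weights : list of weight vectors, one per scalarization
    ideal   : reference (ideal) point in objective space
    """
    total = 0.0
    for w in weights:
        # Best (smallest) weighted Tchebycheff distance achieved by any solution.
        total += min(
            max(wj * abs(aj - zj) for wj, aj, zj in zip(w, a, ideal))
            for a in front
        )
    return total / len(weights)


weights = uniform_weights_2d(11)
ideal = (0.0, 0.0)
front_a = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]   # closer to the ideal point
front_b = [(0.2, 1.2), (0.7, 0.7), (1.2, 0.2)]   # front_a shifted away by 0.2
print(r2_indicator(front_a, weights, ideal), r2_indicator(front_b, weights, ideal))
```

Because the indicator collapses a whole solution set into a single scalar, it can serve as a per-generation quality signal of the kind the abstract describes feeding into the reward function.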

Deep Predictive Coding with Bi-directional Propagation for Classification and Reconstruction

May 29, 2023

Abstract: This paper presents a new learning algorithm, termed Deep Bi-directional Predictive Coding (DBPC), that allows developing networks that simultaneously perform classification and reconstruction tasks using the same weights. Predictive Coding (PC) has emerged as a prominent theory underlying information processing in the brain. The general concept for learning in PC is that each layer learns to predict the activities of neurons in the previous layer, which enables local computation of error and in-parallel learning across layers. In this paper, we extend existing PC approaches by developing a network which supports both feedforward and feedback propagation of information. Each layer in a network trained using DBPC learns to predict the activities of neurons in the previous and next layers, which allows the network to simultaneously perform classification and reconstruction tasks using feedforward and feedback propagation, respectively. DBPC also relies on locally available information for learning, thus enabling in-parallel learning across all layers in the network. The proposed approach has been developed for training both fully connected networks and convolutional neural networks. The performance of DBPC has been evaluated on both classification and reconstruction tasks using the MNIST and FashionMNIST datasets. The classification and reconstruction performance of networks trained using DBPC is similar to that of the other approaches used for comparison, but DBPC uses a significantly smaller network. Further, a significant benefit of DBPC is its ability to achieve this performance using locally available information and in-parallel learning mechanisms, which results in an efficient training protocol. These results clearly indicate that DBPC is a much more efficient approach for developing networks that can simultaneously perform both classification and reconstruction.
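The idea of learning from locally available prediction errors in both directions can be illustrated with a toy example. This is not the DBPC algorithm, whose update rules the abstract does not give. It assumes a single linear layer whose weight matrix `W` predicts the next layer's activity (`W x`) and, transposed, the previous layer's activity (`Wᵀ y`), with both activities held fixed; all names are hypothetical.

```python
def matvec(W, x):
    """Feedforward prediction of the next layer: W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matvec_t(W, y):
    """Feedback prediction of the previous layer: W^T y (same weights)."""
    return [sum(W[i][j] * y[i] for i in range(len(W))) for j in range(len(W[0]))]


def local_errors(W, x, y):
    """Prediction errors available at this layer only."""
    e_fwd = [yi - pi for yi, pi in zip(y, matvec(W, x))]
    e_bwd = [xi - pi for xi, pi in zip(x, matvec_t(W, y))]
    return e_fwd, e_bwd


def total_error(W, x, y):
    """Sum of the two squared local prediction errors (times 1/2)."""
    e_fwd, e_bwd = local_errors(W, x, y)
    return 0.5 * sum(e * e for e in e_fwd) + 0.5 * sum(e * e for e in e_bwd)


def local_update(W, x, y, lr=0.05):
    """One gradient step on total_error, using only x, y and this layer's W."""
    e_fwd, e_bwd = local_errors(W, x, y)
    for i in range(len(W)):
        for j in range(len(W[0])):
            # Negative gradient: e_fwd[i] * x[j] (forward) + y[i] * e_bwd[j] (backward).
            W[i][j] += lr * (e_fwd[i] * x[j] + y[i] * e_bwd[j])


x = [1.0, 0.5, 0.0, 0.25]          # activity of the previous layer (toy values)
y = [0.0, 1.0, 0.0]                # activity of the next layer (toy values)
W = [[0.0] * len(x) for _ in y]    # 3 x 4 weight matrix, initialised to zero
error_before = total_error(W, x, y)
for _ in range(50):
    local_update(W, x, y)
error_after = total_error(W, x, y)
print(error_before, error_after)
```

Since each update depends only on the activities on either side of the layer, every layer in a deeper stack could in principle apply such a step at the same time, which is the property the abstract refers to as in-parallel learning.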

Solving large-scale MEG/EEG source localization and functional connectivity problems simultaneously using state-space models

Aug 26, 2022

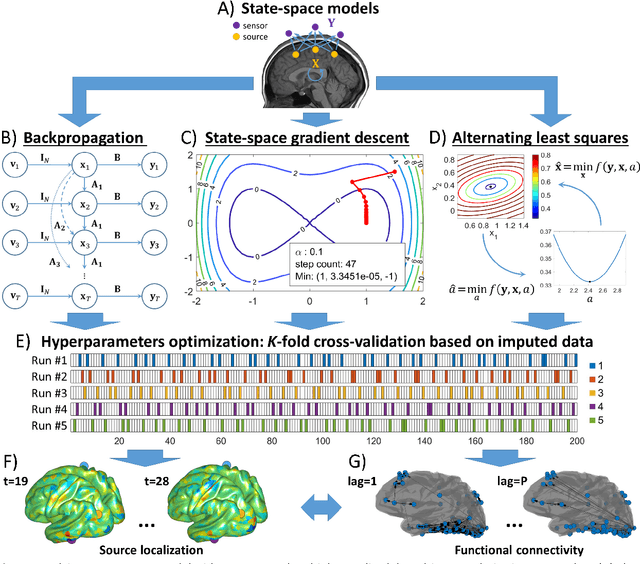

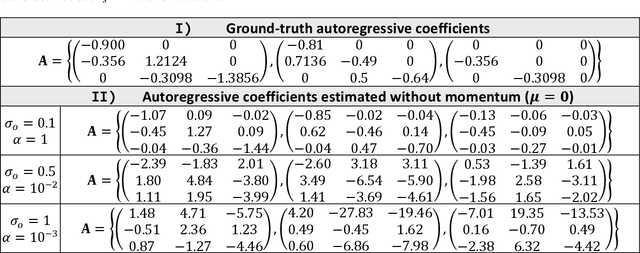

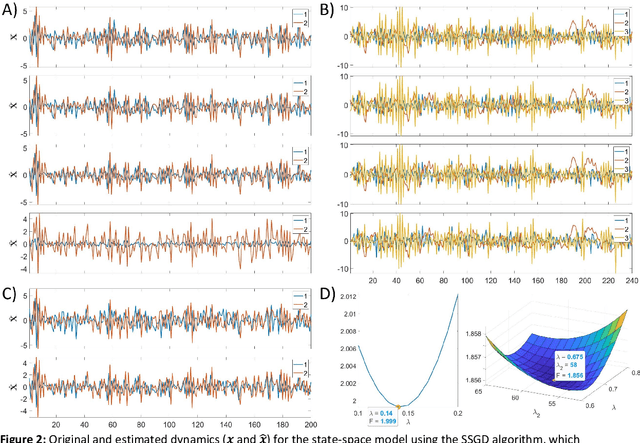

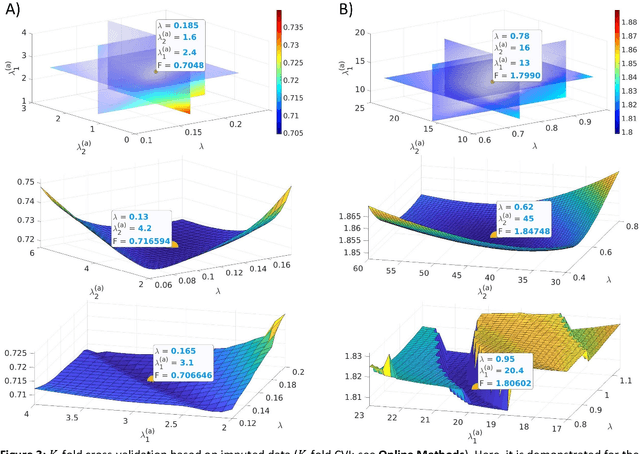

Abstract: State-space models are used in many fields when dynamics are unobserved. Popular methods such as the Kalman filter and expectation maximization enable estimation of these models but pay a high computational cost in large-scale analysis. In these approaches, sparse inverse covariance estimators can reduce the cost; however, a trade-off between enforced sparsity and increased estimation bias occurs, which demands careful consideration in low signal-to-noise ratio scenarios. We overcome these limitations by 1) introducing multiple penalized state-space models based on data-driven regularization; 2) implementing novel algorithms such as backpropagation, state-space gradient descent, and alternating least squares; and 3) proposing an extension of K-fold cross-validation to evaluate the regularization parameters. Finally, we solve the simultaneous brain source localization and functional connectivity problems for simulated and real MEG/EEG signals for thousands of sources on the cortical surface, demonstrating a substantial improvement over state-of-the-art methods.
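As background for the baseline the abstract mentions, the sketch below runs a scalar Kalman filter on a simulated linear-Gaussian state-space model. It is the textbook filter, not the penalized models or algorithms proposed in the paper, and the model parameters (`a`, `q`, `r`) and function names are assumptions for illustration. In the multivariate case the same predict/update steps involve covariance matrices whose size grows with the number of sources, which is where the computational cost noted in the abstract arises.

```python
import random


def simulate(n, a, q, r, seed=0):
    """Simulate x_t = a*x_{t-1} + w_t, y_t = x_t + v_t with Gaussian noise."""
    rng = random.Random(seed)
    x, states, obs = 0.0, [], []
    for _ in range(n):
        x = a * x + rng.gauss(0.0, q ** 0.5)       # hidden state (variance q)
        states.append(x)
        obs.append(x + rng.gauss(0.0, r ** 0.5))   # noisy observation (variance r)
    return states, obs


def kalman_filter(obs, a, q, r, x0=0.0, p0=1.0):
    """Scalar Kalman filter; returns filtered means and variances."""
    x, p = x0, p0
    means, variances = [], []
    for y in obs:
        # Predict step: propagate mean and variance through the dynamics.
        x_pred = a * x
        p_pred = a * a * p + q
        # Update step: blend the prediction with the new observation.
        gain = p_pred / (p_pred + r)
        x = x_pred + gain * (y - x_pred)
        p = (1.0 - gain) * p_pred
        means.append(x)
        variances.append(p)
    return means, variances


a, q, r = 0.95, 0.1, 1.0
states, obs = simulate(500, a, q, r)
means, variances = kalman_filter(obs, a, q, r)
mse_raw = sum((o - s) ** 2 for o, s in zip(obs, states)) / len(states)
mse_filtered = sum((m - s) ** 2 for m, s in zip(means, states)) / len(states)
print(f"raw MSE {mse_raw:.3f}, filtered MSE {mse_filtered:.3f}")
```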
