Saugat Bhattacharyya

Deep Predictive Coding with Bi-directional Propagation for Classification and Reconstruction

May 29, 2023

Abstract: This paper presents a new learning algorithm, termed Deep Bi-directional Predictive Coding (DBPC), that allows networks to simultaneously perform classification and reconstruction tasks using the same weights. Predictive Coding (PC) has emerged as a prominent theory underlying information processing in the brain. The general concept for learning in PC is that each layer learns to predict the activities of neurons in the previous layer, which enables local computation of error and in-parallel learning across layers. In this paper, we extend existing PC approaches by developing a network that supports both feedforward and feedback propagation of information. Each layer in a network trained using DBPC learns to predict the activities of neurons in both the previous and the next layer, which allows the network to perform classification and reconstruction using feedforward and feedback propagation, respectively. DBPC also relies only on locally available information for learning, thus enabling in-parallel learning across all layers in the network. The proposed approach has been developed for training both fully connected networks and convolutional neural networks. The performance of DBPC has been evaluated on both classification and reconstruction tasks using the MNIST and FashionMNIST datasets. The classification and reconstruction performance of networks trained using DBPC is similar to that of the other approaches used for comparison, but DBPC uses a significantly smaller network. Further, a significant benefit of DBPC is its ability to achieve this performance using locally available information and in-parallel learning mechanisms, which results in an efficient training protocol. These results clearly indicate that DBPC is a much more efficient approach for developing networks that can simultaneously perform both classification and reconstruction.
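The idea of a layer that predicts activity in both directions from purely local quantities can be illustrated with a toy sketch. This is not the DBPC update rule from the paper: it assumes a single linear layer whose weight matrix `W` is reused in both directions (`W x` predicts the next layer, `W^T y` predicts the previous layer), treats the activities `x` and `y` as given, and takes a plain gradient step on the two squared prediction errors. The helper names `matvec`, `matTvec`, and `local_step` are hypothetical.

```python
# Toy illustration only: NOT the DBPC update rule from the paper.
# Assumption: one linear layer whose weights W are reused in both directions,
# with the input activity x and output activity y held fixed (clamped).

def matvec(W, x):
    """Feedforward prediction of the next layer: W @ x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matTvec(W, y):
    """Feedback prediction of the previous layer: W^T @ y (same weights)."""
    return [sum(W[j][i] * y[j] for j in range(len(W))) for i in range(len(W[0]))]

def local_step(W, x, y, lr=0.05):
    """One gradient step on 0.5*|y - W x|^2 + 0.5*|x - W^T y|^2.

    Uses only the layer's own input x, output y and weights W, so every
    layer in a stack could run this step in parallel.
    Returns the summed squared error measured before the update.
    """
    e_ff = [yj - pj for yj, pj in zip(y, matvec(W, x))]   # next-layer error
    e_fb = [xi - pi for xi, pi in zip(x, matTvec(W, y))]  # previous-layer error
    for j in range(len(W)):
        for i in range(len(W[0])):
            # Negative gradient of the combined loss w.r.t. W[j][i]
            W[j][i] += lr * (e_ff[j] * x[i] + y[j] * e_fb[i])
    return sum(e * e for e in e_ff) + sum(e * e for e in e_fb)
```

For example, starting from a zero 2×2 matrix with `x = [1, 0]` and `y = [0, 1]`, repeated calls to `local_step` drive `W[1][0]` toward 1, after which `W x` reproduces `y` (the "classification" direction) and `W^T y` reproduces `x` (the "reconstruction" direction). Tying the two directions through one matrix is a simplifying choice made here to mirror the "same weights" claim; the paper's actual parameterization may differ.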

A Many Objective Optimization Approach for Transfer Learning in EEG Classification

Apr 04, 2019

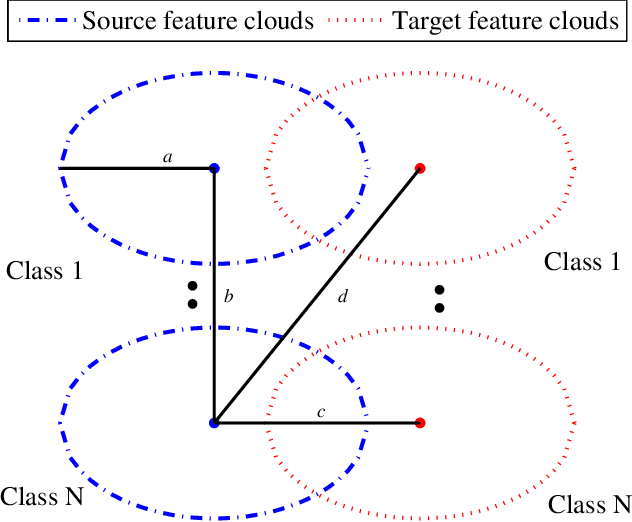

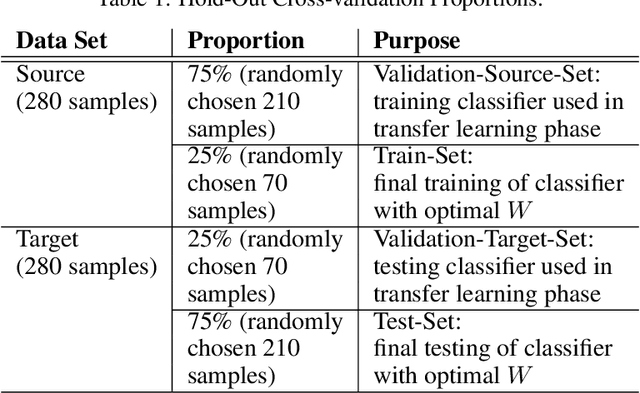

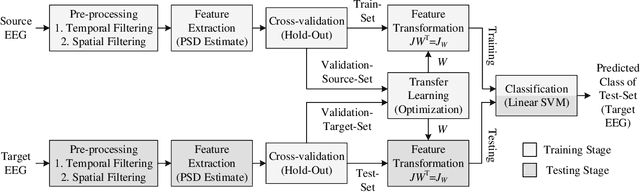

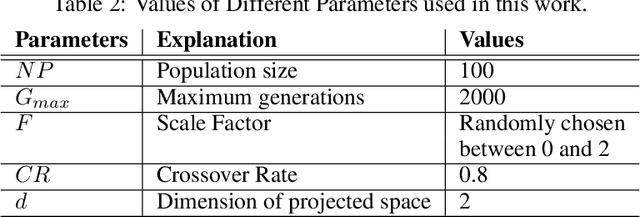

Abstract: In Brain-Computer Interfacing (BCI), due to inter-subject non-stationarities of the electroencephalogram (EEG), classifiers are trained and tested using EEG from the same subject. When physical disabilities bottleneck the natural modality of performing a task, acquiring ample training data is difficult, which in practice obstructs classifier training. Previous works have tackled this problem by generalizing the feature space across multiple subjects, including the test subject. This work aims at knowledge transfer: classifying the EEG of a target subject using a classifier trained on the EEG of a single source subject. A many-objective optimization framework is proposed in which optimal weights are obtained for projecting features into another space such that, with the modified features, the performance of the single-source-trained classifier on the target subject's EEG is maximized. To validate the approach, motor imagery tasks from BCI Competition III Dataset IVa are classified using power spectral density based features and a linear support vector machine. Several performance metrics, including the improvement in accuracy and the sensitivity to the dimension of the projected space, assess the efficacy of the proposed approach. Addressing single-source training promotes independent living for differently-abled individuals by reducing the assistance they need from others. The proposed approach eliminates the requirement of EEG from multiple source subjects and is applicable to any existing feature extractors and classifiers. Source code is available at http://worksupplements.droppages.com/tlbci.html.
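The abstract names power spectral density (PSD) based features as the input to the linear SVM. As a rough illustration only, the sketch below computes band-power features from a naive-DFT periodogram. The mu (8 to 12 Hz) and beta (13 to 30 Hz) bands, the scaling, and the function names `periodogram`, `band_power`, and `psd_features` are assumptions for illustration, not the feature extractor used in the paper (see the linked source code for that).

```python
import cmath
import math

def periodogram(signal, fs):
    """Periodogram over non-negative frequencies via a naive DFT (O(N^2), sketch only)."""
    n = len(signal)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        xk = sum(s * cmath.exp(-2j * math.pi * k * t / n) for t, s in enumerate(signal))
        freqs.append(k * fs / n)
        power.append(abs(xk) ** 2 / (fs * n))
    return freqs, power

def band_power(signal, fs, low, high):
    """Sum of periodogram bins whose frequency lies in [low, high] Hz."""
    freqs, power = periodogram(signal, fs)
    return sum(p for f, p in zip(freqs, power) if low <= f <= high)

def psd_features(channels, fs, bands=((8, 12), (13, 30))):
    """Hypothetical feature vector: one band power per (channel, band) pair."""
    return [band_power(ch, fs, lo, hi) for ch in channels for lo, hi in bands]

# Usage: two synthetic "channels" sampled at 100 Hz for 1 s,
# one oscillating at 10 Hz (mu band) and one at 20 Hz (beta band).
fs = 100
ch_mu = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
ch_beta = [math.sin(2 * math.pi * 20 * t / fs) for t in range(fs)]
features = psd_features([ch_mu, ch_beta], fs)
```

In this toy setup each channel's power lands almost entirely in the band containing its oscillation frequency, so `features` is large for (mu channel, mu band) and (beta channel, beta band) and near zero elsewhere. A vector like this could then be fed to any linear classifier, which matches the abstract's point that the approach works on top of existing feature extractors and classifiers.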