Cyriel Diels

RESenv: A Realistic Earthquake Simulation Environment based on Unreal Engine

Nov 13, 2023

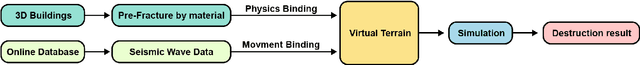

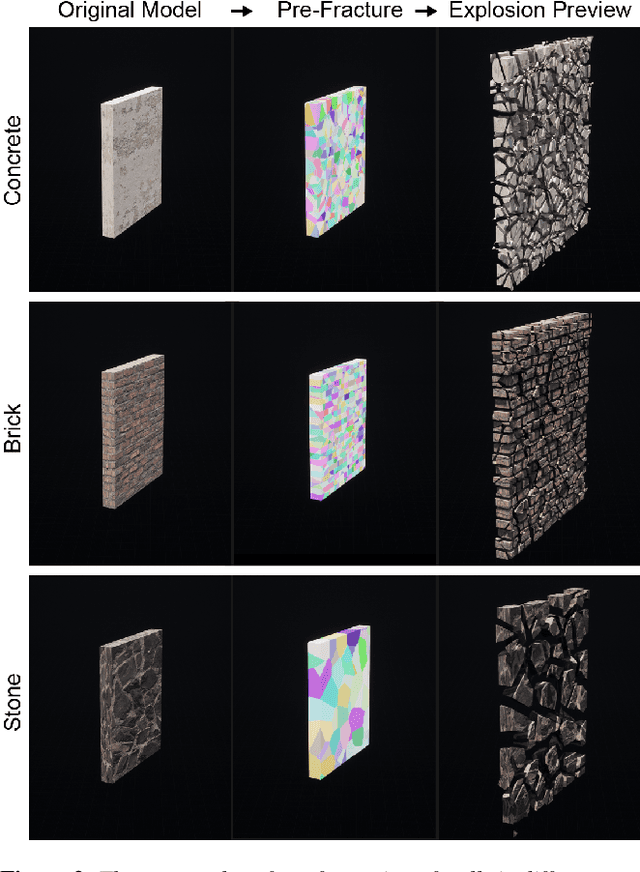

Abstract:Earthquakes have a significant impact on societies and economies, driving the need for effective search and rescue strategies. With the growing role of AI and robotics in these operations, high-quality synthetic visual data becomes crucial. Current simulation methods, mostly focusing on single building damages, often fail to provide realistic visuals for complex urban settings. To bridge this gap, we introduce an innovative earthquake simulation system using the Chaos Physics System in Unreal Engine. Our approach aims to offer detailed and realistic visual simulations essential for AI and robotic training in rescue missions. By integrating real seismic waveform data, we enhance the authenticity and relevance of our simulations, ensuring they closely mirror real-world earthquake scenarios. Leveraging the advanced capabilities of Unreal Engine, our system delivers not only high-quality visualisations but also real-time dynamic interactions, making the simulated environments more immersive and responsive. By providing advanced renderings, accurate physical interactions, and comprehensive geological movements, our solution outperforms traditional methods in efficiency and user experience. Our simulation environment stands out in its detail and realism, making it a valuable tool for AI tasks such as path planning and image recognition related to earthquake responses. We validate our approach through three AI-based tasks: similarity detection, path planning, and image segmentation.

DeepMetricEye: Metric Depth Estimation in Periocular VR Imagery

Nov 13, 2023Abstract:Despite the enhanced realism and immersion provided by VR headsets, users frequently encounter adverse effects such as digital eye strain (DES), dry eye, and potential long-term visual impairment due to excessive eye stimulation from VR displays and pressure from the mask. Recent VR headsets are increasingly equipped with eye-oriented monocular cameras to segment ocular feature maps. Yet, to compute the incident light stimulus and observe periocular condition alterations, it is imperative to transform these relative measurements into metric dimensions. To bridge this gap, we propose a lightweight framework derived from the U-Net 3+ deep learning backbone that we re-optimised, to estimate measurable periocular depth maps. Compatible with any VR headset equipped with an eye-oriented monocular camera, our method reconstructs three-dimensional periocular regions, providing a metric basis for related light stimulus calculation protocols and medical guidelines. Navigating the complexities of data collection, we introduce a Dynamic Periocular Data Generation (DPDG) environment based on UE MetaHuman, which synthesises thousands of training images from a small quantity of human facial scan data. Evaluated on a sample of 36 participants, our method exhibited notable efficacy in the periocular global precision evaluation experiment, and the pupil diameter measurement.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge