Cristian da Costa Rocha

A Machine Learning Early Warning System: Multicenter Validation in Brazilian Hospitals

Jun 09, 2020

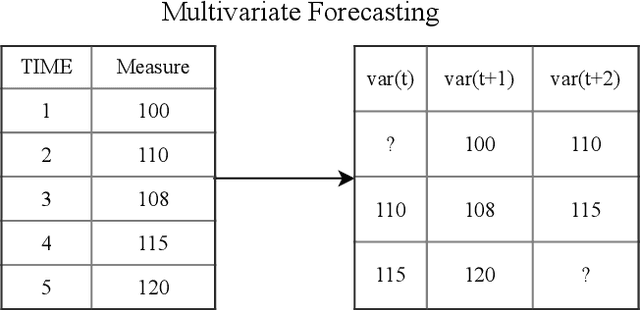

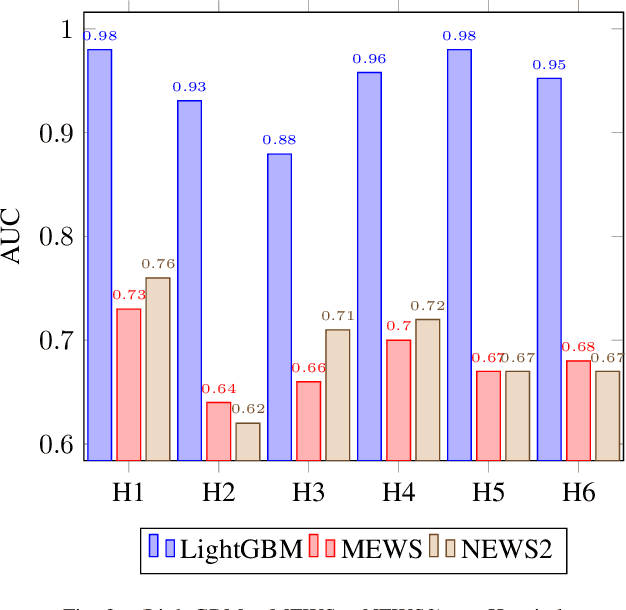

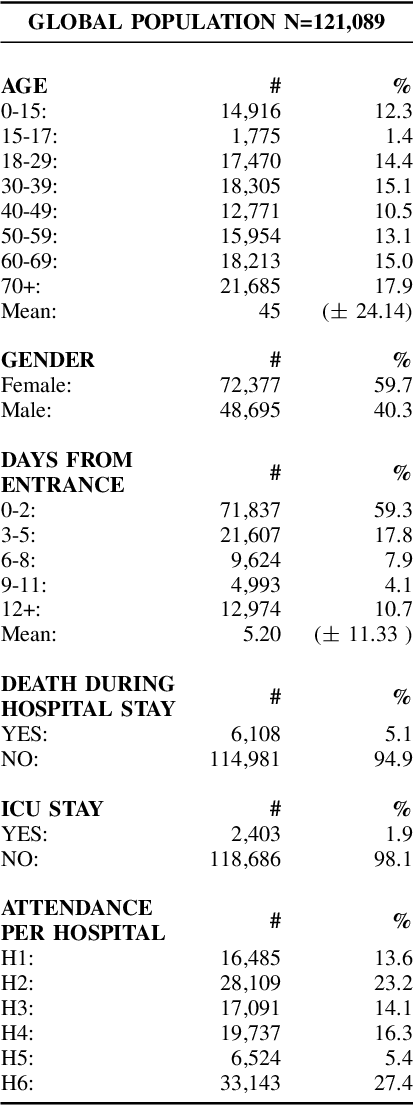

Abstract: Early recognition of clinical deterioration is a key step in reducing inpatient morbidity and mortality. Identifying clinical deterioration in hospitals is challenging because of the intense daily routines of healthcare practitioners, the unconnected patient data stored in Electronic Health Records (EHRs), and the use of low-accuracy scores. Since hospital wards receive less attention than the Intensive Care Unit (ICU), we hypothesized that a platform connected to a stream of EHR data would drastically improve awareness of dangerous situations and thus assist the healthcare team. With machine learning, the system can consider a patient's entire history and, through high-performing predictive models, enable an intelligent early warning system. In this work we used 121,089 medical encounters from six different hospitals and 7,540,389 data points, and we compared popular ward protocols with six different scalable machine learning methods: three classic machine learning models (logistic and probabilistic-based) and three gradient-boosted models. The results showed an advantage in AUC (Area Under the Receiver Operating Characteristic Curve) of 25 percentage points for the best machine learning model over the current state-of-the-art protocols. The algorithm's generalization is shown with leave-one-group-out validation (AUC of 0.949) and its robustness with cross-validation (AUC of 0.961). We also performed experiments comparing several window sizes to justify the use of five patient timestamps. A sample dataset, experiments, and code are available for replicability purposes.
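As an illustration of the evaluation terms used in this abstract, the following is a minimal sketch, not the authors' code, of how AUC and leave-one-group-out splits can be computed. It assumes each encounter carries a hospital identifier used as the group; the function names `auc` and `leave_one_group_out` and the toy data are hypothetical, and model training is deliberately omitted.

```python
from collections import defaultdict


def auc(labels, scores):
    """AUC as the probability that a random positive case is scored
    above a random negative case (ties count as 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


def leave_one_group_out(groups):
    """Yield (held_out_group, train_indices, test_indices),
    holding out one whole group (e.g. one hospital) per split."""
    by_group = defaultdict(list)
    for i, g in enumerate(groups):
        by_group[g].append(i)
    for held_out in sorted(by_group):
        test_idx = by_group[held_out]
        train_idx = [
            i for g, idx in by_group.items() if g != held_out for i in idx
        ]
        yield held_out, train_idx, test_idx


if __name__ == "__main__":
    # Hypothetical toy data: hospital id, deterioration label, risk score.
    hospitals = ["A", "A", "A", "B", "B", "B", "C", "C"]
    labels = [0, 1, 0, 1, 0, 1, 0, 1]
    scores = [0.2, 0.9, 0.4, 0.7, 0.3, 0.6, 0.5, 0.8]

    for held_out, train_idx, test_idx in leave_one_group_out(hospitals):
        # A real pipeline would fit a model on train_idx here and score
        # test_idx; this sketch reuses the fixed toy scores instead.
        y = [labels[i] for i in test_idx]
        s = [scores[i] for i in test_idx]
        print(held_out, len(train_idx), auc(y, s))
```

Holding out an entire hospital per split measures how well a model transfers to a site it has never seen, whereas ordinary cross-validation mixes encounters from all sites in both the training and test folds.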

Self-Supervised Surgical Tool Segmentation using Kinematic Information

Feb 13, 2019

Abstract: Surgical tool segmentation in endoscopic images is the first step towards pose estimation and (sub-)task automation in challenging minimally invasive surgical operations. While many approaches in the literature have shown great results using modern machine learning methods such as convolutional neural networks, the main bottleneck lies in acquiring a large number of manually annotated images for efficient learning. This is especially true in a surgical context, where patient-to-patient differences impede overall generalizability. To cope with this lack of annotated data, we propose a self-supervised approach in a robot-assisted context. To our knowledge, the proposed approach is the first to use the kinematic model of the robot to generate training labels. The core contribution of the paper is an optimization method that obtains good training labels despite an unknown hand-eye calibration and an imprecise kinematic model. The labels can subsequently be used to fine-tune a fully convolutional neural network for pixel-wise classification. As a result, the tool can be segmented in the endoscopic images without a single manually annotated image. Experimental results on phantom and in vivo datasets obtained using a flexible robotized endoscopy system are very promising.
