Cory Hayes

Towards Preference Learning for Autonomous Ground Robot Navigation Tasks

Nov 05, 2020

Abstract: We are interested in the design of autonomous robot behaviors that learn the preferences of users over continued interactions, with the goal of efficiently executing navigation behaviors in the way the user expects. In this paper, we discuss our work in progress on using preference-based reinforcement learning to adapt a general model of robot navigation behaviors in an exploration task on a per-user basis. The novel contribution of this approach is that it combines reinforcement learning, motion planning, and natural language processing to allow an autonomous agent to learn from sustained dialogue with a human teammate as opposed to one-off instructions.

Balancing Efficiency and Coverage in Human-Robot Dialogue Collection

Oct 07, 2018

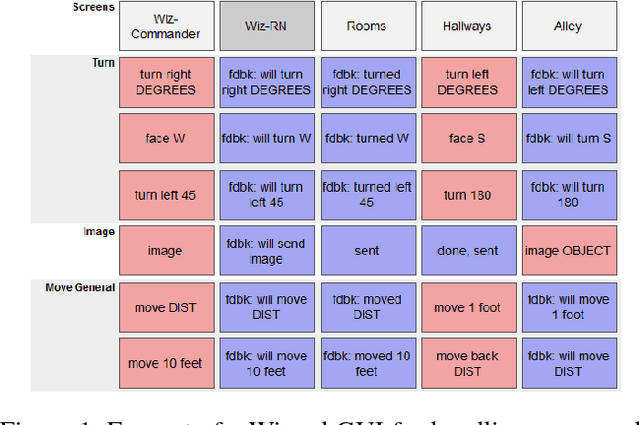

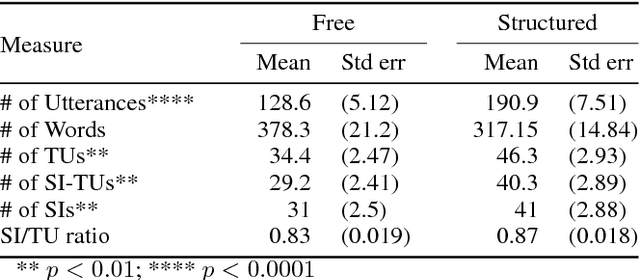

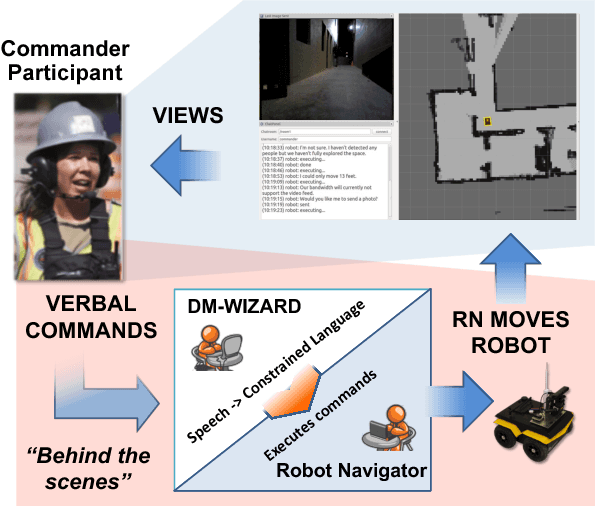

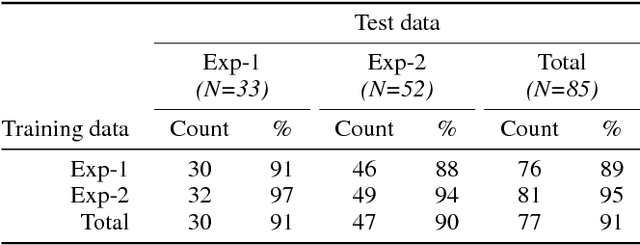

Abstract: We describe a multi-phased Wizard-of-Oz approach to collecting human-robot dialogue in a collaborative search and navigation task. The data is being used to train an initial automated robot dialogue system to support collaborative exploration tasks. In the first phase, a wizard freely typed robot utterances to human participants. For the second phase, this data was used to design a GUI that includes buttons for the most common communications and templates for communications with varying parameters. A comparison of the data gathered in these phases shows that the GUI enabled a faster pace of dialogue while still maintaining high coverage of suitable responses, enabling more efficient targeted data collection and yielding improvements in natural language understanding using GUI-collected data. As a promising first step towards interactive learning, this work shows that our approach enables the collection of useful training data for navigation-based HRI tasks.
