Clayton Harper

Scaling Continuous Kernels with Sparse Fourier Domain Learning

Sep 15, 2024

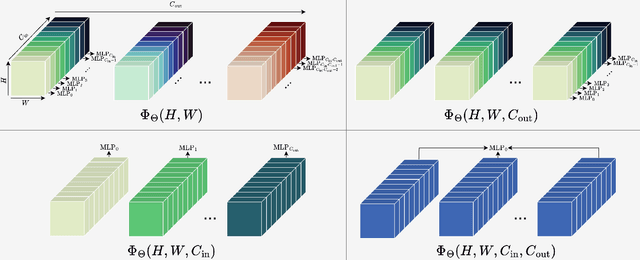

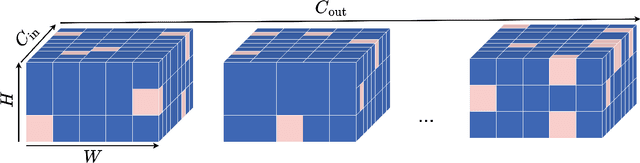

Abstract: We address three key challenges in learning continuous kernel representations: computational efficiency, parameter efficiency, and spectral bias. Continuous kernels have shown significant potential, but their practical adoption is often limited by high computational and memory demands. Additionally, these methods are prone to spectral bias, which impedes their ability to capture high-frequency details. To overcome these limitations, we propose a novel approach that leverages sparse learning in the Fourier domain. Our method enables the efficient scaling of continuous kernels, drastically reduces computational and memory requirements, and mitigates spectral bias by exploiting the Gibbs phenomenon.

Automatic Modulation Classification with Deep Neural Networks

Jan 27, 2023

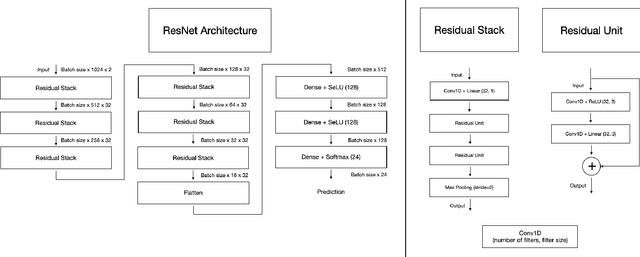

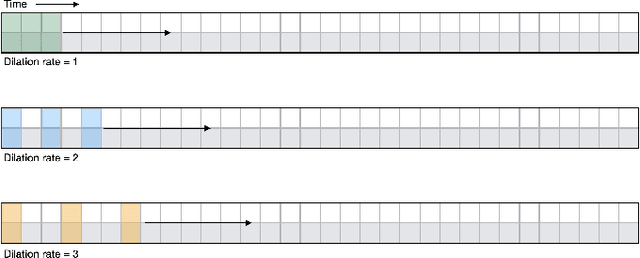

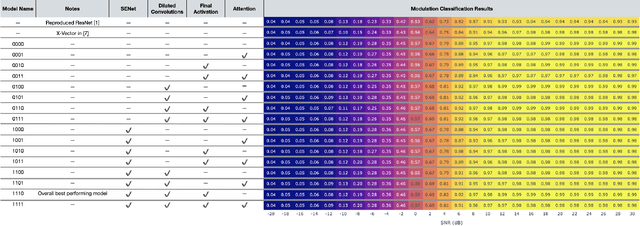

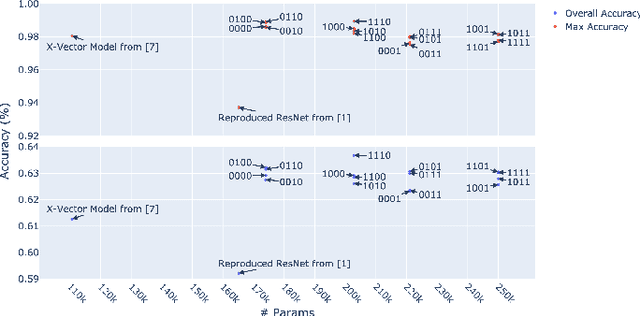

Abstract: Automatic modulation classification is a desired feature in many modern software-defined radios. In recent years, a number of convolutional deep learning architectures have been proposed for automatically classifying the modulation used on observed signal bursts. However, a comprehensive analysis of these differing architectures and the importance of each design element has not been carried out. Thus, it is unclear what tradeoffs the differing designs of these convolutional neural networks might have. In this research, we investigate numerous architectures for automatic modulation classification and perform a comprehensive ablation study to measure the impact of varying hyperparameters and design elements on classification performance. We show that a new state of the art in performance can be achieved using a subset of the studied design elements. In particular, we show that a combination of dilated convolutions, statistics pooling, and squeeze-and-excitation units results in the strongest performing classifier. We further investigate this best performer according to various other criteria, including short signal bursts, common misclassifications, and performance across differing modulation categories and modes.
