Claudio Caprioli

Food Pairing Unveiled: Exploring Recipe Creation Dynamics through Recommender Systems

Jun 21, 2024

Abstract: In the early 2000s, renowned chef Heston Blumenthal formulated his "food pairing" hypothesis, positing that foods sharing many flavor compounds tend to taste good when eaten together. In 2011, Ahn et al. conducted a study using a dataset of recipes, ingredients, and flavor compounds, finding that, in Western cuisine, ingredients in recipes often share more flavor compounds than expected by chance, indicating a natural tendency towards food pairing. Building upon the research of Ahn et al., our work applies state-of-the-art collaborative filtering techniques to the dataset, providing a tool that can recommend new foods to add to recipes, retrieve missing ingredients, and advise against certain combinations. We build our recommender in two ways: from the co-occurrence of ingredients in recipes, or from the flavor compounds shared between foods. While our analysis confirms the existence of food pairing, the recipe-based recommender performs significantly better than the flavor-based one, leading to the conclusion that food pairing is just one of the principles to take into account when creating recipes. Furthermore, and more interestingly, we find that food pairing in the data is mostly due to trivial couplings of very similar ingredients. This calls for a reconsideration of its current role in recipes: rather than a feature that already exists, it is a key to opening up new scenarios in gastronomy. Our flavor-based recommender can thus leverage this novel concept and provide a new tool to lead culinary innovation.
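To make the recipe-based idea concrete, here is a minimal sketch of item-based collaborative filtering over ingredient co-occurrence. It is not the authors' method: the abstract only says "state-of-the-art collaborative filtering techniques", so the cosine-similarity scoring, the toy `RECIPES` data, and the function names `cooccurrence_similarity` and `recommend` are all assumptions made for illustration.

```python
from collections import defaultdict
from itertools import combinations
from math import sqrt

# Toy data invented for illustration only (not the dataset used in the paper).
RECIPES = [
    {"tomato", "basil", "mozzarella"},
    {"tomato", "basil", "garlic"},
    {"tomato", "garlic", "onion"},
    {"chocolate", "cream", "vanilla"},
]


def cooccurrence_similarity(recipes):
    """Cosine similarity between ingredients, treating each ingredient as a
    binary vector over recipes (1 if the recipe contains it)."""
    count = defaultdict(int)  # recipes containing each ingredient
    pair = defaultdict(int)   # recipes containing both ingredients of a pair
    for recipe in recipes:
        for ing in recipe:
            count[ing] += 1
        for a, b in combinations(sorted(recipe), 2):
            pair[(a, b)] += 1
    sim = defaultdict(dict)
    for (a, b), n in pair.items():
        s = n / sqrt(count[a] * count[b])
        sim[a][b] = s
        sim[b][a] = s
    return sim


def recommend(partial_recipe, sim, top_k=3):
    """Rank ingredients absent from the recipe by their summed similarity
    to the ingredients already in it (hypothetical scoring rule)."""
    scores = defaultdict(float)
    for ing in partial_recipe:
        for other, s in sim.get(ing, {}).items():
            if other not in partial_recipe:
                scores[other] += s
    # Sort by descending score, breaking ties alphabetically.
    return sorted(scores, key=lambda i: (-scores[i], i))[:top_k]


sim = cooccurrence_similarity(RECIPES)
suggestions = recommend({"tomato", "basil"}, sim)
```

A flavor-based variant could, under the same assumptions, reuse `recommend` unchanged and swap the recipe sets for sets of flavor compounds per ingredient when computing the similarity.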

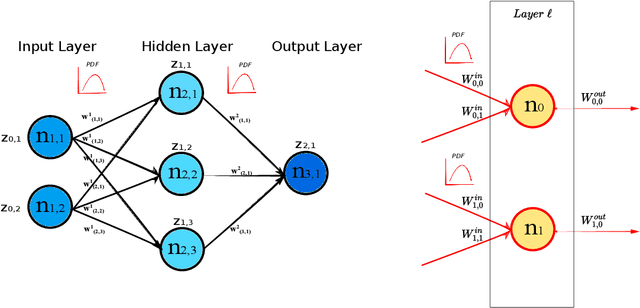

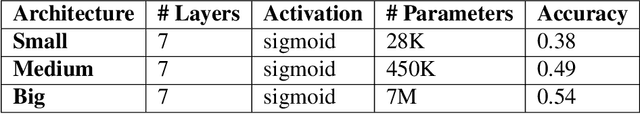

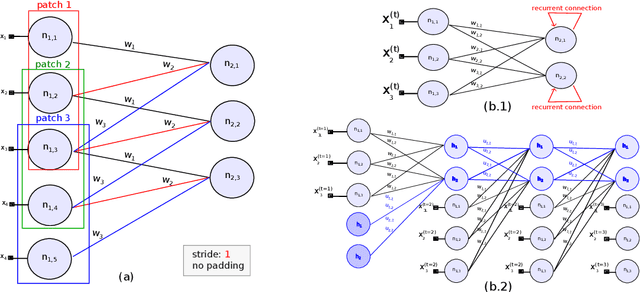

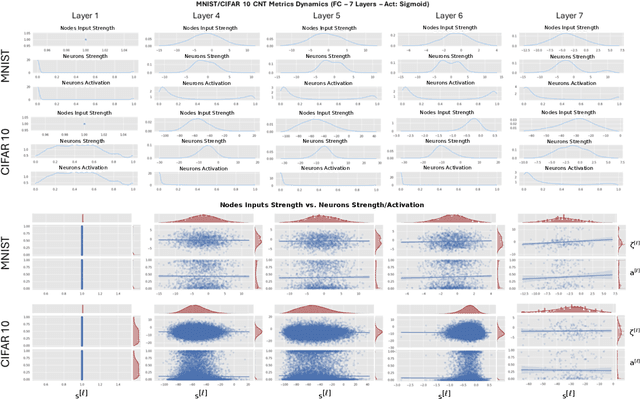

Deep Neural Networks as Complex Networks

Sep 12, 2022

Abstract: Deep Neural Networks are, from a physical perspective, graphs whose "links" and "vertices" iteratively process data and solve tasks sub-optimally. We use Complex Network Theory (CNT) to represent Deep Neural Networks (DNNs) as directed weighted graphs: within this framework, we introduce metrics to study DNNs as dynamical systems, with a granularity that spans from weights to neurons to layers. CNT discriminates between networks that differ in the number of parameters and neurons, the type of hidden layers and activations, and the objective task. We further show that our metrics discriminate between low- and high-performing networks. CNT is a comprehensive method to reason about DNNs and a complementary approach to explaining a model's behavior that is physically grounded in network theory and goes beyond the well-studied input-output relation.
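As an illustration of the graph view described above, the following sketch turns the weight matrices of a small fully connected network into directed weighted edges and computes one neuron-level quantity, the sum of incoming weights. The abstract does not name its metrics, so this "in-strength" measure, the `WEIGHTS` values, and the function names are assumptions chosen for the example, not the paper's definitions.

```python
# Hypothetical weights of a tiny 2-3-1 fully connected network.
# WEIGHTS[l][i][j] is the weight from neuron i in layer l to neuron j in layer l + 1.
WEIGHTS = [
    [[0.5, -1.0, 0.25],
     [1.5, 0.5, -0.75]],
    [[1.0], [-2.0], [0.5]],
]


def to_edges(weights):
    """Flatten per-layer weight matrices into directed weighted edges.
    Nodes are identified by (layer, index) tuples."""
    edges = []
    for layer, matrix in enumerate(weights):
        for i, row in enumerate(matrix):
            for j, w in enumerate(row):
                edges.append(((layer, i), (layer + 1, j), w))
    return edges


def in_strength(edges):
    """Sum of incoming edge weights for every node that has incoming edges."""
    strength = {}
    for _, dst, w in edges:
        strength[dst] = strength.get(dst, 0.0) + w
    return strength


edges = to_edges(WEIGHTS)
strength = in_strength(edges)
```

Keeping nodes as `(layer, index)` pairs makes it straightforward to aggregate the same quantity per layer, which matches the weights-to-layers granularity mentioned in the abstract.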
