Clément Playout

Multi-style conversion for semantic segmentation of lesions in fundus images by adversarial attacks

Oct 17, 2024

Abstract:The diagnosis of diabetic retinopathy, which relies on fundus images, faces challenges in achieving transparency and interpretability when using a global classification approach. However, segmentation-based databases are significantly more expensive to acquire and combining them is often problematic. This paper introduces a novel method, termed adversarial style conversion, to address the lack of standardization in annotation styles across diverse databases. By training a single architecture on combined databases, the model spontaneously modifies its segmentation style depending on the input, demonstrating the ability to convert among different labeling styles. The proposed methodology adds a linear probe to detect dataset origin based on encoder features and employs adversarial attacks to condition the model's segmentation style. Results indicate significant qualitative and quantitative improvements through dataset combination, offering avenues for improved model generalization, uncertainty estimation and continuous interpolation between annotation styles. Our approach enables training a segmentation model with diverse databases while controlling and leveraging annotation styles for improved retinopathy diagnosis.
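The probe-and-attack idea in this abstract can be illustrated with a minimal sketch. It assumes a softmax linear probe over a flat feature vector and a single targeted, FGSM-style signed-gradient step applied directly to the features; the paper's actual attack, the layer it perturbs, and its hyperparameters are not given in the abstract, so the names `probe_probs`, `targeted_step` and `eps` are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def probe_probs(W: List[List[float]], b: List[float], z: List[float]) -> List[float]:
    """Linear probe: one logit per candidate source dataset, computed from features z."""
    logits = [sum(w * f for w, f in zip(row, z)) + bias for row, bias in zip(W, b)]
    return softmax(logits)


def sign(g: float) -> int:
    return (g > 0) - (g < 0)


def targeted_step(W, b, z, target: int, eps: float) -> List[float]:
    """One signed-gradient step that pushes the probe toward the `target` dataset.

    Assumption: the features are perturbed directly, which keeps the sketch small.
    """
    p = probe_probs(W, b, z)
    # Gradient of the cross-entropy w.r.t. the logits is p - onehot(target).
    dlogits = [p_k - (1.0 if k == target else 0.0) for k, p_k in enumerate(p)]
    # Chain rule through the linear layer: grad_z = W^T dlogits.
    grad = [sum(W[k][j] * dlogits[k] for k in range(len(W))) for j in range(len(z))]
    # Descend the loss so the target class becomes more likely.
    return [z_j - eps * sign(g) for z_j, g in zip(z, grad)]


W = [[1.0, 0.0], [0.0, 1.0]]  # toy probe weights for two datasets
b = [0.0, 0.0]
z = [1.0, 0.0]                # toy encoder features, initially classified as dataset 0
z_adv = targeted_step(W, b, z, target=1, eps=0.5)
```

In this toy setting the step raises the probe's probability for dataset 1. In a full model, the perturbed features would then be passed to the decoder, which is where a change of segmentation style would be expected to appear.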

Cross-Dataset Generalization For Retinal Lesions Segmentation

May 14, 2024

Abstract:Identifying lesions in fundus images is an important milestone toward an automated and interpretable diagnosis of retinal diseases. To support research in this direction, multiple datasets have been released, proposing groundtruth maps for different lesions. However, important discrepancies exist between the annotations and raise the question of generalization across datasets. This study characterizes several known datasets and compares different techniques that have been proposed to enhance the generalization performance of a model, such as stochastic weight averaging, model soups and ensembles. Our results provide insights into how to combine coarsely labelled data with a finely-grained dataset in order to improve lesion segmentation.
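The techniques named in this abstract differ mainly in what gets averaged. The sketch below contrasts a uniform model soup (averaging weights) with an ensemble (averaging predictions). It assumes checkpoints stored as plain dictionaries of flat parameter lists, and the helper names `uniform_soup` and `ensemble_predict` are hypothetical. It is not the study's code.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]


def uniform_soup(checkpoints: List[Weights]) -> Weights:
    """Average parameters element-wise across checkpoints of the same architecture.

    The result is a single model, so inference cost does not grow with the
    number of checkpoints.
    """
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    soup: Weights = {}
    for name in checkpoints[0]:
        tensors = [ckpt[name] for ckpt in checkpoints]
        soup[name] = [sum(vals) / len(vals) for vals in zip(*tensors)]
    return soup


def ensemble_predict(probabilities: List[List[float]]) -> List[float]:
    """Average per-pixel probabilities from several models.

    Every member must be run at inference time.
    """
    return [sum(vals) / len(vals) for vals in zip(*probabilities)]


soup = uniform_soup([{"w": [1.0, 3.0]}, {"w": [3.0, 5.0]}])
avg = ensemble_predict([[0.0, 1.0], [1.0, 1.0]])
```

Stochastic weight averaging applies the same weight-space average to checkpoints taken along a single training run, whereas a soup typically averages separately fine-tuned models.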

MAPLES-DR: MESSIDOR Anatomical and Pathological Labels for Explainable Screening of Diabetic Retinopathy

Jan 19, 2024

Abstract:Reliable automatic diagnosis of Diabetic Retinopathy (DR) and Macular Edema (ME) is an invaluable asset in improving the rate of monitored patients among at-risk populations and in enabling earlier treatments before the pathology progresses and threatens vision. However, the explainability of screening models is still an open question, and specifically designed datasets are required to support the research. We present MAPLES-DR (MESSIDOR Anatomical and Pathological Labels for Explainable Screening of Diabetic Retinopathy), which contains, for 198 images of the MESSIDOR public fundus dataset, new diagnoses for DR and ME as well as new pixel-wise segmentation maps for 10 anatomical and pathological biomarkers related to DR. This paper documents the design choices and the annotation procedure that produced MAPLES-DR, discusses the interobserver variability and the overall quality of the annotations, and provides guidelines on using the dataset in a machine learning context.

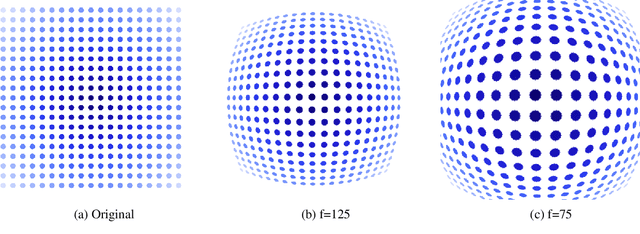

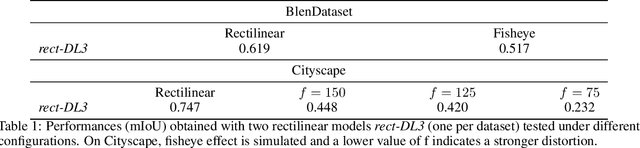

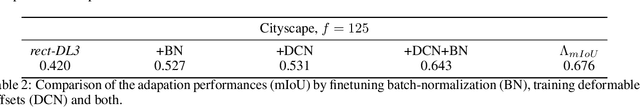

Adaptable Deformable Convolutions for Semantic Segmentation of Fisheye Images in Autonomous Driving Systems

Feb 19, 2021

Abstract:Advanced Driver-Assistance Systems rely heavily on perception tasks such as semantic segmentation where images are captured from large field of view (FoV) cameras. State-of-the-art works have made considerable progress toward applying Convolutional Neural Network (CNN) to standard (rectilinear) images. However, the large FoV cameras used in autonomous vehicles produce fisheye images characterized by strong geometric distortion. This work demonstrates that a CNN trained on standard images can be readily adapted to fisheye images, which is crucial in real-world applications where time-consuming real-time data transformation must be avoided. Our adaptation protocol mainly relies on modifying the support of the convolutions by using their deformable equivalents on top of pre-existing layers. We prove that tuning an optimal support only requires a limited amount of labeled fisheye images, as a small number of training samples is sufficient to significantly improve an existing model's performance on wide-angle images. Furthermore, we show that finetuning the weights of the network is not necessary to achieve high performance once the deformable components are learned. Finally, we provide an in-depth analysis of the effect of the deformable convolutions, bringing elements of discussion on the behavior of CNN models.
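To illustrate what "modifying the support of the convolutions" can mean, here is a minimal one-dimensional sketch of a deformable convolution. It assumes per-position, per-tap fractional offsets, linear interpolation, and zero padding. The paper works with 2-D images and learned offsets, so this is a simplification, and `deformable_conv1d` is a hypothetical name.

```python
import math
from typing import List


def sample_linear(x: List[float], pos: float) -> float:
    """Linearly interpolate x at a fractional position; values outside x count as 0."""
    lo = math.floor(pos)
    frac = pos - lo

    def at(i: int) -> float:
        return x[i] if 0 <= i < len(x) else 0.0

    return (1.0 - frac) * at(lo) + frac * at(lo + 1)


def deformable_conv1d(x: List[float], weights: List[float],
                      offsets: List[List[float]]) -> List[float]:
    """Cross-correlation whose sampling positions are shifted by `offsets`.

    offsets[i][k] displaces tap k of the kernel at output position i.
    The kernel weights stay fixed; only the sampling support changes.
    """
    radius = len(weights) // 2
    out = []
    for i in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            pos = i + (k - radius) + offsets[i][k]
            acc += w * sample_linear(x, pos)
        out.append(acc)
    return out


x = [1.0, 2.0, 3.0, 4.0]
kernel = [1.0, 1.0, 1.0]
zero = [[0.0] * 3 for _ in x]
half = [[0.5] * 3 for _ in x]
regular = deformable_conv1d(x, kernel, zero)  # zero offsets: ordinary convolution
shifted = deformable_conv1d(x, kernel, half)  # same weights, displaced support
```

With all offsets at zero the function reduces to a standard zero-padded convolution, which mirrors the abstract's point that the pretrained weights can be kept as they are while only the sampling geometry is adapted.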
