Chun-Tse Chien

FCE-YOLOv8: YOLOv8 with Feature Context Excitation Modules for Fracture Detection in Pediatric Wrist X-ray Images

Oct 01, 2024

Abstract: Children often suffer wrist trauma in daily life, and radiologists usually need to analyze and interpret their X-ray images before surgeons perform surgical treatment. The development of deep learning has enabled neural networks to serve as computer-assisted diagnosis (CAD) tools that help doctors and experts in medical image diagnostics. Since the You Only Look Once Version-8 (YOLOv8) model has achieved satisfactory success in object detection tasks, it has been applied to various fracture detection tasks. This work introduces four variants of the Feature Contexts Excitation-YOLOv8 (FCE-YOLOv8) model, each incorporating a different FCE module (i.e., Squeeze-and-Excitation (SE), Global Context (GC), Gather-Excite (GE), or Gaussian Context Transformer (GCT)) to enhance model performance. Experimental results on the GRAZPEDWRI-DX dataset demonstrate that our proposed YOLOv8+GC-M3 model improves the mAP@50 value from 65.78% to 66.32%, outperforming the state-of-the-art (SOTA) model while reducing inference time. Furthermore, our proposed YOLOv8+SE-M3 model achieves the highest mAP@50 value of 67.07%, exceeding the SOTA performance. The implementation of this work is available at https://github.com/RuiyangJu/FCE-YOLOv8.
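
As a rough illustration of the kind of module the abstract refers to, the following is a minimal pure-Python sketch of a generic Squeeze-and-Excitation step: global average pooling per channel, a two-layer bottleneck, and a sigmoid gate that rescales each channel. It is not the authors' implementation (see the linked repository for that). The function name `se_block`, the nested-list tensor layout, the omission of bias terms, and the weight values are all assumptions made for illustration.

```python
import math


def se_block(feature_map, w1, w2):
    """Generic Squeeze-and-Excitation on a C x H x W nested list.

    w1 is a (C // r) x C matrix and w2 is a C x (C // r) matrix.
    Both are hypothetical weights; bias terms are omitted for brevity.
    """
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_map
    ]
    # Excitation, step 1: fully connected layer followed by ReLU.
    hidden = [
        max(0.0, sum(w * s for w, s in zip(row, squeezed)))
        for row in w1
    ]
    # Excitation, step 2: fully connected layer followed by sigmoid.
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [
        [[v * g for v in row] for row in ch]
        for ch, g in zip(feature_map, gates)
    ]
    return scaled, gates


# Two 2x2 channels with a reduction to a single hidden unit.
example = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
scaled, gates = se_block(example, [[0.5, 0.5]], [[1.0], [-1.0]])
print(gates)
```

In a real detector the same computation would normally be expressed with tensor operations and learned weights; where the module sits inside YOLOv8 is a design choice the abstract does not detail.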

YOLOv8-ResCBAM: YOLOv8 Based on An Effective Attention Module for Pediatric Wrist Fracture Detection

Sep 27, 2024

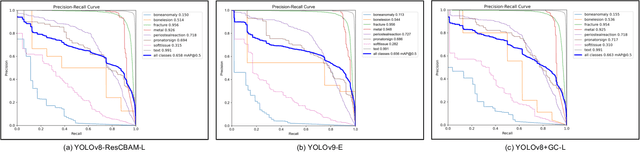

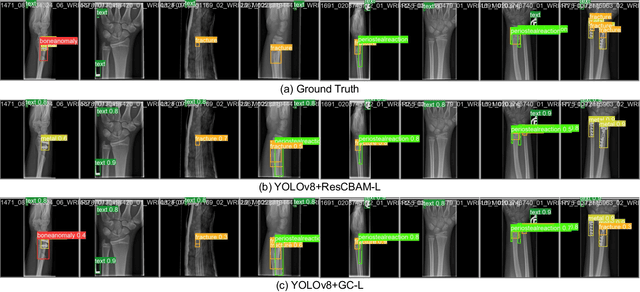

Abstract: Wrist trauma and even fractures occur frequently in daily life, particularly among children, who account for a significant proportion of fracture cases. Before performing surgery, surgeons often ask patients to undergo X-ray imaging first and prepare for the surgery based on the analysis of the X-ray images. With the development of neural networks, You Only Look Once (YOLO) series models have been widely used in fracture detection for Computer-Assisted Diagnosis, where the YOLOv8 model has obtained satisfactory results. Applying attention modules to neural networks is an effective way to improve model performance. This paper proposes YOLOv8-ResCBAM, which incorporates the Convolutional Block Attention Module integrated with a resblock (ResCBAM) into the original YOLOv8 network architecture. The experimental results on the GRAZPEDWRI-DX dataset demonstrate that the mean Average Precision calculated at an Intersection over Union threshold of 0.5 (mAP 50) increased from 63.6% for the original YOLOv8 model to 65.8% for the proposed model, achieving state-of-the-art performance. The implementation code is available at https://github.com/RuiyangJu/Fracture_Detection_Improved_YOLOv8.
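
The mAP 50 metric quoted above rests on an Intersection over Union (IoU) test between predicted and ground-truth boxes. The sketch below shows only that test, assuming axis-aligned boxes in `(x1, y1, x2, y2)` form; the function names are hypothetical, and the full mAP computation (confidence ranking, precision-recall curve, averaging over classes) is not shown.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def matches_at_50(pred_box, gt_box, threshold=0.5):
    """A prediction can count as a true positive at mAP 50 when IoU >= 0.5."""
    return iou(pred_box, gt_box) >= threshold


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
print(matches_at_50((0, 0, 10, 10), (0, 0, 10, 6)))
```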

Global Context Modeling in YOLOv8 for Pediatric Wrist Fracture Detection

Jul 03, 2024

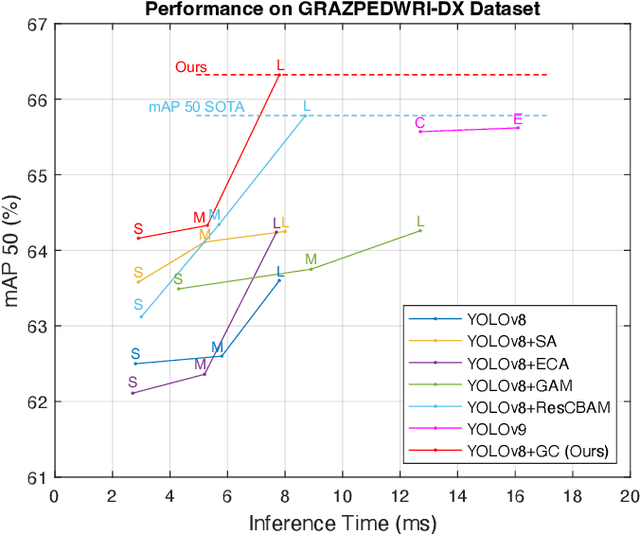

Abstract: Children often suffer wrist injuries in daily life, and radiologists usually need to analyze and interpret X-ray images of the fractures before surgeons perform surgical treatment. The development of deep learning has enabled neural network models to work as computer-assisted diagnosis (CAD) tools that help doctors and experts in diagnosis. Since YOLOv8 models have achieved satisfactory success in object detection tasks, they have been applied to fracture detection. The Global Context (GC) block effectively models the global context in a lightweight way, and incorporating it into YOLOv8 can greatly improve model performance. This paper proposes the YOLOv8+GC model for fracture detection, an improved version of the YOLOv8 model with the GC block. Experimental results demonstrate that, compared to the original YOLOv8 model, the proposed YOLOv8+GC model increases the mean average precision calculated at an intersection over union threshold of 0.5 (mAP 50) from 63.58% to 66.32% on the GRAZPEDWRI-DX dataset, achieving the state-of-the-art (SOTA) level. The implementation code for this work is available on GitHub at https://github.com/RuiyangJu/YOLOv8_Global_Context_Fracture_Detection.

YOLOv9 for Fracture Detection in Pediatric Wrist Trauma X-ray Images

Mar 17, 2024

Abstract: The introduction of YOLOv9, the latest version of the You Only Look Once (YOLO) series, has led to its widespread adoption across various scenarios. This paper is the first to apply the YOLOv9 model to the fracture detection task as computer-assisted diagnosis (CAD) to help radiologists and surgeons interpret X-ray images. Specifically, this paper trained the model on the GRAZPEDWRI-DX dataset and extended the training set using data augmentation techniques to improve model performance. Experimental results demonstrate that the YOLOv9 model increases the mAP 50-95 of the current state-of-the-art (SOTA) model from 42.16% to 43.73%, a relative improvement of 3.7%. The implementation code is publicly available at https://github.com/RuiyangJu/YOLOv9-Fracture-Detection.
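
The 3.7% figure in this abstract is consistent with a relative gain over the baseline rather than a difference in percentage points. A short check, using only the two mAP 50-95 values quoted above (the helper name is hypothetical):

```python
def relative_improvement(baseline, improved):
    """Relative gain of `improved` over `baseline`, in percent."""
    return (improved - baseline) / baseline * 100.0


baseline_map = 42.16  # SOTA mAP 50-95 quoted in the abstract, in percent
yolov9_map = 43.73    # YOLOv9 mAP 50-95 quoted in the abstract, in percent

absolute_gain = yolov9_map - baseline_map  # about 1.57 percentage points
relative_gain = relative_improvement(baseline_map, yolov9_map)
print(round(absolute_gain, 2), round(relative_gain, 1))
```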

YOLOv8-AM: YOLOv8 with Attention Mechanisms for Pediatric Wrist Fracture Detection

Feb 17, 2024

Abstract: Wrist trauma and even fractures occur frequently in daily life, particularly among children, who account for a significant proportion of fracture cases. Before performing surgery, surgeons often ask patients to undergo X-ray imaging first and prepare for the surgery based on the radiologist's analysis. With the development of neural networks, You Only Look Once (YOLO) series models have been widely used in fracture detection as computer-assisted diagnosis (CAD). In 2023, Ultralytics presented the latest version of the YOLO models, which has been employed for detecting fractures across various parts of the body. The attention mechanism is one of the most popular methods for improving model performance. This research work proposes YOLOv8-AM, which incorporates the attention mechanism into the original YOLOv8 architecture. Specifically, we employ four attention modules in turn, Convolutional Block Attention Module (CBAM), Global Attention Mechanism (GAM), Efficient Channel Attention (ECA), and Shuffle Attention (SA), to design the improved models and train them on the GRAZPEDWRI-DX dataset. Experimental results demonstrate that the mean Average Precision at IoU 50 (mAP 50) of the YOLOv8-AM model based on ResBlock + CBAM (ResCBAM) increased from 63.6% to 65.8%, achieving state-of-the-art (SOTA) performance. Conversely, the YOLOv8-AM model incorporating GAM obtains an mAP 50 value of 64.2%, which is not a satisfactory enhancement. Therefore, we combine ResBlock and GAM, introducing ResGAM to design another new YOLOv8-AM model, whose mAP 50 value increases to 65.0%.

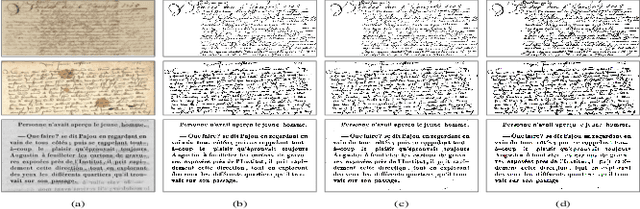

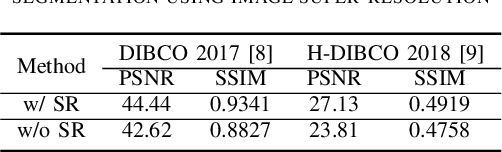

Semantic Segmentation Using Super Resolution Technique as Pre-Processing

Jun 27, 2023

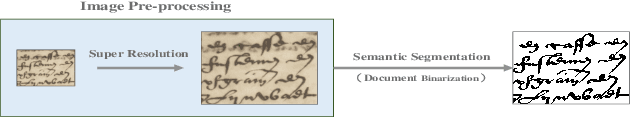

Abstract: Combining high-level and low-level visual tasks is a common technique in the field of computer vision. This work integrates image super-resolution into semantic segmentation for document image binarization. It demonstrates that using image super-resolution as a preprocessing step can effectively enhance the results and performance of semantic segmentation.

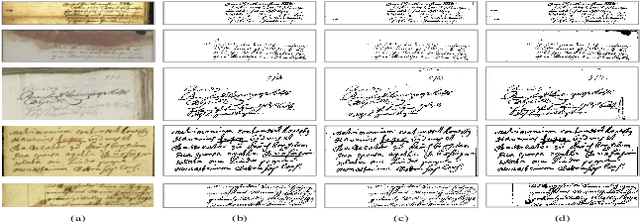

CCDWT-GAN: Generative Adversarial Networks Based on Color Channel Using Discrete Wavelet Transform for Document Image Binarization

May 27, 2023

Abstract: Efficiently extracting textual information from degraded color document images is an important research topic. Long-term imperfect preservation of ancient documents has led to various types of degradation such as page staining, paper yellowing, and ink bleeding; these degradations badly impact image processing for information extraction. In this paper, we present CCDWT-GAN, a generative adversarial network (GAN) that applies the discrete wavelet transform (DWT) to images split into RGB (red, green, blue) channels. The proposed method comprises three stages: image preprocessing, image enhancement, and image binarization. This work conducts comparative experiments in the image preprocessing stage to determine the optimal selection of DWT with normalization. Additionally, we perform an ablation study on the results of the image enhancement stage and the image binarization stage to validate their positive effect on model performance. This work compares the performance of the proposed method with other state-of-the-art (SOTA) methods on the DIBCO and H-DIBCO ((Handwritten) Document Image Binarization Competition) datasets. The experimental results demonstrate that CCDWT-GAN achieves top-two performance on multiple benchmark datasets and outperforms other SOTA methods.
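
To make the preprocessing idea concrete, here is a minimal pure-Python sketch of splitting an image into its R, G, and B channels and applying a one-level 2D DWT to each. The Haar wavelet, keeping only the approximation (LL) subband, the nested-list image layout, and the function names are all assumptions made for illustration; the abstract does not state which wavelet, subbands, or normalization the authors settled on.

```python
def split_channels(image):
    """Split an H x W image of (r, g, b) tuples into three H x W channels."""
    return tuple([[px[k] for px in row] for row in image] for k in range(3))


def haar_ll(channel):
    """LL subband of a one-level 2D orthonormal Haar DWT.

    Assumes the channel has even height and width. Each output value is
    the sum of a 2x2 block divided by 2 (a low-pass filter applied along
    rows and then columns, each contributing a factor of 1/sqrt(2)).
    """
    h, w = len(channel), len(channel[0])
    return [
        [
            (channel[i][j] + channel[i][j + 1]
             + channel[i + 1][j] + channel[i + 1][j + 1]) / 2.0
            for j in range(0, w, 2)
        ]
        for i in range(0, h, 2)
    ]


# A tiny 2x2 image in which every pixel is (1, 2, 3).
image = [[(1, 2, 3), (1, 2, 3)], [(1, 2, 3), (1, 2, 3)]]
red, green, blue = split_channels(image)
print(haar_ll(red), haar_ll(green), haar_ll(blue))
```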
