Chulmin Joo

Ptychographic lens-less polarization microscopy

Sep 13, 2022

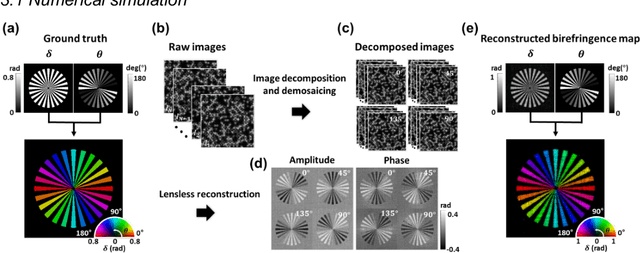

Abstract: Birefringence, an inherent characteristic of optically anisotropic materials, is widely utilized in various imaging applications ranging from material characterization to clinical diagnosis. Polarized light microscopy enables high-resolution, high-contrast imaging of optically anisotropic specimens, but it requires mechanical rotation of the polarizer/analyzer and relatively complex optical designs. Here, we present a novel form of polarization-sensitive microscopy capable of birefringence imaging of transparent objects without an optical lens or any moving parts. Our method exploits an optical mask-modulated polarization image sensor and a single-input-state LED illumination design to obtain complex and birefringence images of the object via ptychographic phase retrieval. Using a camera with a pixel size of 3.45 µm, the method achieves birefringence imaging with a half-pitch resolution of 2.46 µm over a 59.74 mm² field-of-view, which corresponds to a space-bandwidth product of 9.9 megapixels. We demonstrate the high-resolution, large-area birefringence imaging capability of our method by presenting birefringence images of various anisotropic objects, including a birefringent resolution target, a liquid crystal polymer depolarizer, monosodium urate crystals, and excised mouse eye and heart tissues.
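The quoted space-bandwidth product can be checked with a short calculation. The sketch below assumes the common convention that the space-bandwidth product is the field-of-view area divided by the square of the half-pitch resolution; the abstract does not state which definition the authors used, and the function name is hypothetical.

```python
def space_bandwidth_product(fov_mm2: float, half_pitch_um: float) -> float:
    """Resolvable pixel count, assuming SBP = FOV area / (half-pitch)^2."""
    fov_um2 = fov_mm2 * 1e6  # 1 mm^2 = 1e6 um^2
    return fov_um2 / half_pitch_um ** 2


# Values taken from the abstract: 59.74 mm^2 field-of-view, 2.46 um half-pitch.
sbp = space_bandwidth_product(59.74, 2.46)
print(f"{sbp / 1e6:.1f} megapixels")  # prints "9.9 megapixels"
```

Under this assumed definition the figures in the abstract are mutually consistent: roughly 9.87 million resolvable pixels, which rounds to the stated 9.9 megapixels.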

Light Lies: Optical Adversarial Attack

Jul 14, 2021

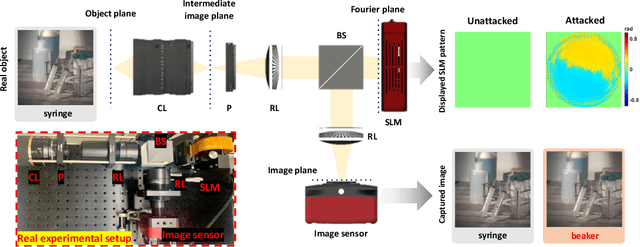

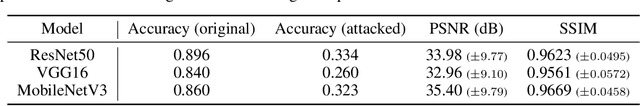

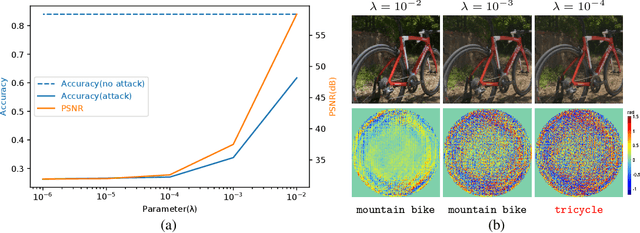

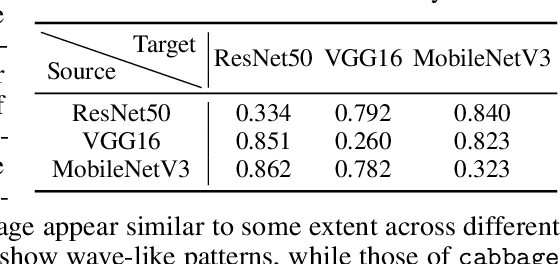

Abstract: A significant amount of work has been done on adversarial attacks that inject imperceptible noise into images to deteriorate the image classification performance of deep models. However, most of the existing studies consider attacks in the digital (pixel) domain, where an image acquired by an image sensor with sampling and quantization has already been recorded. This paper, for the first time, introduces an optical adversarial attack, which physically alters the light field information arriving at the image sensor so that the classification model yields misclassification. More specifically, we modulate the phase of the light in the Fourier domain using a spatial light modulator placed in the photographic system. The operative parameters of the modulator are obtained by gradient-based optimization to maximize cross-entropy and minimize distortions. We present experiments based on both simulation and a real hardware optical system, from which the feasibility of the proposed optical attack is demonstrated. It is also verified that the proposed attack is completely different from common optical-domain distortions such as spherical aberration, defocus, and astigmatism in terms of both perturbation patterns and classification results.

Intraoperative margin assessment of human breast tissue in optical coherence tomography images using deep neural networks

Mar 31, 2017

Abstract: Objective: In this work, we perform margin assessment of human breast tissue from optical coherence tomography (OCT) images using deep neural networks (DNNs). This work simulates an intraoperative setting for breast cancer lumpectomy. Methods: To train the DNNs, we use both state-of-the-art methods (Weight Decay and DropOut) and a newly introduced regularization method based on function norms. Commonly used methods can fail when only a small database is available. The use of a function norm introduces direct control over the complexity of the function, with the aim of diminishing the risk of overfitting. Results: As neither the code nor the data of previous results are publicly available, the obtained results are compared with reported results in the literature for a conservative comparison. Moreover, our method is applied to locally collected data on several data configurations. The reported results are the average over the different trials. Conclusion: The experimental results show that the use of DNNs yields significantly better results than other techniques when evaluated in terms of sensitivity, specificity, F1 score, G-mean and Matthews correlation coefficient. Function norm regularization yielded higher and more robust results than competing methods. Significance: We have demonstrated a system that shows high promise for (partially) automated margin assessment of human breast tissue. The equal error rate (EER) is reduced from approximately 12% (the lowest reported in the literature) to 5%, a 58% reduction. The method is computationally feasible for intraoperative application (less than 2 seconds per image).
