Christoph Schöller

FloMo: Tractable Motion Prediction with Normalizing Flows

Mar 05, 2021

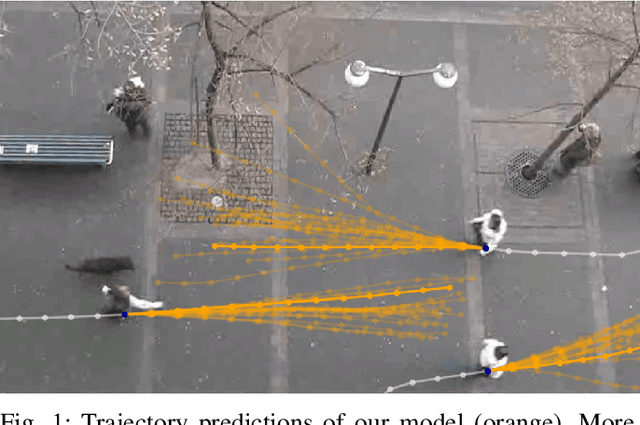

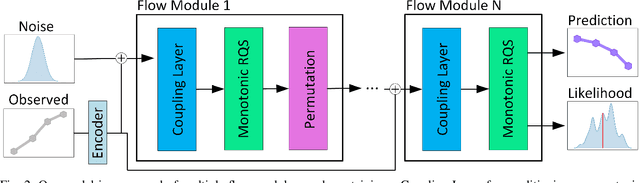

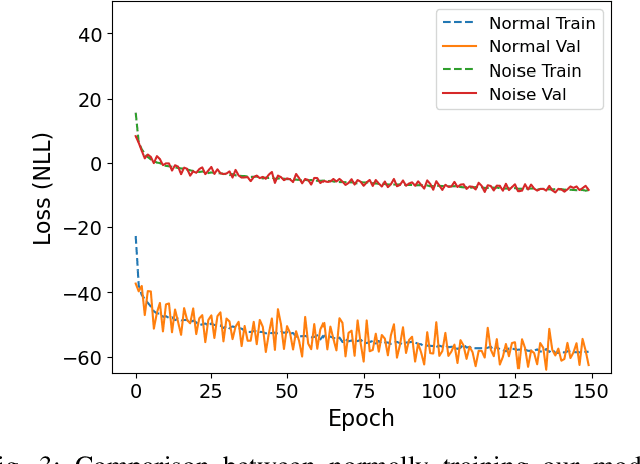

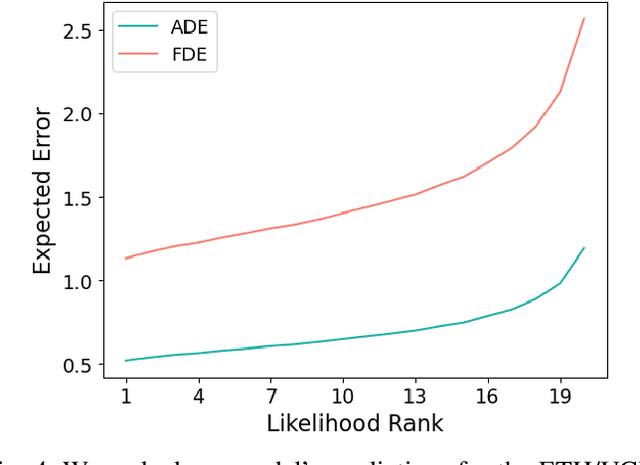

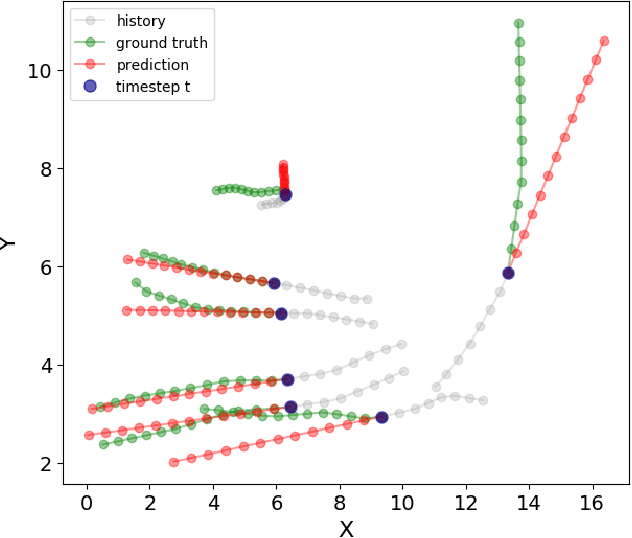

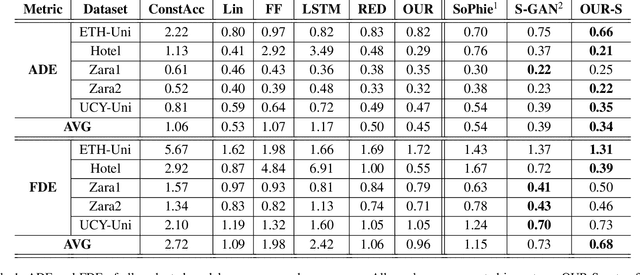

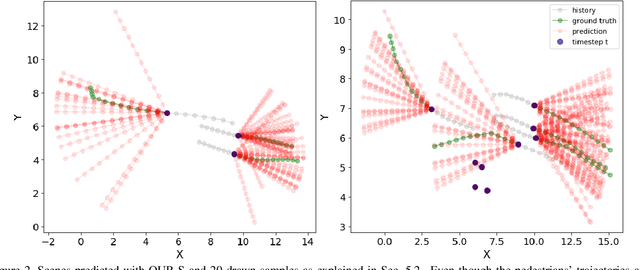

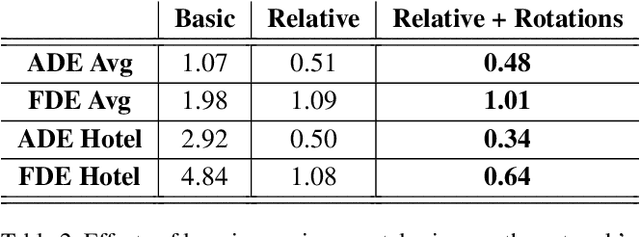

Abstract: The future motion of traffic participants is inherently uncertain. To plan safely, an autonomous agent must therefore take multiple possible outcomes into account and prioritize them. Recently, this problem has been addressed with generative neural networks. However, most generative models either do not reliably learn the true underlying trajectory distribution or do not allow likelihoods to be associated with their predictions. In our work, we model motion prediction directly as a density estimation problem, using a normalizing flow between a noise distribution and the future motion distribution. Our model, named FloMo, allows likelihoods to be computed in a single network pass and can be trained directly with maximum likelihood estimation. Furthermore, we propose a method to stabilize the training of flows on trajectory datasets and a new data augmentation transformation that improves the performance and generalization of our model. Our method achieves state-of-the-art performance on three popular prediction datasets, outperforming most competing models by a significant margin.
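The change-of-variables computation behind "likelihoods computed in a single network pass" can be sketched as follows. This is a minimal illustration, not FloMo's architecture: a single element-wise affine transformation stands in for the learned flow layers, and `mu` and `log_scale` are hypothetical placeholders for values that a network conditioned on the observed past would produce. Evaluating the density of a future trajectory only requires running the inverse transformation once and adding the log-determinant of its Jacobian.

```python
import math
from typing import List, Sequence, Tuple

LOG_2PI = math.log(2.0 * math.pi)


def standard_normal_log_prob(z: Sequence[float]) -> float:
    """Log-density of z under an isotropic standard normal base distribution."""
    return sum(-0.5 * (zi * zi + LOG_2PI) for zi in z)


def affine_flow_inverse(
    x: Sequence[float], mu: Sequence[float], log_scale: Sequence[float]
) -> Tuple[List[float], float]:
    """Map a flattened future trajectory x back to the noise space.

    Sampling direction (hypothetical stand-in for a learned flow): x = mu + exp(log_scale) * z.
    Density direction: z = (x - mu) * exp(-log_scale), with log|det dz/dx| = -sum(log_scale).
    """
    z = [(xi - m) * math.exp(-s) for xi, m, s in zip(x, mu, log_scale)]
    log_det = -sum(log_scale)
    return z, log_det


def trajectory_log_likelihood(x, mu, log_scale) -> float:
    """Change of variables: log p(x) = log p_z(f^-1(x)) + log|det J_{f^-1}(x)|."""
    z, log_det = affine_flow_inverse(x, mu, log_scale)
    return standard_normal_log_prob(z) + log_det


def negative_log_likelihood(batch, mu, log_scale) -> float:
    """Loss minimized by maximum likelihood training: mean NLL over a batch."""
    return -sum(trajectory_log_likelihood(x, mu, log_scale) for x in batch) / len(batch)


# Usage: a two-step future trajectory (x1, y1, x2, y2), flattened into one vector.
future = [1.0, 0.5, 2.0, 1.0]
mu = [1.0, 0.4, 2.1, 1.0]
log_scale = [math.log(0.5)] * 4
print(trajectory_log_likelihood(future, mu, log_scale))
```

A practical flow stacks several invertible layers; their log-determinants are simply summed, so the same bookkeeping applies.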

Providentia -- A Large Scale Sensing System for the Assistance of Autonomous Vehicles

Jun 19, 2019

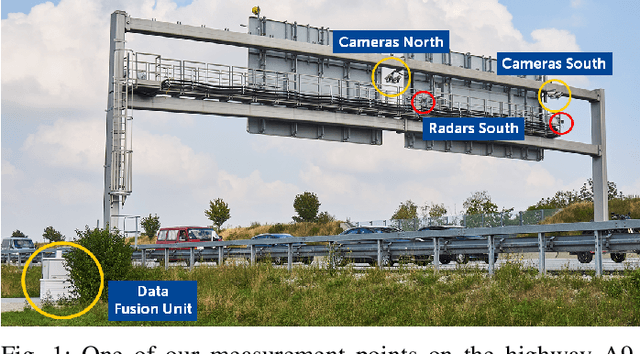

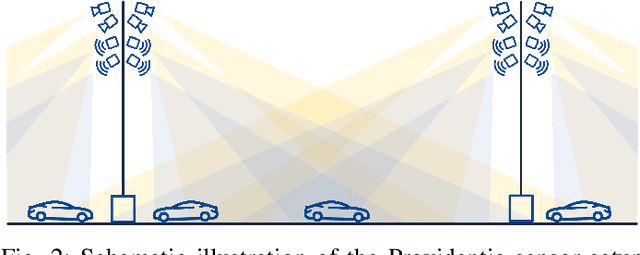

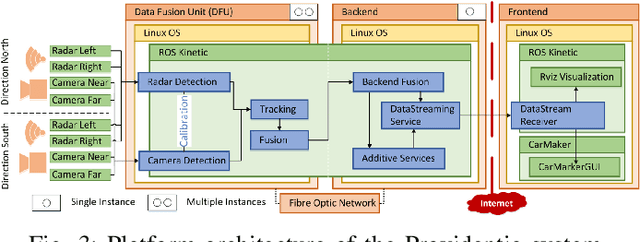

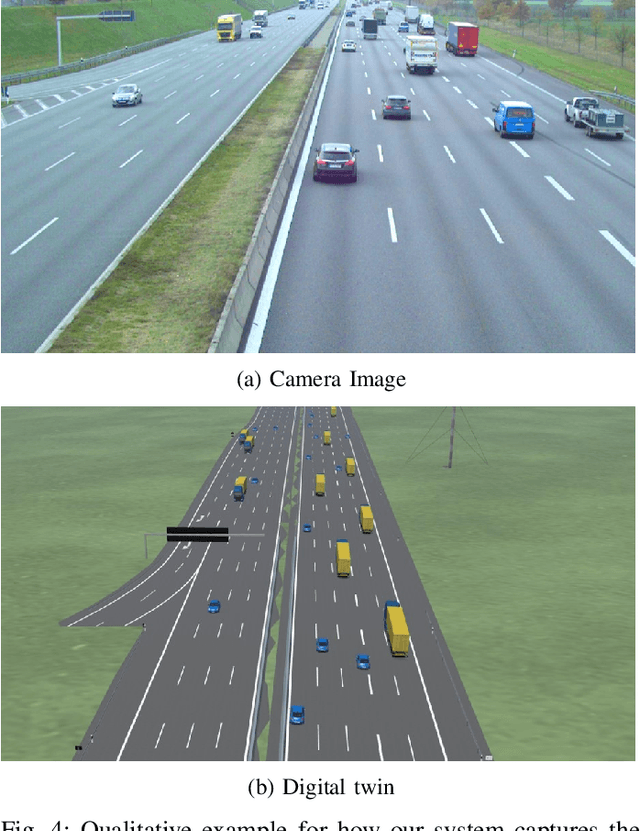

Abstract: The environmental perception of autonomous vehicles is limited not only by physical sensor ranges and algorithmic performance but also by occlusions, which degrade their understanding of the current traffic situation. This poses a serious threat to safety, limits their driving speed, and can lead to inconvenient maneuvers that decrease their acceptance. Intelligent Transportation Systems can help to alleviate these problems. By providing autonomous vehicles with additional detailed information about the current traffic in the form of a digital model of their world, i.e., a digital twin, an Intelligent Transportation System can fill in the gaps in the vehicle's perception and enhance its field of view. However, detailed descriptions of how such systems are implemented, and working prototypes demonstrating their feasibility, are scarce. In this work, we propose a hardware and software architecture to build such a reliable Intelligent Transportation System. We have implemented this system in the real world and show that it is able to create an accurate digital twin of an extended highway stretch. Furthermore, we provide this digital twin to an autonomous vehicle and demonstrate how it extends the vehicle's perception beyond the limits of its on-board sensors.
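The abstract does not describe how the vehicle combines the digital twin with its own detections. Purely as a hypothetical illustration of "filling in the gaps", the sketch below assumes both object lists are already expressed in one shared world frame and uses a simple distance gate: every on-board detection is kept, and any digital-twin object with no on-board detection nearby is added. The names `TrackedObject`, `extend_perception`, and `gate_m` are invented for this example.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class TrackedObject:
    object_id: str
    x: float  # position in an assumed shared world frame, meters
    y: float


def extend_perception(
    onboard: List[TrackedObject], twin: List[TrackedObject], gate_m: float = 2.0
) -> List[TrackedObject]:
    """Hypothetical merge: keep every on-board detection and add each
    digital-twin object that has no on-board detection within gate_m meters."""
    merged = list(onboard)
    for obj in twin:
        already_seen = any(
            math.hypot(obj.x - o.x, obj.y - o.y) <= gate_m for o in onboard
        )
        if not already_seen:
            # Not seen on board, e.g. occluded or beyond on-board sensor range.
            merged.append(obj)
    return merged


# Usage: the vehicle sees one car; the twin reports that car plus one far ahead.
onboard = [TrackedObject("ego_det_1", 10.0, 0.0)]
twin = [TrackedObject("twin_7", 10.5, 0.2), TrackedObject("twin_9", 350.0, 3.5)]
print([o.object_id for o in extend_perception(onboard, twin)])
```

A fixed gate is the simplest possible association rule; a real system would more likely use tracked states, uncertainties, and time synchronization.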

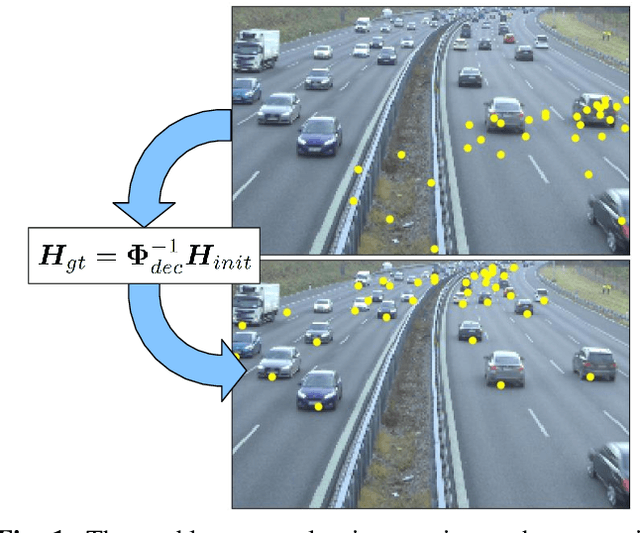

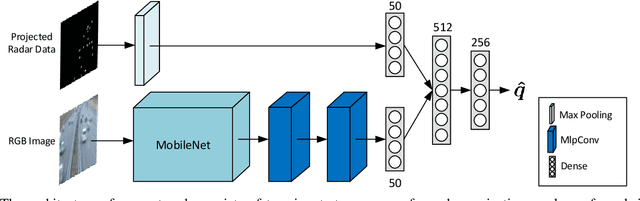

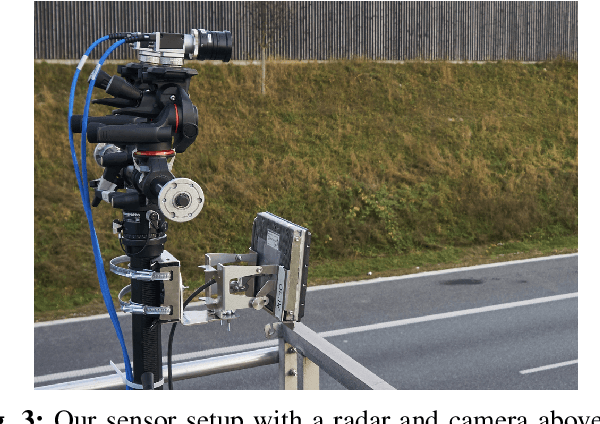

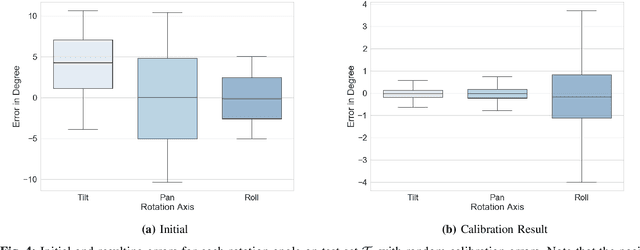

Targetless Rotational Auto-Calibration of Radar and Camera for Intelligent Transportation Systems

Apr 18, 2019

Abstract: Most intelligent transportation systems use a combination of radar sensors and cameras for robust vehicle perception. Calibrating these heterogeneous sensor types automatically during system operation is challenging because of their differing physical measurement principles and the high sparsity of traffic radar measurements. To the best of our knowledge, we propose the first data-driven method for automatic rotational radar-camera calibration without dedicated calibration targets. Our approach is based on a coarse and a fine convolutional neural network. We employ a boosting-inspired training algorithm in which the fine network is trained on the residual error of the coarse network. Because no public datasets combining radar and camera measurements were available, we recorded our own real-world data. We demonstrate that our method achieves precise and robust sensor registration and generalizes to different sensor alignments and perspectives.
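The coarse-to-fine idea of training the fine stage on the coarse stage's residual error can be sketched for rotations as follows. This sketch assumes rotations are represented as 3x3 matrices and that the residual is defined multiplicatively, which is one natural choice for rotations; the paper may parametrize rotations differently (for example, as angles). The networks themselves are omitted: only the construction of the fine-stage training target and the combination of both stages at inference time are shown, and all function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a: Matrix) -> Matrix:
    return [[a[j][i] for j in range(3)] for i in range(3)]


def rot_z(theta: float) -> Matrix:
    """Rotation about the z-axis (yaw) by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rot_y(theta: float) -> Matrix:
    """Rotation about the y-axis (pitch) by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rotation_angle(r: Matrix) -> float:
    """Magnitude of a rotation in radians, from trace(R) = 1 + 2 cos(angle)."""
    cos_angle = (r[0][0] + r[1][1] + r[2][2] - 1.0) / 2.0
    return math.acos(max(-1.0, min(1.0, cos_angle)))


def fine_stage_target(r_true: Matrix, r_coarse: Matrix) -> Matrix:
    """Residual the fine stage is trained to predict: R_res = R_true * R_coarse^T."""
    return matmul(r_true, transpose(r_coarse))


def combine(r_fine: Matrix, r_coarse: Matrix) -> Matrix:
    """Final estimate at inference time: apply the fine correction after the coarse one."""
    return matmul(r_fine, r_coarse)


# Usage: the coarse stage gets yaw roughly right but misses a small yaw and pitch error.
r_true = matmul(rot_z(0.30), rot_y(0.05))
r_coarse = rot_z(0.25)
r_res = fine_stage_target(r_true, r_coarse)
print("residual angle (rad):", rotation_angle(r_res))
```

Because the residual is small compared with the full rotation, the fine stage only has to learn a narrow range of corrections, which is the motivation behind residual, boosting-style training.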

The Simpler the Better: Constant Velocity for Pedestrian Motion Prediction

Mar 19, 2019

Abstract: Pedestrian motion prediction is a fundamental task for autonomous robots and vehicles to operate safely. In recent years, many complex models have been proposed to address this problem. While complex models can be justified, simple models should be preferred when they achieve the same or better performance. In this work, we show that a simple Constant Velocity Model can achieve competitive performance on this task. We evaluate the Constant Velocity Model on two popular benchmark datasets for pedestrian motion prediction and show that it outperforms state-of-the-art models and several common baselines. The success of this model indicates either that neural networks are not able to make use of the additional information they are provided with, or that this information is not as relevant as commonly believed. Therefore, we analyze how neural networks process this information and how it impacts their predictions. Our analysis shows that neural networks implicitly learn environmental priors that have a negative impact on their generalization capability, that most of the pedestrian's motion history is ignored, and that interactions, while they do occur, are too complex to predict. These findings explain the success of the Constant Velocity Model and lead to a better understanding of the problem at hand.
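A constant velocity predictor is small enough to show in full. The sketch below is an illustration rather than the authors' code: it assumes equally spaced observations and estimates the velocity from the last two observed positions only. The evaluation metrics, average and final displacement error (ADE/FDE), are the common choices for pedestrian benchmarks; they are an assumption here, since the abstract does not name its metrics.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def constant_velocity_predict(history: List[Point], horizon: int) -> List[Point]:
    """Extrapolate the most recent displacement for `horizon` future time steps.

    Assumes equally spaced observations; the velocity is estimated from the
    last two observed positions only.
    """
    if len(history) < 2:
        raise ValueError("need at least two observed positions")
    (x0, y0), (x1, y1) = history[-2], history[-1]
    vx, vy = x1 - x0, y1 - y0  # displacement per time step
    return [(x1 + vx * t, y1 + vy * t) for t in range(1, horizon + 1)]


def average_displacement_error(pred: List[Point], truth: List[Point]) -> float:
    """ADE: mean Euclidean distance over all predicted time steps."""
    return sum(math.dist(p, g) for p, g in zip(pred, truth)) / len(truth)


def final_displacement_error(pred: List[Point], truth: List[Point]) -> float:
    """FDE: Euclidean distance at the last predicted time step."""
    return math.dist(pred[-1], truth[-1])


# Usage: a pedestrian walking along x at 0.4 m per step, who then drifts sideways.
observed = [(0.0, 0.0), (0.4, 0.0), (0.8, 0.0)]
prediction = constant_velocity_predict(observed, horizon=3)
ground_truth = [(1.2, 0.0), (1.6, 0.1), (2.0, 0.2)]
print(average_displacement_error(prediction, ground_truth))
print(final_displacement_error(prediction, ground_truth))
```

Note that the model ignores everything except the last step of the motion history, which is consistent with the abstract's finding that networks themselves largely ignore the rest of that history.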