Christoffer Nellåker

A New Graph Node Classification Benchmark: Learning Structure from Histology Cell Graphs

Nov 11, 2022

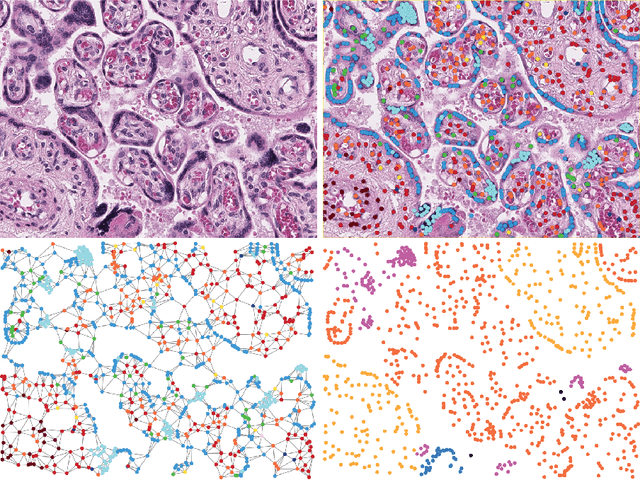

Abstract: We introduce a new benchmark dataset, Placenta, for node classification in an underexplored domain: predicting microanatomical tissue structures from cell graphs in placenta histology whole slide images. This problem is uniquely challenging for graph learning for several reasons. Cell graphs are large (>1 million nodes per image), node features are varied (64 dimensions covering 11 types of cells), class labels are imbalanced (9 classes ranging from 0.21% to 40.0% of the data), and cellular communities cluster into heterogeneously distributed tissues of widely varying sizes (from 11 nodes to 44,671 nodes for a single structure). Here, we release a dataset consisting of two cell graphs from two placenta histology images totalling 2,395,747 nodes, 799,745 of which have ground truth labels. We present inductive benchmark results for 7 scalable models and show how the unique qualities of cell graphs can help drive the development of novel graph neural network architectures.

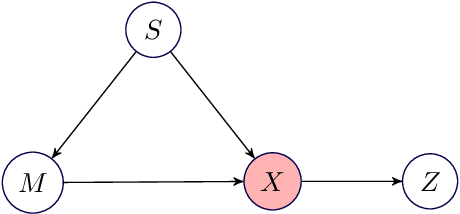

Adjusting for Confounding in Unsupervised Latent Representations of Images

Nov 26, 2018

Abstract: Biological imaging data are often partially confounded or contain unwanted variability. Examples of such phenomena include variable lighting across microscopy image captures, stain intensity variation in histological slides, and batch effects for high-throughput drug screening assays. Therefore, to develop "fair" models which generalise well to unseen examples, it is crucial to learn data representations that are insensitive to nuisance factors of variation. In this paper, we present a strategy based on adversarial training, capable of learning unsupervised representations invariant to confounders. As an empirical validation of our method, we use deep convolutional autoencoders to learn unbiased cellular representations from microscopy imaging.
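As a rough illustration of what an adversarial objective for confounder-invariant autoencoding can look like, one common generic formulation is sketched below. It is not taken from the paper: the encoder $E_\theta$, decoder $D_\phi$, adversary $A_\psi$, confounder label $c$, adversary loss $\ell_{\mathrm{adv}}$, and trade-off weight $\lambda$ are all assumed notation.

```latex
% Generic adversarial autoencoder objective (assumed notation, not the paper's):
% the adversary A_psi tries to predict the confounder c from the latent code,
% while the encoder is rewarded for making that prediction fail.
\min_{\theta,\,\phi}\;\max_{\psi}\;
\mathbb{E}_{(x,\,c)}\Big[
  \big\lVert x - D_\phi\big(E_\theta(x)\big) \big\rVert_2^2
  \;-\; \lambda\,\ell_{\mathrm{adv}}\big(A_\psi(E_\theta(x)),\, c\big)
\Big]
```

Under this sketch, the inner maximisation over $\psi$ amounts to minimising the adversary's loss, and the outer minimisation trades reconstruction quality against how much confounder information remains in the latent code.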

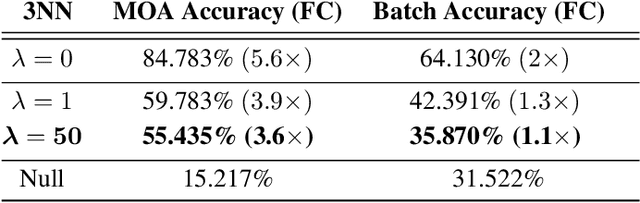

From Same Photo: Cheating on Visual Kinship Challenges

Nov 05, 2018

Abstract: With the propensity for deep learning models to learn unintended signals from datasets, there is always the possibility that the network can 'cheat' in order to solve a task. In the instance of datasets for visual kinship verification, one such unintended signal could be that the faces are cropped from the same photograph, since faces from the same photograph are more likely to be from the same family. In this paper we investigate the influence of this artefactual data inference in published datasets for kinship verification. To this end, we obtain a large dataset and train a CNN classifier to determine whether two faces are from the same photograph. Using this classifier alone as a naive classifier of kinship, we demonstrate near state-of-the-art results on five public benchmark datasets for kinship verification, achieving over 90% accuracy on one of them. Thus, we conclude that faces derived from the same photograph are a strong inadvertent signal in all the datasets we examined, and it is likely that the fraction of kinship explained by existing kinship models is small.
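The evaluation idea in this abstract, reusing a same-photograph score directly as a kinship prediction, can be sketched in a few lines. This is a minimal illustration only: the function name, the 0.5 threshold, and the toy scores and labels are hypothetical and do not come from the paper or its datasets.

```python
from typing import Sequence


def kinship_accuracy_from_same_photo_scores(
    same_photo_scores: Sequence[float],
    is_kin: Sequence[bool],
    threshold: float = 0.5,  # hypothetical decision threshold
) -> float:
    """Accuracy when 'kin' is predicted whenever the same-photo score exceeds the threshold."""
    if len(same_photo_scores) != len(is_kin):
        raise ValueError("scores and labels must have the same length")
    if len(is_kin) == 0:
        raise ValueError("at least one face pair is required")
    # A pair counts as correct when the thresholded score agrees with the kinship label.
    correct = sum(
        (score > threshold) == bool(label)
        for score, label in zip(same_photo_scores, is_kin)
    )
    return correct / len(is_kin)


# Toy values for illustration only; these are not results from any benchmark.
scores = [0.9, 0.8, 0.2, 0.6, 0.1]
labels = [True, True, False, False, False]
accuracy = kinship_accuracy_from_same_photo_scores(scores, labels)
```

If a score that knows nothing about kinship already yields high accuracy on a benchmark, that suggests the benchmark leaks the same-photograph signal.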

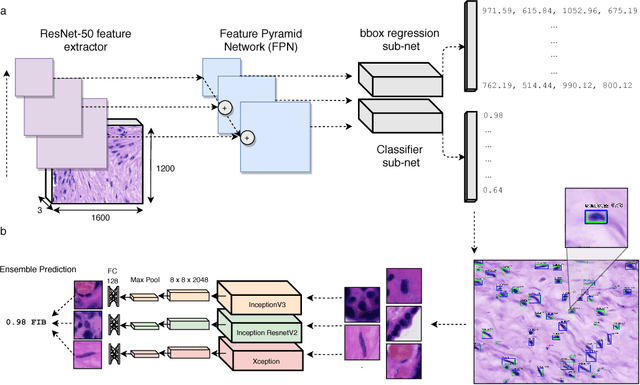

Towards Deep Cellular Phenotyping in Placental Histology

May 25, 2018

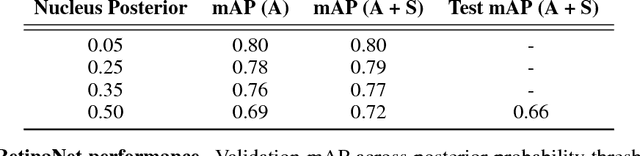

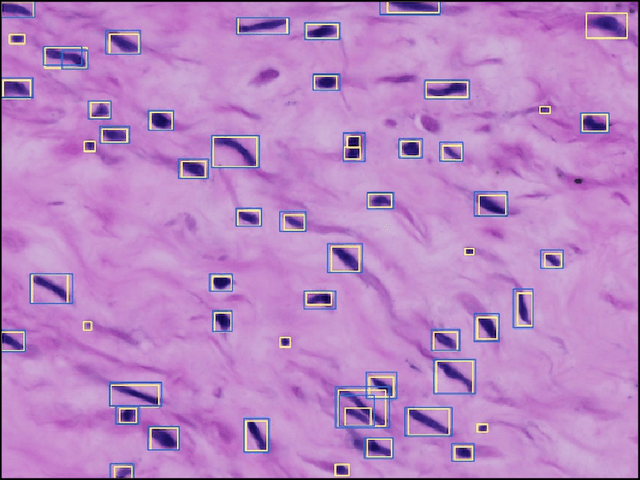

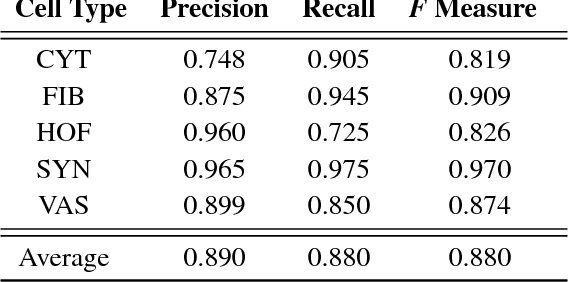

Abstract: The placenta is a complex organ, playing multiple roles during fetal development. Very little is known about the association between placental morphological abnormalities and fetal physiology. In this work, we present an open-source, computationally tractable deep learning pipeline to analyse placenta histology at the level of the cell. By utilising two deep convolutional neural network architectures and transfer learning, we can robustly localise and classify placental cells into five classes with an accuracy of 89%. Furthermore, we learn deep embeddings encoding phenotypic knowledge that are capable of both stratifying five distinct cell populations and capturing intraclass phenotypic variance. We envisage that applying this automated pipeline to population-scale studies of placenta histology has the potential to improve our understanding of basic cellular placental biology and its variations, particularly its role in predicting adverse birth outcomes.
