Christian Jacobsen

TC-LoRA: Temporally Modulated Conditional LoRA for Adaptive Diffusion Control

Oct 10, 2025

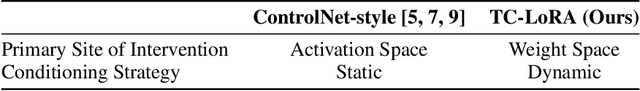

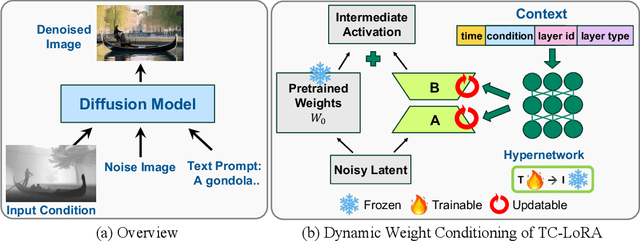

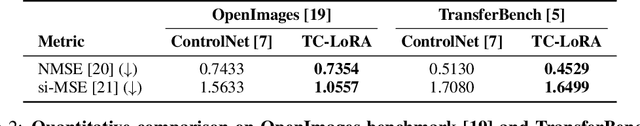

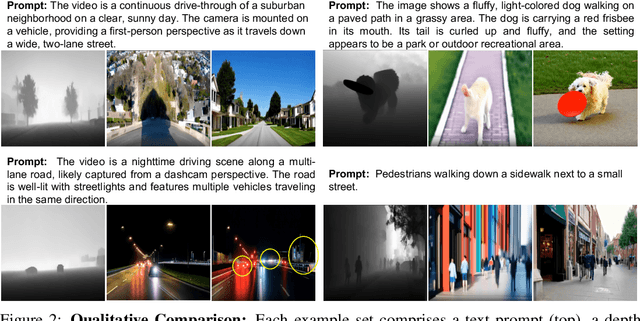

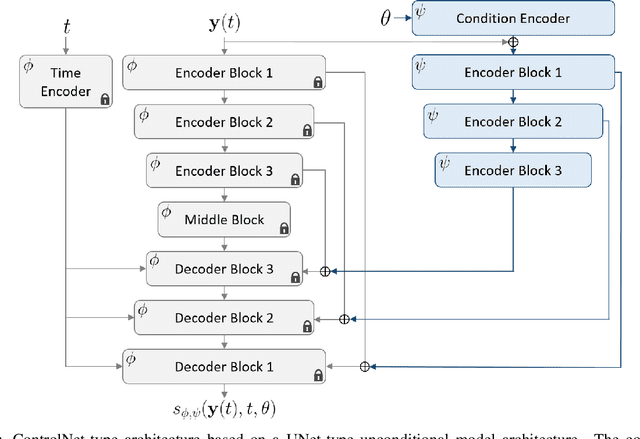

Abstract: Current controllable diffusion models typically rely on fixed architectures that modify intermediate activations to inject guidance conditioned on a new modality. This approach uses a static conditioning strategy for a dynamic, multi-stage denoising process, limiting the model's ability to adapt its response as the generation evolves from coarse structure to fine detail. We introduce TC-LoRA (Temporally Modulated Conditional LoRA), a new paradigm that enables dynamic, context-aware control by conditioning the model's weights directly. Our framework uses a hypernetwork to generate LoRA adapters on-the-fly, tailoring weight modifications for the frozen backbone at each diffusion step based on time and the user's condition. This mechanism enables the model to learn and execute an explicit, adaptive strategy for applying conditional guidance throughout the entire generation process. Through experiments on various data domains, we demonstrate that this dynamic, parametric control significantly enhances generative fidelity and adherence to spatial conditions compared to static, activation-based methods. TC-LoRA establishes an alternative approach in which the model's conditioning strategy is modified through a deeper functional adaptation of its weights, allowing control to align with the dynamic demands of the task and generative stage.
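
As a rough illustration of the idea described in this abstract, the following toy sketch shows a hypernetwork that maps a diffusion time and a condition vector to low-rank factors, which are then added to a frozen weight matrix. It is not the authors' architecture: the class `HyperLoRA`, the helper `adapted_forward`, the sinusoidal time features, the linear hypernetwork heads, and the zero initialization of the `B` head are all assumptions made for this example.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def time_embedding(t, dim):
    """Sinusoidal features of the diffusion time t (hypothetical choice)."""
    half = dim // 2
    freqs = [2.0 ** k for k in range(half)]
    return [math.sin(f * t) for f in freqs] + [math.cos(f * t) for f in freqs]


class HyperLoRA:
    """Toy hypernetwork: (time, condition) -> LoRA factors A and B."""

    def __init__(self, d_in, d_out, rank, cond_dim, time_dim=4, seed=0):
        rng = random.Random(seed)
        self.d_in, self.d_out, self.rank = d_in, d_out, rank
        self.time_dim = time_dim
        feat = cond_dim + time_dim
        # Linear head producing the flattened A factor (rank x d_in).
        self.head_a = [[rng.gauss(0.0, 0.1) for _ in range(feat)]
                       for _ in range(rank * d_in)]
        # Linear head producing the flattened B factor (d_out x rank).
        # Assumption: zero init, so the adapter starts as a no-op.
        self.head_b = [[0.0] * feat for _ in range(d_out * rank)]

    def adapters(self, t, cond):
        feats = [[v] for v in list(cond) + time_embedding(t, self.time_dim)]
        flat_a = [row[0] for row in matmul(self.head_a, feats)]
        flat_b = [row[0] for row in matmul(self.head_b, feats)]
        A = [flat_a[i * self.d_in:(i + 1) * self.d_in] for i in range(self.rank)]
        B = [flat_b[i * self.rank:(i + 1) * self.rank] for i in range(self.d_out)]
        return A, B


def adapted_forward(W, x, A, B, scale=1.0):
    """Compute (W + scale * B A) x without modifying the frozen weight W."""
    x_col = [[v] for v in x]
    base = matmul(W, x_col)
    low_rank = matmul(B, matmul(A, x_col))
    return [b[0] + scale * l[0] for b, l in zip(base, low_rank)]
```

In this sketch the factors returned by `adapters` change with `t`, so the effective weight differs from one denoising step to the next, while `W` itself is never updated.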

3D-Generalist: Self-Improving Vision-Language-Action Models for Crafting 3D Worlds

Jul 09, 2025

Abstract: Despite large-scale pretraining endowing models with language and vision reasoning capabilities, improving their spatial reasoning capability remains challenging due to the lack of data grounded in the 3D world. While it is possible for humans to manually create immersive and interactive worlds through 3D graphics, as seen in applications such as VR, gaming, and robotics, this process remains highly labor-intensive. In this paper, we propose a scalable method for generating high-quality 3D environments that can serve as training data for foundation models. We recast 3D environment building as a sequential decision-making problem, employing Vision-Language-Models (VLMs) as policies that output actions to jointly craft a 3D environment's layout, materials, lighting, and assets. Our proposed framework, 3D-Generalist, trains VLMs to generate more prompt-aligned 3D environments via self-improvement fine-tuning. We demonstrate the effectiveness of 3D-Generalist and the proposed training strategy in generating simulation-ready 3D environments. Furthermore, we demonstrate its quality and scalability in synthetic data generation by pretraining a vision foundation model on the generated data. After fine-tuning the pre-trained model on downstream tasks, we show that it surpasses models pre-trained on meticulously human-crafted synthetic data and approaches results achieved with real data orders of magnitude larger.

Enhancing Dynamical System Modeling through Interpretable Machine Learning Augmentations: A Case Study in Cathodic Electrophoretic Deposition

Jan 16, 2024

Abstract: We introduce a comprehensive data-driven framework aimed at enhancing the modeling of physical systems, employing inference techniques and machine learning enhancements. As a demonstrative application, we pursue the modeling of cathodic electrophoretic deposition (EPD), commonly known as e-coating. Our approach illustrates a systematic procedure for enhancing physical models by identifying their limitations through inference on experimental data and introducing adaptable model enhancements to address these shortcomings. We begin by tackling the issue of model parameter identifiability, which reveals aspects of the model that require improvement. To address generalizability, we introduce modifications which also enhance identifiability. However, these modifications do not fully capture essential experimental behaviors. To overcome this limitation, we incorporate interpretable yet flexible augmentations into the baseline model. These augmentations are parameterized by simple fully-connected neural networks (FNNs), and we leverage machine learning tools, particularly Neural Ordinary Differential Equations (Neural ODEs), to learn these augmentations. Our simulations demonstrate that the machine learning-augmented model more accurately captures observed behaviors and improves predictive accuracy. Nevertheless, we contend that while the model updates offer superior performance and capture the relevant physics, we can reduce off-line computational costs by eliminating certain dynamics without compromising accuracy or interpretability in downstream predictions of quantities of interest, particularly film thickness predictions. The entire process outlined here provides a structured approach to leverage data-driven methods. Firstly, it helps us comprehend the root causes of model inaccuracies, and secondly, it offers a principled method for enhancing model performance.

CoCoGen: Physically-Consistent and Conditioned Score-based Generative Models for Forward and Inverse Problems

Dec 16, 2023

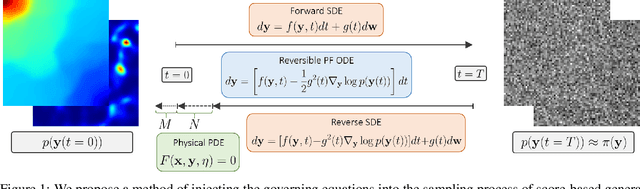

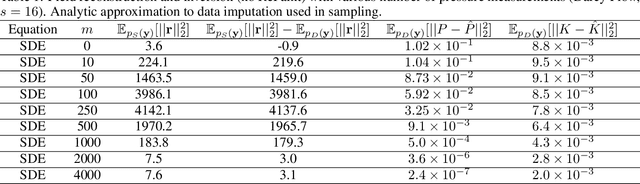

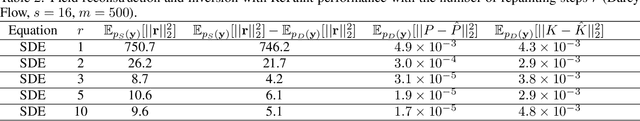

Abstract: Recent advances in generative artificial intelligence have had a significant impact on diverse domains spanning computer vision, natural language processing, and drug discovery. This work extends the reach of generative models into physical problem domains, particularly addressing the efficient enforcement of physical laws and conditioning for forward and inverse problems involving partial differential equations (PDEs). Our work introduces two key contributions: firstly, we present an efficient approach to promote consistency with the underlying PDE. By incorporating discretized information into score-based generative models, our method generates samples closely aligned with the true data distribution, showcasing residuals comparable to data generated through conventional PDE solvers, significantly enhancing fidelity. Secondly, we showcase the potential and versatility of score-based generative models in various physics tasks, specifically highlighting surrogate modeling as well as probabilistic field reconstruction and inversion from sparse measurements. A robust foundation is laid by designing unconditional score-based generative models that utilize reversible probability flow ordinary differential equations. Leveraging conditional models that require minimal training, we illustrate their flexibility when combined with a frozen unconditional model. These conditional models generate PDE solutions by incorporating parameters, macroscopic quantities, or partial field measurements as guidance. The results illustrate the inherent flexibility of score-based generative models and explore the synergy between unconditional score-based generative models and the present physically-consistent sampling approach, emphasizing the power and flexibility in solving for and inverting physical fields governed by differential equations, and in other scientific machine learning tasks.

Disentangling Generative Factors of Physical Fields Using Variational Autoencoders

Sep 15, 2021

Abstract: The ability to extract generative parameters from high-dimensional fields of data in an unsupervised manner is a highly desirable yet unrealized goal in computational physics. This work explores the use of variational autoencoders (VAEs) for non-linear dimension reduction with the aim of disentangling the low-dimensional latent variables to identify independent physical parameters that generated the data. A disentangled decomposition is interpretable and can be transferred to a variety of tasks including generative modeling, design optimization, and probabilistic reduced order modelling. A major emphasis of this work is to characterize disentanglement using VAEs while minimally modifying the classic VAE loss function (i.e. the ELBO) to maintain high reconstruction accuracy. Disentanglement is shown to be highly sensitive to rotations of the latent space, hyperparameters, random initializations and the learning schedule. The loss landscape is characterized by over-regularized local minima that surround desirable solutions. We illustrate comparisons between disentangled and entangled representations by juxtaposing learned latent distributions and the 'true' generative factors in a model porous flow problem. Implementing hierarchical priors (HP) is shown to better facilitate the learning of disentangled representations over the classic VAE. The choice of the prior distribution is shown to have a dramatic effect on disentanglement. In particular, the regularization loss is unaffected by latent rotation when training with rotationally-invariant priors, and thus learning non-rotationally-invariant priors aids greatly in capturing the properties of generative factors, improving disentanglement. Some issues inherent to training VAEs, such as the convergence to over-regularized local minima, are illustrated and investigated, and potential techniques for mitigation are presented.
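
For readers unfamiliar with the classic VAE loss mentioned in this abstract, the sketch below writes out a negative ELBO for a single sample: a reconstruction term plus a KL regularization term. It assumes a diagonal Gaussian posterior, a standard normal prior, and a unit-variance Gaussian likelihood (so the reconstruction term is half the squared error, up to an additive constant). The function names and the `beta` weight are hypothetical and are not taken from the paper, which also studies hierarchical priors not shown here.

```python
import math


def gaussian_kl(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) in closed form."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))


def negative_elbo(x, x_recon, mu, log_var, beta=1.0):
    """Reconstruction loss plus beta-weighted KL regularization.

    Assumes a unit-variance Gaussian likelihood, so the reconstruction
    term is half the squared error (additive constants dropped).
    beta = 1.0 corresponds to the classic ELBO.
    """
    recon = 0.5 * sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + beta * gaussian_kl(mu, log_var)
```

The KL term is the regularization loss. With a standard normal prior it vanishes when the posterior matches the prior, which is the situation in which the latent code carries no information about the input.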
