Christian Bailer

Flow Fields: Dense Correspondence Fields for Highly Accurate Large Displacement Optical Flow Estimation

Oct 28, 2018

Abstract: Modern large displacement optical flow algorithms usually use an initialization by either sparse descriptor matching techniques or dense approximate nearest neighbor fields. While the latter have the advantage of being dense, they have the major disadvantage of being very outlier-prone, as they are not designed to find the optical flow but the visually most similar correspondence. In this article we present a dense correspondence field approach that is much less outlier-prone and thus much better suited for optical flow estimation than approximate nearest neighbor fields. Our approach does not require explicit regularization, smoothing (like median filtering) or a new data term. Instead, we rely solely on patch matching techniques and a novel multi-scale matching strategy. We also present enhancements for outlier filtering. We show that our approach is better suited for large displacement optical flow estimation than modern descriptor matching techniques. We do so by initializing EpicFlow with our approach instead of its originally used state-of-the-art descriptor matching technique. We significantly outperform the original EpicFlow on MPI-Sintel, KITTI 2012, KITTI 2015 and Middlebury. In this extended version of our earlier conference publication, we further improve the matching accuracy and runtime of our approach and present more experiments and insights.
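
The abstract mentions outlier filtering without saying how it is done. One widely used filter for correspondence fields is a forward-backward consistency check, sketched below as an illustration rather than as the authors' method. The function name `consistent_matches`, the dictionary-based flow representation and the `max_error` tolerance are all assumptions made for this example.

```python
import math
from typing import Dict, Tuple

Pixel = Tuple[int, int]
Flow = Tuple[float, float]


def consistent_matches(
    forward: Dict[Pixel, Flow],
    backward: Dict[Pixel, Flow],
    max_error: float = 1.0,  # hypothetical tolerance in pixels
) -> Dict[Pixel, Flow]:
    """Keep forward matches whose backward match leads back near the start.

    forward maps a pixel in image 1 to its flow vector towards image 2;
    backward maps a pixel in image 2 to its flow vector towards image 1.
    """
    kept: Dict[Pixel, Flow] = {}
    for (x, y), (u, v) in forward.items():
        # Pixel in image 2 that the forward match points to.
        target = (round(x + u), round(y + v))
        if target not in backward:
            continue  # no backward match available: treat as an outlier
        bu, bv = backward[target]
        # For a consistent pair, forward and backward flow cancel out.
        error = math.hypot(u + bu, v + bv)
        if error <= max_error:
            kept[(x, y)] = (u, v)
    return kept


forward = {(0, 0): (2.0, 0.0), (1, 0): (3.0, 0.0)}
backward = {(2, 0): (-2.0, 0.0), (4, 0): (1.0, 0.0)}
filtered = consistent_matches(forward, backward)
```

In this toy input the match at `(0, 0)` survives because its backward flow returns exactly to the start, while the match at `(1, 0)` is dropped.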

FlowFields++: Accurate Optical Flow Correspondences Meet Robust Interpolation

May 09, 2018

Abstract: Optical flow algorithms are of high importance for many applications. Recently, the Flow Fields algorithm and its modifications have shown remarkable results, achieving top accuracy on different data sets. In our analysis of the algorithm we have found that it produces accurate sparse matches, but there is room for improvement in the interpolation. Thus, we propose in this paper FlowFields++, where we combine the accurate matches of Flow Fields with a robust interpolation. In addition, we propose improved variational optimization as post-processing. Our new algorithm is evaluated on the challenging KITTI and MPI Sintel data sets with public top results on both benchmarks.

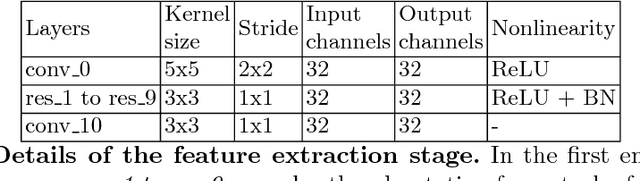

Fast Feature Extraction with CNNs with Pooling Layers

May 08, 2018

Abstract: In recent years, many publications have shown that convolutional neural network based features can outperform engineered features. However, little effort has been made so far to extract local features efficiently for a whole image. In this paper, we present an approach to compute patch-based local feature descriptors efficiently in the presence of pooling and striding layers for whole images at once. Our approach is generic and can be applied to nearly all existing network architectures. This includes networks for all local feature extraction tasks like camera calibration, patch matching, optical flow estimation and stereo matching. In addition, our approach can be applied to other patch-based approaches like sliding window object detection and recognition. We complete our paper with a speed benchmark of popular CNN based feature extraction approaches applied to a whole image, with and without our speedup, and example code (for Torch) that shows how an arbitrary CNN architecture can be easily converted by our approach.

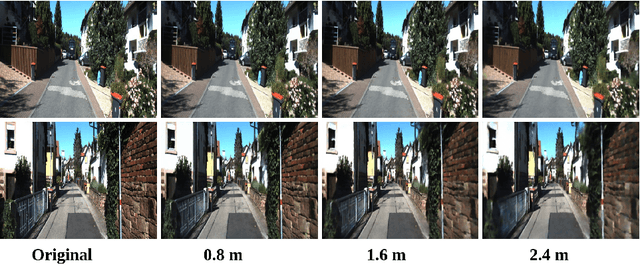

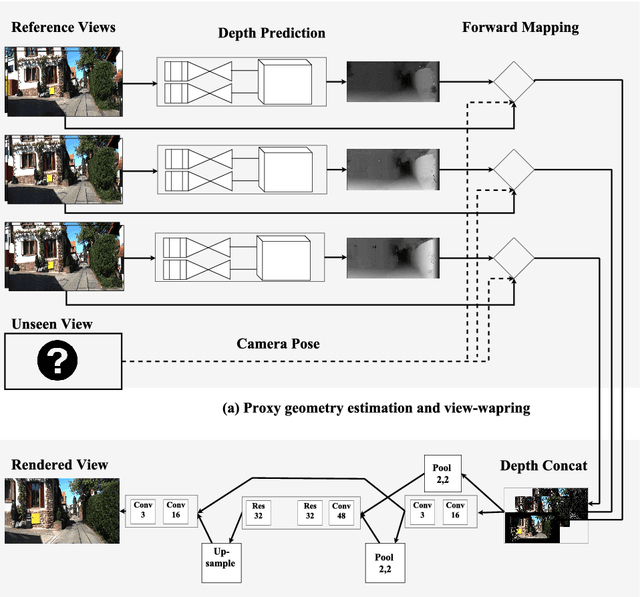

Fast View Synthesis with Deep Stereo Vision

May 07, 2018

Abstract: Novel view synthesis is an important problem in computer vision and graphics. Over the years a large number of solutions have been put forward to solve the problem. However, the large-baseline novel view synthesis problem is far from being "solved". Recent works have attempted to use Convolutional Neural Networks (CNNs) to solve view synthesis tasks. Due to the difficulty of learning scene geometry and interpreting camera motion, CNNs are often unable to generate realistic novel views. In this paper, we present a novel view synthesis approach based on stereo vision and CNNs that decomposes the problem into two sub-tasks: view-dependent geometry estimation and texture inpainting. Both tasks are structured prediction problems that can be effectively learned with CNNs. Experiments on the KITTI Odometry dataset show that our approach is more accurate and significantly faster than the current state-of-the-art. The code and supplementary material will be publicly available. Results can be found at https://youtu.be/5pzS9jc-5t0

Combining Stereo Disparity and Optical Flow for Basic Scene Flow

Jan 15, 2018

Abstract: Scene flow is a description of real world motion in 3D that contains more information than optical flow. Because of its complexity, there exists no applicable variant for real-time scene flow estimation in an automotive or commercial vehicle context that is sufficiently robust and accurate. Therefore, many applications estimate the 2D optical flow instead. In this paper, we examine the combination of top-performing state-of-the-art optical flow and stereo disparity algorithms in order to achieve a basic scene flow. On the public KITTI Scene Flow Benchmark we demonstrate the reasonable accuracy of the combination approach and show its speed in computation.
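
To make the idea of combining disparity and optical flow concrete, the following minimal sketch back-projects a pixel at two time steps using standard rectified-stereo geometry and takes the difference of the two 3D points. It is an illustration under stated assumptions, not the paper's pipeline: the function names and the camera parameters (`focal`, `baseline`, `cx`, `cy`) are hypothetical, and the second disparity is assumed to be sampled at the flow target pixel.

```python
from typing import Tuple

Point3D = Tuple[float, float, float]


def backproject(x: float, y: float, disparity: float,
                focal: float, baseline: float,
                cx: float, cy: float) -> Point3D:
    """Convert a pixel and its stereo disparity into a 3D point.

    Uses the rectified pinhole stereo relation Z = focal * baseline / disparity.
    """
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    z = focal * baseline / disparity
    return ((x - cx) * z / focal, (y - cy) * z / focal, z)


def basic_scene_flow(x: float, y: float, flow: Tuple[float, float],
                     disp0: float, disp1: float,
                     focal: float, baseline: float,
                     cx: float, cy: float) -> Point3D:
    """3D motion of one pixel from optical flow plus two disparities.

    disp0 is the disparity at (x, y) in the first frame; disp1 is the
    disparity at the flow target (x + u, y + v) in the second frame.
    """
    u, v = flow
    p0 = backproject(x, y, disp0, focal, baseline, cx, cy)
    p1 = backproject(x + u, y + v, disp1, focal, baseline, cx, cy)
    return (p1[0] - p0[0], p1[1] - p0[1], p1[2] - p0[2])


# Toy values chosen for easy arithmetic, not taken from any dataset.
motion = basic_scene_flow(10.0, 20.0, (10.0, 0.0), disp0=10.0, disp1=5.0,
                          focal=100.0, baseline=0.5, cx=0.0, cy=0.0)
```

Here the disparity halves between frames, so the point moves away from the camera and `motion` has a positive depth component.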

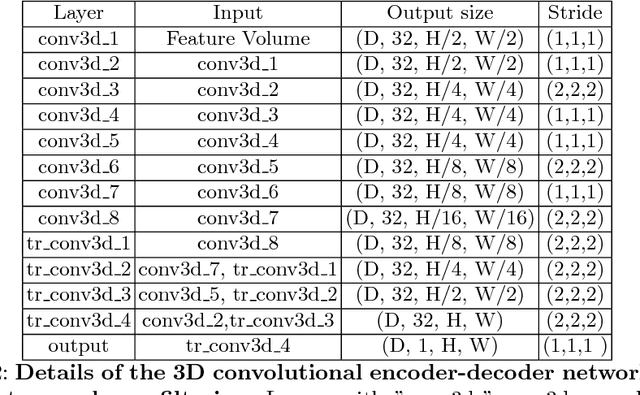

SceneFlowFields: Dense Interpolation of Sparse Scene Flow Correspondences

Oct 27, 2017

Abstract: While most scene flow methods use either variational optimization or a strong rigid motion assumption, we show for the first time that scene flow can also be estimated by dense interpolation of sparse matches. To this end, we find sparse matches across two stereo image pairs that are detected without any prior regularization and perform dense interpolation preserving geometric and motion boundaries by using edge information. A few iterations of variational energy minimization are performed to refine our results, which are thoroughly evaluated on the KITTI benchmark and additionally compared to state-of-the-art on MPI Sintel. For application in an automotive context, we further show that an optional ego-motion model helps to boost performance and blends smoothly into our approach to produce a segmentation of the scene into static and dynamic parts.

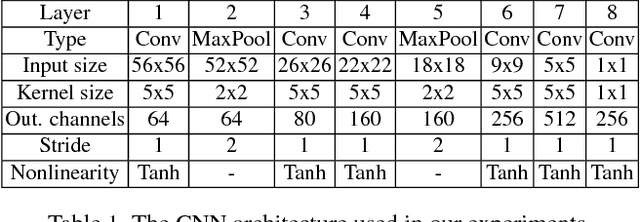

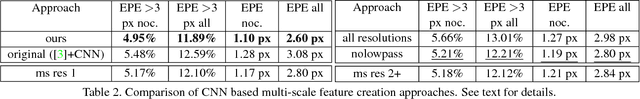

CNN-based Patch Matching for Optical Flow with Thresholded Hinge Embedding Loss

May 18, 2017

Abstract: Learning based approaches have not yet achieved their full potential in optical flow estimation, where their performance still trails heuristic approaches. In this paper, we present a CNN based patch matching approach for optical flow estimation. An important contribution of our approach is a novel thresholded loss for Siamese networks. We demonstrate that our loss performs clearly better than existing losses. It also speeds up training by a factor of 2 in our tests. Furthermore, we present a novel way of calculating CNN based features for different image scales, which performs better than existing methods. We also discuss new ways of evaluating the robustness of trained features for the application of patch matching for optical flow. An interesting discovery in our paper is that low-pass filtering of feature maps can increase the robustness of features created by CNNs. We demonstrate the competitive performance of our approach by submitting it to the KITTI 2012, KITTI 2015 and MPI-Sintel evaluation portals, where we obtained state-of-the-art results on all three datasets.
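
The abstract does not state the formula of the thresholded loss. As a rough sketch, one plausible form subtracts a threshold from the descriptor distance before applying a standard hinge embedding loss, so that matching pairs already closer than the threshold are not penalized further. The function names and the `margin` and `threshold` values below are assumptions for illustration and are not taken from the paper.

```python
import math
from typing import Sequence


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def thresholded_hinge_loss(d_match: float, d_nonmatch: float,
                           margin: float = 1.0,
                           threshold: float = 0.3) -> float:
    """Hinge embedding loss with a distance threshold (assumed form).

    d_match is the descriptor distance of a matching patch pair and
    d_nonmatch the distance of a non-matching pair.
    """
    # Matching pairs are only penalized beyond the threshold.
    match_term = max(0.0, d_match - threshold)
    # Non-matching pairs are pushed beyond threshold + margin.
    nonmatch_term = max(0.0, margin - (d_nonmatch - threshold))
    return match_term + nonmatch_term


# A pair of descriptors that is already well separated yields zero loss.
easy = thresholded_hinge_loss(d_match=0.2, d_nonmatch=2.0)
# A harder pair contributes through both terms.
hard = thresholded_hinge_loss(d_match=0.5, d_nonmatch=0.8)
```

With these hypothetical settings, `easy` is zero because both terms are clipped by the hinge, while `hard` receives a penalty from the matching and the non-matching term.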
