Chris Vorster

Enhancing CLIP with GPT-4: Harnessing Visual Descriptions as Prompts

Aug 08, 2023

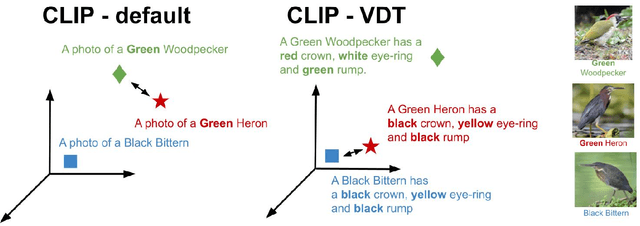

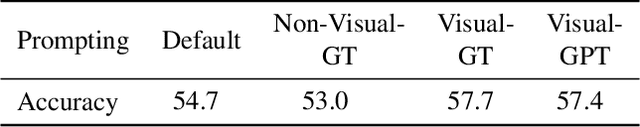

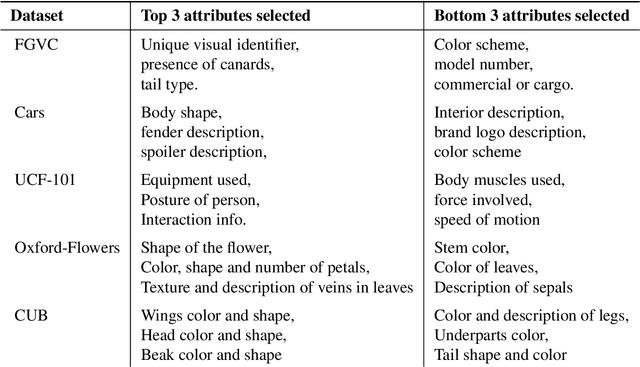

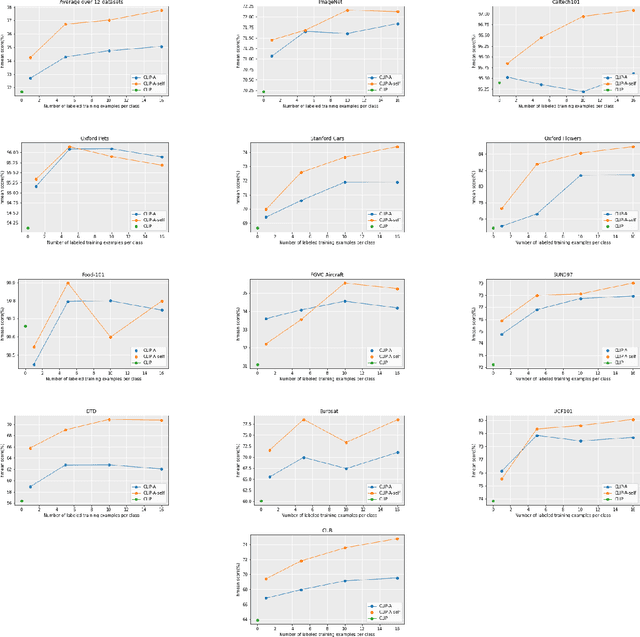

Abstract: Contrastive pretrained large Vision-Language Models (VLMs) like CLIP have revolutionized visual representation learning by providing good performance on downstream datasets. VLMs are 0-shot adapted to a downstream dataset by designing prompts that are relevant to the dataset. Such prompt engineering makes use of domain expertise and a validation dataset. Meanwhile, recent developments in generative pretrained models like GPT-4 mean they can be used as advanced internet search tools. They can also be manipulated to provide visual information in any structure. In this work, we show that GPT-4 can be used to generate text that is visually descriptive and how this can be used to adapt CLIP to downstream tasks. We show considerable improvements in 0-shot transfer accuracy on specialized fine-grained datasets like EuroSAT (~7%), DTD (~7%), SUN397 (~4.6%), and CUB (~3.3%) when compared to CLIP's default prompt. We also design a simple few-shot adapter that learns to choose the best possible sentences to construct generalizable classifiers that outperform the recently proposed CoCoOP by ~2% on average and by over 4% on 4 specialized fine-grained datasets. The code, prompts, and auxiliary text dataset are available at https://github.com/mayug/VDT-Adapter.
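To make the zero-shot idea in the abstract concrete, here is a minimal sketch of how several visually descriptive sentences per class could be combined into a single classifier. It is an illustration under stated assumptions, not the authors' implementation: the three-dimensional vectors are made-up stand-ins for CLIP text and image embeddings, the class names and the helpers `class_prototype` and `zero_shot_predict` are hypothetical, and averaging the sentence embeddings is a common prompt-ensembling choice assumed here rather than something the abstract confirms.

```python
import math


def normalize(vec):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def class_prototype(sentence_embeddings):
    """Average the unit-normalized embeddings of one class's descriptive
    sentences, then re-normalize (assumed ensembling scheme)."""
    unit = [normalize(e) for e in sentence_embeddings]
    dim = len(unit[0])
    mean = [sum(e[i] for e in unit) / len(unit) for i in range(dim)]
    return normalize(mean)


def zero_shot_predict(image_embedding, prototypes):
    """Return the class whose prototype has the highest cosine similarity
    with the image embedding, plus all similarity scores."""
    img = normalize(image_embedding)
    scores = {
        name: sum(a * b for a, b in zip(img, proto))
        for name, proto in prototypes.items()
    }
    return max(scores, key=scores.get), scores


# Toy embeddings (hypothetical): in practice these would come from a text
# encoder applied to generated descriptions of each class.
text_embeddings = {
    "forest": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "river": [[0.1, 0.9, 0.2], [0.0, 0.8, 0.3]],
}
prototypes = {name: class_prototype(e) for name, e in text_embeddings.items()}

# Toy image embedding (hypothetical), closer to the "forest" descriptions.
label, scores = zero_shot_predict([0.7, 0.2, 0.1], prototypes)
print(label)
```

The few-shot adapter mentioned in the abstract would replace the plain average with learned weights over sentences; that step is not sketched here because the abstract does not specify its form.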

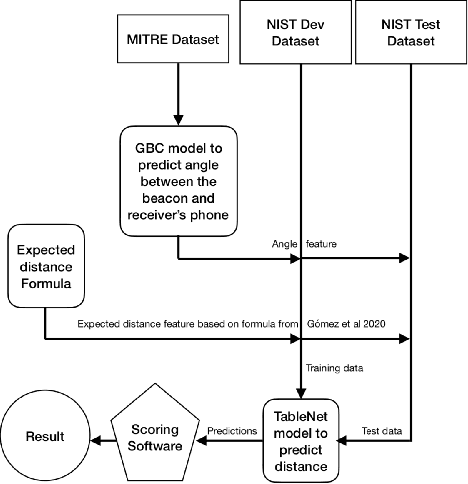

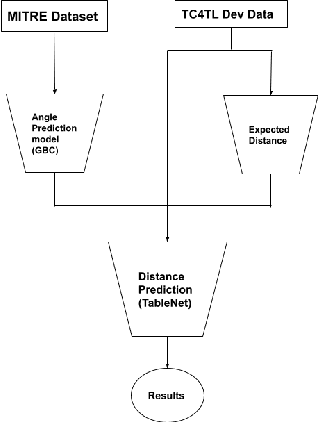

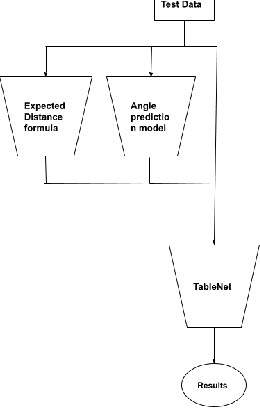

A Machine Learning Approach to Digital Contact Tracing: TC4TL Challenge

Mar 08, 2022

Abstract: Contact tracing is a method used by public health organisations to try to prevent the spread of infectious diseases in the community. Traditionally it has been performed by manual contact tracers; more recently, the use of apps has been considered, utilising phone sensor data to determine the distance between two phones. In this paper, we investigate the development of machine learning approaches to determine the distance between two mobile phone devices using Bluetooth Low Energy, sensor data and metadata. We use TableNet architecture and feature engineering to improve on the existing state of the art (total nDCF 0.21 vs 2.08), significantly outperforming existing models.
