Chiaki Sakama

Editors

Human Conditional Reasoning in Answer Set Programming

Nov 08, 2023

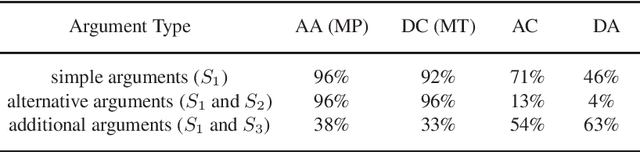

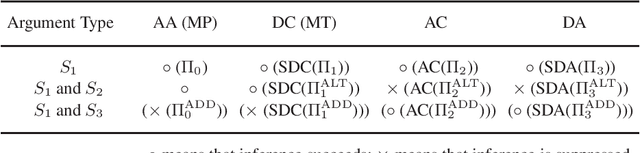

Abstract: Given a conditional sentence P=>Q (if P then Q) and the corresponding facts, four different types of inference are observed in human reasoning. Affirming the antecedent (AA) (or modus ponens) reasons Q from P; affirming the consequent (AC) reasons P from Q; denying the antecedent (DA) reasons -Q from -P; and denying the consequent (DC) (or modus tollens) reasons -P from -Q. Among them, AA and DC are logically valid, while AC and DA are logically invalid and are often called logical fallacies. Nevertheless, humans often perform AC or DA as pragmatic inferences in daily life. In this paper, we realize AC, DA and DC inferences in answer set programming. Eight different types of completion are introduced, and their semantics are given by answer sets. We investigate their formal properties and characterize human reasoning tasks in cognitive psychology. These completions are also applied to commonsense reasoning in AI.
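The validity claim for the four patterns can be checked mechanically. The sketch below is only a classical truth-table illustration, not the paper's answer-set completions. It enumerates the four truth assignments for P and Q and, for each pattern, looks for an assignment where P=>Q and the premise hold but the conclusion fails. The names `PATTERNS`, `implies`, `counterexample` and `is_valid` are hypothetical helpers introduced here.

```python
from itertools import product

# Each pattern maps to (premise, conclusion), both functions of the truth values p, q.
PATTERNS = {
    "AA": (lambda p, q: p, lambda p, q: q),          # from P infer Q (modus ponens)
    "AC": (lambda p, q: q, lambda p, q: p),          # from Q infer P
    "DA": (lambda p, q: not p, lambda p, q: not q),  # from -P infer -Q
    "DC": (lambda p, q: not q, lambda p, q: not p),  # from -Q infer -P (modus tollens)
}


def implies(a, b):
    """Material implication a => b."""
    return (not a) or b


def counterexample(name):
    """Return the first (p, q) where P=>Q and the premise hold but the
    conclusion fails, or None if the pattern is classically valid."""
    premise, conclusion = PATTERNS[name]
    for p, q in product([False, True], repeat=2):
        if implies(p, q) and premise(p, q) and not conclusion(p, q):
            return (p, q)
    return None


def is_valid(name):
    """A pattern is valid when no counterexample exists."""
    return counterexample(name) is None


for name in PATTERNS:
    print(name, "valid" if is_valid(name) else f"invalid, e.g. (P, Q) = {counterexample(name)}")
```

Running it reports AA and DC as valid. AC and DA both fail on the same assignment, P false and Q true, where P=>Q holds vacuously.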

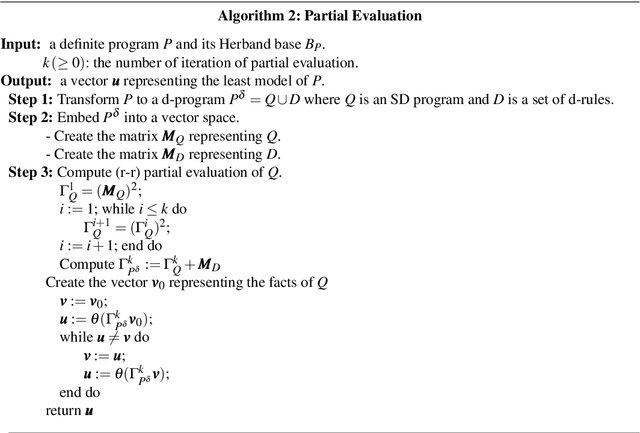

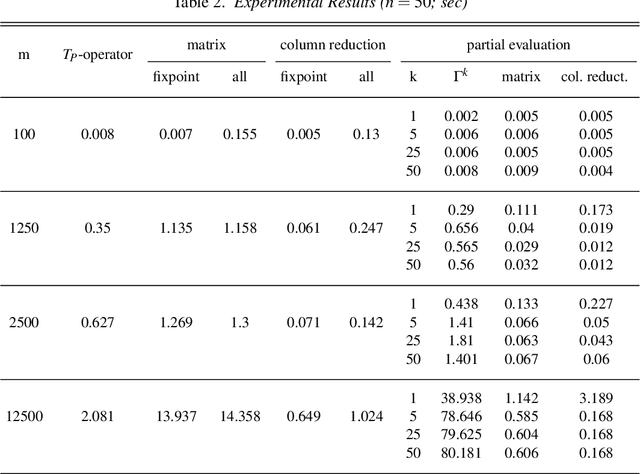

Partial Evaluation of Logic Programs in Vector Spaces

Nov 28, 2018

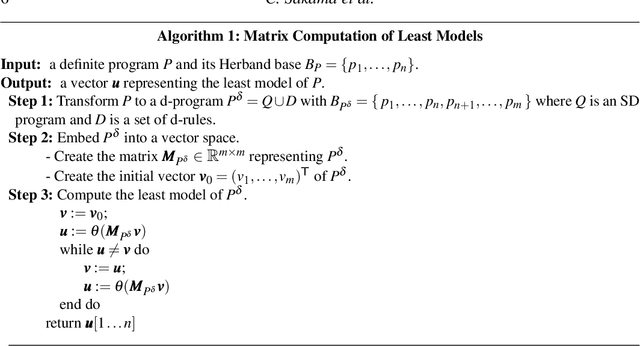

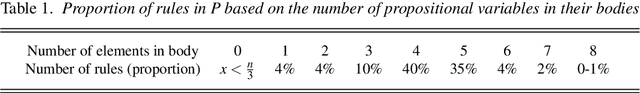

Abstract: In this paper, we introduce methods of encoding propositional logic programs in vector spaces. Interpretations are represented by vectors and programs are represented by matrices. The least model of a definite program is computed by multiplying an interpretation vector and a program matrix. To optimize computation in vector spaces, we provide a method of partial evaluation of programs using linear algebra. Partial evaluation is done by unfolding rules in a program, and it is realized in a vector space by multiplying program matrices. We perform experiments using randomly generated programs and show that partial evaluation has the potential to make computation efficient for very large programs.
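A minimal sketch of the least-model computation, assuming one plausible encoding that may differ in detail from the paper's. For a rule h <- b1, ..., bm, row h of the program matrix gets weight 1/m in each column bi. A thresholding step maps an entry to 1 exactly when it reaches 1, that is, when the whole body is true. Facts are written as rules whose body is a special always-true atom `TOP`, and the sketch assumes each atom heads at most one rule. The names `TOP`, `program_matrix` and `least_model` are hypothetical. `Fraction` keeps the 1/m weights exact, so the threshold test is not disturbed by rounding. The paper's partial-evaluation step (multiplying program matrices) is not reproduced here.

```python
from fractions import Fraction

TOP = "T"  # hypothetical atom standing for 'true'; facts are rules with body [TOP]


def program_matrix(rules):
    """Build the program matrix: entry (h, b) is 1/m for each body atom b of h <- b1..bm."""
    heads = [h for h, _ in rules]
    if len(heads) != len(set(heads)):
        raise ValueError("this sketch assumes each atom heads at most one rule")
    atoms = sorted({TOP} | set(heads) | {b for _, body in rules for b in body})
    index = {a: i for i, a in enumerate(atoms)}
    n = len(atoms)
    M = [[Fraction(0)] * n for _ in range(n)]
    M[index[TOP]][index[TOP]] = Fraction(1)  # keep TOP true at every step
    for head, body in rules:
        if not body:
            raise ValueError("write facts as rules with body [TOP]")
        for b in body:
            M[index[head]][index[b]] += Fraction(1, len(body))
    return atoms, index, M


def least_model(rules):
    """Iterate v <- threshold(M v) from {TOP} until a fixpoint is reached."""
    atoms, index, M = program_matrix(rules)
    n = len(atoms)
    v = [0] * n
    v[index[TOP]] = 1
    while True:
        # An atom becomes 1 iff the weighted sum over its body reaches 1.
        u = [1 if sum(M[i][j] * v[j] for j in range(n)) >= 1 else 0 for i in range(n)]
        if u == v:
            return {a for a in atoms if a != TOP and v[index[a]] == 1}
        v = u


# p <- q, r.   q.   r <- q.   s <- p, t.
rules = [("p", ["q", "r"]), ("q", [TOP]), ("r", ["q"]), ("s", ["p", "t"])]
print(sorted(least_model(rules)))  # ['p', 'q', 'r']
```

Each iteration corresponds to one application of the immediate-consequence operator. Starting from the vector containing only `TOP`, the sequence grows monotonically and stops at the least model. In the example, `s` is never derived because `t` is not.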

Post-Proceedings of the First International Workshop on Learning and Nonmonotonic Reasoning

Nov 19, 2013

Abstract: Knowledge Representation and Reasoning and Machine Learning are two important fields in AI. Nonmonotonic logic programming (NMLP) and Answer Set Programming (ASP) provide formal languages for representing and reasoning with commonsense knowledge and realize declarative problem solving in AI. On the other side, Inductive Logic Programming (ILP) realizes Machine Learning in logic programming. It provides a formal background to inductive learning, and its techniques have been applied to relational learning and data mining. Generally speaking, NMLP and ASP realize nonmonotonic reasoning but lack the ability to learn. By contrast, ILP realizes inductive learning, but most of its techniques have been developed under classical monotonic logic. Against this background, some researchers have attempted to combine these techniques in the context of nonmonotonic ILP. Such a combination would introduce a learning mechanism to programs and could open up new applications on the NMLP side, while on the ILP side it would extend the representation language and enable the use of existing solvers. Cross-fertilization between learning and nonmonotonic reasoning can also occur in areas such as the use of answer set solvers for ILP, speed-up learning while running answer set solvers, learning action theories, learning transition rules in dynamical systems, abductive learning, learning biological networks with inhibition, and applications involving default and negation. This workshop was the first attempt to provide an open forum for identifying problems and discussing possible collaborations among researchers with complementary expertise. The workshop was held on September 15, 2013 in Corunna, Spain. These post-proceedings contain five technical papers (out of six accepted papers) and the abstract of the invited talk by Luc De Raedt.

Confidentiality-Preserving Data Publishing for Credulous Users by Extended Abduction

Aug 30, 2011

Abstract: Publishing private data on external servers raises the problem of how to avoid unwanted disclosure of confidential data. We study the problem of confidentiality in extended disjunctive logic programs and show how it can be solved by extended abduction. In particular, we analyze how credulous nonmonotonic reasoning affects confidentiality.
