Chia-Hsiang Lin

Department of Electrical Engineering, National Cheng Kung University; Miin Wu School of Computing, National Cheng Kung University

COS2A: Conversion from Sentinel-2 to AVIRIS Hyperspectral Data Using Interpretable Algorithm With Spectral-Spatial Duality

Jul 09, 2025

Abstract:The Sentinel-2 satellite, launched by the European Space Agency (ESA), offers extensive spatial coverage and has become indispensable in a wide range of remote sensing applications. However, it has only 12 spectral bands, which makes substance/object identification less effective, not to mention the varying spatial resolutions (10/20/60 m) across the 12 bands. If such a multi-resolution 12-band image can be computationally converted into a hyperspectral image with uniformly high resolution (i.e., 10 m), remote identification tasks become significantly easier. Though some spectral super-resolution methods exist, they do not address the multi-resolution issue and, more seriously, they mostly focus on CAVE-level hyperspectral image reconstruction (involving only 31 visible bands), which greatly limits their applicability in real-world remote sensing scenarios. We ambitiously aim to convert Sentinel-2 data directly into NASA's AVIRIS-level hyperspectral images (encompassing up to 172 visible and near-infrared (NIR) bands, after ignoring the absorption/corruption ones). For the first time, this paper solves this specific (highly ill-posed) super-resolution problem, allowing all historical Sentinel-2 data to have corresponding high-standard AVIRIS counterparts. We achieve this by customizing a novel algorithm that introduces deep unfolding regularization and Q-quadratic-norm regularization into the so-called convex/deep (CODE) small-data learning criterion. Based on the derived spectral-spatial duality, the proposed interpretable COS2A algorithm demonstrates superior spectral super-resolution results across diverse land cover types, as validated through extensive experiments.

Quantum-Driven Multihead Inland Waterbody Detection With Transformer-Encoded CYGNSS Delay-Doppler Map Data

May 22, 2025

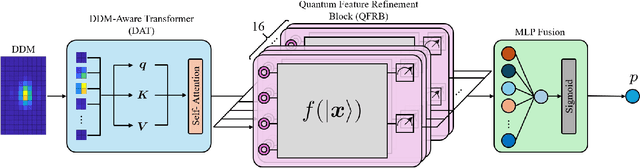

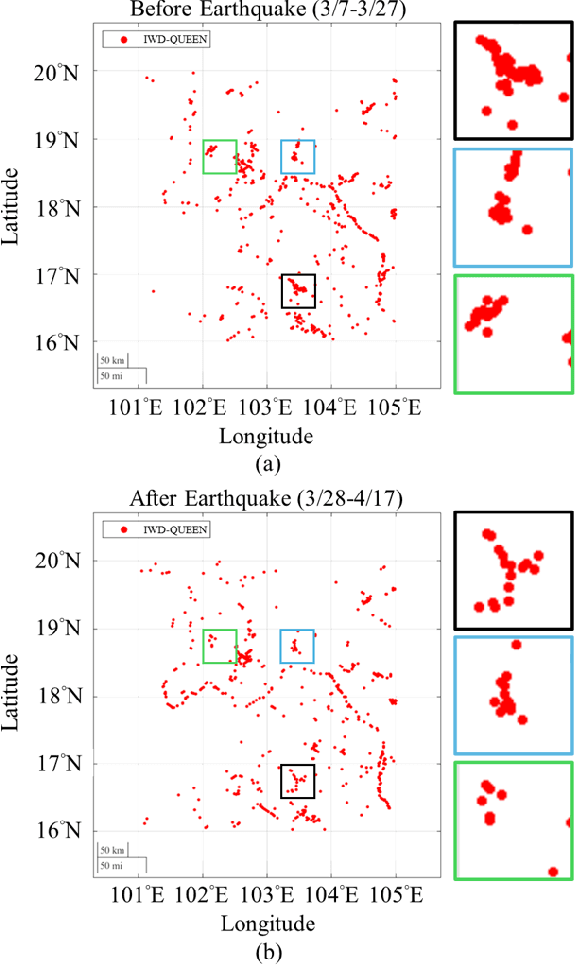

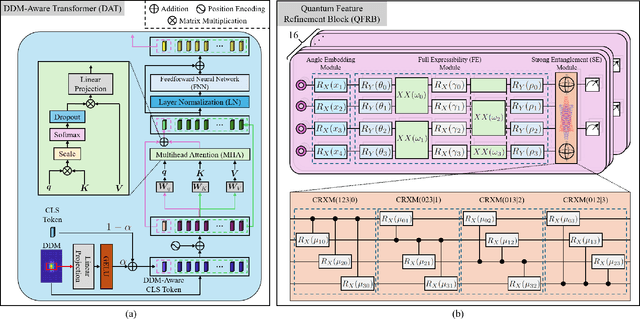

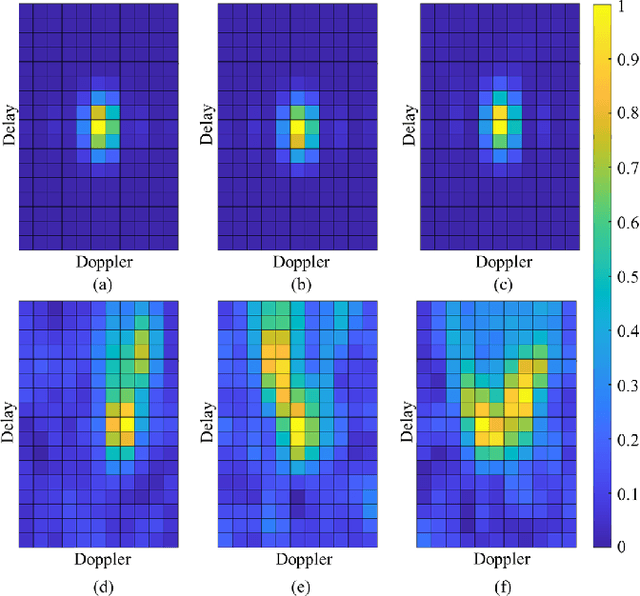

Abstract:Inland waterbody detection (IWD) is critical for water resources management and agricultural planning. However, the development of high-fidelity IWD mapping technology remains unresolved. We aim to propose a practical solution based on easily accessible data, i.e., the delay-Doppler map (DDM) provided by NASA's Cyclone Global Navigation Satellite System (CYGNSS), which facilitates effective estimation of physical parameters on the Earth's surface with high temporal resolution and wide spatial coverage. Specifically, as the quantum deep network (QUEEN) has shown strong proficiency in addressing classification-like tasks, we encode the DDM using a customized transformer, and then feed the transformer-encoded DDM (tDDM) into a highly entangled QUEEN to determine whether the tDDM corresponds to a hydrological region. In recent literature, QUEEN has achieved outstanding performance in numerous challenging remote sensing tasks (e.g., hyperspectral restoration, change detection, and mixed noise removal), and its high effectiveness stems from the fundamentally different way it extracts features (the so-called quantum unitary-computing features). The meticulously designed IWD-QUEEN retrieves high-precision river textures, such as those in the Amazon River Basin in South America, demonstrating its superiority over traditional classification methods and existing global hydrography maps. IWD-QUEEN, together with its parallel quantum multihead scheme, works in a near-real-time manner (i.e., millisecond-level computing per DDM). To broaden accessibility for users of traditional computers, we also provide the non-quantum counterpart of our method, called IWD-Transformer, thereby increasing the impact of this work.

HyperKING: Quantum-Classical Generative Adversarial Networks for Hyperspectral Image Restoration

Apr 16, 2025

Abstract:Quantum machine intelligence is starting to show its impact on satellite remote sensing (SRS). Recent literature also indicates that quantum generative intelligence holds greater potential than its classical counterpart, motivating us to develop quantum generative adversarial networks (GANs) for SRS. However, existing quantum GANs are restricted by the limited quantum bit (qubit) resources of current quantum computers and process merely a small 2x2 grayscale image, far from being applicable to SRS. Recently, the novel concept of a hybrid quantum-classical GAN, a quantum generator with a classical discriminator, has raised the image size to 28x28 (still grayscale), which is still insufficient for SRS. This motivates us to design a radically new hybrid framework, where both the generator and the discriminator are hybrid architectures. We demonstrate its feasibility, leading to a breakthrough of processing 128x128 hyperspectral images for SRS. Specifically, we design the quantum part with mathematically provable quantum full expressibility (FE) to address core signal processing tasks, wherein the FE property allows the quantum network to realize any valid quantum operator with appropriate training. The classical part, composed of convolutional layers, handles the read-in (compressing the optical information into limited qubits) and read-out (addressing the quantum collapse effect) procedures. The proposed hybrid quantum GAN is named Hyperspectral Knot-like IntelligeNt dIscrimiNator and Generator (HyperKING), where the knot partly symbolizes quantum entanglement and partly the compressed quantum domain in the central part of the network architecture. HyperKING significantly surpasses classical approaches in hyperspectral tensor completion, mixed noise removal (about 3 dB improvement), and blind source separation.

A Quantum-Empowered SPEI Drought Forecasting Algorithm Using Spatially-Aware Mamba Network

Feb 28, 2025

Abstract:Due to the intensifying impacts of extreme climate change, drought forecasting (DF), which aims to predict droughts from historical meteorological data, has become increasingly critical for monitoring and managing water resources. Though drought conditions often exhibit spatial climatic coherence among neighboring regions, benchmark deep learning-based DF methods overlook this fact and predict the conditions on a region-by-region basis. Using the Standardized Precipitation Evapotranspiration Index (SPEI), we designed and trained a novel spatially-aware DF neural network, which effectively captures local interactions among neighboring regions, resulting in enhanced spatial coherence and prediction accuracy. As DF also requires sophisticated temporal analysis, the Mamba network, recognized as the most accurate and efficient existing time-sequence model, was adopted to extract temporal features from short-term time frames. We also adopted quantum neural networks (QNN) to entangle the spatial features of different time instances, leading to refined spatiotemporal features of seven different meteorological variables for effectively identifying short-term climate fluctuations. In the last stage of our proposed SPEI-driven quantum spatially-aware Mamba network (SQUARE-Mamba), the extracted spatiotemporal features of the seven meteorological variables were fused to achieve more accurate DF. Validation experiments across El Niño, La Niña, and normal years demonstrated the superiority of the proposed SQUARE-Mamba, which achieved an average improvement of more than 9.8% in the coefficient of determination (R^2) compared to baseline methods, thereby illustrating the promising roles of temporal quantum entanglement and Mamba temporal analysis in achieving more accurate DF.
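
For readers unfamiliar with the coefficient of determination (R^2) cited above, the following is a minimal sketch of how it is commonly computed. The SPEI values are made-up illustrative numbers, not data from the paper, and the function name `r_squared` is our own.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)          # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total sum of squares
    return 1.0 - ss_res / ss_tot

# Hypothetical observed and forecast SPEI values (illustration only).
spei_observed = np.array([-1.2, -0.4, 0.3, 0.9, 1.5])
spei_forecast = np.array([-1.0, -0.5, 0.2, 1.1, 1.3])
score = r_squared(spei_observed, spei_forecast)
print(f"R^2 = {score:.3f}")
```

A perfect forecast gives R^2 = 1, while always predicting the mean of the observations gives R^2 = 0.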

Quantum Feature-Empowered Deep Classification for Fast Mangrove Mapping

Jan 06, 2025

Abstract:A mangrove mapping (MM) algorithm is an essential classification tool for environmental monitoring. The recent literature shows that compared with other index-based MM methods that treat pixels as spatially independent, convolutional neural networks (CNNs) are crucial for leveraging spatial continuity information, leading to improved classification performance. In this work, we go a step further to show that quantum features provide radically new information for CNN to further upgrade the classification results. Simply speaking, CNN computes affine-mapping features, while quantum neural network (QNN) offers unitary-computing features, thereby offering a fresh perspective in the final decision-making (classification). To address the challenging MM problem, we design an entangled spatial-spectral quantum feature extraction module. Notably, to ensure that the quantum features contribute genuinely novel information (unaffected by traditional CNN features), we design a separate network track consisting solely of quantum neurons with built-in interpretability. The extracted pure quantum information is then fused with traditional feature information to jointly make the final decision. The proposed quantum-empowered deep network (QEDNet) is very lightweight, so the improvement does come from the cooperation between CNN and QNN (rather than parameter augmentation). Extensive experiments will be conducted to demonstrate the superiority of QEDNet.
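
The contrast between affine-mapping and unitary-computing features can be illustrated numerically. The sketch below is not QEDNet; it only assumes a random affine map and a random unitary matrix (built via QR decomposition) to show that a unitary transform preserves the norm of its input, whereas an affine map generally does not.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 4

# Affine map W x + b, as computed by a dense or convolutional layer.
W = rng.normal(size=(dim, dim))
b = rng.normal(size=dim)

# Random unitary matrix: the Q factor of a complex Gaussian matrix.
A = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
U, _ = np.linalg.qr(A)

# Unit-norm input vector (analogous to a normalized quantum state).
x = rng.normal(size=dim)
x = x / np.linalg.norm(x)

affine_feature = W @ x + b
unitary_feature = U @ x

norm_affine = np.linalg.norm(affine_feature)
norm_unitary = np.linalg.norm(unitary_feature)
print("affine feature norm: ", norm_affine)
print("unitary feature norm:", norm_unitary)  # stays at 1 up to rounding
```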

Transformer-Driven Inverse Problem Transform for Fast Blind Hyperspectral Image Dehazing

Jan 03, 2025

Abstract:Hyperspectral dehazing (HyDHZ) has become a crucial signal processing technology to facilitate the subsequent identification and classification tasks, as the airborne visible/infrared imaging spectrometer (AVIRIS) data portal reports a massive portion of haze-corrupted areas in typical hyperspectral remote sensing images. The idea of inverse problem transform (IPT) has been proposed in recent remote sensing literature in order to reformulate a hardly tractable inverse problem (e.g., HyDHZ) into a relatively simple one. Considering the emerging spectral super-resolution (SSR) technique, which spectrally upsamples multispectral data to hyperspectral data, we aim to solve the challenging HyDHZ problem by reformulating it as an SSR problem. Roughly speaking, the proposed algorithm first automatically selects some uncorrupted/informative spectral bands, from which SSR is applied to spectrally upsample the selected bands in the feature space, thereby obtaining a clean hyperspectral image (HSI). The clean HSI is then further refined by a deep transformer network to obtain the final dehazed HSI, where a global attention mechanism is designed to capture nonlocal information. There are very few HyDHZ works in existing literature, and this article introduces the powerful spatial-spectral transformer into HyDHZ for the first time. Remarkably, the proposed transformer-driven IPT-based HyDHZ (T2HyDHZ) is a blind algorithm without requiring the user to manually select the corrupted region. Extensive experiments demonstrate the superiority of T2HyDHZ with less color distortion.

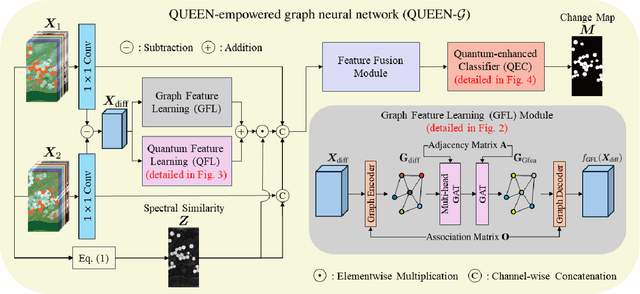

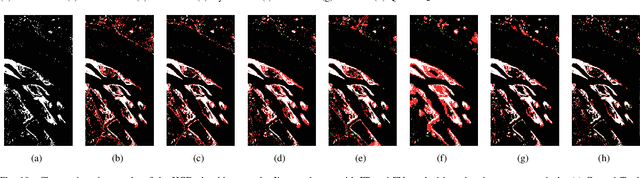

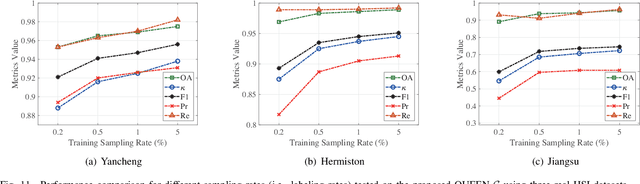

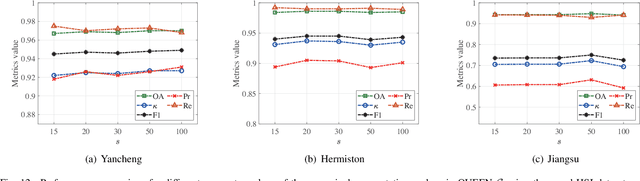

Quantum Information-Empowered Graph Neural Network for Hyperspectral Change Detection

Nov 12, 2024

Abstract:Change detection (CD) is a critical remote sensing technique for identifying changes in the Earth's surface over time. The outstanding substance identifiability of hyperspectral images (HSIs) has significantly enhanced the detection accuracy, making hyperspectral change detection (HCD) an essential technology. The detection accuracy can be further improved by leveraging the graph structure of HSIs, motivating us to adopt graph neural networks (GNNs) in solving HCD. For the first time, this work introduces the quantum deep network (QUEEN) into HCD. Unlike GNNs and CNNs, both of which extract affine-computing features, QUEEN provides fundamentally different unitary-computing features. We demonstrate that through the unitary feature extraction procedure, QUEEN provides radically new information for deciding whether there is a change or not. Hierarchically, a graph feature learning (GFL) module exploits the graph structure of the bitemporal HSIs at the superpixel level, while a quantum feature learning (QFL) module learns the quantum features at the pixel level, as a complement to GFL that preserves pixel-level detailed spatial information not retained in the superpixels. In the final classification stage, a quantum classifier is designed to cooperate with a traditional fully connected classifier. The superior HCD performance of the proposed QUEEN-empowered GNN (i.e., QUEEN-G) will be experimentally demonstrated on real hyperspectral datasets.

PRIME: Blind Multispectral Unmixing Using Virtual Quantum Prism and Convex Geometry

Jul 22, 2024

Abstract:Multispectral unmixing (MU) is critical due to the inevitable mixed pixel phenomenon caused by the limited spatial resolution of typical multispectral images in remote sensing. However, MU mathematically corresponds to the underdetermined blind source separation problem and is thus highly challenging, which has prevented researchers from tackling it. Previous MU works all ignore the underdetermined issue, and merely consider scenarios with more bands than sources. This work attempts to resolve the underdetermined issue by further conducting the light-splitting task using a network-inspired virtual prism, and as this task is challenging, we achieve this by incorporating advanced quantum feature extraction techniques. We emphasize that the prism is virtual (allowing us to fix the spectral response as a simple deterministic matrix), so the virtual hyperspectral image (HSI) it generates has no need to correspond to some real hyperspectral sensor; in other words, it is good enough as long as the virtual HSI satisfies some fundamental properties of light splitting (e.g., non-negativity and continuity). With the above virtual quantum prism, we know that the virtual HSI is expected to possess some desired simplex structure. This allows us to adopt convex geometry to unmix the spectra, followed by downsampling the pure spectra back to the multispectral domain, thereby achieving MU. Experimental evidence shows the great potential of our MU algorithm, termed prism-inspired multispectral endmember extraction (PRIME).

Hyper-Restormer: A General Hyperspectral Image Restoration Transformer for Remote Sensing Imaging

Dec 12, 2023

Abstract:The deep learning model Transformer has achieved remarkable success in hyperspectral image (HSI) restoration tasks by leveraging Spectral and Spatial Self-Attention (SA) mechanisms. However, applying these designs to remote sensing (RS) HSI restoration tasks, which involve far more spectral bands than typical HSIs (e.g., the ICVL dataset with 31 bands), presents challenges due to the enormous computational complexity of the Spectral and Spatial SA mechanisms. To address this problem, we propose Hyper-Restormer, a lightweight and effective Transformer-based architecture for RS HSI restoration. First, we introduce a novel Lightweight Spectral-Spatial (LSS) Transformer Block that utilizes both Spectral and Spatial SA to capture long-range dependencies of the input feature maps. Additionally, we employ a novel Lightweight Locally-enhanced Feed-Forward Network (LLFF) to further enhance local context information. Then, LSS Transformer Blocks construct a Single-stage Lightweight Spectral-Spatial Transformer (SLSST) that utilizes the low-rank property of RS HSI to decompose the feature maps into basis and abundance components, enabling Spectral and Spatial SA with low computational cost. Finally, the proposed Hyper-Restormer cascades several SLSSTs in a stepwise manner to progressively enhance the quality of RS HSI restoration from coarse to fine. Extensive experiments were conducted on various RS HSI restoration tasks, including denoising, inpainting, and super-resolution, demonstrating that the proposed Hyper-Restormer outperforms other state-of-the-art methods.
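
The low-rank property mentioned above can be illustrated with a small numerical sketch. This is not the Hyper-Restormer decomposition, which is learned inside the network and not detailed in the abstract; it assumes a synthetic cube and a plain truncated SVD, and the names `low_rank_factor`, `basis`, and `abundance` are our own. Unlike physical abundances, the SVD coefficients here are not constrained to be non-negative.

```python
import numpy as np

rng = np.random.default_rng(0)
rows, cols, bands, rank = 16, 16, 100, 5

# Synthetic low-rank cube: every pixel spectrum mixes `rank` spectra.
basis_true = rng.random((bands, rank))
abund_true = rng.random((rank, rows * cols))
cube = (basis_true @ abund_true).T.reshape(rows, cols, bands)

def low_rank_factor(cube, rank):
    """Factor an (H, W, B) cube into a (B, rank) spectral basis and
    (rank, H*W) per-pixel coefficients using a truncated SVD."""
    h, w, b = cube.shape
    X = cube.reshape(h * w, b).T                 # bands x pixels
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    basis = U[:, :rank]                          # spectral basis
    abundance = s[:rank, None] * Vt[:rank]       # coefficients per pixel
    return basis, abundance

basis, abundance = low_rank_factor(cube, rank)
recon = (basis @ abundance).T.reshape(rows, cols, bands)
rel_err = np.linalg.norm(recon - cube) / np.linalg.norm(cube)
print("relative reconstruction error:", rel_err)
```

In this toy setting, the factors hold 100 x 5 + 5 x 256 numbers instead of the 100 x 256 of the full cube. That gap is why operating on basis and coefficient components can reduce the cost of attention.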

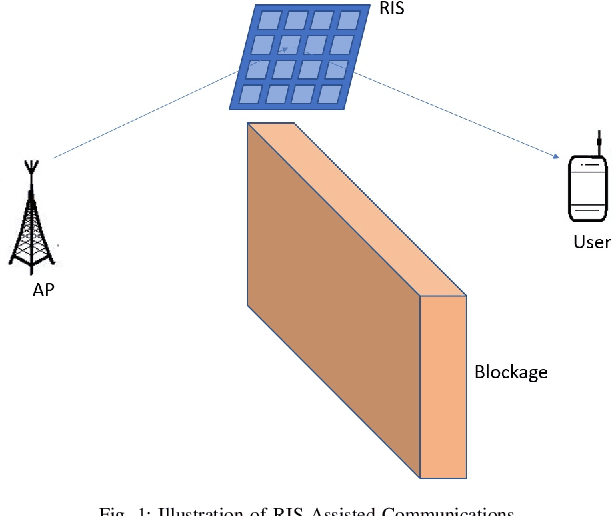

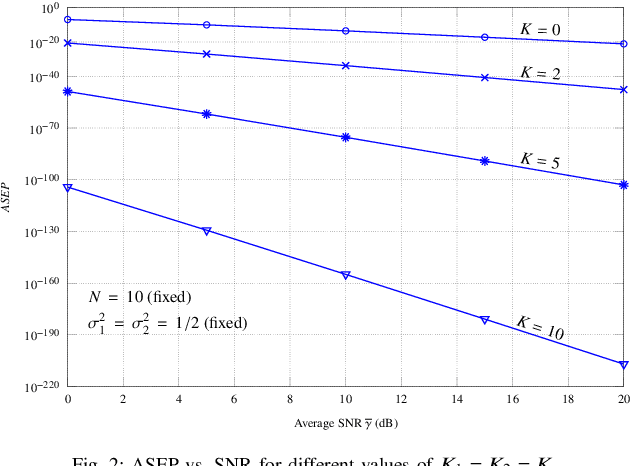

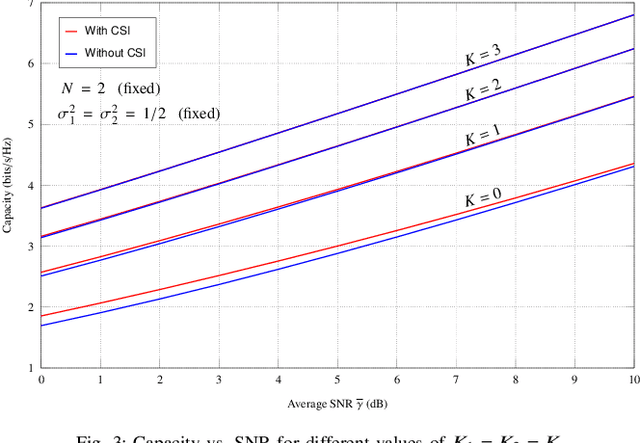

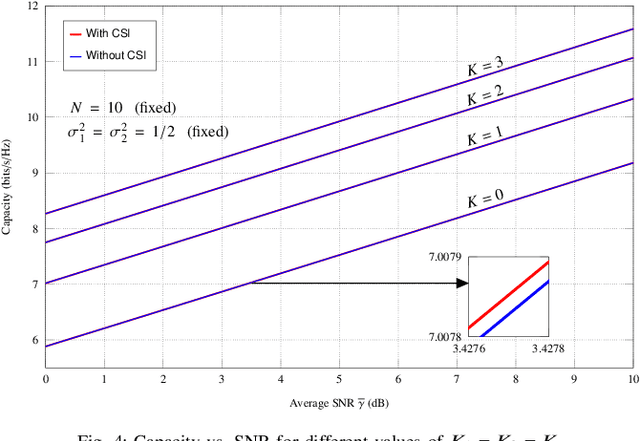

Capacity and Performance Analysis of RIS-Assisted Communication Over Rician Fading Channels

Nov 08, 2021

Abstract:This paper investigates two performance metrics, namely ergodic capacity and symbol error rate, of a mmWave communication system assisted by a reconfigurable intelligent surface (RIS). We assume independent and identically distributed (i.i.d.) Rician fading on the user-RIS-access point (AP) links, with the RIS consisting of passive reflecting elements. First, we derive a new unified closed-form formula for the average symbol error probability of generalised M-QAM/M-PSK signalling over this mmWave link. We then obtain new closed-form expressions for the ergodic capacity with and without channel state information (CSI) at the AP.
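
As a rough numerical companion to the ergodic capacity metric, the sketch below estimates it by Monte Carlo simulation rather than by the paper's closed-form expressions. It rests on assumptions not stated in the abstract: unit-power Rician links with the same K-factor on both hops, ideal RIS phase alignment (perfect CSI), and no direct user-AP path. The function names and parameter values are hypothetical.

```python
import numpy as np

def rician(rng, k_factor, size):
    """Unit-average-power Rician samples: fixed LoS part plus
    complex Gaussian scattering."""
    los = np.sqrt(k_factor / (k_factor + 1.0))
    scatter_std = np.sqrt(1.0 / (2.0 * (k_factor + 1.0)))
    scatter = scatter_std * (rng.normal(size=size) + 1j * rng.normal(size=size))
    return los + scatter

def ergodic_capacity(n_elements, snr_linear, k_factor, n_trials=20000, seed=0):
    """Monte Carlo estimate of E[log2(1 + SNR)] in bit/s/Hz, assuming
    the RIS phases co-phase all reflected paths."""
    rng = np.random.default_rng(seed)
    h = rician(rng, k_factor, (n_trials, n_elements))   # user -> RIS
    g = rician(rng, k_factor, (n_trials, n_elements))   # RIS -> AP
    combined = np.sum(np.abs(h) * np.abs(g), axis=1)    # coherent sum
    return np.mean(np.log2(1.0 + snr_linear * combined ** 2))

cap_small = ergodic_capacity(n_elements=4, snr_linear=1.0, k_factor=3.0)
cap_large = ergodic_capacity(n_elements=16, snr_linear=1.0, k_factor=3.0)
print(f"N=4:  {cap_small:.2f} bit/s/Hz")
print(f"N=16: {cap_large:.2f} bit/s/Hz")
```

Under these assumptions the estimate grows with the number of reflecting elements, since the coherently combined amplitude scales roughly linearly with N.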
