Cheongjae Jang

A.I. Institute, Hanyang University

On the Information Processing of One-Dimensional Wasserstein Distances with Finite Samples

Nov 17, 2025

Abstract:Leveraging the Wasserstein distance -- a summation of sample-wise transport distances in data space -- is advantageous in many applications for measuring support differences between two underlying density functions. However, when supports significantly overlap while densities exhibit substantial pointwise differences, it remains unclear whether and how this transport information can accurately identify these differences, particularly their analytic characterization in finite-sample settings. We address this issue by conducting an analysis of the information processing capabilities of the one-dimensional Wasserstein distance with finite samples. By utilizing the Poisson process and isolating the rate factor, we demonstrate the capability of capturing the pointwise density difference with Wasserstein distances and how this information harmonizes with support differences. The analyzed properties are confirmed using neural spike train decoding and amino acid contact frequency data. The results reveal that the one-dimensional Wasserstein distance highlights meaningful density differences related to both rate and support.
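As a rough illustration of the "sample-wise transport distances" mentioned in the abstract, the sketch below computes the empirical one-dimensional p-Wasserstein distance between two equal-sized samples by sorting and pairing them. It is not taken from the paper: the function name `wasserstein_1d`, the equal-sample-size restriction, and the normalisation by the sample count (a mean rather than a raw sum) are assumptions made for this example.

```python
import numpy as np


def wasserstein_1d(x, y, p=1):
    """Empirical 1D p-Wasserstein distance between two equal-sized samples.

    Hypothetical helper for illustration. In one dimension the optimal
    transport plan pairs the i-th smallest point of x with the i-th
    smallest point of y, so sorting both samples is sufficient.
    """
    x = np.sort(np.asarray(x, dtype=float))
    y = np.sort(np.asarray(y, dtype=float))
    if x.shape != y.shape:
        # Assumption of this sketch: both samples have the same size.
        raise ValueError("this sketch assumes equal sample sizes")
    # Average the p-th powers of the pairwise transport distances,
    # then take the p-th root.
    return float(np.mean(np.abs(x - y) ** p) ** (1.0 / p))


if __name__ == "__main__":
    a = [0.0, 1.0, 2.0]
    b = [3.0, 1.0, 2.0]  # same points as a, shifted by 1 and shuffled
    print(wasserstein_1d(a, b))
```

Because the pairing depends only on sorted order, the input order of each sample does not affect the result.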

Learning to increase matching efficiency in identifying additional b-jets in the $\text{t}\bar{\text{t}}\text{b}\bar{\text{b}}$ process

Mar 16, 2021

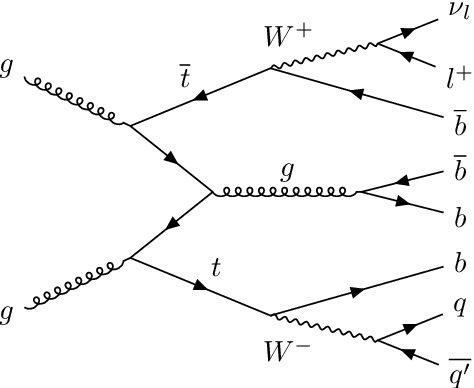

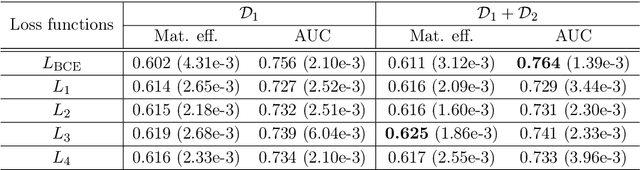

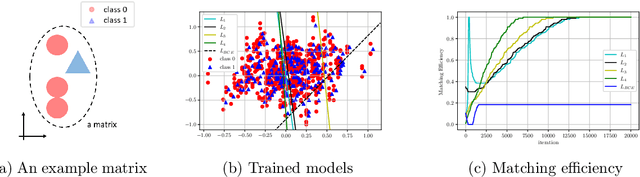

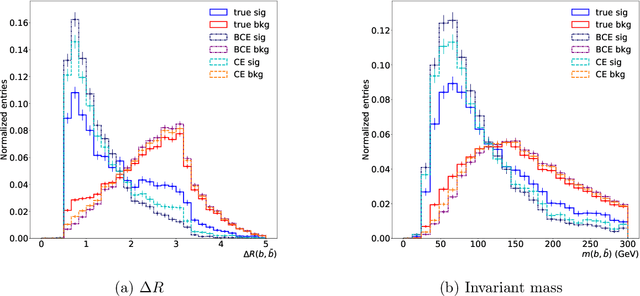

Abstract:The $\text{t}\bar{\text{t}}\text{H}(\text{b}\bar{\text{b}})$ process is an essential channel to reveal the Higgs properties but has an irreducible background from the $\text{t}\bar{\text{t}}\text{b}\bar{\text{b}}$ process, which produces a top quark pair in association with a b quark pair. Therefore, understanding the $\text{t}\bar{\text{t}}\text{b}\bar{\text{b}}$ process is crucial for improving the sensitivity of a search for the $\text{t}\bar{\text{t}}\text{H}(\text{b}\bar{\text{b}})$ process. To this end, when measuring the differential cross-section of the $\text{t}\bar{\text{t}}\text{b}\bar{\text{b}}$ process, we need to distinguish the b-jets originating from top quark decays from the additional b-jets originating from gluon splitting. Since there are no simple identification rules, we adopt deep learning methods to learn from data to identify the additional b-jets in the $\text{t}\bar{\text{t}}\text{b}\bar{\text{b}}$ events. Specifically, by exploiting the special structure of the $\text{t}\bar{\text{t}}\text{b}\bar{\text{b}}$ event data, we propose several loss functions that can be minimized to directly increase the matching efficiency, that is, the accuracy of identifying additional b-jets. We discuss the difference between our method and another deep learning approach based on binary classification (arXiv:1910.14535) using synthetic data. We then verify that additional b-jets can be identified more accurately by increasing the matching efficiency directly rather than the binary classification accuracy, using simulated $\text{t}\bar{\text{t}}\text{b}\bar{\text{b}}$ event data in the lepton+jets channel from pp collisions at $\sqrt{s}$ = 13 TeV.
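To make the notion of matching efficiency concrete, here is a minimal sketch that treats it as the fraction of events in which the highest-scoring candidate jet pair is the true additional b-jet pair. The abstract does not specify the data layout or the loss functions, so everything here is an assumption for illustration: the function name `matching_efficiency`, the per-event array of candidate-pair scores, and the encoding of the truth as a single pair index per event.

```python
import numpy as np


def matching_efficiency(pair_scores, true_pair_index):
    """Fraction of events whose top-scored jet pair is the true pair.

    Hypothetical helper for illustration.
    pair_scores: array of shape (n_events, n_candidate_pairs), one model
        score per candidate jet pair in each event (assumed layout).
    true_pair_index: array of shape (n_events,), index of the candidate
        pair that really contains the two additional b-jets.
    """
    pair_scores = np.asarray(pair_scores, dtype=float)
    true_pair_index = np.asarray(true_pair_index)
    # Pick the best-scoring candidate pair in each event.
    predicted = np.argmax(pair_scores, axis=1)
    # An event counts as matched only if that pair is the true one.
    return float(np.mean(predicted == true_pair_index))


if __name__ == "__main__":
    scores = [
        [0.1, 0.9, 0.0],  # event 0: pair 1 is picked
        [0.7, 0.2, 0.1],  # event 1: pair 0 is picked
        [0.2, 0.3, 0.5],  # event 2: pair 2 is picked
    ]
    truth = [1, 0, 0]
    print(matching_efficiency(scores, truth))
```

This differs from per-jet binary classification accuracy in that an event only counts when the selected pair as a whole is correct, which is the quantity the abstract says the proposed losses target directly.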

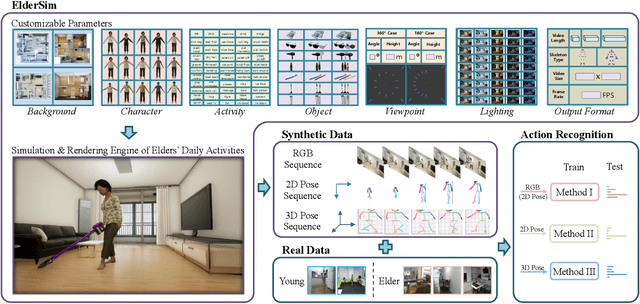

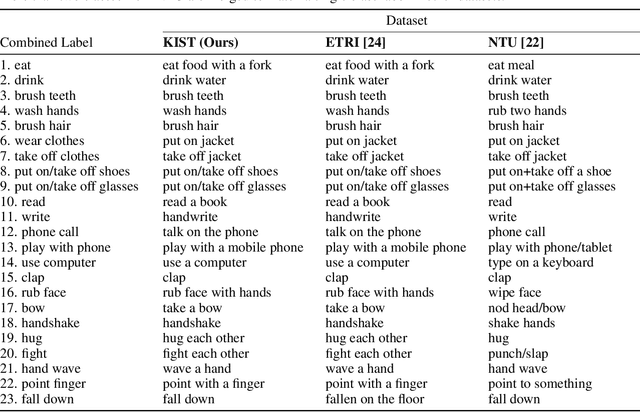

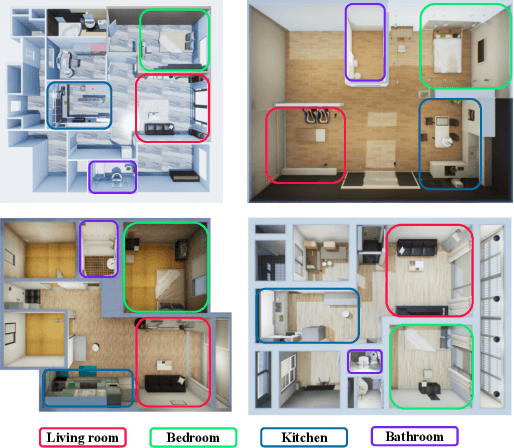

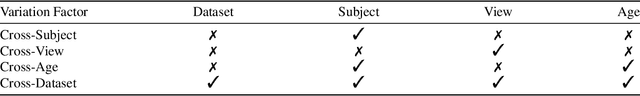

ElderSim: A Synthetic Data Generation Platform for Human Action Recognition in Eldercare Applications

Oct 28, 2020

Abstract:To train deep learning models for vision-based action recognition of elders' daily activities, we need large-scale activity datasets acquired under various daily living environments and conditions. However, most public datasets used in human action recognition either differ from or have limited coverage of elders' activities in many aspects, making it challenging to recognize elders' daily activities well using existing datasets alone. Recently, such limitations of available datasets have been actively compensated for by generating synthetic data from realistic simulation environments and using those data to train deep learning models. In this paper, based on these ideas, we develop ElderSim, an action simulation platform that can generate synthetic data on elders' daily activities. For 55 kinds of frequent daily activities of elders, ElderSim generates realistic motions of synthetic characters with various adjustable data-generating options, and provides different output modalities including RGB videos and two- and three-dimensional skeleton trajectories. We then generate KIST SynADL, a large-scale synthetic dataset of elders' activities of daily living, from ElderSim and use the data in addition to real datasets to train three state-of-the-art human action recognition models. From experiments following several newly proposed scenarios that assume different real and synthetic dataset configurations for training, we observe a noticeable performance improvement from augmenting with our synthetic data. We also offer guidance with insights for the effective utilization of synthetic data to help recognize elders' daily activities.
