Chengjie Niu

RIM-Net: Recursive Implicit Fields for Unsupervised Learning of Hierarchical Shape Structures

Jan 30, 2022

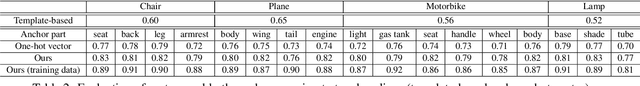

Abstract: We introduce RIM-Net, a neural network that learns recursive implicit fields for unsupervised inference of hierarchical shape structures. Our network recursively decomposes an input 3D shape into two parts, resulting in a binary tree hierarchy. Each level of the tree corresponds to an assembly of shape parts, represented as implicit functions, that reconstructs the input shape. At each node of the tree, simultaneous feature decoding and shape decomposition are carried out by their respective feature and part decoders, with weight sharing across the same hierarchy level. As an implicit field decoder, the part decoder is designed to decompose a sub-shape via a two-way branched reconstruction, where each branch predicts a set of parameters defining a Gaussian to serve as a local point distribution for shape reconstruction. With reconstruction losses accounted for at each hierarchy level and a decomposition loss at each node, our network training does not require any ground-truth segmentations, let alone hierarchies. Through extensive experiments and comparisons to state-of-the-art alternatives, we demonstrate the quality, consistency, and interpretability of hierarchical structural inference by RIM-Net.
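To make the binary decomposition concrete, here is a toy sketch of how an occupancy value at one query point could be split down a binary tree using a pair of Gaussians per node. It is not the RIM-Net decoder: the soft-assignment rule, the isotropic Gaussians, and the names `gaussian_weight`, `split_field`, and `decompose` are all assumptions made for illustration. It only shows the property that the parts at every tree level add back up to the input field.

```python
import math

def gaussian_weight(point, mean, sigma):
    """Unnormalized isotropic Gaussian evaluated at a 3D point."""
    sq_dist = sum((p - m) ** 2 for p, m in zip(point, mean))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

def split_field(point, parent_value, left, right):
    """Split a parent occupancy value between two child parts.

    `left` and `right` are (mean, sigma) pairs. Normalized soft assignment
    is an illustrative assumption, not the rule used in the paper.
    """
    wl = gaussian_weight(point, *left)
    wr = gaussian_weight(point, *right)
    total = wl + wr
    if total == 0.0:
        # Both weights underflowed: share the value equally.
        return 0.5 * parent_value, 0.5 * parent_value
    return parent_value * wl / total, parent_value * wr / total

def decompose(point, root_value, tree):
    """Return the part values at every level of a complete binary tree.

    tree[d] lists one (left, right) Gaussian pair per node at depth d,
    so tree[d] must contain 2**d entries.
    """
    levels = [[root_value]]
    for node_params in tree:
        if len(node_params) != len(levels[-1]):
            raise ValueError("expected one Gaussian pair per node")
        next_level = []
        for value, (left, right) in zip(levels[-1], node_params):
            next_level.extend(split_field(point, value, left, right))
        levels.append(next_level)
    return levels
```

Because each split is normalized, summing the part values at any level recovers the root value, which loosely mirrors the idea of a reconstruction constraint at each hierarchy level.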

Learning Part Generation and Assembly for Structure-aware Shape Synthesis

Jul 30, 2019

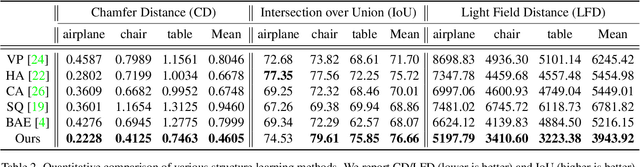

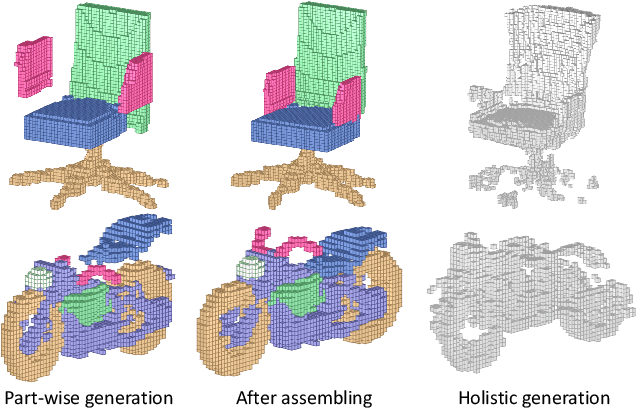

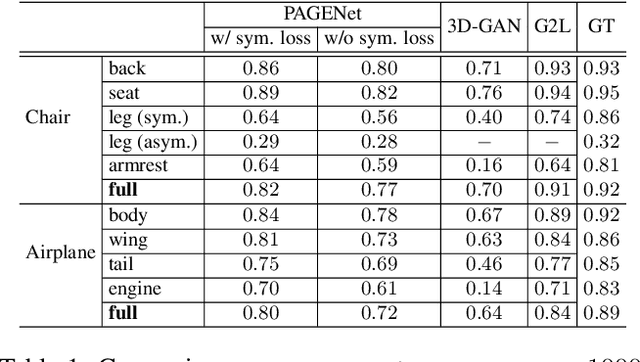

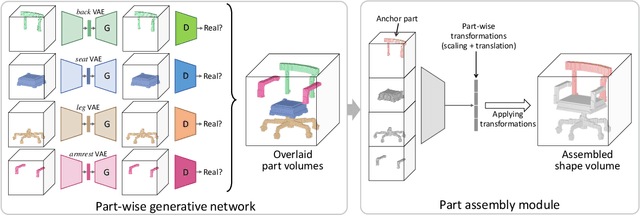

Abstract: Learning deep generative models for 3D shape synthesis is largely limited by the difficulty of generating plausible shapes with correct topology and reasonable geometry. Indeed, learning the distribution of plausible 3D shapes seems a daunting task for most existing holistic shape representations, given the significant topological variations of 3D objects even within the same shape category. Inspired by the common view that 3D shape structure is characterized by part composition and placement, we propose to model 3D shape variations with a part-aware deep generative network which we call PAGENet. The network is composed of an array of per-part VAE-GANs, which generate the semantic parts composing a complete shape, followed by a part assembly module that estimates a transformation for each part to correlate and assemble them into a plausible structure. By splitting the generation of part composition and part relations into separate networks, the difficulty of modeling structural variations of 3D shapes is greatly reduced. We demonstrate through extensive experiments that PAGENet generates 3D shapes with plausible, diverse and detailed structure, and show two prototype applications: semantic shape segmentation and shape set evolution.
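The assembly step described above, one transformation per generated part, can be sketched as follows. This is a minimal illustration under stated assumptions: parts are plain point lists, each transformation is a per-axis scale plus a translation, and the names `transform_part` and `assemble` are hypothetical. The abstract does not specify how PAGENet parameterizes or predicts its transformations.

```python
def transform_part(points, scale, translation):
    """Apply a per-axis scale, then a translation, to every 3D point."""
    return [
        tuple(s * p + t for p, s, t in zip(point, scale, translation))
        for point in points
    ]

def assemble(parts, transforms):
    """Place each part with its own transform and merge into one shape.

    parts:      list of point lists, one per semantic part
    transforms: list of (scale, translation) pairs, one per part
                (assumed parameterization, for illustration only)
    """
    if len(parts) != len(transforms):
        raise ValueError("expected one transform per part")
    shape = []
    for points, (scale, translation) in zip(parts, transforms):
        shape.extend(transform_part(points, scale, translation))
    return shape
```

Keeping part geometry and part placement in separate steps reflects the split the abstract describes: each part can be generated in its own canonical frame, and only the placement step has to reason about how parts relate.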

Im2Struct: Recovering 3D Shape Structure from a Single RGB Image

Apr 16, 2018

Abstract: We propose to recover 3D shape structures from single RGB images, where structure refers to shape parts represented by cuboids and part relations encompassing connectivity and symmetry. Given a single 2D image with an object depicted, our goal is to automatically recover a cuboid structure of the object parts as well as their mutual relations. We develop a convolutional-recursive auto-encoder comprising structure parsing of a 2D image followed by structure recovery of a cuboid hierarchy. The encoder is a multi-scale convolutional network trained on the task of shape contour estimation, thereby learning to discern object structures in various forms and scales. The decoder fuses the features of the structure parsing network and the original image, and recursively decodes a hierarchy of cuboids. Since the decoder network is trained to recover part relations, including connectivity and symmetry, explicitly, the plausibility and generality of part structure recovery can be ensured. The two networks are jointly trained on pairs of contour masks and cuboid structures. Such pairs are generated by rendering stock 3D CAD models that come with part segmentation. Our method achieves unprecedentedly faithful and detailed recovery of diverse 3D part structures from single-view 2D images. We demonstrate two applications of our method: structure-guided completion of 3D volumes reconstructed from single-view images and structure-aware interactive editing of 2D images.
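A cuboid hierarchy with connectivity and symmetry relations can be pictured as a small tree data structure. The sketch below is an assumption-laden illustration, not the Im2Struct decoder: the node types `Cuboid`, `Connect`, and `MirrorX`, the restriction to reflection across a plane of constant x, and the `flatten` helper are all hypothetical. It shows how a symmetry node lets one decoded part stand in for its mirrored copy.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Vec3 = Tuple[float, float, float]

@dataclass
class Cuboid:
    """Leaf node: an axis-aligned box given by its center and size."""
    center: Vec3
    size: Vec3

@dataclass
class Connect:
    """Connectivity node: groups two adjacent sub-structures."""
    left: "Node"
    right: "Node"

@dataclass
class MirrorX:
    """Symmetry node: the child plus its reflection across x = plane_x."""
    child: "Node"
    plane_x: float = 0.0

Node = Union[Cuboid, Connect, MirrorX]

def flatten(node: Node) -> List[Cuboid]:
    """Recursively expand a hierarchy into a flat list of cuboids."""
    if isinstance(node, Cuboid):
        return [node]
    if isinstance(node, Connect):
        return flatten(node.left) + flatten(node.right)
    if isinstance(node, MirrorX):
        boxes = flatten(node.child)
        mirrored = [
            Cuboid((2 * node.plane_x - b.center[0], b.center[1], b.center[2]), b.size)
            for b in boxes
        ]
        return boxes + mirrored
    raise TypeError(f"unknown node type: {type(node).__name__}")
```

For example, a seat connected to a mirrored leg expands into three cuboids:

```python
seat = Cuboid((0.0, 0.5, 0.0), (1.0, 0.1, 1.0))
leg = Cuboid((0.4, 0.25, 0.4), (0.1, 0.5, 0.1))
boxes = flatten(Connect(seat, MirrorX(leg)))
```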
