Cheng-Kang Hsieh

Convolutional Collaborative Filter Network for Video Based Recommendation Systems

Oct 22, 2018

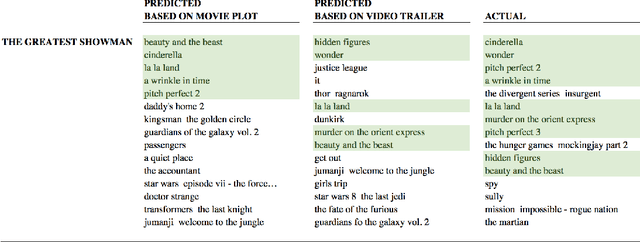

Abstract: This analysis explores the temporal sequencing of objects in a movie trailer. Such sequencing (e.g., a long shot of an object vs. intermittent short shots) can convey information about the type of movie, its plot, the role of the main characters, and the filmmakers' cinematographic choices. When combined with historical customer data, sequencing analysis can be used to improve predictions of customer behavior: for example, a customer who buys tickets to a new movie may have previously seen movies that contained similar sequences. To explore object sequencing in movie trailers, we propose a video convolutional network to capture actions and scenes that are predictive of customers' preferences. The model learns the specific nature of sequences for different types of objects (e.g., cars vs. faces) and the role of sequences in predicting customers' future behavior. We show how such a temporal-aware model outperforms the simple feature pooling methods proposed in our previous works and, importantly, demonstrate the additional model explainability allowed by such a model.
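The contrast the abstract draws between temporal modeling and simple feature pooling can be illustrated with a toy example. The sketch below is not the paper's network: the per-frame detection scores, the box-filter kernel, and the function names are all hypothetical. It only shows that two sequences with the same pooled average can still be told apart by a filter that slides along the time axis.

```python
def mean_pool(scores):
    """Average a per-frame score sequence, discarding temporal order."""
    return sum(scores) / len(scores)


def temporal_conv_max(scores, kernel):
    """Slide a 1D kernel over time and return the strongest response."""
    width = len(kernel)
    responses = [
        sum(k * s for k, s in zip(kernel, scores[start:start + width]))
        for start in range(len(scores) - width + 1)
    ]
    return max(responses)


# Hypothetical per-frame "object present" scores for two trailers.
long_shot = [0, 0, 1, 1, 1, 1, 0, 0]      # one sustained shot
intermittent = [1, 0, 1, 0, 1, 0, 1, 0]   # short, repeated shots

# Assumed kernel: a four-frame box filter that responds to sustained presence.
kernel = [0.25, 0.25, 0.25, 0.25]

pooled_long = mean_pool(long_shot)
pooled_intermittent = mean_pool(intermittent)
conv_long = temporal_conv_max(long_shot, kernel)
conv_intermittent = temporal_conv_max(intermittent, kernel)
```

Here both sequences pool to the same average, while the temporal filter responds more strongly to the sustained shot. A learned convolutional model would fit such kernels from data rather than fixing them by hand.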

Competitive Analysis System for Theatrical Movie Releases Based on Movie Trailer Deep Video Representation

Jul 12, 2018

Abstract: Audience discovery is an important activity at major movie studios. Deep models that use convolutional networks to extract frame-by-frame features of a movie trailer and represent it in a form that is suitable for prediction are now possible thanks to the availability of pre-built feature extractors trained on large image datasets. Using these pre-built feature extractors, we are able to process hundreds of publicly available movie trailers, extract frame-by-frame low-level features (e.g., a face, an object, etc.), and create video-level representations. We use the video-level representations to train a hybrid Collaborative Filtering model that combines video features with historical movie attendance records. The trained model not only makes accurate attendance and audience predictions for existing movies, but also successfully profiles new movies six to eight months prior to their release.
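As a rough illustration of the pipeline described above, the sketch below averages frame-level features into a video-level vector and scores it against a user vector. The abstract does not specify the model form, so everything here is an assumption: the function names, the linear projection, and the additive item embedding reflect one common way to build a hybrid collaborative filter, not necessarily the authors' design.

```python
def mean_pool_frames(frame_features):
    """Average frame-level feature vectors into one video-level vector."""
    count = len(frame_features)
    dim = len(frame_features[0])
    return [sum(frame[d] for frame in frame_features) / count for d in range(dim)]


def hybrid_score(user_vec, item_latent, video_vec, projection):
    """Score a user-movie pair.

    Assumed form: the item embedding is a latent vector learned from
    attendance records plus a linear projection of the video features.
    """
    projected = [sum(w * v for w, v in zip(row, video_vec)) for row in projection]
    item_vec = [a + b for a, b in zip(item_latent, projected)]
    return sum(u * i for u, i in zip(user_vec, item_vec))


# Hypothetical two-dimensional features for three trailer frames.
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
video_vec = mean_pool_frames(frames)

# A new release has no attendance history, so its latent part is zero and
# the score depends only on the trailer features.
new_movie_latent = [0.0, 0.0]
identity_projection = [[1.0, 0.0], [0.0, 1.0]]
user_vec = [1.0, 0.5]

score = hybrid_score(user_vec, new_movie_latent, video_vec, identity_projection)
```

Under this assumed form, a movie without attendance records can still be scored from its trailer alone, which is the property that would make pre-release profiling possible.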

Yum-me: A Personalized Nutrient-based Meal Recommender System

Apr 30, 2017

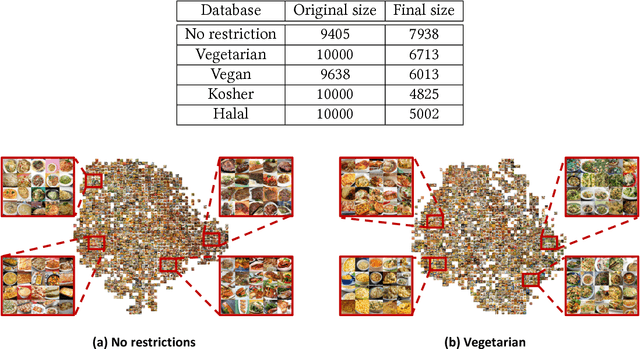

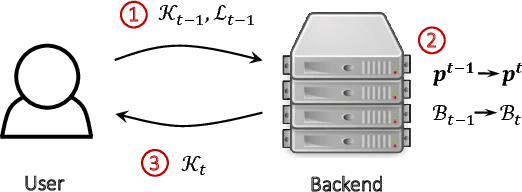

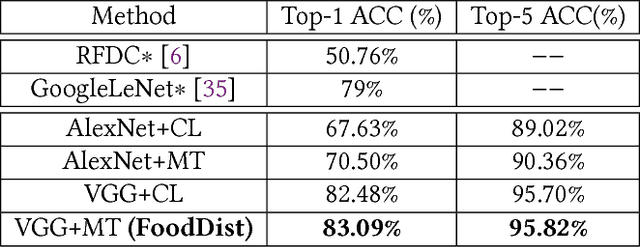

Abstract: Nutrient-based meal recommendations have the potential to help individuals prevent or manage conditions such as diabetes and obesity. However, learning people's food preferences and making recommendations that simultaneously appeal to their palate and satisfy nutritional expectations are challenging. Existing approaches either only learn high-level preferences or require a prolonged learning period. We propose Yum-me, a personalized nutrient-based meal recommender system designed to meet individuals' nutritional expectations, dietary restrictions, and fine-grained food preferences. Yum-me enables a simple and accurate food preference profiling procedure via a visual quiz-based user interface, and projects the learned profile into the domain of nutritionally appropriate food options to find ones that will appeal to the user. We present the design and implementation of Yum-me, and further describe and evaluate two innovative contributions. The first contribution is an open-source, state-of-the-art food image analysis model named FoodDist. We demonstrate FoodDist's superior performance through careful benchmarking and discuss its applicability across a wide array of dietary applications. The second contribution is a novel online learning framework that learns food preferences from item-wise and pairwise image comparisons. We evaluate the framework in a field study of 227 anonymous users and demonstrate that it outperforms other baselines by a significant margin. We further conducted an end-to-end validation of the feasibility and effectiveness of Yum-me through a 60-person user study, in which Yum-me improves the recommendation acceptance rate by 42.63%.
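To make the idea of learning from pairwise image comparisons concrete, here is a minimal sketch of one online update. It is not the framework from the paper: the logistic (Bradley-Terry style) update rule, the learning rate, and the two-dimensional feature vectors standing in for image embeddings are all assumptions chosen for illustration.

```python
import math


def update_preference(weights, preferred, rejected, learning_rate=0.1):
    """Take one gradient step after the user picks `preferred` over `rejected`.

    Assumed model: P(preferred beats rejected) = sigmoid(w . (preferred - rejected)).
    """
    diff = [p - r for p, r in zip(preferred, rejected)]
    margin = sum(w * d for w, d in zip(weights, diff))
    prob = 1.0 / (1.0 + math.exp(-margin))
    step = learning_rate * (1.0 - prob)
    return [w + step * d for w, d in zip(weights, diff)]


# Hypothetical embeddings for two food images shown side by side.
salad = [1.0, 0.0]
burger = [0.0, 1.0]

# Start with no preference, then record that the user chose the salad.
weights = update_preference([0.0, 0.0], preferred=salad, rejected=burger)

salad_score = sum(w * x for w, x in zip(weights, salad))
burger_score = sum(w * x for w, x in zip(weights, burger))
```

After a single comparison the profile already ranks the chosen item higher, which hints at why a short visual quiz might be enough to build a usable preference profile.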

Beyond Classification: Latent User Interests Profiling from Visual Contents Analysis

Dec 21, 2015

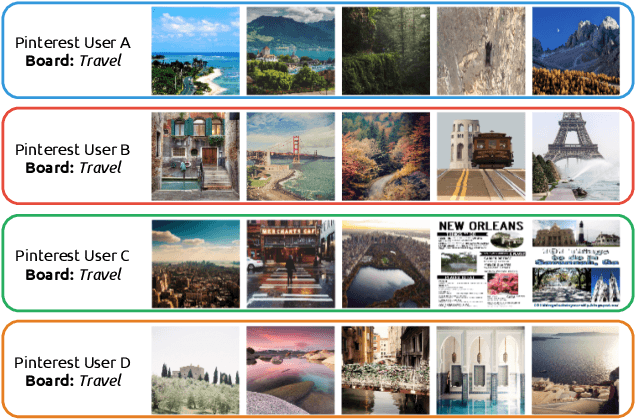

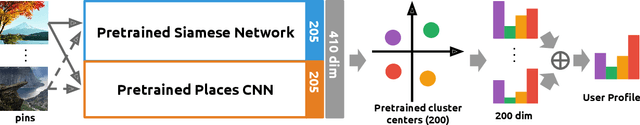

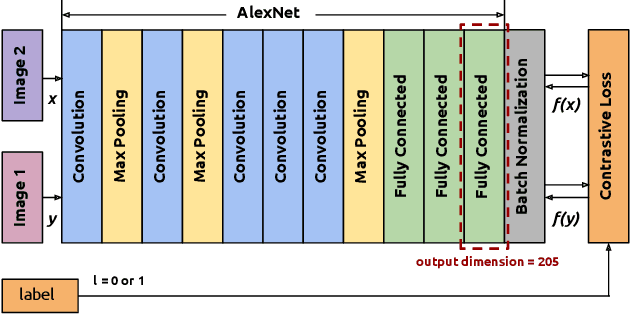

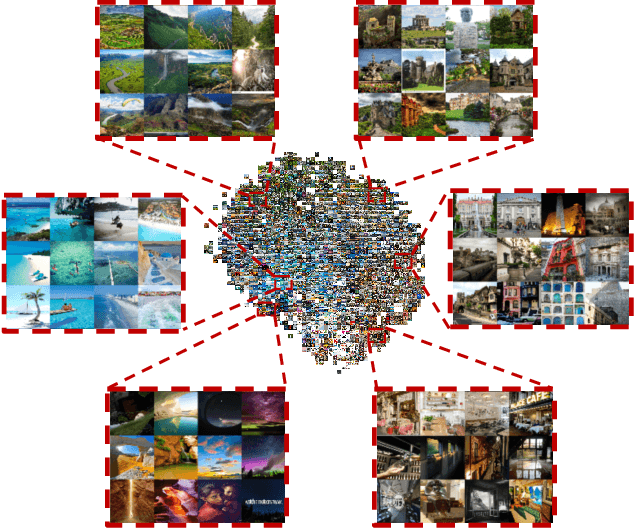

Abstract: User preference profiling is an important task in modern online social networks (OSN). With the proliferation of image-centric social platforms, such as Pinterest, visual contents have become one of the most informative data streams for understanding user preferences. Traditional approaches usually treat visual content analysis as a general classification problem where one or more labels are assigned to each image. Although such an approach simplifies the process of image analysis, it misses the rich context and visual cues that play an important role in people's perception of images. In this paper, we explore the possibilities of learning a user's latent visual preferences directly from image contents. We propose a distance metric learning method based on Deep Convolutional Neural Networks (CNN) to directly extract similarity information from visual contents and use the derived distance metric to mine individual users' fine-grained visual preferences. Through our preliminary experiments using data from 5,790 Pinterest users, we show that even for the images within the same category, each user possesses distinct and individually-identifiable visual preferences that are consistent over their lifetime. Our results underscore the untapped potential of finer-grained visual preference profiling in understanding users' preferences.
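Distance metric learning, as mentioned in the abstract, is often trained with an objective that pulls similar images together and pushes dissimilar ones apart. The abstract does not name the loss, so the triplet hinge loss below is an assumed stand-in, and the two-dimensional embeddings are hypothetical placeholders for CNN outputs.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge loss that is zero once the positive is closer than the
    negative by at least `margin`."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Hypothetical embeddings: the anchor and positive come from images the same
# user pinned; the negative comes from an unrelated image.
anchor = [0.0, 0.0]
positive = [0.0, 1.0]
negative = [3.0, 4.0]

satisfied = triplet_loss(anchor, positive, negative)
violated = triplet_loss(anchor, negative, positive)
```

In the first call the positive is already much closer than the negative, so the loss vanishes. Swapping the roles yields a positive loss, which during training would push the network to rearrange the embeddings.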
