Matt Nickens

Convolutional Collaborative Filter Network for Video Based Recommendation Systems

Oct 22, 2018

Abstract: This analysis explores the temporal sequencing of objects in a movie trailer. Temporal sequencing of objects in a movie trailer (e.g., a long shot of an object vs. intermittent short shots) can convey information about the type of movie, the plot of the movie, the role of the main characters, and the filmmakers' cinematographic choices. When combined with historical customer data, sequencing analysis can be used to improve predictions of customer behavior. For example, a customer who buys tickets to a new movie may have previously seen movies that contained similar sequences. To explore object sequencing in movie trailers, we propose a video convolutional network to capture actions and scenes that are predictive of customers' preferences. The model learns the specific nature of sequences for different types of objects (e.g., cars vs. faces), and the role of sequences in predicting customers' future behavior. We show how such a temporal-aware model outperforms the simple feature pooling methods proposed in our previous work and, importantly, demonstrate the additional model explainability allowed by such a model.
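The contrast between feature pooling and a temporal-aware model can be illustrated with a toy sketch. This is not the paper's network: the per-frame detection sequences, the fixed width-3 filter, and the function names below are all hypothetical, chosen only to show why averaging over frames cannot tell a long shot from intermittent short shots while a temporal filter can.

```python
def mean_pool(seq):
    """Average a per-frame signal over time; frame order is discarded."""
    return sum(seq) / len(seq)


def temporal_conv_max(seq, kernel):
    """Slide a 1D filter over time and keep the strongest response."""
    k = len(kernel)
    responses = [
        sum(w * x for w, x in zip(kernel, seq[i:i + k]))
        for i in range(len(seq) - k + 1)
    ]
    return max(responses)


# Hypothetical per-frame detections of one object (1 = present in the frame).
long_shot = [0, 1, 1, 1, 1, 0, 0, 0]       # one sustained shot
intermittent = [1, 0, 1, 0, 1, 0, 1, 0]    # repeated short shots

# Assumption: a fixed filter for illustration; a trained model would learn it.
kernel = [1.0, 1.0, 1.0]

print(mean_pool(long_shot), mean_pool(intermittent))
print(temporal_conv_max(long_shot, kernel), temporal_conv_max(intermittent, kernel))
```

Both sequences contain the object in half of their frames, so mean pooling gives them the same value, whereas the filter responds more strongly to the sustained shot.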

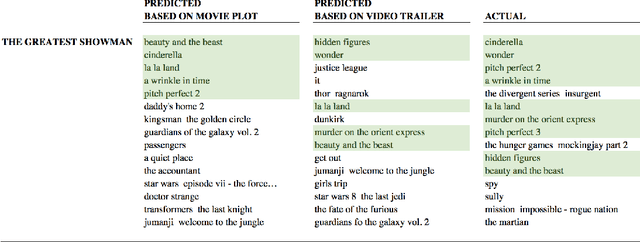

Competitive Analysis System for Theatrical Movie Releases Based on Movie Trailer Deep Video Representation

Jul 12, 2018

Abstract: Audience discovery is an important activity at major movie studios. Deep models that use convolutional networks to extract frame-by-frame features of a movie trailer and represent it in a form that is suitable for prediction are now possible thanks to the availability of pre-built feature extractors trained on large image datasets. Using these pre-built feature extractors, we are able to process hundreds of publicly available movie trailers, extract frame-by-frame low-level features (e.g., a face, an object, etc.) and create video-level representations. We use the video-level representations to train a hybrid Collaborative Filtering model that combines video features with historical movie attendance records. The trained model not only makes accurate attendance and audience predictions for existing movies, but also successfully profiles new movies six to eight months prior to their release.
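The pipeline described in this abstract can be sketched in a few lines, under strong simplifying assumptions. The abstract does not specify the pooling method or the model form, so the averaging step, the dot-product score, and all names and numbers below are hypothetical illustrations rather than the authors' implementation.

```python
def video_representation(frame_features):
    """Average frame-level feature vectors into one video-level vector."""
    n = len(frame_features)
    return [sum(column) / n for column in zip(*frame_features)]


def score(user_vector, video_vector):
    """Affinity between a user and a movie as a dot product."""
    return sum(u * v for u, v in zip(user_vector, video_vector))


# Hypothetical two-dimensional frame features, e.g. [face, car] detections.
frames = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
video = video_representation(frames)

# Assumption: user vectors would be learned from attendance records;
# here they are set by hand for illustration.
user_a = [2.0, 0.0]
user_b = [0.0, 2.0]

print(video)
print(score(user_a, video), score(user_b, video))
```

Because the movie vector comes from the trailer alone, a score of this kind can be computed for a movie that has no attendance history yet, which is what makes profiling before release possible.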
