Chenfei Xia

FTN: Foreground-Guided Texture-Focused Person Re-Identification

Sep 24, 2020

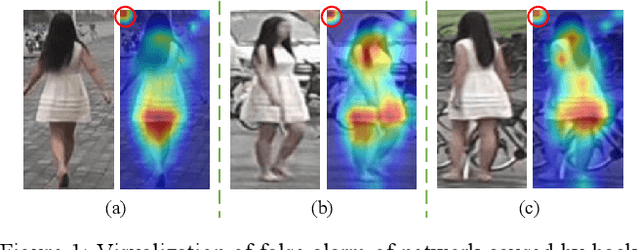

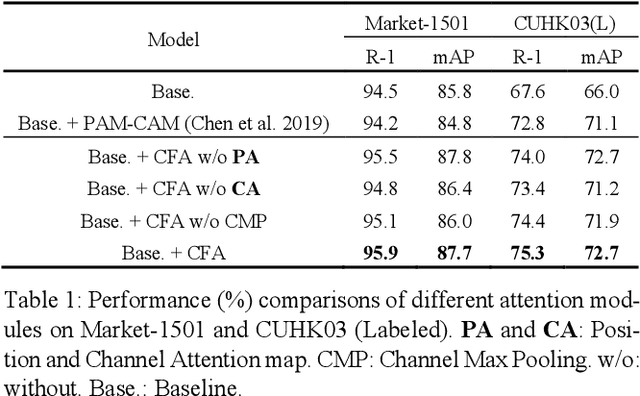

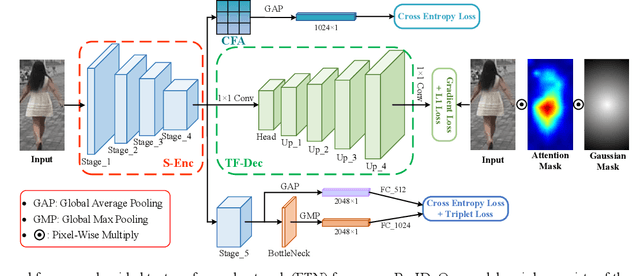

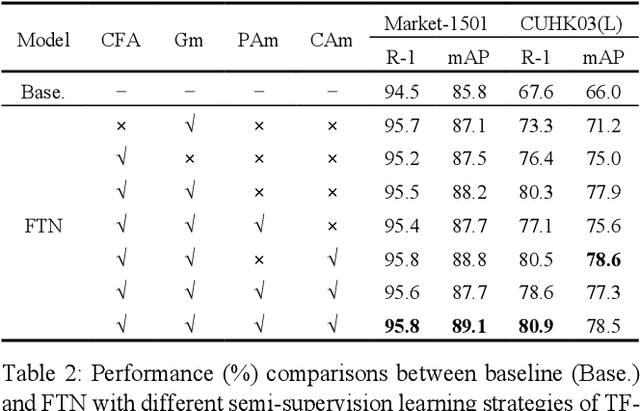

Abstract: Person re-identification (Re-ID) is a challenging task because persons often appear against different backgrounds. Most recent Re-ID methods treat foreground and background information equally when learning discriminative person features, which can easily lead to false alarms when different persons appear in similar backgrounds or the same person appears in different backgrounds. In this paper, we propose a Foreground-Guided Texture-Focused Network (FTN) for Re-ID, which weakens the representation of unrelated background and highlights person-related attributes in an end-to-end manner. FTN consists of a semantic encoder (S-Enc) and a compact foreground attention module (CFA) for the Re-ID task, and a texture-focused decoder (TF-Dec) for the reconstruction task. In particular, we build a foreground-guided semi-supervised learning strategy for TF-Dec, because the reconstruction ground truths are simply the inputs of FTN weighted by a Gaussian mask and the attention mask generated by CFA. Moreover, a new gradient loss is introduced to encourage the network to mine the texture consistency between the inputs and the reconstructed outputs. FTN is computationally efficient, and extensive experiments on three commonly used datasets (Market1501, CUHK03, and MSMT17) demonstrate that the proposed method performs favorably against state-of-the-art methods.
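To make the reconstruction target and the gradient loss more concrete, here is a minimal pure-Python sketch. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not give the exact formulas, so the sketch assumes that the two masks are combined with the input by element-wise product, that the Gaussian mask is centred on the image, and that the gradient loss is a mean absolute difference between forward finite differences of the output and the target. All function names and the `sigma_scale` parameter are hypothetical.

```python
import math


def gaussian_mask(height, width, sigma_scale=0.5):
    """2D Gaussian centred on the image with peak value 1.0.

    Assumption: the abstract only mentions "the Gaussian mask"; centring
    and the sigma_scale parameter are illustrative choices.
    """
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    sy, sx = sigma_scale * height, sigma_scale * width
    return [
        [math.exp(-(((y - cy) / sy) ** 2 + ((x - cx) / sx) ** 2) / 2.0)
         for x in range(width)]
        for y in range(height)
    ]


def masked_target(image, gauss, attention):
    """Reconstruction target: input weighted by both masks.

    Assumption: the weighting is an element-wise product.
    """
    return [
        [pixel * g * a for pixel, g, a in zip(img_row, g_row, a_row)]
        for img_row, g_row, a_row in zip(image, gauss, attention)
    ]


def finite_differences(image):
    """Forward differences along x (horizontal) and y (vertical)."""
    h, w = len(image), len(image[0])
    dx = [[image[y][x + 1] - image[y][x] for x in range(w - 1)] for y in range(h)]
    dy = [[image[y + 1][x] - image[y][x] for x in range(w)] for y in range(h - 1)]
    return dx, dy


def _mean_abs_diff(a, b):
    """Mean absolute difference between two equally shaped 2D lists."""
    total = sum(abs(p - q) for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))
    count = sum(len(row) for row in a)
    return total / count


def gradient_loss(output, target):
    """L1 distance between image gradients (assumed form of the loss).

    Requires images of at least 2x2 pixels so both difference maps
    are non-empty.
    """
    out_dx, out_dy = finite_differences(output)
    tgt_dx, tgt_dy = finite_differences(target)
    return _mean_abs_diff(out_dx, tgt_dx) + _mean_abs_diff(out_dy, tgt_dy)
```

A loss of this form depends only on local intensity changes, so a uniform brightness offset between output and target is not penalized. That is one plausible reading of "texture consistency", though the paper's actual definition may differ.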
