Chaoming Fang

A Compact Online-Learning Spiking Neuromorphic Biosignal Processor

Sep 26, 2022

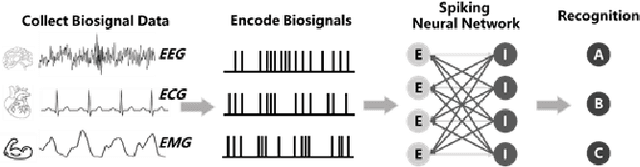

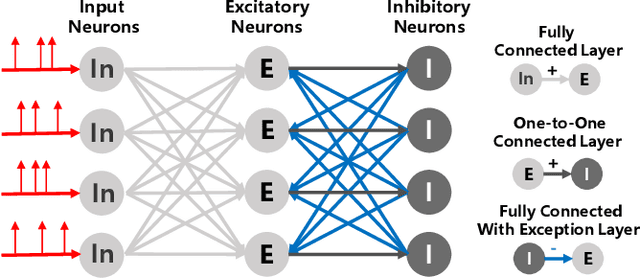

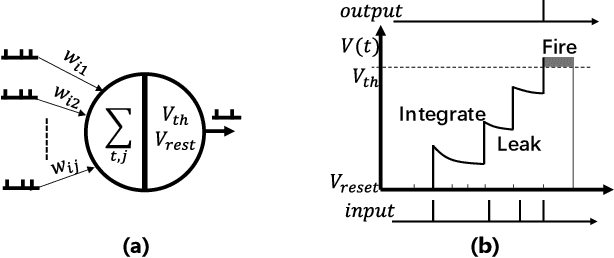

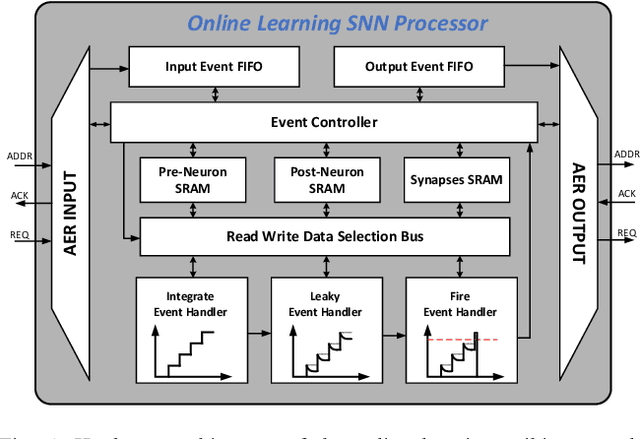

Abstract: Real-time biosignal processing on wearable devices has attracted worldwide attention for its potential in healthcare applications. However, the requirements of low area, low power, and high adaptability to different patients challenge conventional algorithms and hardware platforms. In this design, a compact online-learning neuromorphic hardware architecture with ultra-low power consumption, designed explicitly for biosignal processing, is proposed. A trace-based Spike-Timing-Dependent Plasticity (STDP) algorithm is applied to realize hardware-friendly online learning of a single-layer excitatory-inhibitory spiking neural network. Several techniques, including an event-driven architecture and a fully optimized iterative computation approach, are adopted to minimize the hardware utilization and power consumption of the online-learning implementation. Experimental results show that the proposed design reaches accuracies of 87.36% and 83% on the Modified National Institute of Standards and Technology database (MNIST) and ECG classification, respectively. The hardware architecture is implemented on a Zynq-7020 FPGA. Implementation results show that the Look-Up Table (LUT) and Flip-Flop (FF) utilization are reduced by 14.87 and 7.34 times, respectively, and the power consumption is reduced by 21.69% compared with the state of the art.
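As a rough illustration of what a trace-based STDP rule looks like, the following minimal Python sketch keeps one decaying trace per side of a synapse and updates the weight only when a spike occurs. It is not the paper's hardware implementation: the class name `TraceSTDPSynapse`, the decay factor, the learning rates, and the weight bounds are all assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class TraceSTDPSynapse:
    """Hypothetical single synapse with a generic trace-based STDP rule."""
    weight: float = 0.5
    pre_trace: float = 0.0    # decaying memory of recent presynaptic spikes
    post_trace: float = 0.0   # decaying memory of recent postsynaptic spikes
    decay: float = 0.9        # per-step trace decay factor (assumed value)
    a_plus: float = 0.05      # potentiation rate (assumed value)
    a_minus: float = 0.03     # depression rate (assumed value)
    w_min: float = 0.0
    w_max: float = 1.0

    def step(self, pre_spike: bool, post_spike: bool) -> float:
        # Both traces decay on every time step.
        self.pre_trace *= self.decay
        self.post_trace *= self.decay

        # Weight updates use the traces left by earlier spikes only.
        if post_spike:
            # Post fires after recent pre activity: potentiate.
            self.weight += self.a_plus * self.pre_trace
        if pre_spike:
            # Pre fires after recent post activity: depress.
            self.weight -= self.a_minus * self.post_trace

        # Record the current spikes in the traces.
        if pre_spike:
            self.pre_trace += 1.0
        if post_spike:
            self.post_trace += 1.0

        # Keep the weight inside its allowed range.
        self.weight = min(self.w_max, max(self.w_min, self.weight))
        return self.weight


# Pre spike followed by a post spike strengthens the synapse.
causal = TraceSTDPSynapse()
causal.step(pre_spike=True, post_spike=False)
w_causal = causal.step(pre_spike=False, post_spike=True)

# Post spike followed by a pre spike weakens it.
anti = TraceSTDPSynapse()
anti.step(pre_spike=False, post_spike=True)
w_anti = anti.step(pre_spike=True, post_spike=False)
```

Because the traces summarize spike history in a single value per neuron, no list of past spike times has to be stored, which is the usual reason this family of rules is considered hardware-friendly.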

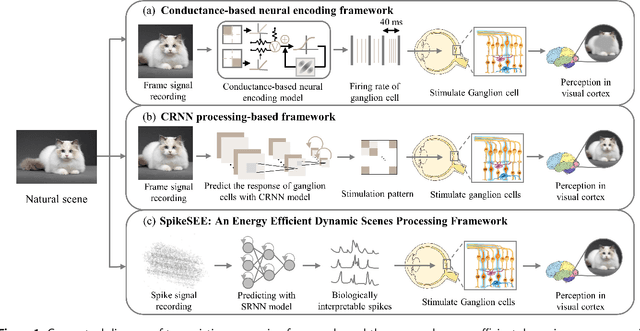

SpikeSEE: An Energy-Efficient Dynamic Scenes Processing Framework for Retinal Prostheses

Sep 16, 2022

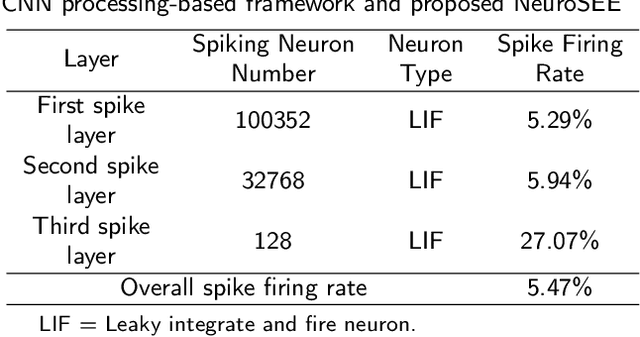

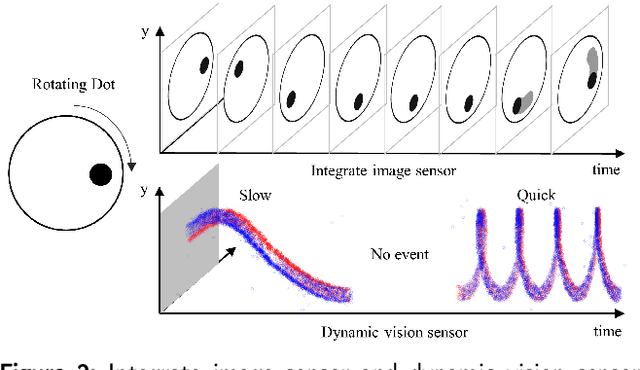

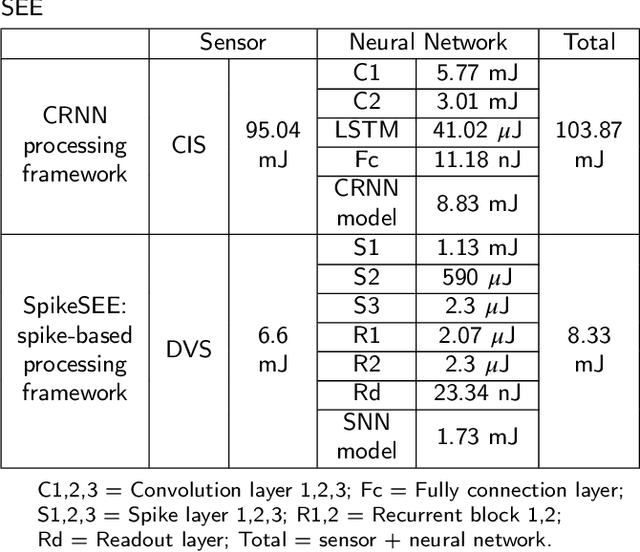

Abstract: Intelligent and low-power retinal prostheses are in high demand in this era, in which wearable and implantable devices are used for numerous healthcare applications. In this paper, we propose an energy-efficient dynamic scenes processing framework (SpikeSEE) that combines a spike representation encoding technique and a bio-inspired spiking recurrent neural network (SRNN) model to achieve intelligent processing and extremely low-power computation for retinal prostheses. The spike representation encoding technique interprets dynamic scenes as sparse spike trains, decreasing the data volume. The SRNN model, inspired by the special structure and spike processing method of the human retina, is adopted to predict the response of ganglion cells to dynamic scenes. Experimental results show that the proposed SRNN model achieves a Pearson correlation coefficient of 0.93, which outperforms the state-of-the-art processing framework for retinal prostheses. Thanks to the spike representation and SRNN processing, the model can extract visual features in a multiplication-free fashion. The framework achieves a 12-fold power reduction compared with the convolutional recurrent neural network (CRNN) processing-based framework. The proposed SpikeSEE predicts the response of ganglion cells more accurately and with lower energy consumption, which alleviates the precision and power issues of retinal prostheses and provides a potential solution for wearable or implantable prostheses.
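The "multiplication-free" claim follows from a general property of binary spike inputs: when every input is either 0 or 1, a weighted sum reduces to adding up the weights of the inputs that spiked. The Python sketch below illustrates that property only; the function names and the toy weights are hypothetical and are not taken from SpikeSEE.

```python
def accumulate_spikes(weights, spikes):
    """Weighted sum for binary spikes using additions only.

    weights: list of rows, one row of input weights per output neuron.
    spikes:  list of 0/1 values, one per input.
    """
    # Indices of the inputs that spiked on this time step.
    active = [i for i, s in enumerate(spikes) if s]
    # Each output simply adds the weights of the active inputs.
    return [sum(row[i] for i in active) for row in weights]


def dense_dot(weights, values):
    """Reference weighted sum with one multiplication per weight."""
    return [sum(w * v for w, v in zip(row, values)) for row in weights]


toy_weights = [[1, 2, 3],
               [4, 5, 6]]
toy_spikes = [1, 0, 1]

spike_result = accumulate_spikes(toy_weights, toy_spikes)
dense_result = dense_dot(toy_weights, toy_spikes)
```

Both functions return the same values for binary inputs, and the sparser the spike train, the fewer additions `accumulate_spikes` performs.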
