Carola Figueroa-Flores

Saliency for free: Saliency prediction as a side-effect of object recognition

Jul 20, 2021

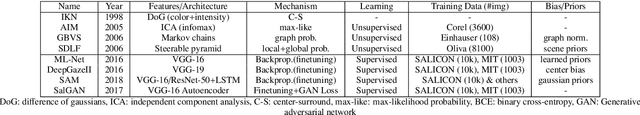

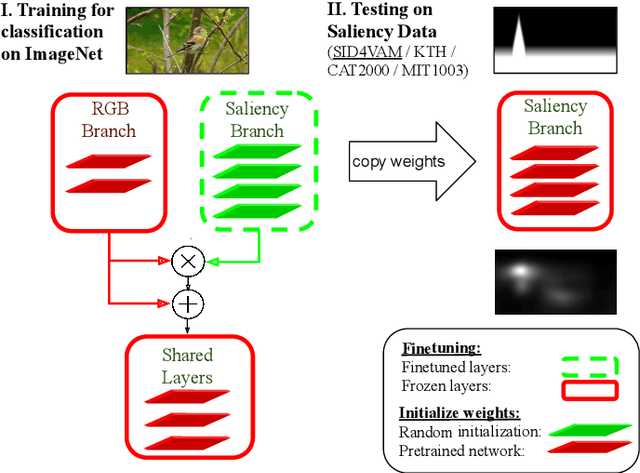

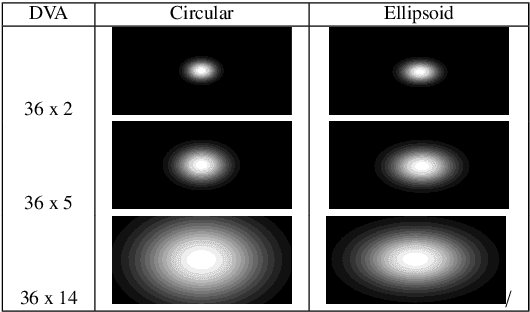

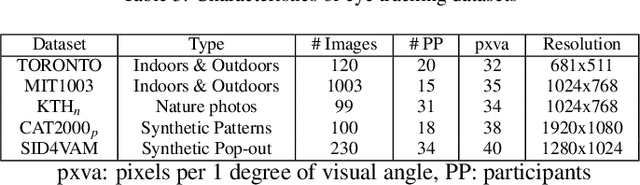

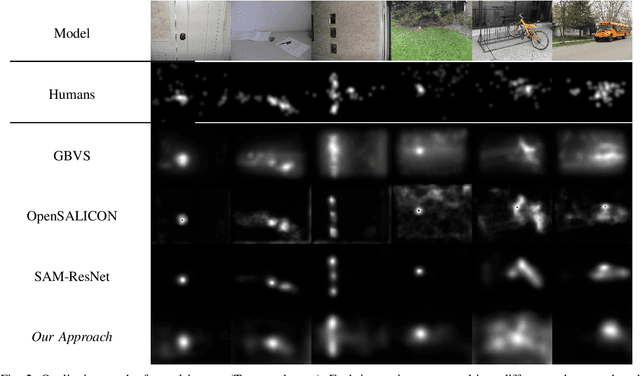

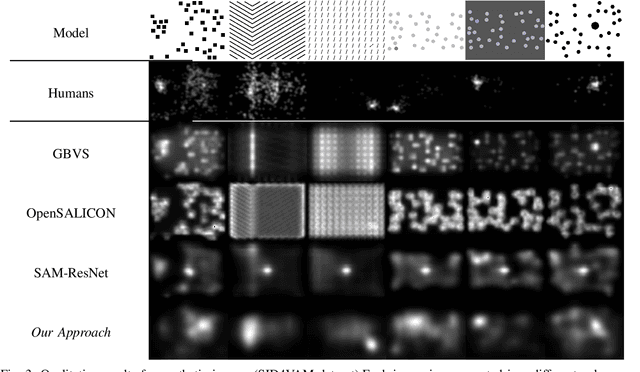

Abstract: Saliency is the perceptual capacity of our visual system to focus our attention (i.e. gaze) on relevant objects. Neural networks for saliency estimation require ground-truth saliency maps for training, which are usually obtained via eye-tracking experiments. In the current paper, we demonstrate that saliency maps can be generated as a side-effect of training an object recognition deep neural network that is endowed with a saliency branch. Such a network does not require any ground-truth saliency maps for training. Extensive experiments carried out on both real and synthetic saliency datasets demonstrate that our approach generates accurate saliency maps, achieving results competitive with methods that do require ground-truth data.

* Paper published in Pattern Recognition Letters

Hallucinating Saliency Maps for Fine-Grained Image Classification for Limited Data Domains

Jul 24, 2020

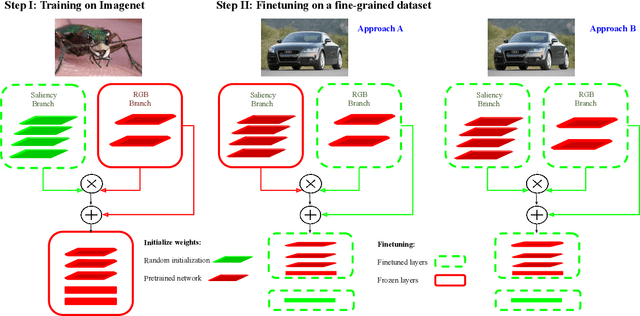

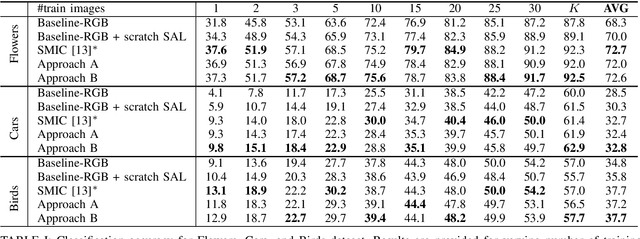

Abstract: Most saliency methods are evaluated on their ability to generate saliency maps, and not on their functionality in a complete vision pipeline such as image classification. In the current paper, we propose an approach that does not require explicit saliency maps to improve image classification; instead, the maps are learned implicitly during the training of an end-to-end image classification task. We show that our approach obtains results similar to those obtained when the saliency maps are provided explicitly. Combining RGB data with saliency maps represents a significant advantage for object recognition, especially when training data is limited. We validate our method on several datasets for fine-grained classification tasks (Flowers, Birds and Cars). In addition, we show that our saliency estimation method, which is trained without any saliency ground-truth data, obtains competitive results on a real-image saliency benchmark (Toronto) and outperforms deep saliency models on synthetic images (SID4VAM).
