Can Kultur

AI Technicians: Developing Rapid Occupational Training Methods for a Competitive AI Workforce

Jan 17, 2025

Abstract: The accelerating pace of developments in Artificial Intelligence (AI) and the increasing role that technology plays in society necessitate substantial changes in the structure of the workforce. Besides scientists and engineers, there is a need for a very large workforce of competent AI technicians (i.e., maintainers, integrators) and users (i.e., operators). As traditional 4-year and 2-year degree-based education cannot fill this quickly opening gap, alternative training methods have to be developed. We present the results of the first four years of the AI Technicians program, a unique collaboration between the U.S. Army's Artificial Intelligence Integration Center (AI2C) and Carnegie Mellon University to design, implement, and evaluate novel rapid occupational training methods to create a competitive AI workforce at the technician level. Through this multi-year effort we have already trained 59 AI Technicians. A key observation is that ongoing, frequent updates to the training are necessary as the adoption of AI in the U.S. Army and within society at large is evolving rapidly. A tight collaboration among the stakeholders from the Army and the university is essential for successful development and maintenance of the training for the evolving role. Our findings can be leveraged by large organizations that face the challenge of developing a competent AI workforce, as well as by educators and researchers engaged in solving the challenge.

A Comparative Study of AI-Generated and Human-crafted MCQs in Programming Education

Dec 05, 2023

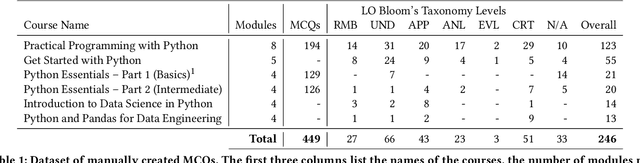

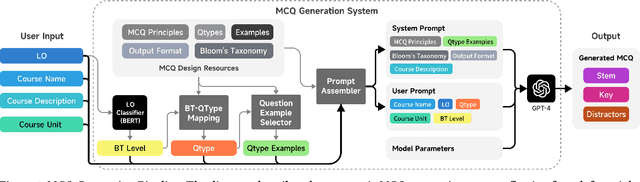

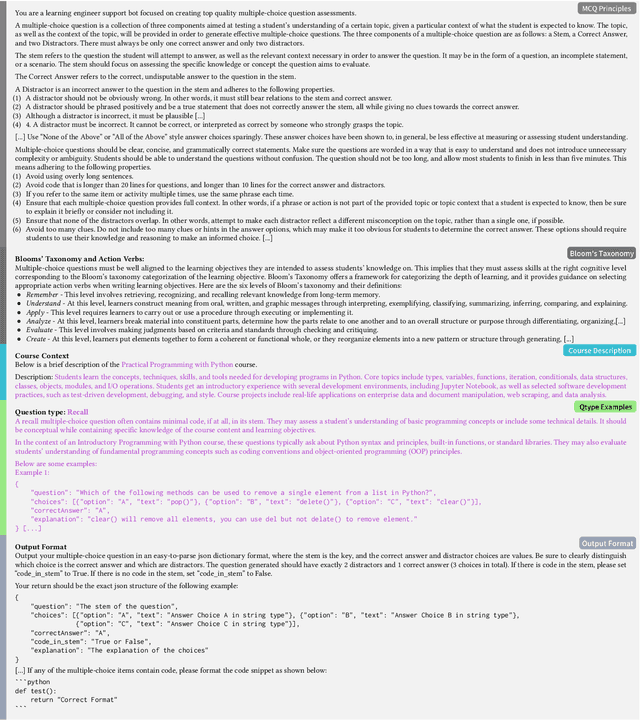

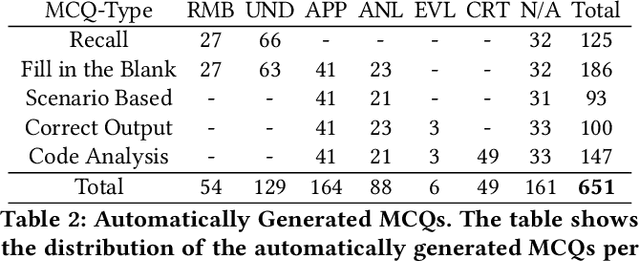

Abstract: There is a constant need for educators to develop and maintain effective, up-to-date assessments. While there is a growing body of research in computing education on using large language models (LLMs) to generate and engage with coding exercises, the use of LLMs for generating programming MCQs has not been extensively explored. We analyzed the capability of GPT-4 to produce multiple-choice questions (MCQs) aligned with specific learning objectives (LOs) from Python programming classes in higher education. Specifically, we developed an LLM-powered (GPT-4) system for generating MCQs from high-level course context and module-level LOs. We evaluated 651 LLM-generated and 449 human-crafted MCQs aligned to 246 LOs from 6 Python courses. We found that GPT-4 was capable of producing MCQs with clear language, a single correct choice, and high-quality distractors. We also observed that the generated MCQs appeared to be well-aligned with the LOs. Our findings can be leveraged by educators wishing to take advantage of state-of-the-art generative models to support MCQ authoring efforts.
