Can Kanbak

Geometric robustness of deep networks: analysis and improvement

Nov 24, 2017

Abstract: Deep convolutional neural networks have been shown to be vulnerable to arbitrary geometric transformations. However, there is no systematic method to measure the invariance properties of deep networks to such transformations. We propose ManiFool, a simple yet scalable algorithm for measuring the invariance of deep networks. In particular, our algorithm measures the robustness of deep networks to geometric transformations in a worst-case regime, since worst-case failures can be problematic in sensitive applications. Our extensive experimental results show that ManiFool can measure the invariance of fairly complex networks on high-dimensional datasets, and that these measurements can be used to analyze what determines a network's invariance. Furthermore, we build on ManiFool to propose a new adversarial training scheme, and we show that it is effective in improving the invariance properties of deep neural networks.
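To make the idea of a worst-case geometric invariance score concrete, here is a brute-force sketch. It is not ManiFool: the abstract does not describe ManiFool's steps, so this example swaps in a plain grid search over a single transformation parameter (planar rotation). Inputs are treated as small sets of 2-D points, and `rotate`, `toy_classifier`, and `min_fooling_rotation` are hypothetical names chosen for illustration. The score for an input is the smallest rotation angle that changes the classifier's label, so a smaller value means less invariance.

```python
import numpy as np

def rotate(points, theta_deg):
    """Rotate an (n, 2) array of points counter-clockwise by theta_deg degrees."""
    t = np.deg2rad(theta_deg)
    rot = np.array([[np.cos(t), -np.sin(t)],
                    [np.sin(t),  np.cos(t)]])
    return points @ rot.T

def toy_classifier(points):
    """Hypothetical stand-in for a network: label 1 if the mean x-coordinate is positive."""
    return int(points[:, 0].mean() > 0)

def min_fooling_rotation(classify, x, step=0.5, max_deg=180.0):
    """Smallest |theta| on a grid of `step` degrees whose rotation of x changes
    the label assigned by `classify`; None if no angle up to max_deg does."""
    original = classify(x)
    for k in range(1, int(round(max_deg / step)) + 1):
        for sign in (1.0, -1.0):  # try both rotation directions at each magnitude
            theta = sign * k * step
            if classify(rotate(x, theta)) != original:
                return abs(theta)
    return None

if __name__ == "__main__":
    far = np.array([[1.0, 0.0], [2.0, 0.0]])   # points far from the decision boundary
    near = np.array([[1.0, 1.0]])              # a point closer to the boundary
    print("far from boundary:", min_fooling_rotation(toy_classifier, far))
    print("near boundary:", min_fooling_rotation(toy_classifier, near))
```

For the point set `[[1, 0], [2, 0]]` the label flips only once the rotation passes 90°, while `[[1, 1]]` flips just past 45°, so the second input is less robust under this toy classifier. The cost of a grid search grows exponentially with the number of transformation parameters, so this approach only serves as an illustration for one-parameter families.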

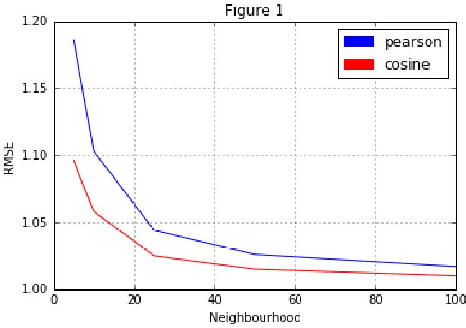

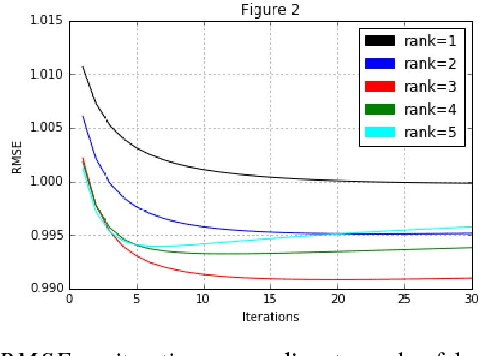

Performance Comparison of Algorithms for Movie Rating Estimation

Nov 05, 2017

Abstract: In this paper, our goal is to compare the performance of three different algorithms for predicting the ratings that potential users will give to movies, given a user-movie rating matrix built from past observations. To this end, we evaluate User-Based Collaborative Filtering, Iterative Matrix Factorization, and Yehuda Koren's integrated model, which combines neighborhood and factorization approaches, using root mean square error (RMSE) as the performance metric. In short, we do not observe significant differences in performance, especially when the added complexity of the more elaborate models is taken into account. We conclude that Iterative Matrix Factorization performs fairly well despite its simplicity.
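The abstract does not specify the update rule behind Iterative Matrix Factorization, so the following is a minimal sketch under an assumption: stochastic gradient descent on regularized squared error over the observed (user, item, rating) triples, evaluated with RMSE, i.e. the square root of the mean squared difference between predicted and observed ratings. The names `factorize`, `predict`, and `rmse`, the toy ratings, and the hyperparameters (`k`, `lr`, `reg`, `epochs`) are hypothetical.

```python
import numpy as np

def rmse(pred, actual):
    """Root mean square error between predicted and observed ratings."""
    pred = np.asarray(pred, dtype=float)
    actual = np.asarray(actual, dtype=float)
    return float(np.sqrt(np.mean((pred - actual) ** 2)))

def factorize(ratings, k=2, lr=0.05, reg=0.02, epochs=500, seed=0):
    """Fit user factors P and item factors Q so that P[u] @ Q[i] approximates
    each observed rating r, using SGD on squared error plus L2 regularization.
    `ratings` is a list of (user, item, rating) triples with 0-based indices."""
    rng = np.random.default_rng(seed)
    n_users = 1 + max(u for u, _, _ in ratings)
    n_items = 1 + max(i for _, i, _ in ratings)
    P = 0.1 * rng.standard_normal((n_users, k))
    Q = 0.1 * rng.standard_normal((n_items, k))
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - P[u] @ Q[i]
            p_old = P[u].copy()  # use the pre-update user vector for the item step
            P[u] += lr * (err * Q[i] - reg * P[u])
            Q[i] += lr * (err * p_old - reg * Q[i])
    return P, Q

def predict(P, Q, u, i):
    """Predicted rating of item i by user u."""
    return float(P[u] @ Q[i])

if __name__ == "__main__":
    # Toy observed entries of a 3x3 user-movie rating matrix (hypothetical data).
    train = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0),
             (1, 2, 1.0), (2, 1, 2.0), (2, 2, 5.0)]
    P, Q = factorize(train)
    preds = [predict(P, Q, u, i) for u, i, _ in train]
    print("training RMSE:", rmse(preds, [r for _, _, r in train]))
    print("predicted rating of movie 2 by user 0:", predict(P, Q, 0, 2))
```

Predicting an unobserved pair, such as `predict(P, Q, 0, 2)`, fills in a missing entry of the rating matrix. In a real comparison the RMSE would be computed on held-out ratings rather than on the training triples shown here.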