Cameron Mehlman

I Can Hear You Coming: RF Sensing for Uncooperative Satellite Evasion

Apr 04, 2025

Abstract: Uncooperative satellite engagements with nation-state actors prompt the need for enhanced maneuverability and agility on-orbit. However, robust, autonomous, and rapid adversary avoidance capabilities for the space environment are seldom studied. Further, the capability-constrained nature of many space vehicles does not afford robust space situational awareness capabilities that can inform maneuvers. We present a "Cat & Mouse" system for training optimal adversary avoidance algorithms using Reinforcement Learning (RL). We propose the novel approach of utilizing intercepted radio frequency (RF) communication and dynamic spacecraft state as multi-modal input that could inform paths for a mouse to outmaneuver the cat satellite. Given the current ubiquitous use of RF communications, our proposed system can be applied to a diverse array of satellites. In addition to providing a comprehensive framework for an RL architecture capable of training performant and adaptive adversary avoidance policies, we also explore several optimization-based methods for adversarial avoidance on real-world data obtained from the Space Surveillance Network (SSN) to analyze the benefits and limitations of different avoidance methods.

Cat-and-Mouse Satellite Dynamics: Divergent Adversarial Reinforcement Learning for Contested Multi-Agent Space Operations

Sep 26, 2024

Abstract: As space becomes increasingly crowded and contested, robust autonomous capabilities for multi-agent environments are gaining critical importance. Current autonomous systems in space primarily rely on optimization-based path planning or long-range orbital maneuvers, which have not yet proven effective in adversarial scenarios where one satellite is actively pursuing another. We introduce Divergent Adversarial Reinforcement Learning (DARL), a two-stage Multi-Agent Reinforcement Learning (MARL) approach designed to train autonomous evasion strategies for satellites engaged with multiple adversarial spacecraft. Our method enhances exploration during training by promoting diverse adversarial strategies, leading to more robust and adaptable evader models. We validate DARL through a cat-and-mouse satellite scenario, modeled as a partially observable multi-agent capture-the-flag game where two adversarial 'cat' spacecraft pursue a single 'mouse' evader. DARL's performance is compared against several benchmarks, including an optimization-based satellite path planner, demonstrating its ability to produce highly robust models for adversarial multi-agent space environments.

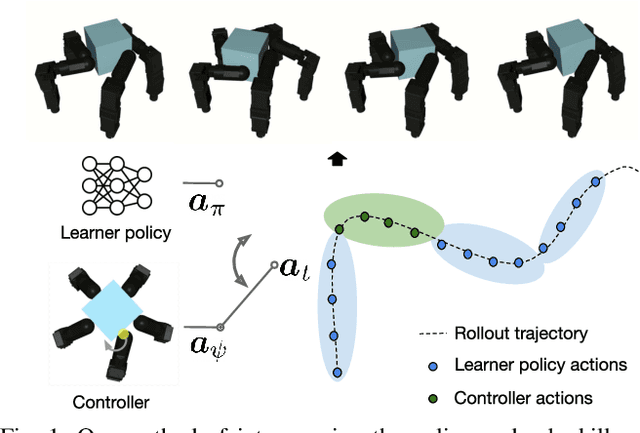

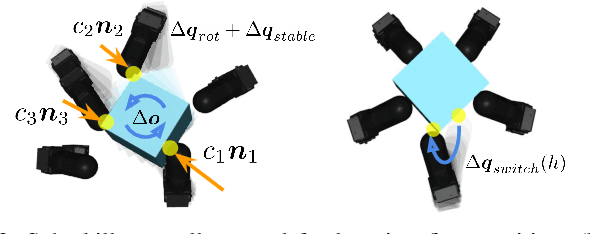

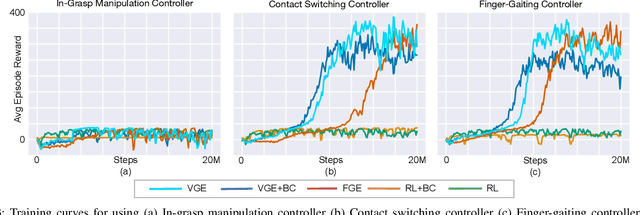

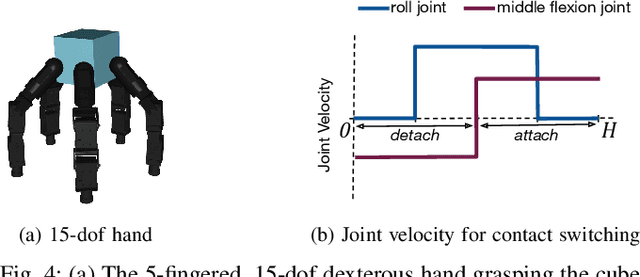

Value Guided Exploration with Sub-optimal Controllers for Learning Dexterous Manipulation

Mar 06, 2023

Abstract: Recently, reinforcement learning has enabled dexterous manipulation skills of increasing complexity. Nonetheless, learning these skills in simulation still exhibits poor sample efficiency, which stems from the fact that these skills are learned from scratch without the benefit of any domain expertise. In this work, we aim to improve the sample efficiency of learning dexterous in-hand manipulation skills using sub-optimal controllers available via domain knowledge. Our framework optimally queries the sub-optimal controllers and guides exploration toward the state space relevant to the task, thereby demonstrating improved sample complexity. We show that our framework allows learning from highly sub-optimal controllers, and we are the first to demonstrate learning hard-to-explore finger-gaiting in-hand manipulation skills without the use of an exploratory reset distribution.
