Cai Meng

Zero-Shot Multi-modal Large Language Model v.s. Supervised Deep Learning: A Comparative Study on CT-Based Intracranial Hemorrhage Subtyping

May 14, 2025

Abstract:Introduction: Timely identification of intracranial hemorrhage (ICH) subtypes on non-contrast computed tomography (NCCT) is critical for prognosis prediction and therapeutic decision-making, yet remains challenging due to low contrast and blurred boundaries. This study evaluates the performance of zero-shot multi-modal large language models (MLLMs) compared to traditional deep learning methods in ICH binary classification and subtyping. Methods: We utilized a dataset provided by RSNA, comprising 192 NCCT volumes. The study compares various MLLMs, including GPT-4o, Gemini 2.0 Flash, and Claude 3.5 Sonnet V2, with conventional deep learning models, including ResNet50 and Vision Transformer. Carefully crafted prompts were used to guide MLLMs in tasks such as ICH presence, subtype classification, localization, and volume estimation. Results: The results indicate that in the ICH binary classification task, traditional deep learning models outperform MLLMs comprehensively. For subtype classification, MLLMs also exhibit inferior performance compared to traditional deep learning models, with Gemini 2.0 Flash achieving a macro-averaged precision of 0.41 and a macro-averaged F1 score of 0.31. Conclusion: While MLLMs excel in interactive capabilities, their overall accuracy in ICH subtyping is inferior to deep networks. However, MLLMs enhance interpretability through language interactions, indicating potential in medical imaging analysis. Future efforts will focus on model refinement and developing more precise MLLMs to improve performance in three-dimensional medical image processing.
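For readers unfamiliar with the macro-averaged metrics quoted in this abstract, the following is a minimal sketch of how they are usually computed: precision and F1 are calculated per class and then averaged with equal weight per class. The function name `macro_precision_f1` and the toy subtype labels are illustrative assumptions, not the study's code or data.

```python
from typing import List, Tuple


def macro_precision_f1(y_true: List[str], y_pred: List[str]) -> Tuple[float, float]:
    """Return (macro precision, macro F1) over all labels seen in either list."""
    labels = sorted(set(y_true) | set(y_pred))
    precisions, f1_scores = [], []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        # Convention assumed here: an undefined ratio (zero denominator) counts as 0.
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        f1_scores.append(f1)
    # Macro averaging: every class contributes equally, regardless of its frequency.
    return sum(precisions) / len(labels), sum(f1_scores) / len(labels)


# Toy labels (hypothetical, not taken from the study).
truth = ["epidural", "subdural", "subarachnoid", "subdural"]
preds = ["epidural", "subdural", "subdural", "subdural"]
macro_p, macro_f1 = macro_precision_f1(truth, preds)
```

Because each class is weighted equally, a subtype that is never predicted correctly pulls the macro average down sharply even when it is rare, which is one reason macro scores are often reported for imbalanced subtype tasks.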

GS2Pose: Two-stage 6D Object Pose Estimation Guided by Gaussian Splatting

Nov 07, 2024

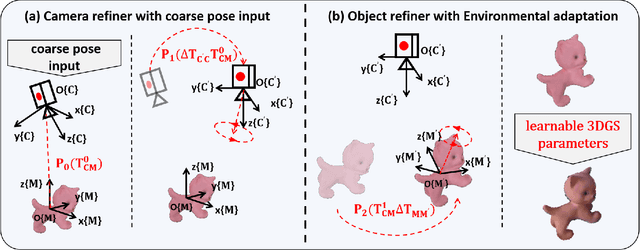

Abstract:This paper proposes a new method for accurate and robust 6D pose estimation of novel objects, named GS2Pose. By introducing 3D Gaussian splatting, GS2Pose can utilize the reconstruction results without requiring a high-quality CAD model, which means it only requires segmented RGBD images as input. Specifically, GS2Pose employs a two-stage structure consisting of coarse estimation followed by refined estimation. In the coarse stage, a lightweight U-Net network with a polarization attention mechanism, called Pose-Net, is designed. By using the 3DGS model for supervised training, Pose-Net can generate NOCS images to compute a coarse pose. In the refinement stage, GS2Pose formulates a pose regression algorithm following the idea of reprojection or Bundle Adjustment (BA), referred to as GS-Refiner. By leveraging Lie algebra to extend 3DGS, GS-Refiner obtains a pose-differentiable rendering pipeline that refines the coarse pose by comparing the input images with the rendered images. GS-Refiner also selectively updates parameters in the 3DGS model to achieve environmental adaptation, thereby enhancing the algorithm's robustness and flexibility to illuminative variation, occlusion, and other challenging disruptive factors. GS2Pose was evaluated through experiments conducted on the LineMod dataset, where it was compared with similar algorithms, yielding highly competitive results. The code for GS2Pose will soon be released on GitHub.
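As background for the Lie-algebra pose parameterization mentioned in this abstract, the sketch below shows the standard so(3) exponential map (Rodrigues' formula), which turns an unconstrained 3-vector into a valid rotation matrix so that a rotation can be optimized by gradient steps. It covers only the rotation part of a pose and is a generic illustration; the function name `so3_exp` is an assumption, and this is not the GS-Refiner implementation.

```python
import math
from typing import List

Matrix = List[List[float]]


def so3_exp(omega: List[float]) -> Matrix:
    """Map a rotation vector (axis * angle, in radians) to a 3x3 rotation matrix."""
    wx, wy, wz = omega
    theta = math.sqrt(wx * wx + wy * wy + wz * wz)
    # Skew-symmetric matrix K such that K @ v equals the cross product omega x v.
    K = [[0.0, -wz, wy],
         [wz, 0.0, -wx],
         [-wy, wx, 0.0]]
    if theta < 1e-8:
        # Small-angle limits of sin(theta)/theta and (1 - cos(theta))/theta^2.
        a, b = 1.0, 0.5
    else:
        a = math.sin(theta) / theta
        b = (1.0 - math.cos(theta)) / (theta * theta)
    K2 = [[sum(K[i][k] * K[k][j] for k in range(3)) for j in range(3)]
          for i in range(3)]
    # Rodrigues' formula: R = I + a * K + b * K^2.
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j]
             for j in range(3)]
            for i in range(3)]


# A quarter turn about the z-axis maps the x-axis onto the y-axis.
R = so3_exp([0.0, 0.0, math.pi / 2])
```

Optimizing the 3-vector instead of the nine matrix entries keeps every iterate a valid rotation without an explicit orthogonality constraint.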

MambaMorph: a Mamba-based Backbone with Contrastive Feature Learning for Deformable MR-CT Registration

Jan 25, 2024

Abstract:Deformable image registration is an essential approach for medical image analysis. This paper introduces MambaMorph, an innovative multi-modality deformable registration network, specifically designed for Magnetic Resonance (MR) and Computed Tomography (CT) image alignment. MambaMorph stands out with its Mamba-based registration module and a contrastive feature learning approach, addressing the prevalent challenges in multi-modality registration. The network leverages Mamba blocks for efficient long-range modeling and high-dimensional data processing, coupled with a feature extractor that learns fine-grained features for enhanced registration accuracy. Experimental results showcase MambaMorph's superior performance over existing methods in MR-CT registration, underlining its potential in clinical applications. This work underscores the significance of feature learning in multi-modality registration and positions MambaMorph as a trailblazing solution in this field. The code for MambaMorph is available at: https://github.com/Guo-Stone/MambaMorph.

SAMIHS: Adaptation of Segment Anything Model for Intracranial Hemorrhage Segmentation

Nov 14, 2023

Abstract:Segment Anything Model (SAM), a vision foundation model trained on large-scale annotations, has recently attracted growing attention in medical image segmentation. Despite its impressive capabilities on natural scenes, SAM suffers a performance decline when confronted with medical images, especially those involving blurry boundaries and highly irregular regions of low contrast. In this paper, a SAM-based parameter-efficient fine-tuning method, called SAMIHS, is proposed for intracranial hemorrhage segmentation, which is a crucial and challenging step in stroke diagnosis and surgical planning. Distinguished from previous SAM and SAM-based methods, SAMIHS incorporates parameter-refactoring adapters into SAM's image encoder and considers the efficient and flexible utilization of adapters' parameters. Additionally, we employ a combo loss that combines binary cross-entropy loss and boundary-sensitive loss to enhance SAMIHS's ability to recognize the boundary regions. Our experimental results on two public datasets demonstrate the effectiveness of our proposed method. Code is available at https://github.com/mileswyn/SAMIHS.
