C. C. Jay Kuo

Evaluation of Multimodal Semantic Segmentation using RGB-D Data

Mar 31, 2021

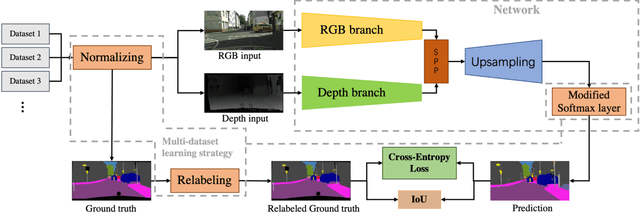

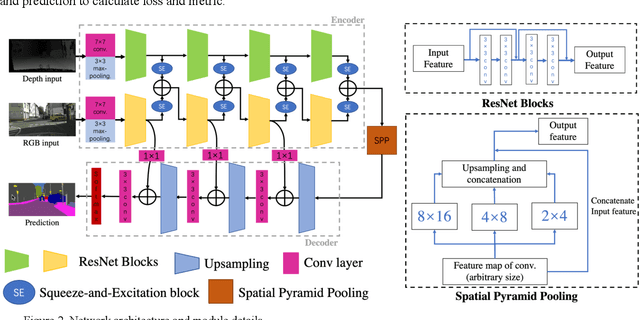

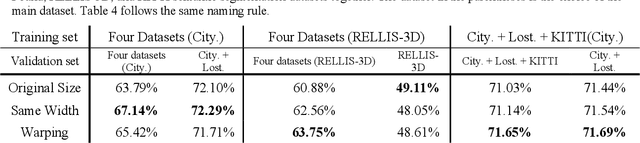

Abstract: Our goal is to develop stable, accurate, and robust semantic scene understanding methods for wide-area scene perception, especially in challenging outdoor environments. To this end, we are exploring and evaluating a range of related technologies and solutions, including AI-driven multimodal scene perception, fusion, processing, and understanding. This work reports our evaluation of a state-of-the-art approach to semantic segmentation with RGB and depth sensing data. We employ four large datasets composed of diverse urban and terrain scenes and design various experimental methods and metrics. We also develop new multi-dataset learning strategies to improve the detection and recognition of unseen objects. Extensive experiments, implementations, and results are reported in the paper.
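The abstract does not name the metrics used. As an illustration only, a common choice for semantic segmentation is mean intersection-over-union (mIoU). The sketch below assumes flattened integer label maps; the function name `mean_iou` and the convention that out-of-range ground-truth labels are ignored are assumptions made for this example, not details taken from the paper.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over classes that occur in the prediction or the ground truth.

    pred, target: equal-length sequences of integer class labels (flattened maps).
    Ground-truth labels outside [0, num_classes) are treated as "ignore" pixels
    (an assumption for this sketch). Predictions are assumed to be in range.
    """
    inter = [0] * num_classes  # per-class intersection counts
    union = [0] * num_classes  # per-class union counts
    for p, t in zip(pred, target):
        if not 0 <= t < num_classes:
            continue  # skip ignored pixels
        if p == t:
            inter[t] += 1
            union[t] += 1
        else:
            # A mismatch adds one pixel to the union of both classes involved.
            union[p] += 1
            union[t] += 1
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0


# Toy example: four pixels, two classes.
print(mean_iou([0, 1, 1, 1], [0, 0, 1, 1], num_classes=2))
```

Classes that appear in neither the prediction nor the ground truth are left out of the average, so they do not pull the score toward zero.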

MCL-3D: a database for stereoscopic image quality assessment using 2D-image-plus-depth source

Mar 23, 2014

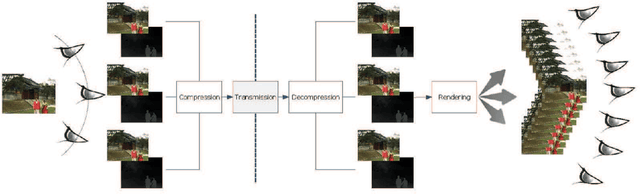

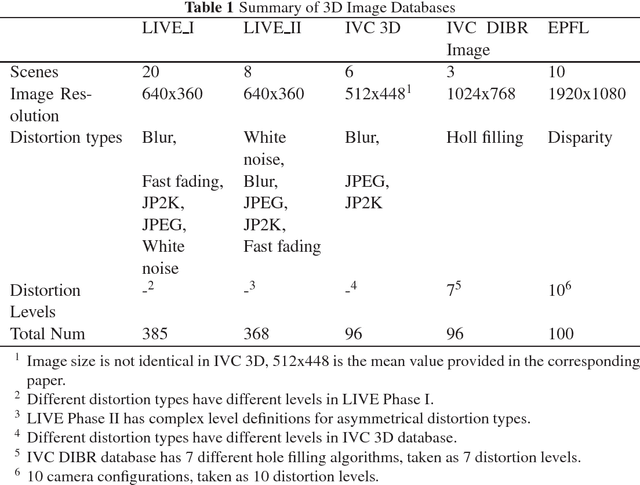

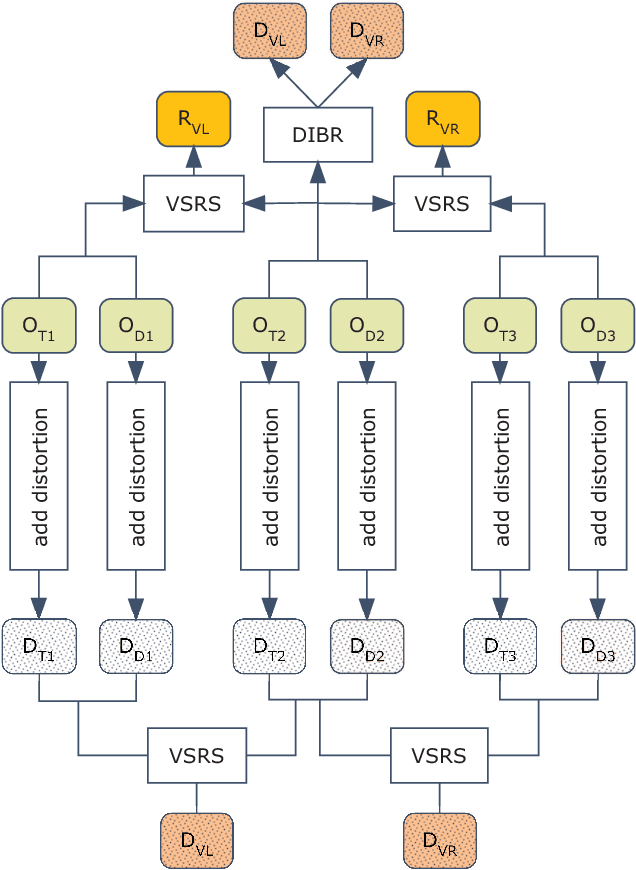

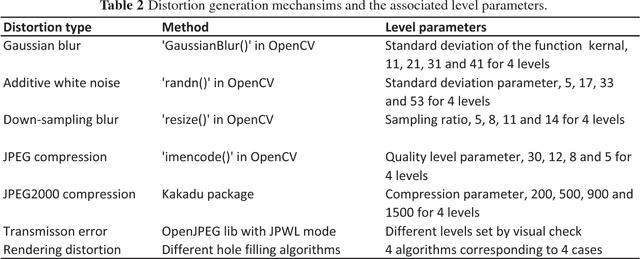

Abstract: This work describes MCL-3D, a new stereoscopic image quality assessment database rendered from 2D-image-plus-depth sources, and benchmarks several known 2D and 3D image quality metrics on it. Nine image-plus-depth sources are first selected, and a depth image-based rendering (DIBR) technique is used to render stereoscopic image pairs. Distortions applied to either the texture image or the depth image before stereoscopic rendering include Gaussian blur, additive white noise, down-sampling blur, JPEG and JPEG-2000 (JP2K) compression, and transmission error. The distortion caused by imperfect rendering is also examined. The MCL-3D database contains 693 stereoscopic image pairs, of which one third have a resolution of 1024x728 and two thirds have a resolution of 1920x1080. Pair-wise comparison was adopted in the subjective test for user friendliness, and the Mean Opinion Score (MOS) can be computed accordingly. Finally, we evaluate the performance of several 2D and 3D image quality metrics applied to MCL-3D. All texture images, depth images, and rendered image pairs in MCL-3D, together with their MOS values obtained in the subjective test, are available to the public (http://mcl.usc.edu/mcl-3d-database/) for future research and development.
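The abstract does not say how pair-wise comparison outcomes are converted into opinion scores. One simple possibility, shown here purely as a sketch, is to score each stimulus by the fraction of comparisons it wins. The function name `win_rate_scores` and the `(winner, loser)` input format are hypothetical and are not taken from the MCL-3D paper.

```python
from collections import defaultdict


def win_rate_scores(comparisons):
    """Return {stimulus_id: fraction of its comparisons that it won}.

    comparisons: iterable of (winner_id, loser_id) tuples, one per judgment.
    This win-rate rule is an illustrative assumption, not the paper's procedure.
    """
    wins = defaultdict(int)
    total = defaultdict(int)
    for winner, loser in comparisons:
        wins[winner] += 1
        total[winner] += 1
        total[loser] += 1
    return {stimulus: wins[stimulus] / total[stimulus] for stimulus in total}


# Toy example: three stimuli, each pair judged once.
judgments = [("a", "b"), ("a", "c"), ("b", "c")]
print(win_rate_scores(judgments))
```

A win rate in [0, 1] can be rescaled linearly to whatever opinion-score range a study uses; the ranking of stimuli is unchanged by that rescaling.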
