C-C Jay Kuo

ACT: Semi-supervised Domain-adaptive Medical Image Segmentation with Asymmetric Co-training

Jun 09, 2022

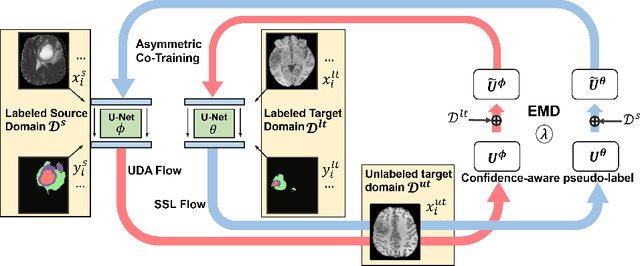

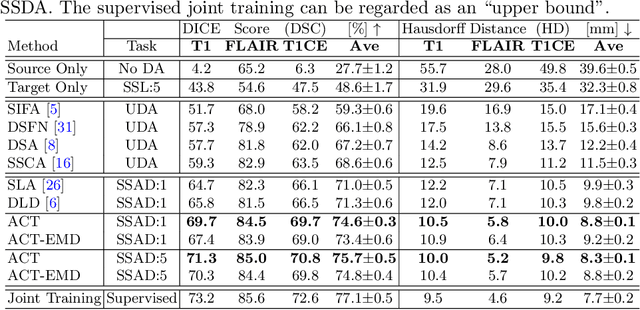

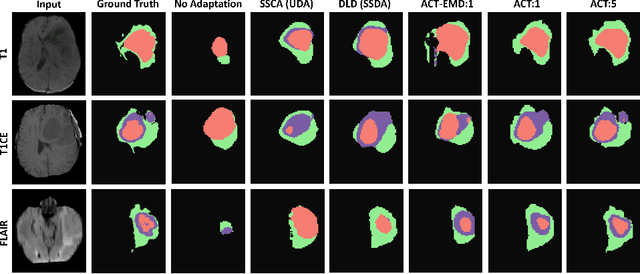

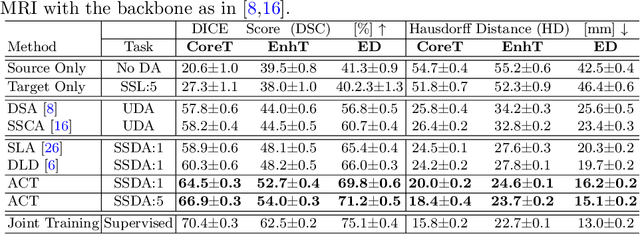

Abstract: Unsupervised domain adaptation (UDA) has been widely explored to alleviate domain shifts between source and target domains by applying a well-performing model to an unlabeled target domain under the supervision of a labeled source domain. Recent literature, however, has indicated that performance is still far from satisfactory in the presence of significant domain shifts. Nonetheless, delineating a few target samples is usually manageable and particularly worthwhile because of the substantial performance gain. Inspired by this, we aim to develop semi-supervised domain adaptation (SSDA) for medical image segmentation, which is largely underexplored. We thus propose to exploit both labeled source and target domain data, in addition to unlabeled target data, in a unified manner. Specifically, we present a novel asymmetric co-training (ACT) framework to integrate these subsets and avoid the domination of the source domain data. Following a divide-and-conquer strategy, we explicitly decouple the label supervision in SSDA into two asymmetric sub-tasks, semi-supervised learning (SSL) and UDA, and leverage different knowledge from two segmentors to take into account the distinction between the source and target label supervision. The knowledge learned in the two modules is then adaptively integrated with ACT: the segmentors iteratively teach each other based on confidence-aware pseudo-labels. In addition, pseudo-label noise is well controlled with an exponential MixUp decay scheme for smooth propagation. Experiments on cross-modality brain tumor MRI segmentation tasks using the BraTS18 database showed that, even with limited labeled target samples, ACT yielded marked improvements over UDA and state-of-the-art SSDA methods and approached an "upper bound" of supervised joint training.
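The two ideas named at the end of the abstract, confidence-aware pseudo-labels and an exponentially decaying MixUp weight, can be illustrated with a minimal NumPy sketch. The abstract does not give the thresholds, the schedule, or what exactly is mixed, so everything below is an assumption made for illustration: the function names, the `threshold`, `initial`, and `rate` parameters, and the choice to mix one-hot targets are all hypothetical and are not taken from the paper.

```python
import numpy as np


def confident_pseudo_labels(probs, threshold=0.9):
    """Turn peer-model class probabilities into pseudo-labels.

    probs: array of shape (N, C), one row of class probabilities per pixel.
    Returns (labels, mask); mask is True where the top probability
    reaches the (hypothetical) confidence threshold.
    """
    labels = probs.argmax(axis=1)
    mask = probs.max(axis=1) >= threshold
    return labels, mask


def exp_decay_weight(step, initial=1.0, rate=0.1):
    """Assumed schedule: weight = initial * exp(-rate * step)."""
    return initial * np.exp(-rate * step)


def mix_targets(label_target, pseudo_target, lam):
    """MixUp-style convex combination of a labeled and a pseudo-labeled target."""
    return lam * label_target + (1.0 - lam) * pseudo_target
```

In a sketch like this, `lam = exp_decay_weight(step)` starts near 1, so the mixed target is dominated by ground-truth labels early in training, and the contribution of pseudo-labels grows smoothly as the step count increases.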

Visualization, Discriminability and Applications of Interpretable Saak Features

Mar 03, 2019

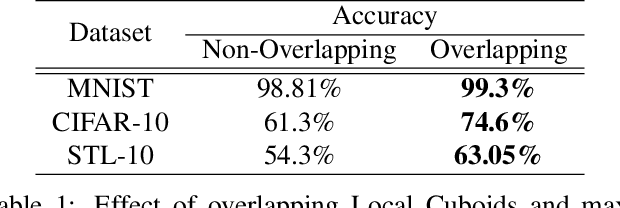

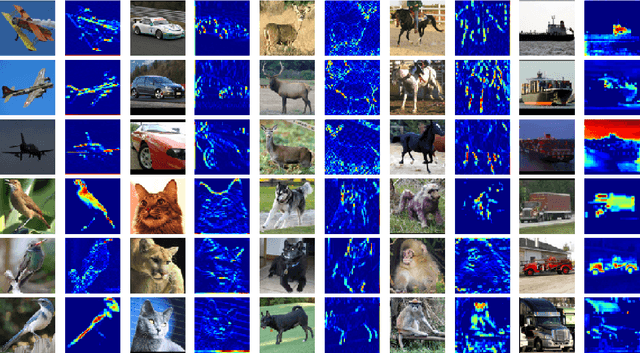

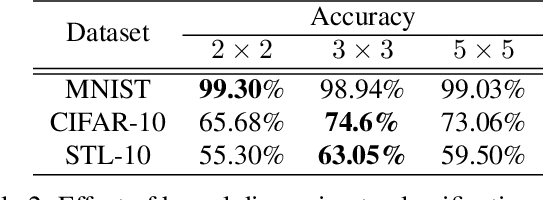

Abstract: In this work, we study the power of Saak features as an effort towards interpretable deep learning. The multi-stage Saak transform was proposed with inspiration from the operations of the convolutional layers of convolutional neural networks (CNNs). Based on this foundation, we provide an in-depth examination of Saak features, which are coefficients of the Saak transform, by analyzing their properties through visualization and demonstrating their applications in image classification. Similar to CNN features, Saak features at later stages have larger receptive fields, yet they are obtained in a one-pass feedforward manner without backpropagation. The whole feature extraction process is transparent and of extremely low complexity. The discriminant power of Saak features is demonstrated, and experimental results show their classification performance on three well-known datasets (namely, MNIST, CIFAR-10 and STL-10).

Robustness Of Saak Transform Against Adversarial Attacks

Feb 07, 2019

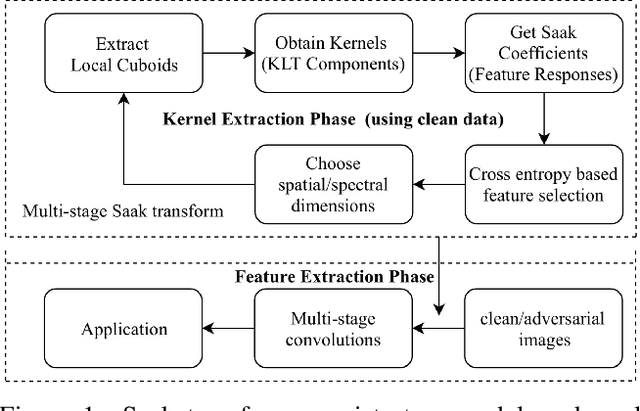

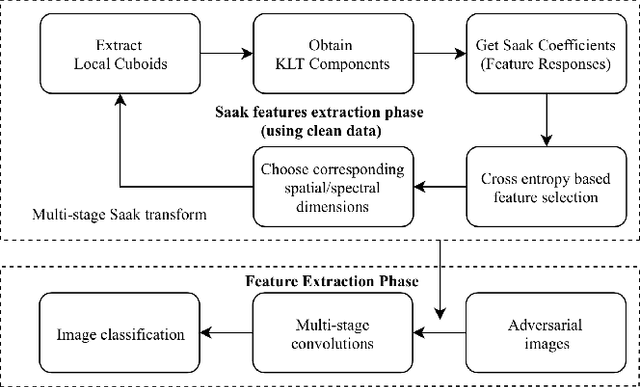

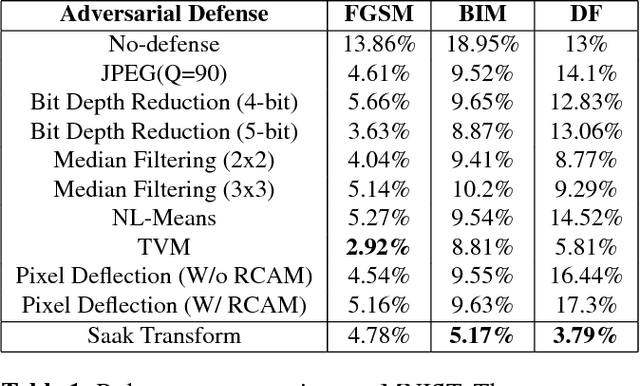

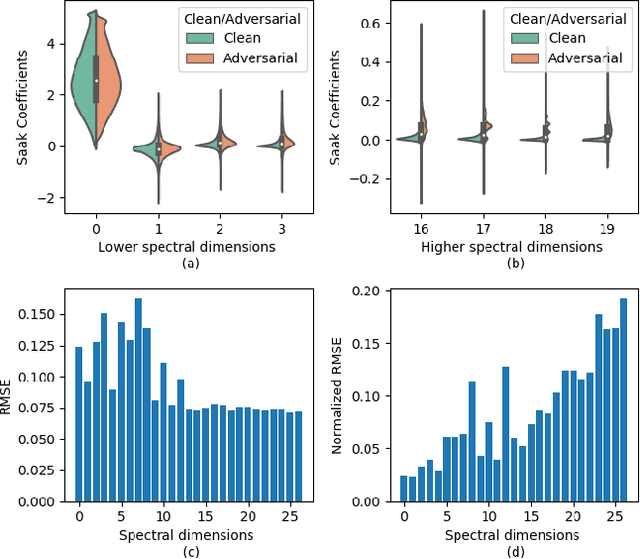

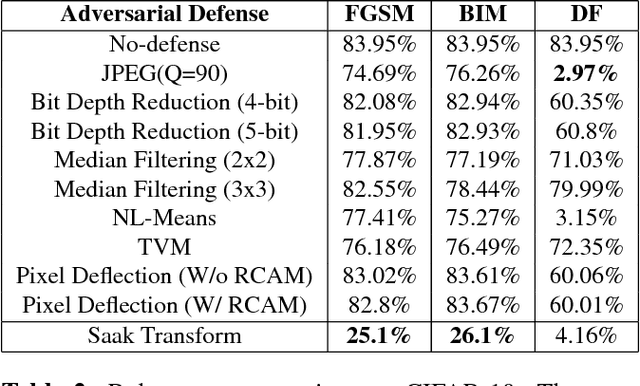

Abstract: Image classification is vulnerable to adversarial attacks. This work investigates the robustness of the Saak transform against adversarial attacks, with the goal of high-performance image classification. We develop a complete image classification system based on the multi-stage Saak transform. In the Saak transform domain, clean and adversarial images exhibit different distributions at different spectral dimensions. Selection of the spectral dimensions at every stage can be viewed as an automatic denoising process. Motivated by this observation, we carefully design strategies for feature extraction, representation and classification that increase adversarial robustness. Performance on well-known datasets and attacks is demonstrated through extensive experimental evaluations.
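The observation that selecting spectral dimensions acts as denoising can be illustrated with a generic stand-in. The sketch below uses plain PCA truncation, not the Saak transform itself: it keeps only the leading principal components and reconstructs the data from them. The function name `pca_truncate` and the parameter `k` are hypothetical, chosen for this example only.

```python
import numpy as np


def pca_truncate(X, k):
    """Project rows of X onto the top-k principal components and reconstruct.

    X: array of shape (n_samples, n_features).
    k: number of leading components (spectral dimensions) to keep.
    """
    mean = X.mean(axis=0)
    centered = X - mean
    # Rows of Vt are principal directions, ordered by decreasing variance.
    _, _, Vt = np.linalg.svd(centered, full_matrices=False)
    basis = Vt[:k]
    coeffs = centered @ basis.T      # coordinates in the retained subspace
    return coeffs @ basis + mean     # reconstruction from k components
```

When the signal is concentrated in a few high-variance directions and the perturbation is spread across all of them, discarding the remaining directions removes most of the perturbation while keeping most of the signal.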
