Burhanuddin Shirose

Multi-CAP: A Multi-Robot Connectivity-Aware Hierarchical Coverage Path Planning Algorithm for Unknown Environments

Sep 18, 2025

Abstract: Efficient coordination of multiple robots for coverage of large, unknown environments is a significant challenge that involves minimizing the total coverage path length while reducing inter-robot conflicts. In this paper, we introduce a Multi-robot Connectivity-Aware Planner (Multi-CAP), a hierarchical coverage path planning algorithm that facilitates multi-robot coordination through a novel connectivity-aware approach. The algorithm constructs and dynamically maintains an adjacency graph that represents the environment as a set of connected subareas. Critically, we assume that the environment, while unknown, is bounded. This allows the adjacency graph to be refined incrementally online so that its structure represents the physical layout of the space in both observed and unobserved areas of the map as robots explore the environment. We frame the task of assigning subareas to robots as a Vehicle Routing Problem (VRP), a well-studied problem of finding optimal routes for a fleet of vehicles. This formulation is used to compute disjoint tours that minimize redundant travel, assigning each robot a unique, non-conflicting set of subareas. Each robot then executes its assigned tour, independently adapting its coverage strategy within each subarea to minimize path length based on real-time sensor observations of that subarea. We demonstrate through simulations and multi-robot hardware experiments that Multi-CAP significantly outperforms state-of-the-art methods in key metrics, including coverage time, total path length, and path overlap ratio. Ablation studies further validate the critical role of our connectivity-aware graph and the global tour planner in achieving these performance gains.
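
The idea of splitting an adjacency graph of subareas into disjoint per-robot tours can be illustrated with a small sketch. This is not the Multi-CAP planner and it does not solve the VRP optimally: it swaps in a simple greedy min-max heuristic, computing Dijkstra travel costs on the graph and letting the robot with the shortest tour so far claim its nearest unvisited subarea. The graph, the subarea names A to E, and the helpers `shortest_paths` and `assign_tours` are all hypothetical. Tour length here counts only travel between subareas (not coverage cost inside them), and the graph is assumed to be connected.

```python
import heapq
from typing import Dict, List

# Hypothetical adjacency graph: subarea -> {neighboring subarea: travel cost}.
Graph = Dict[str, Dict[str, float]]


def shortest_paths(graph: Graph, source: str) -> Dict[str, float]:
    """Dijkstra's algorithm: travel cost from `source` to every reachable subarea."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry, a shorter path was already found
        for nbr, w in graph[node].items():
            nd = d + w
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                heapq.heappush(heap, (nd, nbr))
    return dist


def assign_tours(graph: Graph, starts: List[str]) -> List[List[str]]:
    """Greedy min-max heuristic (NOT an optimal VRP solver): the robot whose
    tour is currently shortest claims its nearest unvisited subarea.
    Each subarea is claimed once, so the tours are disjoint by construction."""
    unvisited = set(graph) - set(starts)
    tours = [[s] for s in starts]
    lengths = [0.0] * len(starts)
    while unvisited:
        r = min(range(len(starts)), key=lambda i: lengths[i])
        dist = shortest_paths(graph, tours[r][-1])
        reachable = [a for a in unvisited if a in dist]
        if not reachable:
            raise ValueError("adjacency graph is not connected")
        # Nearest unvisited subarea; ties broken by name for determinism.
        nxt = min(reachable, key=lambda a: (dist[a], a))
        tours[r].append(nxt)
        lengths[r] += dist[nxt]
        unvisited.remove(nxt)
    return tours


# Usage: two robots starting at opposite ends of a small corridor-like graph.
graph = {
    "A": {"B": 1.0},
    "B": {"A": 1.0, "C": 1.0, "D": 2.0},
    "C": {"B": 1.0},
    "D": {"B": 2.0, "E": 1.0},
    "E": {"D": 1.0},
}
tours = assign_tours(graph, ["A", "E"])
print(tours)  # [['A', 'B', 'C'], ['E', 'D']]
```

Balancing on the current tour length, rather than letting one robot take every nearby subarea, is what keeps the workload spread across the team; a proper VRP solver would optimize the same objective globally instead of greedily.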

A Bayesian Modeling Framework for Estimation and Ground Segmentation of Cluttered Staircases

Jan 07, 2025

Abstract: Autonomous robot navigation in complex environments requires robust perception as well as high-level scene understanding due to perceptual challenges, such as occlusions, and uncertainty introduced by robot movement. For example, a robot climbing a cluttered staircase can misinterpret clutter as a step, misrepresenting the state and compromising safety. This requires robust state estimation methods capable of inferring the underlying structure of the environment even from incomplete sensor data. In this paper, we introduce a novel method for robust state estimation of staircases. To address the challenge of perceiving occluded staircases extending beyond the robot's field of view, our approach combines an infinite-width staircase representation with a finite endpoint state to capture the overall staircase structure. This representation is integrated into a Bayesian inference framework to fuse noisy measurements, enabling accurate estimation of the staircase location even with partial observations and occlusions. Additionally, we present a segmentation algorithm that works in conjunction with the staircase estimation pipeline to accurately identify clutter-free regions on a staircase. Our method is extensively evaluated on a real robot across diverse staircases, demonstrating significant improvements in estimation accuracy and segmentation performance compared to baseline approaches.
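
The general idea of fusing noisy, partial observations in a Bayesian framework can be shown in its simplest form. The sketch below is not the paper's staircase model, which couples an infinite-width staircase representation with a finite endpoint state; it assumes a single scalar parameter (hypothetically, the step rise in meters) with a Gaussian prior and independent Gaussian measurement noise, and applies the conjugate Gaussian update, which is equivalent to a Kalman update for a static state. Frames in which the step is occluded are represented as `None` and leave the belief unchanged. The names `Gaussian`, `fuse`, and `estimate`, and all numbers, are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Gaussian:
    mean: float
    var: float


def fuse(prior: Gaussian, z: float, meas_var: float) -> Gaussian:
    """Conjugate Gaussian update (static-state Kalman update) for one scalar measurement."""
    k = prior.var / (prior.var + meas_var)  # Kalman gain: trust in the new measurement
    return Gaussian(prior.mean + k * (z - prior.mean), (1.0 - k) * prior.var)


def estimate(prior: Gaussian, measurements: Iterable[Optional[float]],
             meas_var: float) -> Gaussian:
    """Sequentially fuse measurements; None marks an occluded frame with no observation."""
    belief = prior
    for z in measurements:
        if z is None:
            continue  # nothing observed, so the belief is carried forward unchanged
        belief = fuse(belief, z, meas_var)
    return belief


# Usage with hypothetical per-frame step-rise readings (meters).
prior = Gaussian(mean=0.18, var=0.02 ** 2)
readings = [0.175, None, 0.182, 0.179]
belief = estimate(prior, readings, meas_var=0.01 ** 2)
print(f"rise = {belief.mean:.3f} m, std = {belief.var ** 0.5:.4f} m")
```

Each fused measurement shrinks the variance, so the estimate tightens as more of the structure is observed, while occluded frames simply propagate the current belief instead of corrupting it.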

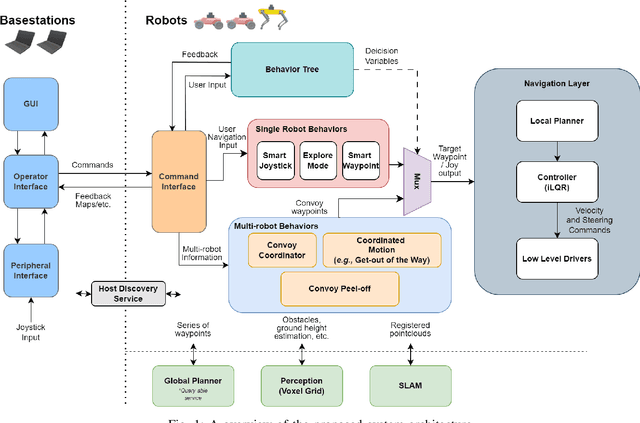

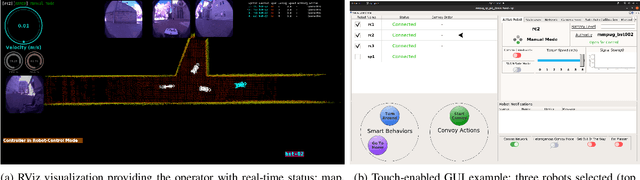

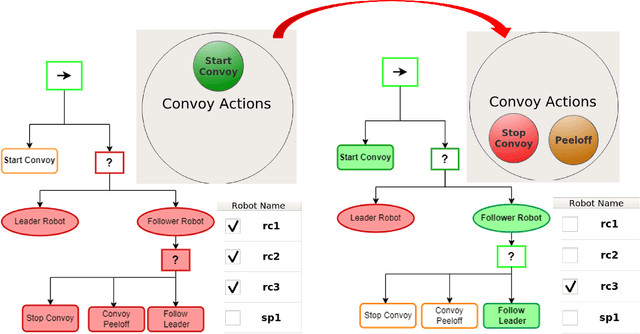

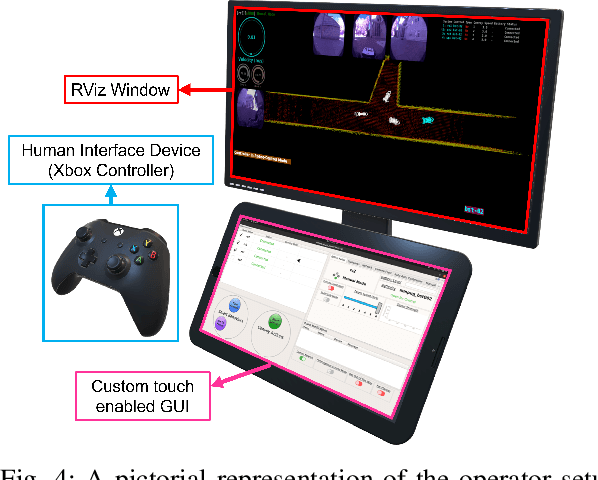

Modular, Resilient, and Scalable System Design Approaches -- Lessons learned in the years after DARPA Subterranean Challenge

Apr 27, 2024

Abstract: Field robotics applications, such as search and rescue, involve robots operating in large, unknown areas. These environments present unique challenges that compound the difficulties faced by a robot operator. The use of multi-robot teams, assisted by carefully designed autonomy, helps reduce operator workload and allows the operator to effectively coordinate robot capabilities. In this work, we present a system architecture designed to optimize both robot autonomy and the operator experience in multi-robot scenarios. Drawing on lessons learned from our team's participation in the DARPA SubT Challenge, our architecture emphasizes modularity and interoperability. We empower the operator by allowing for adjustable levels of autonomy ("sliding mode autonomy"). We enhance the operator experience by using intuitive, adaptive interfaces that suggest context-aware actions to simplify control. Finally, we describe how the proposed architecture enables streamlined development of new capabilities for effective deployment of robot autonomy in the field.