Bum Jun Kim

Discovering Scaling Exponents with Physics-Informed Müntz-Szász Networks

Jan 30, 2026

Abstract:Physical systems near singularities, interfaces, and critical points exhibit power-law scaling, yet standard neural networks leave the governing exponents implicit. We introduce physics-informed Müntz-Szász Networks (MSN-PINN), a power-law basis network that treats scaling exponents as trainable parameters. The model outputs both the solution and its scaling structure. We prove identifiability, or unique recovery, and show that, under these conditions, the squared error between learned and true exponents scales as $O(|\mu - \alpha|^2)$. Across experiments, MSN-PINN achieves single-exponent recovery with 1-5% error under noise and sparse sampling. It recovers corner singularity exponents for the two-dimensional Laplace equation with 0.009% error, matches the classical result of Kondrat'ev (1967), and recovers forcing-induced exponents in singular Poisson problems with 0.03% and 0.05% errors. On a 40-configuration wedge benchmark, it reaches a 100% success rate with 0.022% mean error. Constraint-aware training encodes physical requirements such as boundary condition compatibility and improves accuracy by three orders of magnitude over naive training. By combining the expressiveness of neural networks with the interpretability of asymptotic analysis, MSN-PINN produces learned parameters with direct physical meaning.
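To make the idea of a trainable scaling exponent concrete, the following is a minimal sketch, not the MSN-PINN architecture itself. It fits a single power-law term `c * r**mu` to noise-free synthetic data by plain gradient descent, treating `mu` as a parameter. The function name `fit_power_law`, the step size, the initial values, and the target exponent 2/3 (the classical Dirichlet corner exponent pi/omega for a wedge of interior angle omega = 3*pi/2) are all assumptions chosen for illustration.

```python
import numpy as np

def fit_power_law(r, u, mu0=1.0, c0=1.0, lr=0.1, steps=20000):
    """Fit u ~ c * r**mu by gradient descent on the mean squared error.

    Both the amplitude c and the exponent mu are updated, so the exponent
    is learned rather than fixed in advance.
    """
    c, mu = c0, mu0
    log_r = np.log(r)
    for _ in range(steps):
        basis = r ** mu                      # power-law basis function
        err = c * basis - u                  # residual at each sample
        grad_c = 2.0 * np.mean(err * basis)  # d(MSE)/dc
        grad_mu = 2.0 * np.mean(err * c * basis * log_r)  # d(MSE)/dmu
        c -= lr * grad_c
        mu -= lr * grad_mu
    return c, mu

# Synthetic radial profile near a re-entrant corner (illustrative assumption).
alpha_true = 2.0 / 3.0
r = np.linspace(0.01, 1.0, 200)
u = 2.0 * r ** alpha_true

c_hat, mu_hat = fit_power_law(r, u)
print(f"learned exponent: {mu_hat:.6f}, true exponent: {alpha_true:.6f}")
```

The learned `mu_hat` can be read off directly as a physical quantity, which is the interpretability benefit the abstract describes. The sketch omits the PDE residual, boundary terms, and constraint-aware training.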

Temperature-Free Loss Function for Contrastive Learning

Jan 29, 2025

Abstract:As one of the most promising methods in self-supervised learning, contrastive learning has achieved a series of breakthroughs across numerous fields. A predominant approach to implementing contrastive learning is applying InfoNCE loss: By capturing the similarities between pairs, InfoNCE loss enables learning the representation of data. Despite its success, adopting InfoNCE loss requires tuning a temperature, which is a core hyperparameter for calibrating similarity scores. Although several studies have emphasized its significance and its sensitivity with respect to performance, searching for a valid temperature requires extensive trial-and-error experiments, which increases the difficulty of adopting InfoNCE loss. To address this difficulty, we propose a novel method to deploy InfoNCE loss without temperature. Specifically, we replace temperature scaling with the inverse hyperbolic tangent function, resulting in a modified InfoNCE loss. In addition to hyperparameter-free deployment, we observed that the proposed method even yielded a performance gain in contrastive learning. Our detailed theoretical analysis shows that the current practice of temperature scaling in InfoNCE loss causes serious problems in gradient descent, whereas our method provides desirable gradient properties. The proposed method was validated on five contrastive learning benchmarks, yielding satisfactory results without temperature tuning.
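The replacement of temperature scaling can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the helper names (`info_nce`, `temperature_logits`, `atanh_logits`), the clipping constant `eps`, and the absence of any extra scaling factor on the arctanh output are choices made here for illustration only.

```python
import numpy as np

def info_nce(z1, z2, logit_fn):
    """InfoNCE loss where positives are the matching rows of z1 and z2."""
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    sim = z1 @ z2.T                        # cosine similarities in [-1, 1]
    logits = logit_fn(sim)
    logits = logits - logits.max(axis=1, keepdims=True)  # stable softmax
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_prob))     # cross-entropy on the diagonal

def temperature_logits(sim, tau=0.1):
    """Conventional scaling: requires choosing the temperature tau."""
    return sim / tau

def atanh_logits(sim, eps=1e-6):
    """Temperature-free variant: inverse hyperbolic tangent of similarity.

    Clipping keeps arctanh finite at similarity +/-1 (an assumption here).
    """
    return np.arctanh(np.clip(sim, -1.0 + eps, 1.0 - eps))

rng = np.random.default_rng(0)
z1 = rng.standard_normal((8, 16))
z2 = z1 + 0.1 * rng.standard_normal((8, 16))   # noisy positive views

loss_tau = info_nce(z1, z2, temperature_logits)
loss_atanh = info_nce(z1, z2, atanh_logits)
print(loss_tau, loss_atanh)
```

The only difference between the two variants is the function applied to the similarity matrix, so the temperature-free form has no hyperparameter left to search over.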

Stochastic Subsampling With Average Pooling

Sep 25, 2024

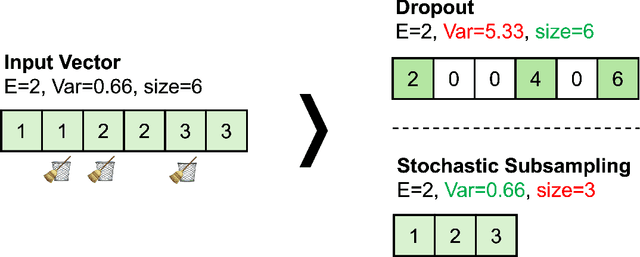

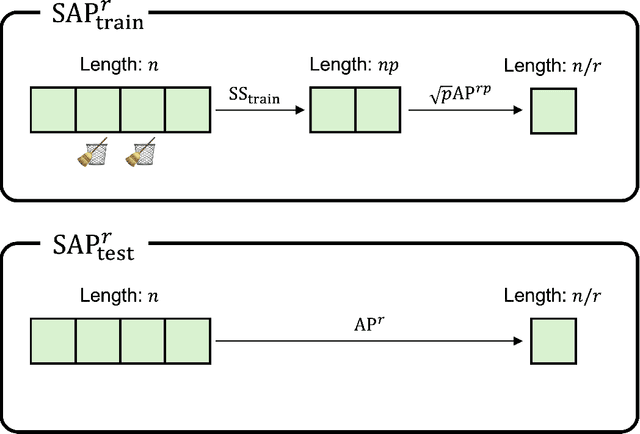

Abstract:Regularization of deep neural networks has been an important issue to achieve higher generalization performance without overfitting problems. Although the popular method of Dropout provides a regularization effect, it causes inconsistent properties in the output, which may degrade the performance of deep neural networks. In this study, we propose a new module called stochastic average pooling, which incorporates Dropout-like stochasticity in pooling. We describe the properties of stochastic subsampling and average pooling and leverage them to design a module without any inconsistency problem. The stochastic average pooling achieves a regularization effect without any potential performance degradation due to the inconsistency issue and can easily be plugged into existing architectures of deep neural networks. Experiments demonstrate that replacing existing average pooling with stochastic average pooling yields consistent improvements across a variety of tasks, datasets, and models.

Configuring Data Augmentations to Reduce Variance Shift in Positional Embedding of Vision Transformers

May 23, 2024

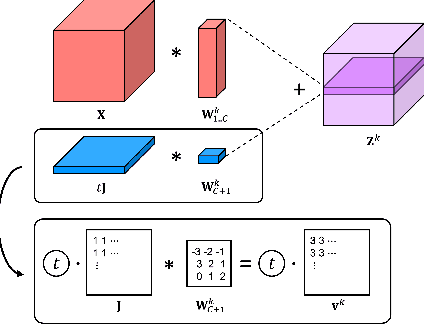

Abstract:Vision transformers (ViTs) have demonstrated remarkable performance in a variety of vision tasks. Despite their promising capabilities, training a ViT requires a large amount of diverse data. Several studies empirically found that using rich data augmentations, such as Mixup, Cutmix, and random erasing, is critical to the successful training of ViTs. The use of rich data augmentations has now become standard practice. However, we report a vulnerability in this practice: Certain data augmentations such as Mixup cause a variance shift in the positional embedding of ViT, which has been a hidden factor that degrades the performance of ViT during the test phase. We claim that achieving a stable effect from positional embedding requires a specific condition on the image, which is often violated by current data augmentation methods. We provide a detailed analysis of this problem as well as the correct configuration for these data augmentations to remove the side effects of variance shift. Experiments showed that adopting our guidelines improves the performance of ViTs compared with the current configuration of data augmentations.
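As a rough illustration of why mixing inputs can change their variance, the sketch below blends two independent unit-variance arrays with a Mixup-style convex combination and measures the result. It is a toy model under stated assumptions: real images are neither Gaussian nor independent, and the interaction with positional embedding analyzed in the paper is not modeled. The helper name `mixup` is hypothetical.

```python
import numpy as np

def mixup(a, b, lam):
    """Mixup-style convex combination of two inputs."""
    return lam * a + (1.0 - lam) * b

rng = np.random.default_rng(0)
a = rng.standard_normal(200_000)   # stand-in for normalized pixels, image A
b = rng.standard_normal(200_000)   # stand-in for normalized pixels, image B

for lam in (1.0, 0.8, 0.5):
    mixed = mixup(a, b, lam)
    # For independent unit-variance inputs the variance is lam^2 + (1-lam)^2.
    predicted = lam ** 2 + (1.0 - lam) ** 2
    print(f"lam={lam:.1f}  measured var={np.var(mixed):.3f}  predicted={predicted:.3f}")
```

In this toy setting the mixed input has variance below 1 whenever `lam` is strictly between 0 and 1, whereas unmixed test inputs keep variance 1, which is one simple way a train-test variance mismatch can arise.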

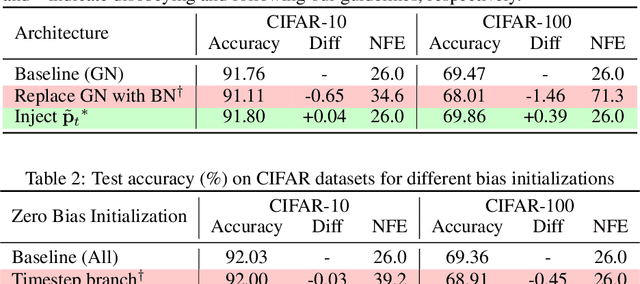

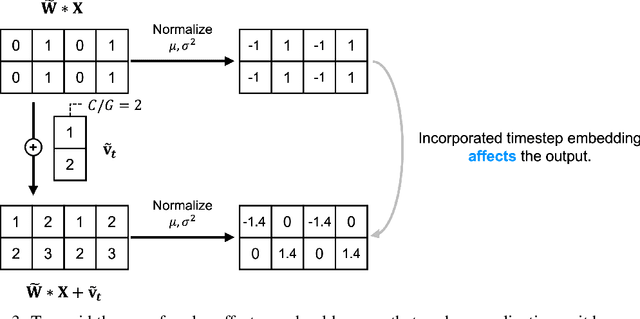

The Disappearance of Timestep Embedding in Modern Time-Dependent Neural Networks

May 23, 2024

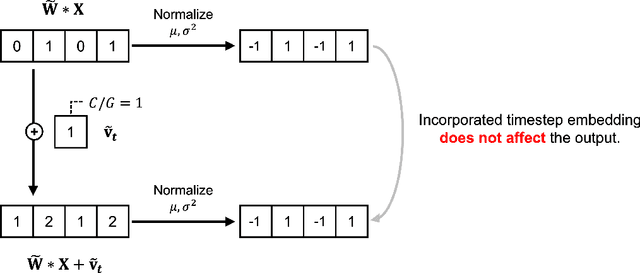

Abstract:Dynamical systems are often time-varying, and modeling them requires a function that evolves with respect to time. Recent studies, such as those on neural ordinary differential equations, have proposed time-dependent neural networks, which vary with respect to time. However, we claim that the architectural choice made in building a time-dependent neural network significantly affects its time-awareness, yet this choice still lacks sufficient validation. In this study, we conduct an in-depth analysis of the architecture of modern time-dependent neural networks. Here, we report a vulnerability of vanishing timestep embedding, which disables the time-awareness of a time-dependent neural network. Furthermore, we find that this vulnerability can also be observed in diffusion models because they employ a similar architecture that incorporates timestep embedding to discriminate between different timesteps during a diffusion process. Our analysis provides a detailed description of this phenomenon as well as several solutions to address the root cause. Through experiments on neural ordinary differential equations and diffusion models, we observed that keeping time-awareness alive via the proposed solutions boosted their performance, which implies that their current implementations lack sufficient time-dependency.

Scale Equalization for Multi-Level Feature Fusion

Feb 02, 2024

Abstract:Deep neural networks have exhibited remarkable performance in a variety of computer vision fields, especially in semantic segmentation tasks. Their success is often attributed to multi-level feature fusion, which enables them to understand both global and local information from an image. However, we found that multi-level features from parallel branches are on different scales. The scale disequilibrium is a universal and unwanted flaw that leads to detrimental gradient descent, thereby degrading performance in semantic segmentation. We discover that scale disequilibrium is caused by bilinear upsampling, which is supported by both theoretical and empirical evidence. Based on this observation, we propose injecting scale equalizers to achieve scale equilibrium across multi-level features after bilinear upsampling. Our proposed scale equalizers are easy to implement, applicable to any architecture, hyperparameter-free, implementable without requiring extra computational cost, and guarantee scale equilibrium for any dataset. Experiments showed that adopting scale equalizers consistently improved the mIoU index across various target datasets, including ADE20K, PASCAL VOC 2012, and Cityscapes, as well as various decoder choices, including UPerHead, PSPHead, ASPPHead, SepASPPHead, and FCNHead.

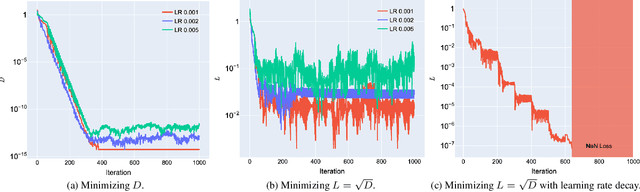

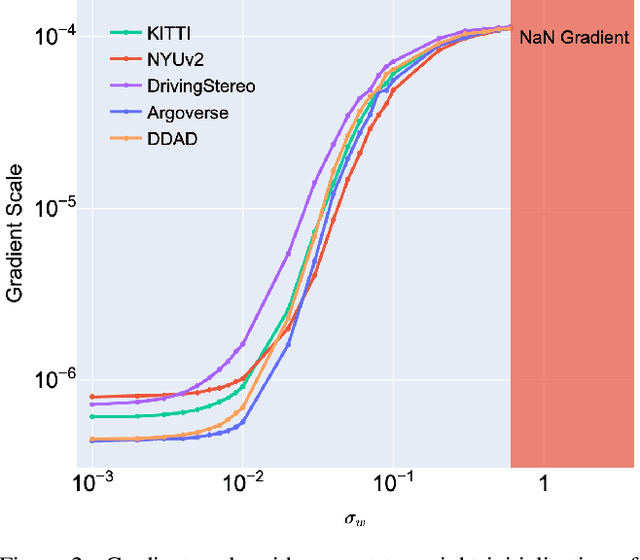

Analysis of NaN Divergence in Training Monocular Depth Estimation Model

Nov 07, 2023

Abstract:The latest advances in deep learning have facilitated the development of highly accurate monocular depth estimation models. However, when training a monocular depth estimation network, practitioners and researchers have observed not-a-number (NaN) loss, which disrupts gradient descent optimization. Although several practitioners have reported the stochastic and mysterious occurrence of NaN loss that disrupts training, its root cause is not discussed in the literature. This study conducted an in-depth analysis of NaN loss during the training of a monocular depth estimation network and identified three types of vulnerabilities that cause NaN loss: 1) the use of square root loss, which leads to an unstable gradient; 2) the log-sigmoid function, which exhibits numerical stability issues; and 3) certain variance implementations, which yield incorrect computations. Furthermore, for each vulnerability, the occurrence of NaN loss was demonstrated and practical guidelines to prevent NaN loss were presented. Experiments showed that both optimization stability and performance on monocular depth estimation could be improved by following our guidelines.
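Two of the three failure modes listed above can be reproduced in a few lines of NumPy. The sketch below is illustrative only: the function names are hypothetical, and the remedies shown (a rearranged log-sigmoid and a small `eps` inside the square root) are common numerical practice, not necessarily the guidelines the authors recommend. The variance issue is not covered here.

```python
import numpy as np

def naive_log_sigmoid(x):
    """Direct formula: overflows in exp(-x) for large negative x."""
    return np.log(1.0 / (1.0 + np.exp(-x)))

def stable_log_sigmoid(x):
    """Equivalent form: log(sigmoid(x)) = min(x, 0) - log1p(exp(-|x|))."""
    return np.minimum(x, 0.0) - np.log1p(np.exp(-np.abs(x)))

def sqrt_grad(x, eps=0.0):
    """Derivative of sqrt(x + eps) with respect to x."""
    return 0.5 / np.sqrt(x + eps)

x = np.array([-1000.0, 0.0, 1000.0])
with np.errstate(over="ignore", divide="ignore"):
    naive = naive_log_sigmoid(x)                 # first entry becomes -inf
    grad_at_zero = sqrt_grad(np.array([0.0]))    # gradient is infinite at 0

stable = stable_log_sigmoid(x)                   # first entry stays -1000
safe_grad = sqrt_grad(np.array([0.0]), eps=1e-8) # finite gradient

print("naive log-sigmoid: ", naive)
print("stable log-sigmoid:", stable)
print("sqrt grad at 0:", grad_at_zero, " with eps:", safe_grad)
```

An infinite loss value or gradient of this kind turns into NaN as soon as it is multiplied by zero or subtracted from another infinity during backpropagation, which is consistent with the sporadic NaN loss described in the abstract.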

Resolution-Aware Design of Atrous Rates for Semantic Segmentation Networks

Jul 26, 2023

Abstract:DeepLab is a widely used deep neural network for semantic segmentation, whose success is attributed to its parallel architecture called atrous spatial pyramid pooling (ASPP). ASPP uses multiple atrous convolutions with different atrous rates to extract both local and global information. However, fixed values of atrous rates are used for the ASPP module, which restricts the size of its field of view. In principle, the atrous rate should be a hyperparameter that changes the field-of-view size according to the target task or dataset. However, the manipulation of the atrous rate is not governed by any guidelines. This study proposes practical guidelines for obtaining an optimal atrous rate. First, an effective receptive field for semantic segmentation is introduced to analyze the inner behavior of segmentation networks. We observed that the use of the ASPP module yielded a specific pattern in the effective receptive field, which was traced to reveal the module's underlying mechanism. Accordingly, we derive practical guidelines for obtaining the optimal atrous rate, which should be controlled based on the size of the input image. Compared to other values, using the optimal atrous rate consistently improved the segmentation results across multiple datasets, including the STARE, CHASE_DB1, HRF, Cityscapes, and iSAID datasets.
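The link between atrous rate and field of view follows from the standard formula for a dilated kernel: a kernel of size k with rate r spans k + (k - 1)(r - 1) positions. The short sketch below evaluates it for a 3x3 kernel; the rates 6, 12, and 18 are used as an illustrative assumption (they are commonly used ASPP defaults), and the function name is hypothetical. It does not reproduce the paper's guideline for choosing the optimal rate.

```python
def effective_kernel_size(kernel_size: int, rate: int) -> int:
    """Spatial extent covered by a single atrous (dilated) convolution."""
    return kernel_size + (kernel_size - 1) * (rate - 1)

# Field of view of one 3x3 atrous convolution at several rates.
for rate in (1, 6, 12, 18):
    span = effective_kernel_size(3, rate)
    print(f"rate={rate:2d} -> covers {span} x {span} positions")
```

Because the span grows linearly with the rate, a fixed set of rates covers a fixed extent of the feature map regardless of input resolution, which is the restriction the abstract points out.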

Understanding Gaussian Attention Bias of Vision Transformers Using Effective Receptive Fields

May 08, 2023

Abstract:Vision transformers (ViTs) that model an image as a sequence of partitioned patches have shown notable performance in diverse vision tasks. Because partitioning patches eliminates the image structure, ViTs utilize an explicit component called positional embedding to reflect the order of patches. However, we claim that the use of positional embedding does not by itself guarantee the order-awareness of ViT. To support this claim, we analyze the actual behavior of ViTs using an effective receptive field. We demonstrate that during training, ViT acquires an understanding of patch order from the positional embedding that is trained to be a specific pattern. Based on this observation, we propose explicitly adding a Gaussian attention bias that guides the positional embedding to have the corresponding pattern from the beginning of training. We evaluated the influence of Gaussian attention bias on the performance of ViTs in several image classification, object detection, and semantic segmentation experiments. The results showed that the proposed method not only helps ViTs understand images but also boosts their performance on various datasets, including ImageNet, COCO 2017, and ADE20K.

How to Use Dropout Correctly on Residual Networks with Batch Normalization

Feb 13, 2023

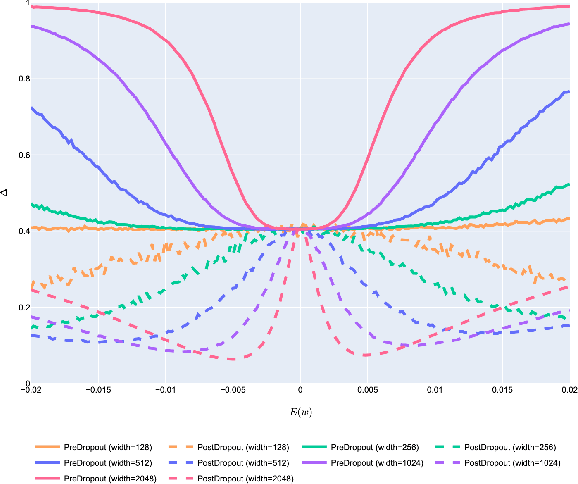

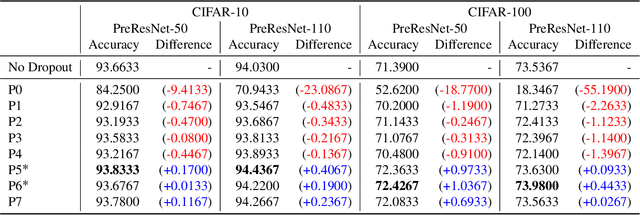

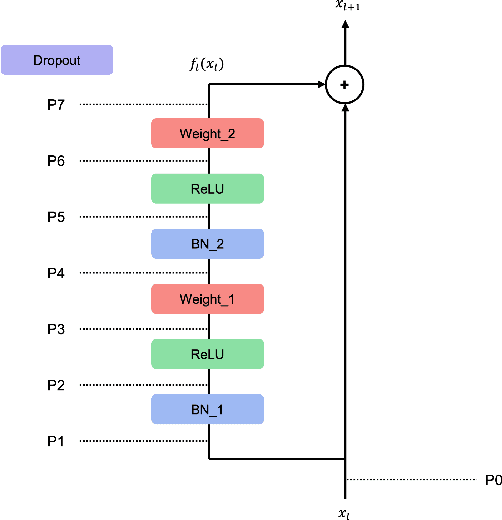

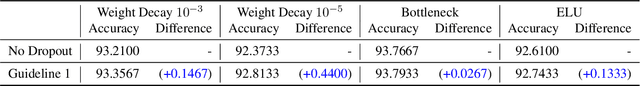

Abstract:For the stable optimization of deep neural networks, regularization methods such as dropout and batch normalization have been used in various tasks. Nevertheless, the correct position to apply dropout has rarely been discussed, and different positions have been employed depending on the practitioners. In this study, we investigate the correct position to apply dropout. We demonstrate that for a residual network with batch normalization, applying dropout at certain positions increases the performance, whereas applying dropout at other positions decreases the performance. Based on theoretical analysis, we provide the following guideline for the correct position to apply dropout: apply one dropout after the last batch normalization but before the last weight layer in the residual branch. We provide detailed theoretical explanations to support this claim and demonstrate them through module tests. In addition, we investigate the correct position of dropout in the head that produces the final prediction. Although the current consensus is to apply dropout after global average pooling, we prove that applying dropout before global average pooling leads to a more stable output. The proposed guidelines are validated through experiments using different datasets and models.
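The claim about the prediction head can be checked numerically with a small Monte Carlo sketch. The example below is an illustration under stated assumptions, not the authors' experiment: it uses a fixed random non-negative feature map of shape (16, 7, 7), inverted dropout with rate 0.5, and hypothetical function names, and it measures how much the pooled output fluctuates across dropout masks for each ordering.

```python
import numpy as np

rng = np.random.default_rng(0)
p = 0.5                                            # dropout rate
feat = np.abs(rng.standard_normal((16, 7, 7)))     # (C, H, W), ReLU-like
n_trials = 2000

def dropout_then_gap(feat, n):
    """Apply element-wise dropout on the feature map, then average-pool."""
    keep = rng.random((n,) + feat.shape) >= p
    dropped = feat * keep / (1.0 - p)              # inverted dropout scaling
    return dropped.mean(axis=(2, 3))               # shape (n, C)

def gap_then_dropout(feat, n):
    """Average-pool first, then apply dropout on the pooled vector."""
    pooled = feat.mean(axis=(1, 2))                # shape (C,)
    keep = rng.random((n, pooled.shape[0])) >= p
    return pooled * keep / (1.0 - p)               # shape (n, C)

# Standard deviation across dropout masks, averaged over channels.
std_before = dropout_then_gap(feat, n_trials).std(axis=0).mean()
std_after = gap_then_dropout(feat, n_trials).std(axis=0).mean()
print(f"dropout before GAP: {std_before:.3f}")
print(f"dropout after GAP:  {std_after:.3f}")
```

With dropout applied before pooling, each channel output averages H x W independently masked values, so the mask-induced fluctuation shrinks; with dropout after pooling, a single mask decides whether the whole channel survives.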
