Bryan Habas

From Ceilings to Walls: Universal Dynamic Perching of Small Aerial Robots on Surfaces with Variable Orientations

Dec 27, 2024

Abstract: This work demonstrates universal dynamic perching capabilities for quadrotors of various sizes and on surfaces with different orientations. By employing a non-dimensionalization framework and deep reinforcement learning, we systematically assessed how robot size and surface orientation affect landing capabilities. We hypothesized that maintaining geometric proportions across different robot scales ensures consistent perching behavior, and we validated this hypothesis in both simulation and experimental tests. Additionally, we investigated the effects of joint stiffness and damping in the landing gear on perching behavior and performance. While joint stiffness had minimal impact, the joint damping ratio influenced landing success under vertical approach conditions. The study also identified a critical velocity threshold necessary for successful perching, determined by the robot's maneuverability and leg geometry. Overall, this research advances robotic perching capabilities, offers insights into the role of mechanical design and scaling effects, and lays the groundwork for future drone autonomy and operational efficiency in unstructured environments.
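The scaling idea in this abstract can be illustrated with a short sketch. The abstract does not state which dimensionless groups the authors use, so the Froude-style speed `v / sqrt(g * L)` below is an assumption, as is the choice of leg length as the characteristic length `L`; the function names and numbers are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def nondimensional_velocity(v: float, length: float) -> float:
    """Froude-style dimensionless speed v / sqrt(g * L).

    Assumption: `length` is a characteristic length such as leg length;
    the abstract does not specify the actual scaling used.
    """
    if length <= 0:
        raise ValueError("characteristic length must be positive")
    return v / math.sqrt(G * length)


def scale_velocity(v_ref: float, length_ref: float, length_new: float) -> float:
    """Speed at a new robot scale that keeps the dimensionless speed unchanged."""
    if length_ref <= 0 or length_new <= 0:
        raise ValueError("characteristic lengths must be positive")
    return v_ref * math.sqrt(length_new / length_ref)


# Hypothetical example: a robot four times larger needs twice the approach
# speed to match the same dimensionless speed.
v_small = 2.0                                   # m/s at a 0.05 m leg length
v_large = scale_velocity(v_small, 0.05, 0.20)   # m/s at a 0.20 m leg length
```

Under this assumed scaling, a velocity threshold found at one robot size maps to other sizes through the square root of the length ratio, provided the geometric proportions are kept the same.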

From Flies to Robots: Inverted Landing in Small Quadcopters with Dynamic Perching

Feb 29, 2024

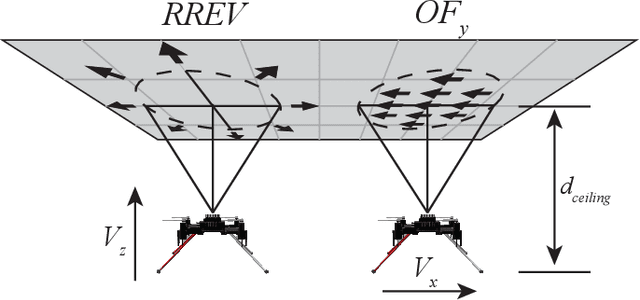

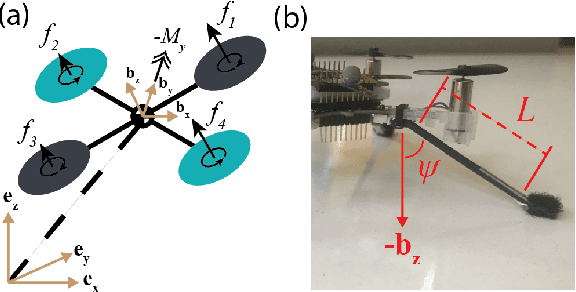

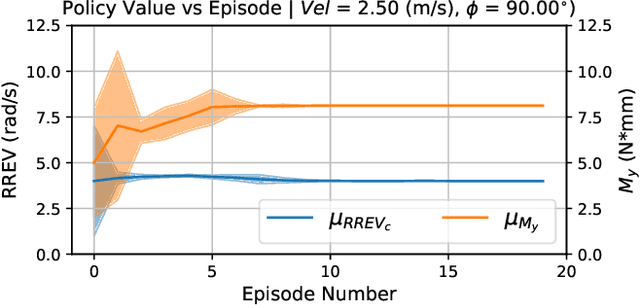

Abstract: Inverted landing is a routine behavior among a number of animal fliers. However, mastering this feat poses a considerable challenge for robotic fliers, especially when it requires dynamic perching with rapid body rotations (or flips) and landing against gravity. Studies of inverted landing in flies have suggested that optical-flow sensing is closely linked to the precise triggering and control of body flips that lead to a variety of successful landing behaviors. Building upon this knowledge, we aimed to replicate the flies' landing behaviors in small quadcopters by developing a control policy general to arbitrary ceiling-approach conditions. First, we employed reinforcement learning in simulation to optimize discrete sensory-motor pairs across a broad spectrum of ceiling-approach velocities and directions. Next, we converted the sensory-motor pairs to a two-stage control policy in a continuous augmented-optical-flow space. The control policy consists of a first-stage Flip-Trigger Policy, which employs a one-class support vector machine, and a second-stage Flip-Action Policy, implemented as a feed-forward neural network. To transfer the inverted-landing policy to physical systems, we utilized domain randomization and system identification techniques for a zero-shot sim-to-real transfer. As a result, we successfully achieved a range of robust inverted-landing behaviors in small quadcopters, emulating those observed in flies.
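The trigger-then-act structure described in this abstract can be sketched as follows. This is only a control-flow illustration: the trained one-class support vector machine and the feed-forward network are replaced by hypothetical stand-in callables, and the cue definitions (time-to-contact, translational optical flow, distance to the ceiling) are assumptions based on standard optical-flow quantities, not the authors' exact formulation.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class FlowCues:
    tau: float       # time-to-contact estimate, s (assumed cue)
    theta: float     # translational optical flow, rad/s (assumed cue)
    distance: float  # distance to the ceiling, m (assumed augmentation)


def compute_cues(distance: float, v_perp: float, v_par: float) -> FlowCues:
    """Build the cue vector from distance and approach velocity components."""
    if distance <= 0:
        raise ValueError("distance to the surface must be positive")
    # If the robot is not closing on the surface, contact never happens.
    tau = distance / v_perp if v_perp > 0 else math.inf
    theta = v_par / distance
    return FlowCues(tau=tau, theta=theta, distance=distance)


def two_stage_policy(
    cues: FlowCues,
    trigger_score: Callable[[FlowCues], float],
    flip_action: Callable[[FlowCues], float],
) -> Optional[float]:
    """Stage 1 decides whether to flip; stage 2 chooses the flip command.

    `trigger_score` stands in for a one-class SVM decision function
    (non-negative means the cues lie inside the learned trigger region).
    `flip_action` stands in for the feed-forward network.
    """
    if trigger_score(cues) < 0.0:
        return None  # keep flying; flip not triggered yet
    return flip_action(cues)


# Hypothetical stand-ins: trigger once time-to-contact drops below 0.3 s,
# then command a fixed body-rate value.
trigger = lambda c: 0.3 - c.tau
action = lambda c: 5.0

far = two_stage_policy(compute_cues(2.0, 2.0, 1.0), trigger, action)
near = two_stage_policy(compute_cues(0.5, 2.0, 1.0), trigger, action)
```

Here `far` is `None` because the stand-in trigger has not fired, while `near` returns the stand-in flip command. In a real system both callables would be the trained models evaluated on onboard measurements.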

Inverted Landing in a Small Aerial Robot via Deep Reinforcement Learning for Triggering and Control of Rotational Maneuvers

Sep 22, 2022

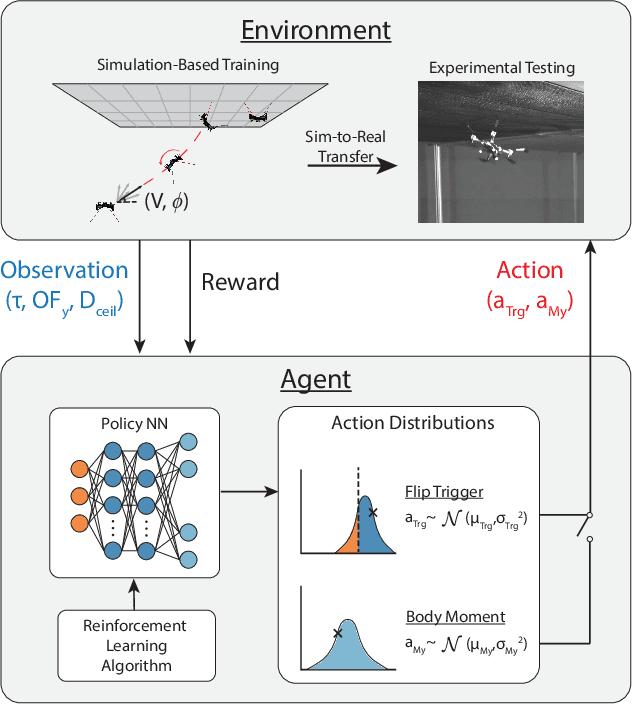

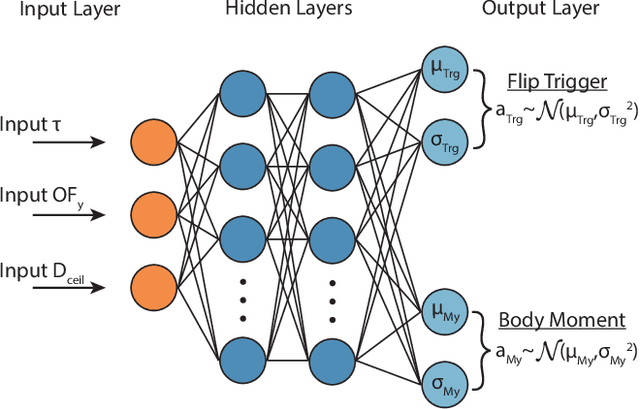

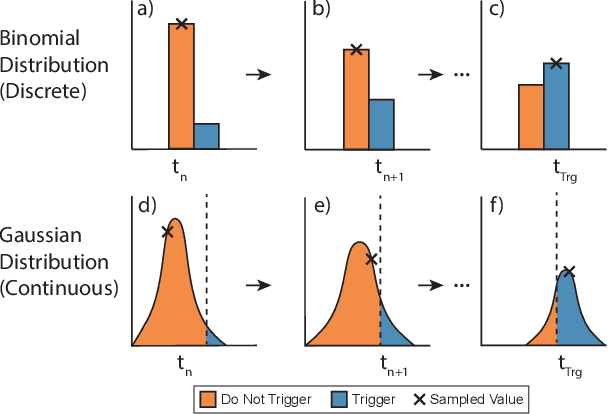

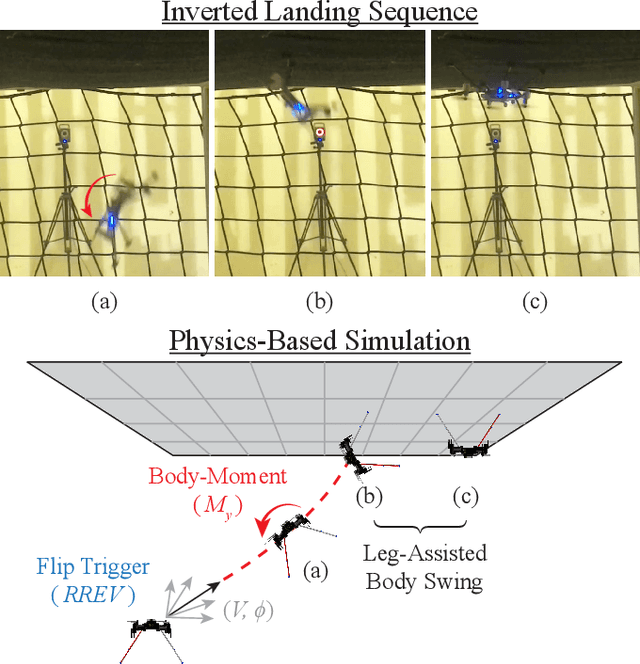

Abstract: Inverted landing in a rapid and robust manner is a challenging feat for aerial robots, especially when they depend entirely on onboard sensing and computation. Nevertheless, this feat is routinely performed by biological fliers such as bats, flies, and bees. Our previous work has identified a direct causal connection between a series of onboard visual cues and kinematic actions that allow for reliable execution of this challenging aerobatic maneuver in small aerial robots. In this work, we first utilized Deep Reinforcement Learning and a physics-based simulation to obtain a general, optimal control policy for robust inverted landing starting from any arbitrary approach condition. This optimized control policy provides a computationally efficient mapping from the system's observational space to its motor command action space, including both triggering and control of rotational maneuvers. This was done by training the system over a large range of approach flight velocities that varied in magnitude and direction. Next, we performed a sim-to-real transfer and experimental validation of the learned policy via domain randomization, by varying the robot's inertial parameters in the simulation. Through experimental trials, we identified several dominant factors that greatly improved landing robustness, as well as the primary mechanisms that determined inverted landing success. We expect that the learning framework developed in this study can be generalized to solve more challenging tasks, such as utilizing noisy onboard sensory data, landing on surfaces of various orientations, or landing on dynamically moving surfaces.
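Domain randomization over inertial parameters, as mentioned in this abstract, can be sketched in a few lines. The nominal values, the uniform distribution, and the ±10% spread below are all hypothetical; the abstract does not report the ranges or distributions actually used.

```python
import random
from typing import Dict, Optional


def randomize_inertial(
    nominal: Dict[str, float],
    spread: float = 0.10,
    rng: Optional[random.Random] = None,
) -> Dict[str, float]:
    """Scale each nominal parameter by a uniform factor in [1 - spread, 1 + spread].

    Assumption: independent uniform perturbations; a real setup might use
    other distributions or correlated parameters.
    """
    if not 0.0 <= spread < 1.0:
        raise ValueError("spread must be in [0, 1)")
    rng = rng or random.Random()
    return {
        name: value * rng.uniform(1.0 - spread, 1.0 + spread)
        for name, value in nominal.items()
    }


# Hypothetical nominal parameters for a small quadcopter.
NOMINAL = {
    "mass_kg": 0.035,
    "ixx_kg_m2": 1.6e-5,
    "iyy_kg_m2": 1.6e-5,
    "izz_kg_m2": 2.9e-5,
}

# Draw a fresh parameter set at the start of each simulated episode.
rng = random.Random(0)
episodes = [randomize_inertial(NOMINAL, spread=0.10, rng=rng) for _ in range(5)]
```

Training across such perturbed parameter sets encourages a policy that does not rely on one exact mass or inertia value, which is the motivation for using it in sim-to-real transfer.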

Optimal Inverted Landing in a Small Aerial Robot with Varied Approach Velocities and Landing Gear Designs

Nov 05, 2021

Abstract: Inverted landing is a challenging feat to perform in aerial robots, especially without external positioning. However, it is routinely performed by biological fliers such as bees, flies, and bats. Our previous observations of landing behaviors in flies suggest an open-loop causal relationship between their putative visual cues and the kinematics of the aerial maneuvers executed. For example, the degree of rotational maneuver (and therefore the body inversion prior to touchdown) and the amount of leg-assisted body swing both depend on the flies' initial body states while approaching the ceiling. In this work, by using a physics-based simulation with experimental validation, we systematically investigated how optimized inverted landing maneuvers depend on initial approach velocities of varied magnitude and direction. This was done by analyzing the putative visual cues (which can be derived from onboard measurements) during optimal maneuvering trajectories. We identified a three-dimensional policy region, from which a mapping to a global inverted landing policy can be developed without the use of external positioning data. We also investigated the effects of an array of landing gear designs on the optimized landing performance and identified their advantages and disadvantages. These results have been partially validated through limited experimental testing and will continue to inform and guide our future experiments, for example by applying the calculated global policy.
