Bradley Nelson

Topological Interpretations of GPT-3

Aug 08, 2023Abstract:This is an experiential study of investigating a consistent method for deriving the correlation between sentence vector and semantic meaning of a sentence. We first used three state-of-the-art word/sentence embedding methods including GPT-3, Word2Vec, and Sentence-BERT, to embed plain text sentence strings into high dimensional spaces. Then we compute the pairwise distance between any possible combination of two sentence vectors in an embedding space and map them into a matrix. Based on each distance matrix, we compute the correlation of distances of a sentence vector with respect to the other sentence vectors in an embedding space. Then we compute the correlation of each pair of the distance matrices. We observed correlations of the same sentence in different embedding spaces and correlations of different sentences in the same embedding space. These observations are consistent with our hypothesis and take us to the next stage.

Sparse canonical correlation analysis

Jun 02, 2017

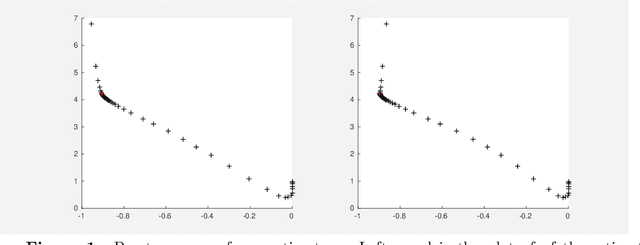

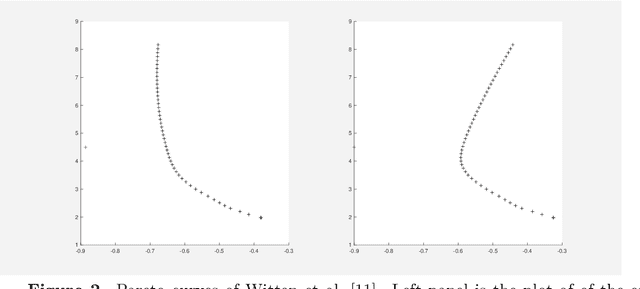

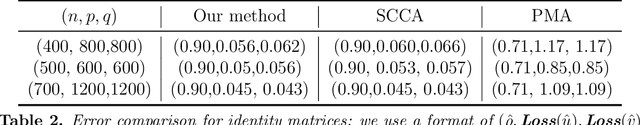

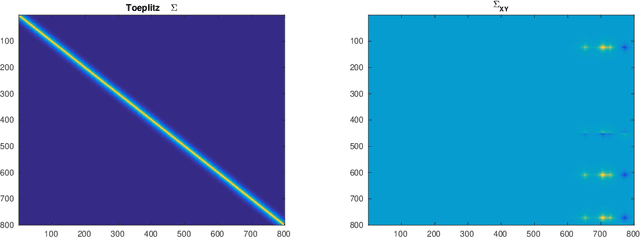

Abstract:Canonical correlation analysis was proposed by Hotelling [6] and it measures linear relationship between two multidimensional variables. In high dimensional setting, the classical canonical correlation analysis breaks down. We propose a sparse canonical correlation analysis by adding l1 constraints on the canonical vectors and show how to solve it efficiently using linearized alternating direction method of multipliers (ADMM) and using TFOCS as a black box. We illustrate this idea on simulated data.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge