Bettina Hohberger

Department of Ophthalmology, Universitätsklinikum Erlangen

Multi-modal Retinal Image Registration Using a Keypoint-Based Vessel Structure Aligning Network

Jul 21, 2022

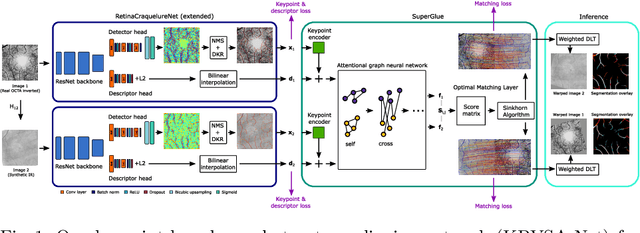

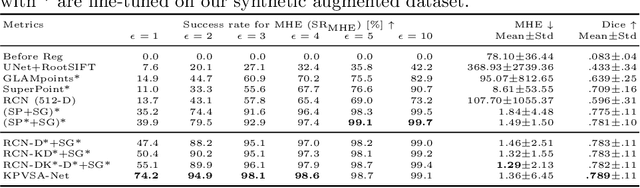

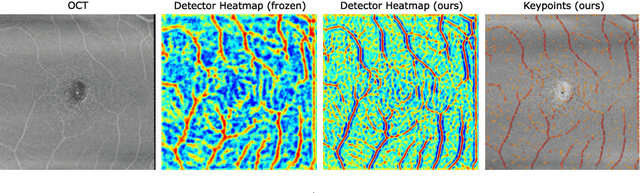

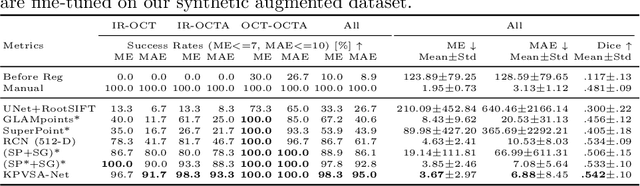

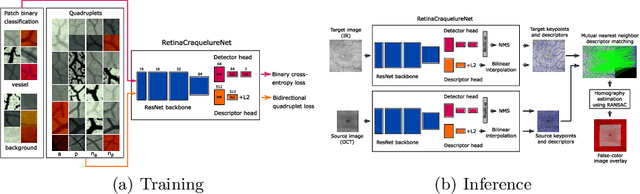

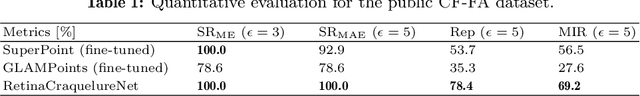

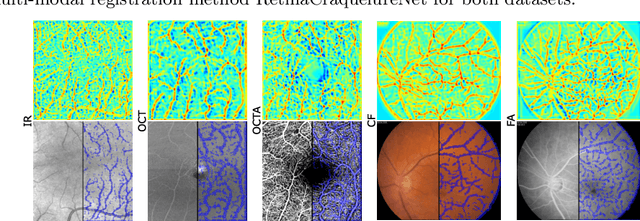

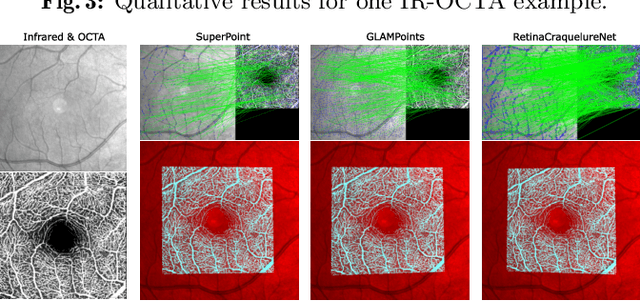

Abstract: In ophthalmological imaging, multiple imaging systems, such as color fundus, infrared, fluorescein angiography, optical coherence tomography (OCT) or OCT angiography, are often used together to diagnose retinal disease. Multi-modal retinal registration techniques can assist ophthalmologists by providing a pixel-based comparison of aligned vessel structures in images from different modalities or acquisition times. To this end, we propose an end-to-end trainable deep learning method for multi-modal retinal image registration. Our method extracts convolutional features from the vessel structure for keypoint detection and description and uses a graph neural network for feature matching. The keypoint detection and description network and the graph neural network are jointly trained in a self-supervised manner using synthetic multi-modal image pairs and are guided by synthetically sampled ground truth homographies. Our method demonstrates higher registration accuracy than competing methods on our synthetic retinal dataset and generalizes well to our real macula dataset and a public fundus dataset.
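To make the idea of "synthetically sampled ground truth homographies" concrete, here is a minimal sketch in Python with NumPy. It assumes one common sampling scheme (randomly shifting the four image corners and solving for the homography with the direct linear transform); the abstract does not state which scheme the authors use, and the function names `homography_from_points`, `sample_random_homography`, and `warp_points` as well as the `max_shift` parameter are hypothetical.

```python
import numpy as np


def homography_from_points(src, dst):
    """Direct linear transform: find H (3x3) with dst ~ H @ src from point pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.asarray(rows, dtype=float)
    # The homography is the null vector of A (last right-singular vector).
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def sample_random_homography(height, width, max_shift, rng):
    """Assumed scheme: shift each image corner by up to max_shift pixels."""
    src = np.array(
        [[0, 0], [width - 1, 0], [width - 1, height - 1], [0, height - 1]],
        dtype=float,
    )
    dst = src + rng.uniform(-max_shift, max_shift, size=(4, 2))
    return homography_from_points(src, dst), src, dst


def warp_points(points, H):
    """Apply H to an (N, 2) array of pixel coordinates."""
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    warped = homogeneous @ H.T
    return warped[:, :2] / warped[:, 2:3]


rng = np.random.default_rng(0)
H, src, dst = sample_random_homography(64, 64, max_shift=8.0, rng=rng)
# Keypoints in the first image and their known positions in the warped image.
keypoints = np.array([[10.0, 12.0], [40.0, 33.0]])
warped_keypoints = warp_points(keypoints, H)
```

Because the homography is sampled rather than estimated, the warped keypoint positions are known exactly, which is what allows such positions to serve as supervision without manual annotation.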

A Keypoint Detection and Description Network Based on the Vessel Structure for Multi-Modal Retinal Image Registration

Jan 06, 2022

Abstract: Ophthalmological imaging utilizes different imaging systems, such as color fundus, infrared, fluorescein angiography, optical coherence tomography (OCT) or OCT angiography. Multiple images with different modalities or acquisition times are often analyzed for the diagnosis of retinal diseases. Automatically aligning the vessel structures in the images by means of multi-modal registration can support ophthalmologists in their work. Our method uses a convolutional neural network to extract features of the vessel structure in multi-modal retinal images. We jointly train a keypoint detection and description network on small patches using a classification loss and a cross-modal descriptor loss, and apply the network to full-size images in the test phase. Our method demonstrates the best registration performance on our own dataset and a public multi-modal dataset in comparison to competing methods.
