Benoît Collins

Holdout cross-validation for large non-Gaussian covariance matrix estimation using Weingarten calculus

Sep 17, 2025

Abstract:Cross-validation is one of the most widely used methods for model selection and evaluation; its efficiency for large covariance matrix estimation appears robust in practice, but little is known about the theoretical behavior of its error. In this paper, we derive the expected Frobenius error of the holdout method, a particular cross-validation procedure that involves a single train and test split, for a generic rotationally invariant multiplicative noise model, thereby extending previous results to non-Gaussian data distributions. Our approach uses the Weingarten calculus and the Ledoit-Péché formula to derive the oracle eigenvalues in the high-dimensional limit. When the population covariance matrix follows an inverse Wishart distribution, we approximate the expected holdout error, first with a linear shrinkage, then with a quadratic shrinkage to approximate the oracle eigenvalues. Under the linear approximation, we find that the optimal train-test split ratio is proportional to the square root of the matrix dimension. We then run Monte Carlo simulations of the holdout error for different distributions of the norm of the noise, such as the Gaussian, Student, and Laplace distributions, and observe that the quadratic approximation yields a substantial improvement, especially around the optimal train-test split ratio. We also observe that a higher fourth-order moment of the Euclidean norm of the noise vector sharpens the holdout error curve near the optimal split and lowers the ideal train-test ratio, making the choice of the train-test ratio more important when performing the holdout method.
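
The holdout procedure described in the abstract can be illustrated with a minimal sketch. The abstract does not spell out the estimator, so this assumes a common holdout construction: eigenvectors are taken from the training split and eigenvalues are re-estimated on the test split. The function names, the toy diagonal population covariance, and the Gaussian samples are all hypothetical choices for illustration; the paper itself treats a more general rotationally invariant noise model.

```python
import numpy as np

def holdout_covariance(X, n_train):
    """Holdout estimate: train-split eigenvectors, test-split eigenvalues (assumed construction)."""
    X_train, X_test = X[:n_train], X[n_train:]
    # Sample covariances; the data are assumed centered, so no mean is subtracted.
    S_train = X_train.T @ X_train / X_train.shape[0]
    S_test = X_test.T @ X_test / X_test.shape[0]
    # Eigenvectors come from the training split only.
    _, U = np.linalg.eigh(S_train)
    # Eigenvalues are re-estimated out of sample: xi_i = u_i^T S_test u_i.
    xi = np.einsum("ji,jk,ki->i", U, S_test, U)
    return (U * xi) @ U.T

def frobenius_error(estimate, truth):
    """Squared Frobenius distance, normalized by the dimension."""
    return np.linalg.norm(estimate - truth, "fro") ** 2 / truth.shape[0]

rng = np.random.default_rng(0)
p, n = 20, 200
sigma = np.diag(np.linspace(0.5, 2.0, p))          # toy population covariance (assumption)
X = rng.standard_normal((n, p)) @ np.sqrt(sigma)   # Gaussian samples with covariance sigma
estimate = holdout_covariance(X, n_train=150)
error = frobenius_error(estimate, sigma)
```

Repeating the last two lines over a range of `n_train` values, and averaging over many draws of `X`, gives an empirical holdout error curve as a function of the train-test split.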

Free Random Projection for In-Context Reinforcement Learning

Apr 09, 2025

Abstract:Hierarchical inductive biases are hypothesized to promote generalizable policies in reinforcement learning, as demonstrated by explicit hyperbolic latent representations and architectures. Therefore, a more flexible approach is to have these biases emerge naturally from the algorithm. We introduce Free Random Projection, an input mapping grounded in free probability theory that constructs random orthogonal matrices where hierarchical structure arises inherently. The free random projection integrates seamlessly into existing in-context reinforcement learning frameworks by encoding hierarchical organization within the input space without requiring explicit architectural modifications. Empirical results on multi-environment benchmarks show that free random projection consistently outperforms the standard random projection, leading to improvements in generalization. Furthermore, analyses within linearly solvable Markov decision processes and investigations of the spectrum of kernel random matrices reveal the theoretical underpinnings of free random projection's enhanced performance, highlighting its capacity for effective adaptation in hierarchically structured state spaces.

Universal consistency of the $k$-NN rule in metric spaces and Nagata dimension

Feb 28, 2020

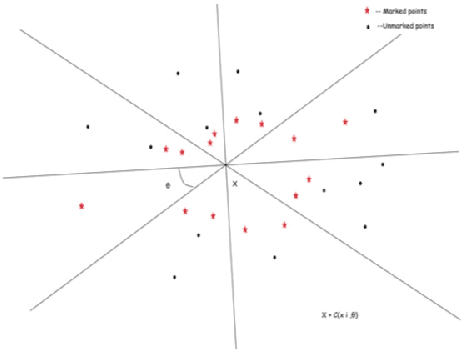

Abstract:The $k$ nearest neighbour learning rule (under the uniform distance tie breaking) is universally consistent in every metric space $X$ that is sigma-finite dimensional in the sense of Nagata. This was pointed out by Cérou and Guyader (2006) as a consequence of the main result by those authors, combined with a theorem in real analysis sketched by D. Preiss (1971) (and elaborated in detail by Assouad and Quentin de Gromard (2006)). We show that it is possible to give a direct proof along the same lines as the original theorem of Charles J. Stone (1977) about the universal consistency of the $k$-NN classifier in the finite dimensional Euclidean space. The generalization is non-trivial because distance ties are more prevalent in the non-Euclidean setting, and on the way we investigate the relevant geometric properties of the metrics and the limitations of the Stone argument, by constructing various examples.
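
As a rough illustration of the $k$-NN rule with uniform distance tie breaking, the sketch below shuffles the sample indices before a stable sort, so that points at equal distance from the query appear in uniformly random order. This is one way to realize the tie-breaking and is an assumption, not necessarily the construction used in the paper. The function name `knn_predict` and the toy data are hypothetical, and the Euclidean norm stands in for a general metric.

```python
import numpy as np

def knn_predict(X_train, y_train, x, k, rng):
    """Majority vote among the k nearest neighbours, with distance ties broken uniformly at random."""
    # Euclidean distance is a stand-in here; any metric could be substituted.
    dists = np.linalg.norm(X_train - x, axis=1)
    # Shuffle first, then stable-sort by distance: tied points keep their shuffled (random) order.
    perm = rng.permutation(len(dists))
    order = perm[np.argsort(dists[perm], kind="stable")]
    neighbours = order[:k]
    # Majority vote; a tie in the vote itself goes to the smallest label (argmax convention).
    votes = np.bincount(y_train[neighbours])
    return int(np.argmax(votes))

rng = np.random.default_rng(0)
X_train = np.array([[0.0], [1.0], [1.0], [3.0]])
y_train = np.array([0, 1, 1, 0])
label_at_one = knn_predict(X_train, y_train, np.array([1.0]), k=2, rng=rng)
label_at_zero = knn_predict(X_train, y_train, np.array([0.0]), k=1, rng=rng)
label_at_two = knn_predict(X_train, y_train, np.array([2.0]), k=3, rng=rng)
```

In the last query, three training points sit at the same distance from the query, which is the kind of tie the abstract notes becomes more prevalent outside the Euclidean setting.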
