Benny Drescher

Explainable AI for Correct Root Cause Analysis of Product Quality in Injection Moulding

Apr 29, 2025

Abstract: If a product deviates from its desired properties in the injection moulding process, root cause analysis can be aided by models that relate the input machine settings to the output quality characteristics. The machine learning models tested for quality prediction are mostly black boxes; they give no direct explanation of their predictions, which restricts their applicability in quality control. Previously attempted explainability methods are either restricted to tree-based algorithms or do not emphasize that some explainability methods can lead to incorrect root cause identification when a product deviates from its desired properties. This study first shows that interactions among multiple input machine settings do exist in real experimental data collected according to a central composite design. Then, model-agnostic explainable AI methods are compared for the first time to show that different explainability methods indeed lead to different feature impact analyses in injection moulding. Moreover, it is shown that better feature attribution translates to correct cause identification and actionable insights for the injection moulding process. Because the methods are model agnostic, explanations are produced for both a random forest and a multilayer perceptron, as both models have a mean absolute percentage error below 0.05% on the experimental dataset.
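The claim that different explainability methods can disagree when machine settings interact can be illustrated with a toy sketch. Everything below is an assumption made for illustration and is not taken from the paper: the `model` function is a hypothetical stand-in for a trained quality model whose output is a pure interaction of two coded settings, `one_at_a_time` is a simple baseline-difference attribution, and `permutation_sensitivity` is a label-free, permutation-style score (mean absolute change in prediction when one column is shuffled).

```python
import random

def model(x):
    # Hypothetical stand-in for a trained quality model (assumption):
    # the output depends only on the interaction of settings 0 and 1.
    return x[0] * x[1]

def one_at_a_time(model, baseline, point):
    """Attribution i = output change when only feature i moves from baseline to point."""
    base_out = model(baseline)
    attributions = []
    for i in range(len(point)):
        probe = list(baseline)
        probe[i] = point[i]
        attributions.append(model(probe) - base_out)
    return attributions

def permutation_sensitivity(model, rows, seed=0):
    """Score i = mean absolute change in prediction after shuffling column i."""
    rng = random.Random(seed)
    reference = [model(r) for r in rows]
    scores = []
    for i in range(len(rows[0])):
        column = [r[i] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:i] + [v] + r[i + 1:] for r, v in zip(rows, column)]
        diffs = [abs(model(s) - ref) for s, ref in zip(shuffled, reference)]
        scores.append(sum(diffs) / len(diffs))
    return scores

# Coded levels (-1, 0, 1) for three hypothetical machine settings.
levels = (-1, 0, 1)
rows = [[a, b, c] for a in levels for b in levels for c in levels]

oat = one_at_a_time(model, baseline=[0, 0, 0], point=[1, 1, 1])
perm = permutation_sensitivity(model, rows)
print("one-at-a-time:", oat)
print("permutation-style:", perm)
```

In this toy setting the one-at-a-time attribution assigns nothing to either interacting setting, because moving one setting alone from the centre point leaves the product at zero, while the permutation-style score flags both. The unused third setting receives no weight under either method.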

Designing an LLM-Based Copilot for Manufacturing Equipment Selection

Dec 18, 2024

Abstract: Effective decision-making in automation equipment selection is critical for reducing ramp-up time and maintaining production quality, especially in the face of increasing product variation and market demands. However, limited expertise and resource constraints often result in inefficiencies during the ramp-up phase when new products are integrated into production lines. Existing methods often lack structured and tailored solutions to support automation engineers in reducing ramp-up time, leading to compromises in quality. This research investigates whether large language models (LLMs), combined with Retrieval-Augmented Generation (RAG), can assist in streamlining equipment selection in ramp-up planning. We propose a factual-driven copilot integrating LLMs with structured and semi-structured knowledge retrieval for three component types (robots, feeders and vision systems), providing a guided and traceable state-machine process for decision-making in automation equipment selection. The system was demonstrated to an industrial partner, who tested it on three internal use cases. Their feedback affirmed its capability to provide logical and actionable recommendations for automation equipment. More specifically, among 22 equipment prompts analyzed, 19 involved selecting the correct equipment while considering most requirements, and in 6 cases, all requirements were fully met.

Prompt Selection and Augmentation for Few Examples Code Generation in Large Language Model and its Application in Robotics Control

Mar 11, 2024

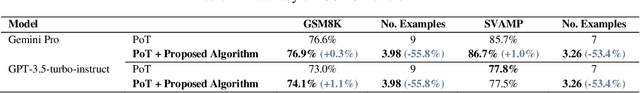

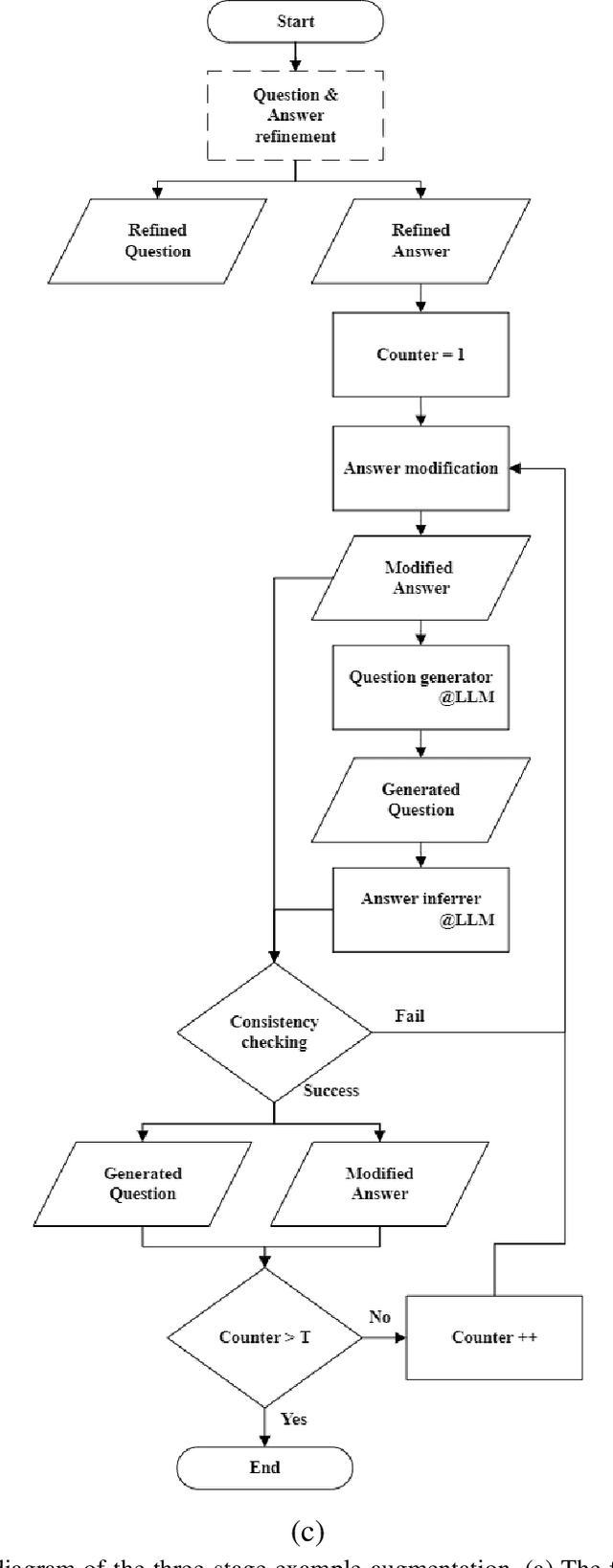

Abstract: Few-shot prompting and step-by-step reasoning have enhanced the capabilities of Large Language Models (LLMs) in tackling complex tasks, including code generation. In this paper, we introduce a prompt selection and augmentation algorithm aimed at improving mathematical reasoning and robot arm operations. Our approach incorporates a multi-stage example augmentation scheme combined with an example selection scheme. This algorithm improves LLM performance by selecting a set of examples that increases diversity, minimizes redundancy, and increases relevance to the question. When combined with Program-of-Thought prompting, our algorithm improves performance on the GSM8K and SVAMP benchmarks by 0.3% and 1.1%, respectively. Furthermore, in simulated tabletop environments, our algorithm surpasses the Code-as-Policies approach, achieving a 3.4% increase in successful task completions while using over 70% fewer examples. Its ability to discard examples that contribute little to solving the problem reduces the inference time of an LLM-powered robotics system. This algorithm also offers important benefits for industrial process automation by streamlining the development and deployment process, reducing manual programming effort, and enhancing code reusability.
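The idea of choosing few-shot examples that are relevant to the question while avoiding redundancy can be sketched with a greedy, maximal-marginal-relevance style selection. This is not the paper's algorithm, which the abstract does not specify; the token-overlap similarity, the function names, and the `trade_off` parameter are all assumptions chosen to keep the sketch self-contained.

```python
def tokens(text):
    # Crude tokenisation (assumption): lowercase, whitespace-separated words.
    return set(text.lower().split())

def jaccard(a, b):
    """Token-set overlap between two strings, in [0, 1]."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0

def select_examples(question, pool, k, trade_off=0.5):
    """Greedily pick up to k examples, rewarding relevance to the question
    and penalising similarity to examples that were already picked."""
    selected = []
    candidates = list(pool)
    while candidates and len(selected) < k:
        def score(candidate):
            relevance = jaccard(question, candidate)
            redundancy = max((jaccard(candidate, s) for s in selected), default=0.0)
            return trade_off * relevance - (1 - trade_off) * redundancy
        best = max(candidates, key=score)
        selected.append(best)
        candidates.remove(best)
    return selected

# Hypothetical example pool containing an exact duplicate.
pool = [
    "move the red block to the left",
    "move the red block to the left",
    "stack the blue block on the green bowl",
]
chosen = select_examples("move the red block to the right", pool, k=2)
print(chosen)
```

With this pool, the second pick skips the exact duplicate in favour of the less similar stacking example, which is the kind of pruning that lets a prompt carry fewer examples.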
