Ben Weinstein

Classifying geospatial objects from multiview aerial imagery using semantic meshes

May 15, 2024

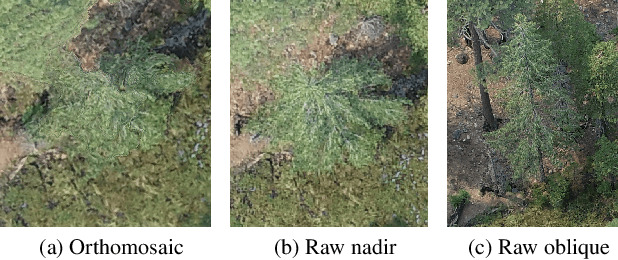

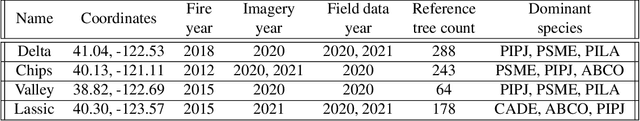

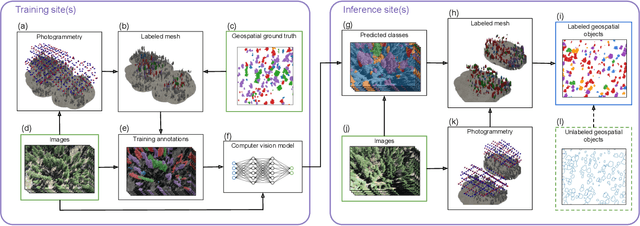

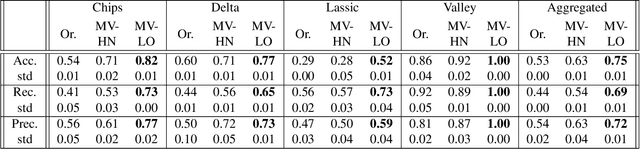

Abstract: Aerial imagery is increasingly used in Earth science and natural resource management as a complement to labor-intensive ground-based surveys. Aerial systems can collect overlapping images that provide multiple views of each location from different perspectives. However, most prediction approaches (e.g., for tree species classification) use a single, synthesized top-down "orthomosaic" image as input that contains little to no information about the vertical aspects of objects and may include processing artifacts. We propose an alternate approach that generates predictions directly on the raw images and accurately maps these predictions into geospatial coordinates using semantic meshes. This method, released as a user-friendly open-source toolkit, enables analysts to use the highest quality data for predictions, capture information about the sides of objects, and leverage multiple viewpoints of each location for added robustness. We demonstrate the value of this approach on a new benchmark dataset of four forest sites in the western U.S. that consists of drone images, photogrammetry results, predicted tree locations, and species classification data derived from manual surveys. We show that our proposed multiview method improves classification accuracy from 53% to 75% relative to an orthomosaic baseline on a challenging cross-site tree species classification task.
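The abstract does not say how predictions from different viewpoints are combined. As a rough illustration of why multiple views can add robustness, the sketch below assumes that each tree already has one predicted class label per raw image in which it is visible, and combines them by simple majority vote. The function name `aggregate_views`, the input layout, and the class labels are all hypothetical and are not taken from the released toolkit.

```python
from collections import Counter


def aggregate_views(view_predictions):
    """Combine per-image class labels into one label per tree by majority vote.

    view_predictions: dict mapping a tree ID to a list of class labels,
    one label per raw image in which that tree was seen (assumed layout).
    Trees with no observations are skipped.
    """
    result = {}
    for tree_id, labels in view_predictions.items():
        if not labels:
            continue  # no view saw this tree, so there is nothing to vote on
        counts = Counter(labels)
        # most_common(1) returns the label with the highest count;
        # ties are resolved in favor of the label seen first.
        result[tree_id] = counts.most_common(1)[0][0]
    return result


# Hypothetical labels: one mistaken view is outvoted by the other two.
votes = {
    "tree_1": ["pine", "fir", "pine"],
    "tree_2": ["cedar"],
    "tree_3": [],
}
combined = aggregate_views(votes)
```

In this toy input, a single wrong view of `tree_1` does not change its final label, which is the intuition behind using several viewpoints rather than one synthesized image.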

Addressing Annotation Imprecision for Tree Crown Delineation Using the RandCrowns Index

May 05, 2021

Abstract: Supervised methods for object delineation in remote sensing require labeled ground-truth data. Gathering sufficient high quality ground-truth data is difficult, especially when the targets are of irregular shape or difficult to distinguish from the background or neighboring objects. Tree crown delineation provides key information from remote sensing images for forestry, ecology, and management. However, tree crowns in remote sensing imagery are often difficult to label and annotate due to irregular shape, overlapping canopies, shadowing, and indistinct edges. There are also multiple approaches to annotation in this field (e.g., rectangular boxes vs. convex polygons) that further contribute to annotation imprecision. However, current evaluation methods do not account for this uncertainty in annotations, and quantitative metrics for evaluation can vary across multiple annotators. We address these limitations using an adaptation of the Rand index for weakly-labeled crown delineation that we call RandCrowns. The RandCrowns metric reformulates the Rand index by adjusting the areas over which each term of the index is computed to account for uncertain and imprecise object delineation labels. Quantitative comparisons to the commonly used intersection over union (Jaccard similarity) method show a decrease in the variance generated by differences among multiple annotators. Combined with qualitative examples, our results suggest that this RandCrowns metric is more robust for scoring target delineations in the presence of uncertainty and imprecision in annotations that are inherent to tree crown delineation. Although the focus of this paper is on evaluation of tree crown delineations, annotation imprecision is a challenge that is common across remote sensing of the environment (and many computer vision problems in general).
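For readers unfamiliar with the two baseline quantities named in the abstract, the sketch below computes intersection over union and the standard (unadjusted) Rand index for a pair of binary crown masks. It is not an implementation of RandCrowns: the abstract only states that RandCrowns adjusts the areas over which each Rand index term is computed, and those adjustments are not reproduced here. The function names and the toy masks are illustrative assumptions.

```python
import numpy as np


def iou(mask_a, mask_b):
    """Intersection over union (Jaccard similarity) of two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    union = int(np.logical_or(a, b).sum())
    if union == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return int(np.logical_and(a, b).sum()) / union


def rand_index(mask_a, mask_b):
    """Standard Rand index over pixel pairs for two binary labelings.

    A pixel pair counts as an agreement when both masks place the two
    pixels in the same class, or both place them in different classes.
    """
    a = np.asarray(mask_a, dtype=bool).ravel()
    b = np.asarray(mask_b, dtype=bool).ravel()

    def pairs(k):
        # number of unordered pairs among k items
        return k * (k - 1) // 2

    # 2x2 contingency table of pixel counts
    n11 = int((a & b).sum())
    n10 = int((a & ~b).sum())
    n01 = int((~a & b).sum())
    n00 = int((~a & ~b).sum())

    total = pairs(a.size)
    if total == 0:
        return 1.0  # fewer than two pixels: no pairs to disagree on
    same_both = pairs(n11) + pairs(n10) + pairs(n01) + pairs(n00)
    same_a = pairs(n11 + n10) + pairs(n01 + n00)
    same_b = pairs(n11 + n01) + pairs(n10 + n00)
    agreements = total + 2 * same_both - same_a - same_b
    return agreements / total


# Toy example: two annotators disagree on one of four pixels.
annotator_1 = [1, 1, 0, 0]
annotator_2 = [1, 0, 0, 0]
iou_score = iou(annotator_1, annotator_2)          # 1 shared pixel / 2 in union
rand_score = rand_index(annotator_1, annotator_2)  # 3 agreeing pairs / 6 pairs
```

Both scores treat every pixel as equally certain, which is the property the abstract identifies as problematic when crown edges are indistinct and annotators differ.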
