Ben Ward

Neural network interpretability with layer-wise relevance propagation: novel techniques for neuron selection and visualization

Dec 07, 2024

Abstract: Interpreting complex neural networks is crucial for understanding their decision-making processes, particularly in applications where transparency and accountability are essential. The proposed method addresses this need by focusing on Layer-wise Relevance Propagation (LRP), a technique used in explainable artificial intelligence (XAI) to attribute neural network outputs to input features through backpropagated relevance scores. Existing LRP methods often struggle with precision in evaluating individual neuron contributions. To overcome this limitation, we present a novel approach that improves the parsing of selected neurons during LRP backward propagation, using the Visual Geometry Group 16 (VGG16) architecture as a case study. Our method creates neural network graphs to highlight critical paths and visualizes these paths with heatmaps, optimizing neuron selection through error metrics such as Mean Squared Error (MSE) and Symmetric Mean Absolute Percentage Error (SMAPE). Additionally, we use a deconvolutional visualization technique to reconstruct feature maps, offering a comprehensive view of the network's inner workings. Extensive experiments demonstrate that our approach enhances interpretability and supports the development of more transparent artificial intelligence (AI) systems for computer vision applications. This advancement has the potential to improve the trustworthiness of AI models in real-world machine vision applications, thereby increasing their reliability and effectiveness.
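
The abstract above names MSE and SMAPE as the metrics used to guide neuron selection but does not say which SMAPE variant is used or which quantities are compared. As a rough illustration only, the sketch below assumes two flattened relevance maps of equal length and the common SMAPE form with the mean of absolute values in the denominator; the function names and the zero-denominator handling are assumptions, not the authors' implementation.

```python
def mse(reference, estimate):
    """Mean squared error between two equal-length sequences."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)


def smape(reference, estimate):
    """Symmetric mean absolute percentage error, in percent (range 0 to 200).

    Assumed variant: |e - r| / ((|r| + |e|) / 2), averaged over all elements.
    Elements where both values are zero contribute 0 (an assumption).
    """
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for r, e in zip(reference, estimate):
        denom = (abs(r) + abs(e)) / 2
        if denom:
            total += abs(e - r) / denom
    return 100.0 * total / len(reference)


# Hypothetical data: relevance from the full network vs. from a neuron subset.
full_relevance = [0.50, 0.25, 0.25, 0.00]
subset_relevance = [0.50, 0.30, 0.20, 0.00]
print(mse(full_relevance, subset_relevance))
print(smape(full_relevance, subset_relevance))
```

Under these assumptions, a lower value on either metric would indicate that the selected neurons reproduce the full relevance map more closely.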

Exploring AI Text Generation, Retrieval-Augmented Generation, and Detection Technologies: a Comprehensive Overview

Dec 05, 2024

Abstract: The rapid development of Artificial Intelligence (AI) has led to the creation of powerful text generation models, such as large language models (LLMs), which are widely used for diverse applications. However, concerns surrounding AI-generated content, including issues of originality, bias, misinformation, and accountability, have become increasingly prominent. This paper offers a comprehensive overview of AI text generators (AITGs), focusing on their evolution, capabilities, and ethical implications. It also introduces Retrieval-Augmented Generation (RAG), a recent approach that improves the contextual relevance and accuracy of text generation by integrating dynamic information retrieval. RAG addresses key limitations of traditional models, including their reliance on static knowledge and potential inaccuracies in handling real-world data. Additionally, the paper reviews detection tools that help differentiate AI-generated text from human-written content and discusses the ethical challenges these technologies pose. It then explores future directions for improving detection accuracy, supporting ethical AI development, and increasing accessibility. Through these discussions, the paper contributes to a more responsible and reliable use of AI in content creation.

Analyzing the Impact of AI Tools on Student Study Habits and Academic Performance

Dec 03, 2024

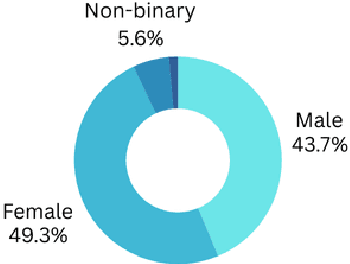

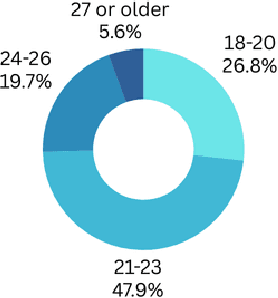

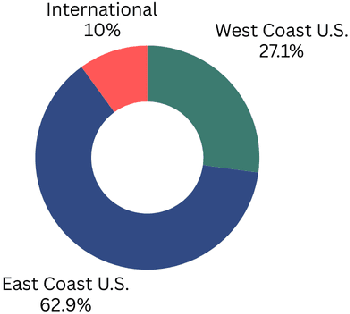

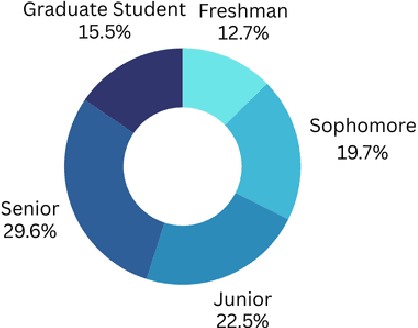

Abstract: This study explores the effectiveness of AI tools in enhancing student learning, specifically in improving study habits, time management, and feedback mechanisms. The research focuses on how AI tools can support personalized learning and adaptive test adjustments and provide real-time classroom analysis. Student feedback revealed strong support for these features, and the study found a significant reduction in study hours alongside an increase in GPA, suggesting positive academic outcomes. Despite these benefits, challenges such as over-reliance on AI and difficulties in integrating AI with traditional teaching methods were also identified, emphasizing the need for AI tools to complement conventional educational strategies rather than replace them. Data were collected through a Likert-scale survey and follow-up interviews, providing both quantitative and qualitative insights. The analysis involved descriptive statistics to summarize demographic data, AI usage patterns, and perceived effectiveness, as well as inferential statistics (t-tests, ANOVA) to examine the impact of demographic factors on AI adoption. Regression analysis identified predictors of AI adoption, and qualitative responses were thematically analyzed to understand students' perspectives on the future of AI in education. This mixed-methods approach provided a comprehensive view of AI's role in education and highlighted the importance of privacy, transparency, and continuous refinement of AI features to maximize their educational benefits.

A model-based approach to recovering the structure of a plant from images

Mar 12, 2015

Abstract: We present a method for recovering the structure of a plant directly from a small set of widely-spaced images. Structure recovery is more complex than shape estimation, but the resulting structure estimate is more closely related to phenotype than is a 3D geometric model. The method we propose is applicable to a wide variety of plants, but is demonstrated on wheat. Wheat is made up of thin elements with few identifiable features, making it difficult to analyse using standard feature matching techniques. Our method instead analyses the structure of plants using only their silhouettes. We employ a generate-and-test method, using a database of manually modelled leaves and a model for their composition to synthesise plausible plant structures which are evaluated against the images. The method is capable of efficiently recovering accurate estimates of plant structure in a wide variety of imaging scenarios, with no manual intervention.
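
The generate-and-test idea described in the abstract above can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's method: silhouettes are taken to be small binary grids from a single view, candidates are supplied as already-rendered silhouettes, and the score is intersection-over-union (IoU), which the abstract does not specify. The names `silhouette_iou` and `best_candidate` are hypothetical.

```python
def silhouette_iou(observed, candidate):
    """Intersection-over-union of two binary silhouettes (lists of 0/1 rows)."""
    intersection = 0
    union = 0
    for row_obs, row_cand in zip(observed, candidate):
        for p_obs, p_cand in zip(row_obs, row_cand):
            if p_obs and p_cand:
                intersection += 1
            if p_obs or p_cand:
                union += 1
    # Two empty silhouettes are treated as a perfect match (an assumption).
    return intersection / union if union else 1.0


def best_candidate(observed, candidates):
    """Test step: return the candidate silhouette that best matches the image."""
    return max(candidates, key=lambda c: silhouette_iou(observed, c))


# Hypothetical 3x3 silhouettes standing in for rendered plant hypotheses.
observed = [[0, 1, 0],
            [0, 1, 0],
            [1, 1, 1]]
upright = [[0, 1, 0],
           [0, 1, 0],
           [0, 1, 0]]
flat = [[1, 1, 1],
        [0, 0, 0],
        [0, 0, 0]]

print(best_candidate(observed, [upright, flat]) is upright)
```

With several widely-spaced views, one plausible extension would be to combine the per-view scores (for example by averaging) before picking the best hypothesis; that choice is likewise an assumption here.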
