Ben Moews

Evaluating utility in synthetic banking microdata applications

Oct 29, 2024

Abstract: Financial regulators such as central banks collect vast amounts of data, but access to the resulting fine-grained banking microdata is severely restricted by banking secrecy laws. Recent developments have resulted in mechanisms that generate faithful synthetic data, but current evaluation frameworks lack a focus on the specific challenges of banking institutions and microdata. We develop a framework that considers the utility and privacy requirements of regulators, and apply this to financial usage indices, term deposit yield curves, and credit card transition matrices. Using the Central Bank of Paraguay's data, we provide the first implementation of synthetic banking microdata using a central bank's collected information, with the resulting synthetic datasets for all three domain applications being publicly available and featuring information not yet released in statistical disclosure. We find that applications less susceptible to post-processing information loss, which are based on frequency tables, are particularly suited for this approach, and that marginal-based inference mechanisms outperform generative adversarial network models for these applications. Our results demonstrate that synthetic data generation is a promising privacy-enhancing technology for financial regulators seeking to complement their statistical disclosure, while highlighting the crucial role of evaluating such endeavors in terms of utility and privacy requirements.
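
As an illustration of what a utility check on a frequency-table application such as a transition matrix could look like, the following is a minimal sketch and not the paper's evaluation framework. The function names, the toy state sequences, and the choice of mean row-wise total variation distance as the utility gap are all assumptions made for this example.

```python
import numpy as np


def transition_matrix(states_from, states_to, n_states):
    """Row-normalised transition matrix built from paired state observations."""
    counts = np.zeros((n_states, n_states), dtype=float)
    # Frequency table: count every observed (from, to) pair.
    np.add.at(counts, (states_from, states_to), 1.0)
    row_sums = counts.sum(axis=1, keepdims=True)
    # Rows without observations stay all-zero instead of dividing by zero.
    return np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)


def mean_row_tv_distance(p, q):
    """Mean total variation distance between corresponding rows of p and q."""
    return float(0.5 * np.abs(p - q).sum(axis=1).mean())


# Hypothetical toy data: three states, eight observed transitions each.
real_from = np.array([0, 0, 0, 0, 1, 1, 2, 2])
real_to = np.array([0, 0, 0, 1, 1, 2, 2, 2])
synth_from = np.array([0, 0, 0, 0, 1, 1, 2, 2])
synth_to = np.array([0, 0, 1, 1, 1, 2, 2, 2])

real_tm = transition_matrix(real_from, real_to, 3)
synth_tm = transition_matrix(synth_from, synth_to, 3)

# A smaller gap means the synthetic data reproduces the real matrix more closely.
gap = mean_row_tv_distance(real_tm, synth_tm)
```

Here only the first row differs between the two matrices, so the gap is driven entirely by that row; other distance measures could be substituted without changing the structure of the check.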

Physics-informed neural networks in the recreation of hydrodynamic simulations from dark matter

Mar 24, 2023

Abstract: Physics-informed neural networks have emerged as a coherent framework for building predictive models that combine statistical patterns with domain knowledge. The underlying notion is to enrich the optimization loss function with known relationships to constrain the space of possible solutions. Hydrodynamic simulations are a core constituent of modern cosmology, while the required computations are both expensive and time-consuming. At the same time, the comparatively fast simulation of dark matter requires fewer resources, which has led to the emergence of machine learning algorithms for baryon inpainting as an active area of research; here, recreating the scatter found in hydrodynamic simulations is an ongoing challenge. This paper presents the first application of physics-informed neural networks to baryon inpainting by combining advances in neural network architectures with physical constraints, injecting theory on baryon conversion efficiency into the model loss function. We also introduce a punitive prediction comparison based on the Kullback-Leibler divergence, which enforces scatter reproduction. By simultaneously extracting the complete set of baryonic properties for the Simba suite of cosmological simulations, our results demonstrate improved accuracy of baryonic predictions based on dark matter halo properties, successful recovery of the fundamental metallicity relation, and retrieval of scatter that traces the target simulation's distribution.
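
The idea of enriching a loss with a physical constraint and a Kullback-Leibler scatter penalty can be sketched roughly as follows. This is a hedged NumPy illustration, not the paper's loss: the histogram-based KL estimate, the generic `physics_residual` input, the weights `w_physics` and `w_scatter`, and the synthetic data are all assumptions introduced here, and the abstract does not specify the form of the baryon conversion efficiency term.

```python
import numpy as np


def histogram_kl(target, predicted, bins=20, eps=1e-8):
    """KL(target || predicted) between binned distributions of two samples."""
    lo = min(target.min(), predicted.min())
    hi = max(target.max(), predicted.max())
    edges = np.linspace(lo, hi, bins + 1)
    p, _ = np.histogram(target, bins=edges)
    q, _ = np.histogram(predicted, bins=edges)
    # Small constant avoids log(0) and division by zero in empty bins.
    p = (p + eps) / (p + eps).sum()
    q = (q + eps) / (q + eps).sum()
    return float(np.sum(p * np.log(p / q)))


def combined_loss(target, predicted, physics_residual, w_physics=1.0, w_scatter=1.0):
    """Data-fit term plus a physical-constraint term plus a scatter penalty."""
    mse = np.mean((target - predicted) ** 2)
    # physics_residual: deviation from a known relationship (placeholder here).
    physics = np.mean(physics_residual ** 2)
    scatter = histogram_kl(target, predicted)
    return float(mse + w_physics * physics + w_scatter * scatter)


rng = np.random.default_rng(0)
target = rng.normal(0.0, 1.0, 5000)

# Predictions with realistic scatter versus predictions collapsed to the mean.
kl_realistic = histogram_kl(target, rng.normal(0.0, 1.0, 5000))
kl_collapsed = histogram_kl(target, 0.2 * target)
```

In this toy setup the collapsed predictions are penalised far more heavily than the ones with matching scatter. Note that a hard histogram is not differentiable, so training a network with such a term would require a smooth density estimate instead.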

Hybrid analytic and machine-learned baryonic property insertion into galactic dark matter haloes

Dec 10, 2020

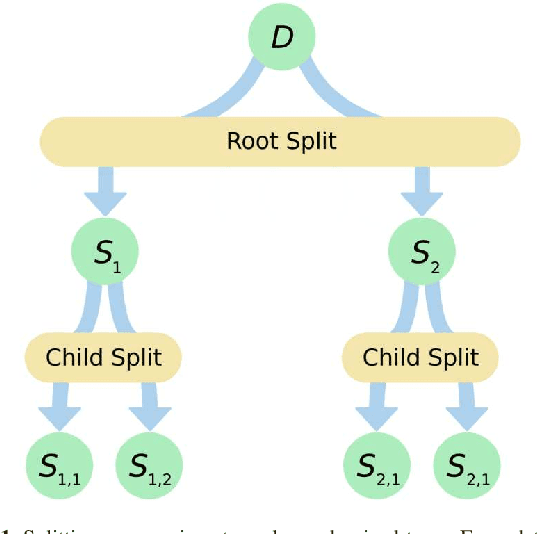

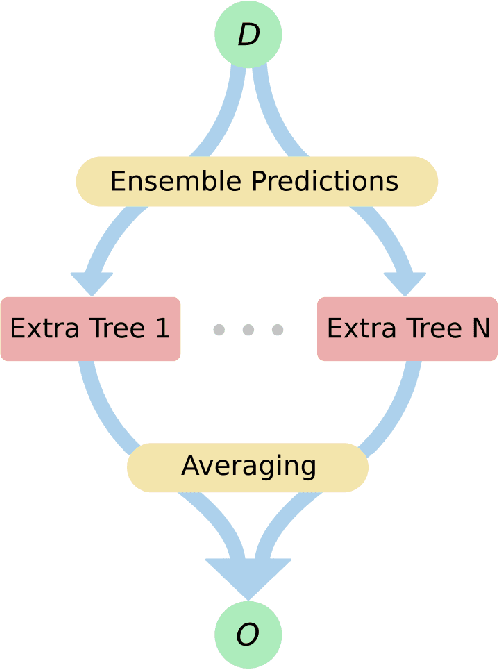

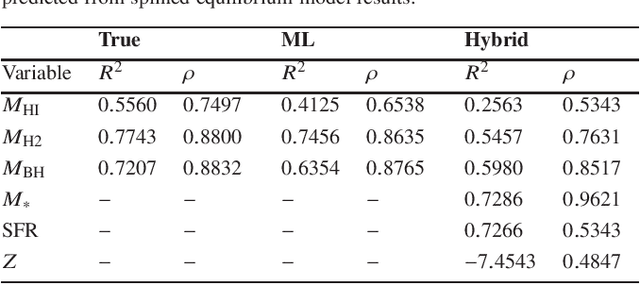

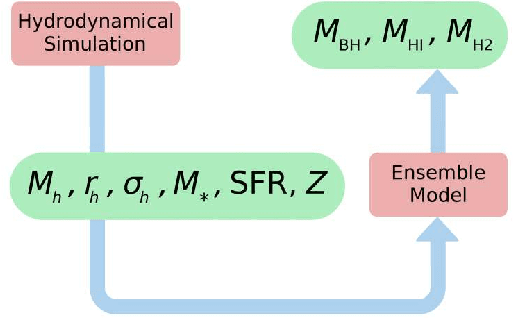

Abstract: While cosmological dark matter-only simulations relying solely on gravitational effects are comparatively fast to compute, baryonic properties in simulated galaxies require complex hydrodynamic simulations that are computationally costly to run. We explore the merging of an extended version of the equilibrium model, an analytic formalism describing the evolution of the stellar, gas, and metal content of galaxies, into a machine learning framework. In doing so, we are able to recover more properties than the analytic formalism alone can provide, creating a high-speed hydrodynamic simulation emulator that populates galactic dark matter haloes in N-body simulations with baryonic properties. While there exists a trade-off between the accuracy reached and the speed advantage this approach offers, our results outperform an approach using only machine learning for a subset of baryonic properties. We demonstrate that this novel hybrid system enables the fast completion of dark matter-only information by mimicking the properties of a full hydrodynamic suite to a reasonable degree, and discuss the advantages and disadvantages of hybrid versus machine learning-only frameworks. In doing so, we offer an acceleration of commonly deployed simulations in cosmology.

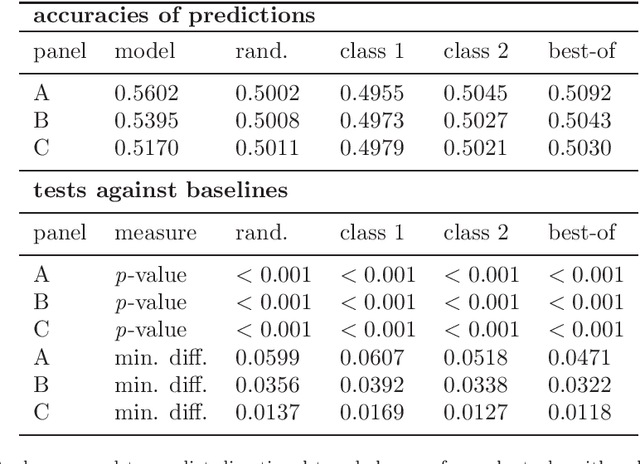

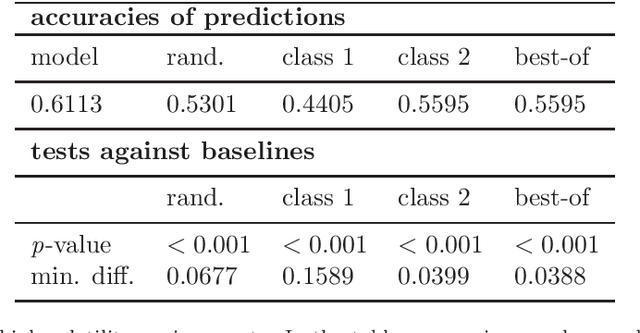

Predictive intraday correlations in stable and volatile market environments: Evidence from deep learning

Feb 24, 2020

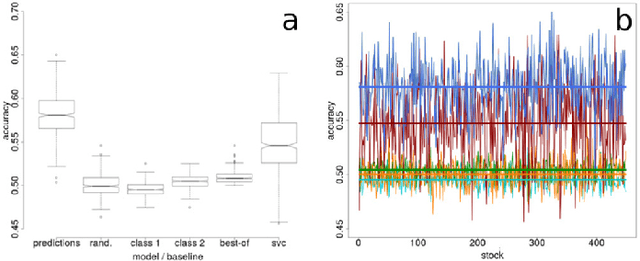

Abstract: Standard methods and theories in finance can be ill-equipped to capture highly non-linear interactions in financial prediction problems based on large-scale datasets, with deep learning offering a way to gain insights into correlations in markets as complex systems. In this paper, we apply deep learning to econometrically constructed gradients to learn and exploit lagged correlations among S&P 500 stocks to compare model behaviour in stable and volatile market environments, and under the exclusion of target stock information for predictions. In order to measure the effect of time horizons, we predict intraday and daily stock price movements in varying interval lengths and gauge the complexity of the problem at hand with a modification of our model architecture. Our findings show that accuracies, while remaining significant and demonstrating the exploitability of lagged correlations in stock markets, decrease with shorter prediction horizons. We discuss implications for modern finance theory and our work's applicability as an investigative tool for portfolio managers. Lastly, we show that our model's performance is consistent in volatile markets by exposing it to the environment of the recent financial crisis of 2007/2008.

Forging new worlds: high-resolution synthetic galaxies with chained generative adversarial networks

Dec 06, 2018

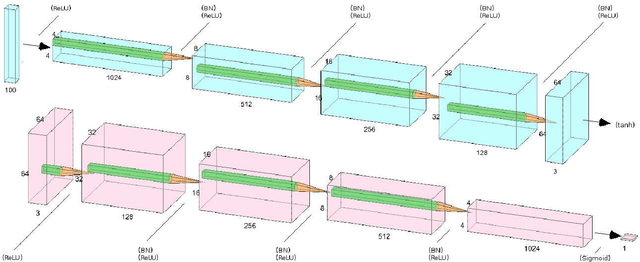

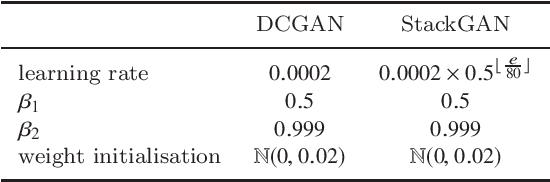

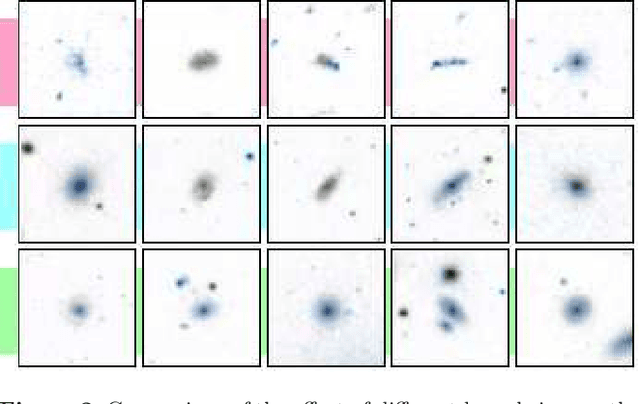

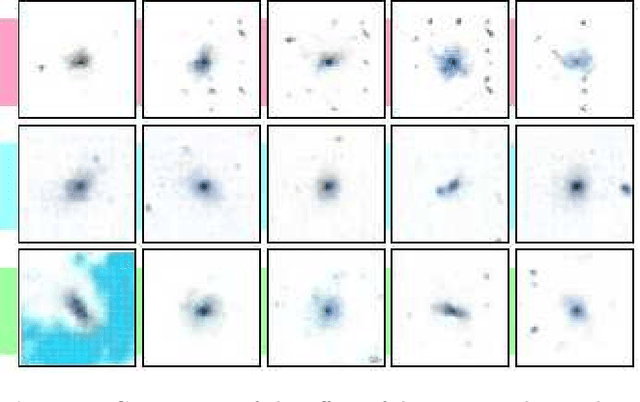

Abstract: Astronomy of the 21st century increasingly finds itself with extreme quantities of data. This growth in data is ripe for modern technologies such as deep image processing, which has the potential to allow astronomers to automatically identify, classify, segment and deblend various astronomical objects. In this paper, we explore the use of chained generative adversarial networks (GANs), a class of generative models that learn mappings from latent spaces to data distributions by modelling the joint distribution of the data, to produce physically realistic galaxy images as one use case of such models. In cosmology, such datasets can aid in the calibration of shape measurements for weak lensing by augmenting data with synthetic images. By measuring the distributions of multiple physical properties, we show that images generated with our approach closely follow the distributions of real galaxies, further establishing state-of-the-art GAN architectures as a valuable tool for modern-day astronomy.

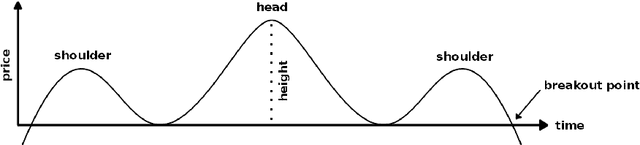

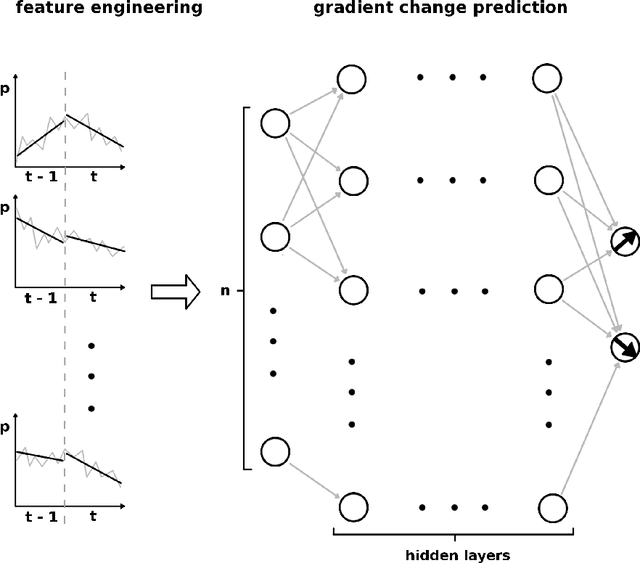

Lagged correlation-based deep learning for directional trend change prediction in financial time series

Nov 29, 2018

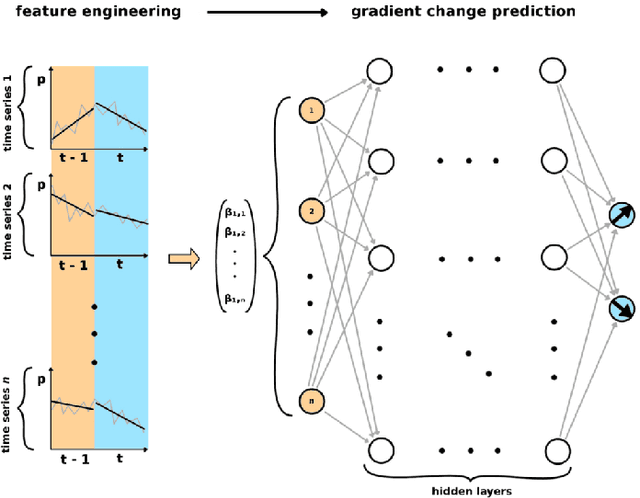

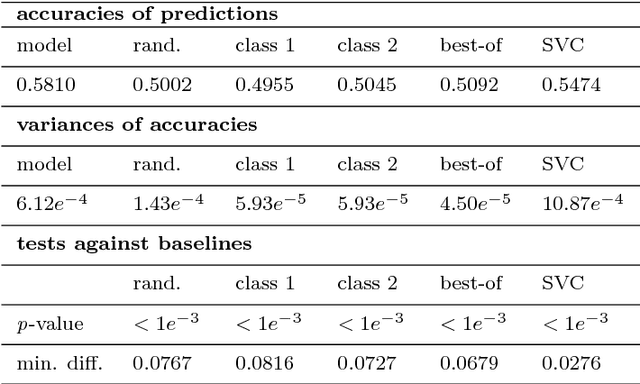

Abstract: Trend change prediction in complex systems with a large number of noisy time series is a problem with many applications for real-world phenomena, with stock markets as a notoriously difficult-to-predict example of such systems. We approach predictions of directional trend changes via complex lagged correlations between them, excluding any information about the target series from the respective inputs to achieve predictions purely based on such correlations with other series. We propose the use of deep neural networks that employ step-wise linear regressions with exponential smoothing in the preparatory feature engineering for this task, with regression slopes as trend strength indicators for a given time interval. We apply this method to historical stock market data from 2011 to 2016 as a use case example of lagged correlations between large numbers of time series that are heavily influenced by externally arising new information as a random factor. The results demonstrate the viability of the proposed approach, with state-of-the-art accuracies and accounting for the statistical significance of the results for additional validation, as well as important implications for modern financial economics.

* 11 pages, 4 figures
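
The feature engineering described in this abstract, step-wise linear regressions on exponentially smoothed series with slopes as trend strength indicators, can be sketched roughly as follows. This is an illustrative approximation only: the smoothing factor `alpha`, the interval length, the use of non-overlapping windows, and the toy price series are assumptions, not values or choices taken from the paper.

```python
import numpy as np


def exponential_smoothing(series, alpha=0.3):
    """Simple exponential smoothing; alpha is a hypothetical smoothing factor."""
    smoothed = np.empty(len(series), dtype=float)
    smoothed[0] = series[0]
    for t in range(1, len(series)):
        smoothed[t] = alpha * series[t] + (1.0 - alpha) * smoothed[t - 1]
    return smoothed


def interval_slopes(series, interval):
    """Least-squares slope of each consecutive, non-overlapping interval."""
    n_intervals = len(series) // interval
    x = np.arange(interval, dtype=float)
    slopes = np.empty(n_intervals)
    for i in range(n_intervals):
        window = series[i * interval:(i + 1) * interval]
        # polyfit returns coefficients from highest degree down; [0] is the slope.
        slopes[i] = np.polyfit(x, window, deg=1)[0]
    return slopes


# Toy price series: ten steps of increase followed by ten steps of decline.
prices = np.concatenate([np.linspace(100, 110, 10), np.linspace(110, 104, 10)])
slopes = interval_slopes(exponential_smoothing(prices), interval=10)

# The sign of each slope gives the trend direction for that interval.
trend_up = slopes > 0
```

In a setup like the one the abstract describes, slopes of this kind computed for many other series would form the network inputs, while the sign change of the target series' slope would serve as the label.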
