Forging new worlds: high-resolution synthetic galaxies with chained generative adversarial networks


Dec 06, 2018

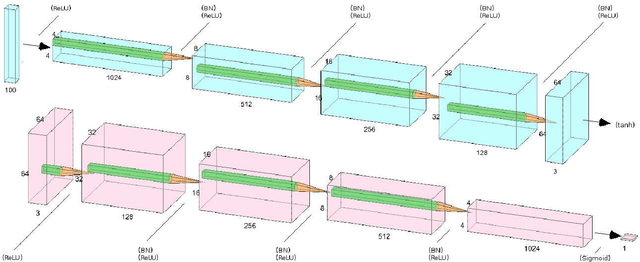

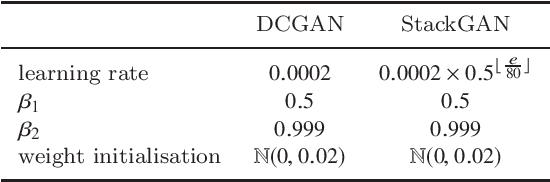

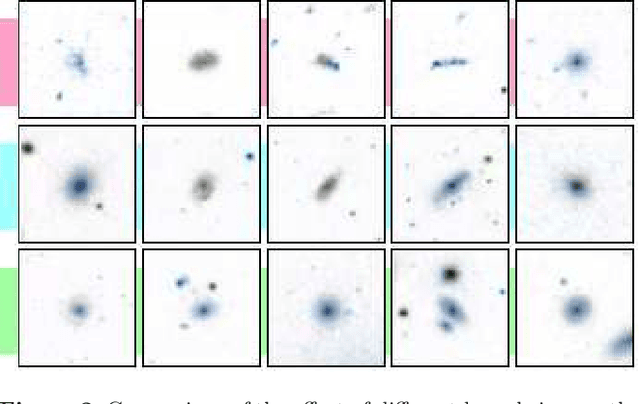

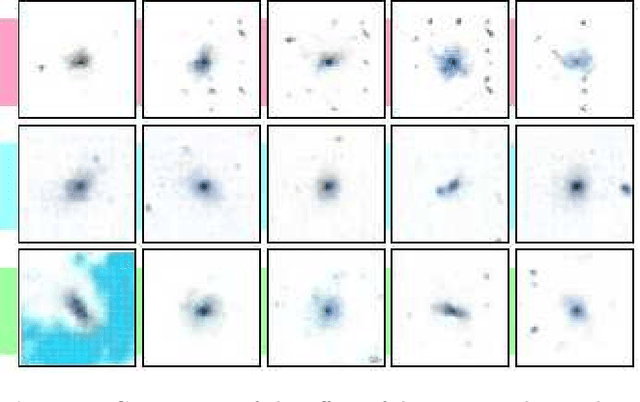

Astronomy of the 21st century increasingly finds itself with extreme quantities of data. This growth in data is ripe for modern technologies such as deep image processing, which has the potential to allow astronomers to automatically identify, classify, segment and deblend various astronomical objects. In this paper, we explore the use of chained generative adversarial networks (GANs), a class of generative models that learn mappings from latent spaces to data distributions by modelling the joint distribution of the data, to produce physically realistic galaxy images as one use case of such models. In cosmology, such datasets can aid in the calibration of shape measurements for weak lensing by augmenting data with synthetic images. By measuring the distributions of multiple physical properties, we show that images generated with our approach closely follow the distributions of real galaxies, further establishing state-of-the-art GAN architectures as a valuable tool for modern-day astronomy.
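As a rough illustration of what comparing "the distributions of multiple physical properties" could look like in practice, the sketch below computes a two-sample Kolmogorov-Smirnov statistic between a property measured on real images and the same property measured on generated ones. The abstract does not say which comparison the authors use, so the choice of statistic is an assumption, as are the function name `ks_statistic` and the beta-distributed toy samples, which merely stand in for a measured quantity such as ellipticity.

```python
import bisect
import random


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic.

    Returns the largest absolute gap between the empirical CDFs of the
    two samples: 0.0 means identical empirical distributions, 1.0 means
    the samples do not overlap at all.
    """
    a = sorted(sample_a)
    b = sorted(sample_b)
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    largest_gap = 0.0
    # Both empirical CDFs are step functions that only change at observed
    # values, so it is enough to evaluate the gap at every data point.
    for x in a + b:
        cdf_a = bisect.bisect_right(a, x) / len(a)
        cdf_b = bisect.bisect_right(b, x) / len(b)
        largest_gap = max(largest_gap, abs(cdf_a - cdf_b))
    return largest_gap


# Hypothetical toy data: not taken from the paper.
rng = random.Random(0)
real = [rng.betavariate(2, 5) for _ in range(2000)]         # "real" galaxies
close_match = [rng.betavariate(2, 5) for _ in range(2000)]  # a well-behaved generator
poor_match = [rng.betavariate(5, 2) for _ in range(2000)]   # a badly-behaved generator

d_close = ks_statistic(real, close_match)
d_poor = ks_statistic(real, poor_match)
print(f"KS statistic, close match: {d_close:.3f}")
print(f"KS statistic, poor match:  {d_poor:.3f}")
```

A small statistic indicates that the generated sample tracks the real one for that property. In a real analysis the two input lists would hold values measured on actual survey images and on generator output, and the comparison would be repeated for each property of interest.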
