Behnaz Nojavanasghari

Interactive Generative Adversarial Networks for Facial Expression Generation in Dyadic Interactions

Jan 30, 2018

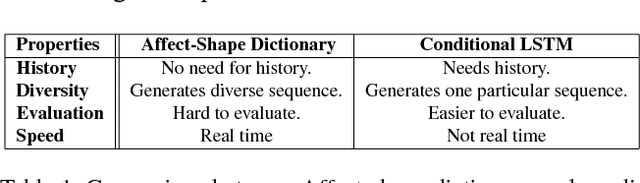

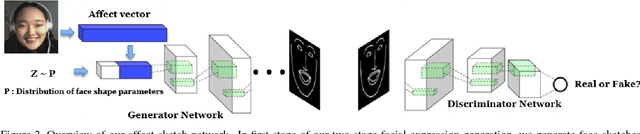

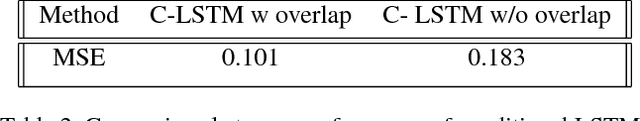

Abstract: A social interaction is a social exchange between two or more individuals, where individuals modify and adjust their behaviors in response to their interaction partners. Our social interactions are one of the most fundamental aspects of our lives and can profoundly affect our mood, both positively and negatively. With growing interest in virtual reality and avatar-mediated interactions, it is desirable to make these interactions natural and human-like to promote positive effect in the interactions and in applications such as intelligent tutoring systems, automated interview systems and e-learning. In this paper, we propose a method to generate facial behaviors for an agent. These behaviors include facial expressions and head pose, and they are generated considering the user's affective state. Our models learn semantically meaningful representations of the face and generate appropriate and temporally smooth facial behaviors in dyadic interactions.

Hand2Face: Automatic Synthesis and Recognition of Hand Over Face Occlusions

Aug 17, 2017

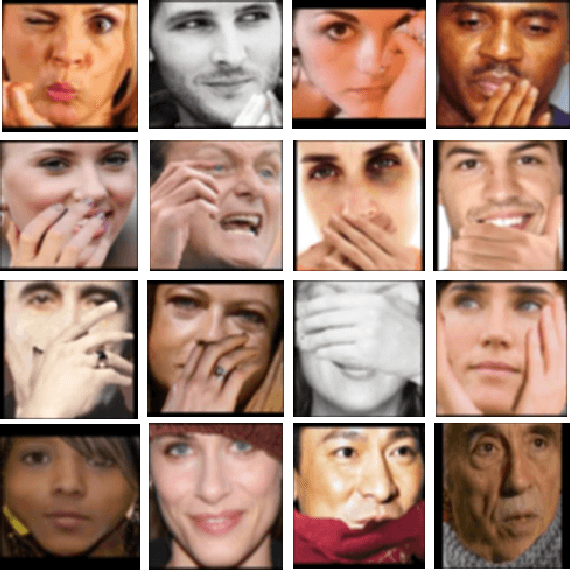

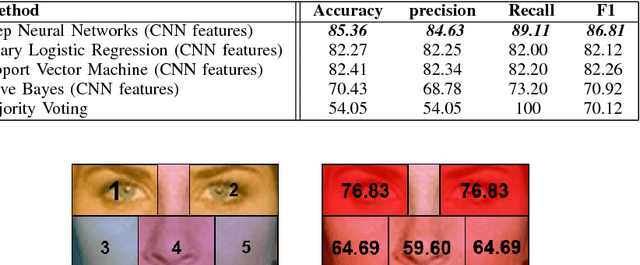

Abstract: A person's face discloses important information about their affective state. Although there has been extensive research on recognition of facial expressions, the performance of existing approaches is challenged by facial occlusions. Facial occlusions are often treated as noise and discarded in recognition of affective states. However, hand-over-face occlusions can provide additional information for recognition of some affective states such as curiosity, frustration and boredom. One reason this problem has not gained attention is the lack of naturalistic occluded faces that contain hand-over-face occlusions as well as other types of occlusions. Traditional approaches for obtaining affective data are time-consuming and expensive, which limits researchers in affective computing to working on small datasets. This limitation affects the generalizability of models and prevents researchers from taking advantage of recent advances in deep learning that have shown great success in many fields but require large volumes of data. In this paper, we first introduce a novel framework for synthesizing naturalistic facial occlusions from an initial dataset of non-occluded faces and separate images of hands, reducing the costly process of data collection and annotation. We then propose a model for facial occlusion type recognition to differentiate between hand-over-face occlusions and other types of occlusions such as scarves, hair, glasses and objects. Finally, we present a model to localize hand-over-face occlusions and identify the occluded regions of the face.
