Bassel Mabsout

Good Actors can come in Smaller Sizes: A Case Study on the Value of Actor-Critic Asymmetry

Feb 23, 2021

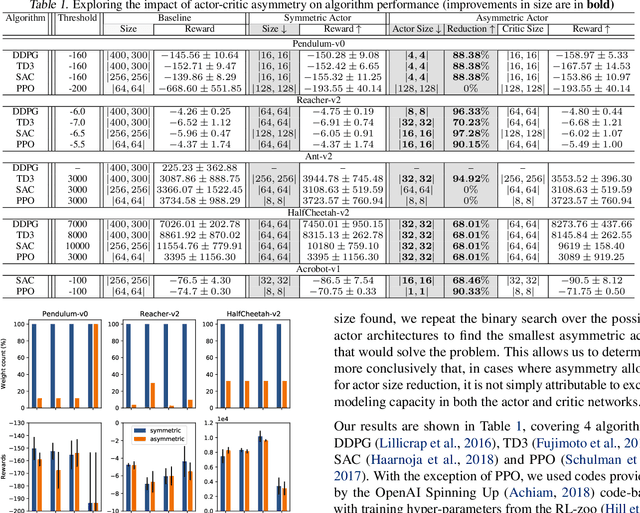

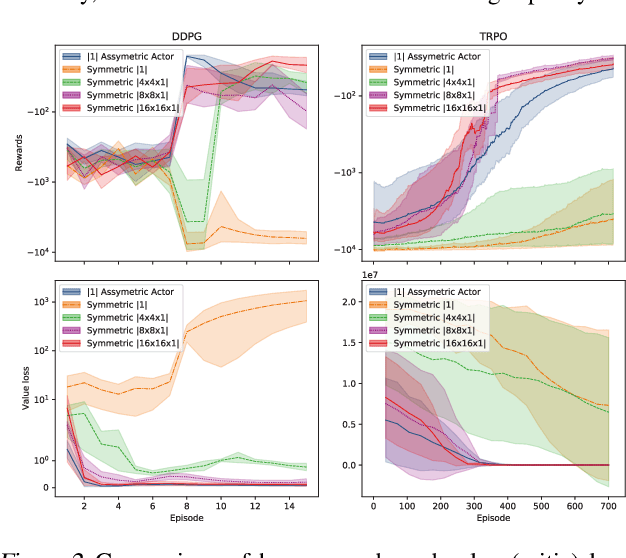

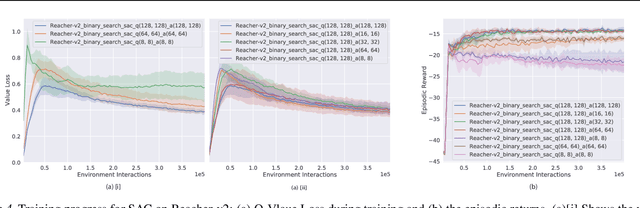

Abstract: Actors and critics in actor-critic reinforcement learning algorithms are functionally separate, yet they often use the same network architectures. This case study explores the performance impact of network sizes when considering actor and critic architectures independently. By relaxing the assumption of architectural symmetry, it is often possible for smaller actors to achieve comparable policy performance to their symmetric counterparts. Our experiments show up to 97% reduction in the number of network weights with an average reduction of 64% over multiple algorithms on multiple tasks. Given the practical benefits of reducing actor complexity, we believe configurations of actors and critics are aspects of actor-critic design that deserve to be considered independently.
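To make the notion of "reduction in the number of network weights" concrete, here is a minimal sketch that counts the parameters of a fully connected actor and compares two sizes. The observation and action dimensions and both hidden-layer widths are hypothetical values chosen for illustration, not configurations taken from the paper, and the count includes bias terms, which the abstract does not specify.

```python
def mlp_param_count(layer_sizes):
    """Count weights and biases of a fully connected network.

    layer_sizes lists the width of every layer, input first, output last.
    Each layer contributes n_in * n_out weights plus n_out biases.
    """
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def reduction_percent(baseline, smaller):
    """Percentage of parameters saved relative to the baseline."""
    return 100.0 * (baseline - smaller) / baseline


# Hypothetical task dimensions (assumptions, not from the paper).
obs_dim, act_dim = 17, 6

# Actor sized like a typical critic versus a much smaller actor.
symmetric_actor = mlp_param_count([obs_dim, 256, 256, act_dim])
small_actor = mlp_param_count([obs_dim, 32, 32, act_dim])

saving = reduction_percent(symmetric_actor, small_actor)
print(f"{symmetric_actor} -> {small_actor} parameters ({saving:.1f}% fewer)")
```

Because the hidden-to-hidden layer grows quadratically with width, shrinking the hidden layers removes most of the parameters even though the input and output dimensions are unchanged.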

How to Train your Quadrotor: A Framework for Consistently Smooth and Responsive Flight Control via Reinforcement Learning

Dec 11, 2020

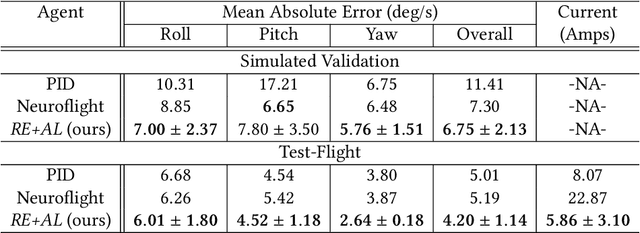

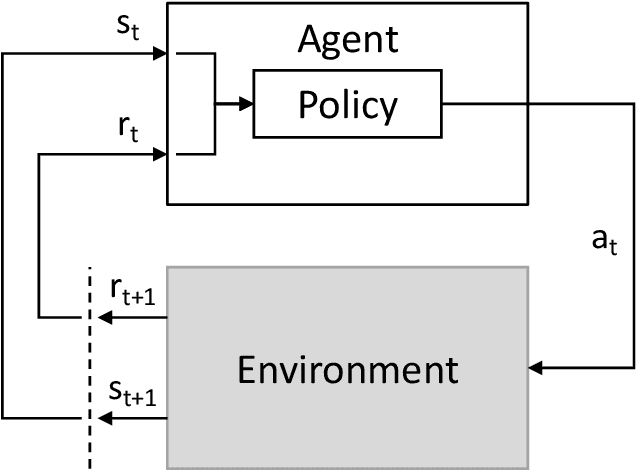

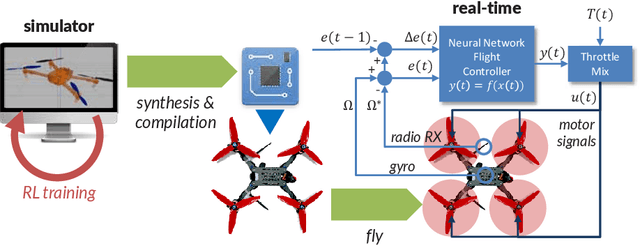

Abstract: We focus on the problem of reliably training Reinforcement Learning (RL) models (agents) for stable low-level control in embedded systems and test our methods on a high-performance, custom-built quadrotor platform. A common but often under-studied problem in developing RL agents for continuous control is that the control policies developed are not always smooth. This lack of smoothness can be a major problem when learning controllers intended for deployment on real hardware, as it can result in control instability and hardware failure. Issues of noisy control are further accentuated when training RL agents in simulation because simulators are ultimately imperfect representations of reality, a discrepancy known as the reality gap. To combat issues of instability in RL agents, we propose a systematic framework, "REinforcement-based transferable Agents through Learning" (RE+AL), for designing simulated training environments which preserve the quality of trained agents when transferred to real platforms. RE+AL is an evolution of the Neuroflight infrastructure detailed in technical reports prepared by members of our research group. Neuroflight is a state-of-the-art framework for training RL agents for low-level attitude control. RE+AL improves and completes Neuroflight by addressing a number of important limitations that hindered the deployment of Neuroflight to real hardware. We benchmark RE+AL on the NF1 racing quadrotor developed as part of Neuroflight. We demonstrate that RE+AL significantly mitigates the previously observed issues of smoothness in RL agents. Additionally, RE+AL is shown to consistently train agents that are flight-capable, with minimal degradation in controller quality upon transfer. RE+AL agents also learn to perform better than a tuned PID controller, with lower tracking error, smoother control, and reduced power consumption.

Regularizing Action Policies for Smooth Control with Reinforcement Learning

Dec 11, 2020

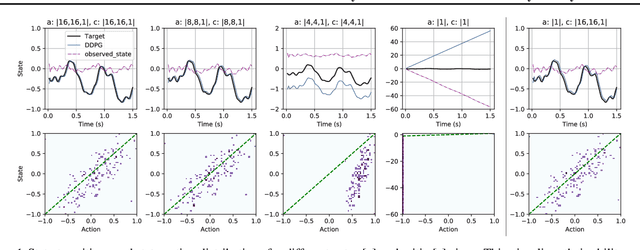

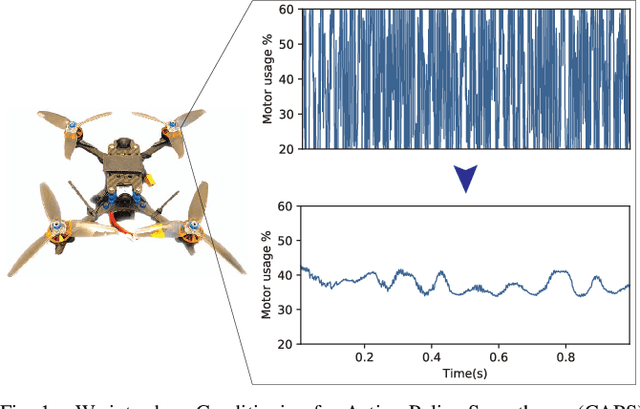

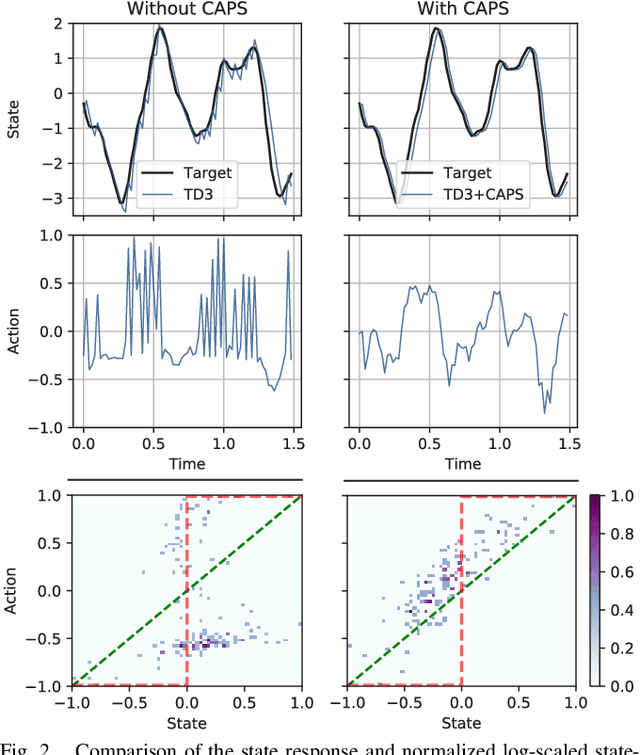

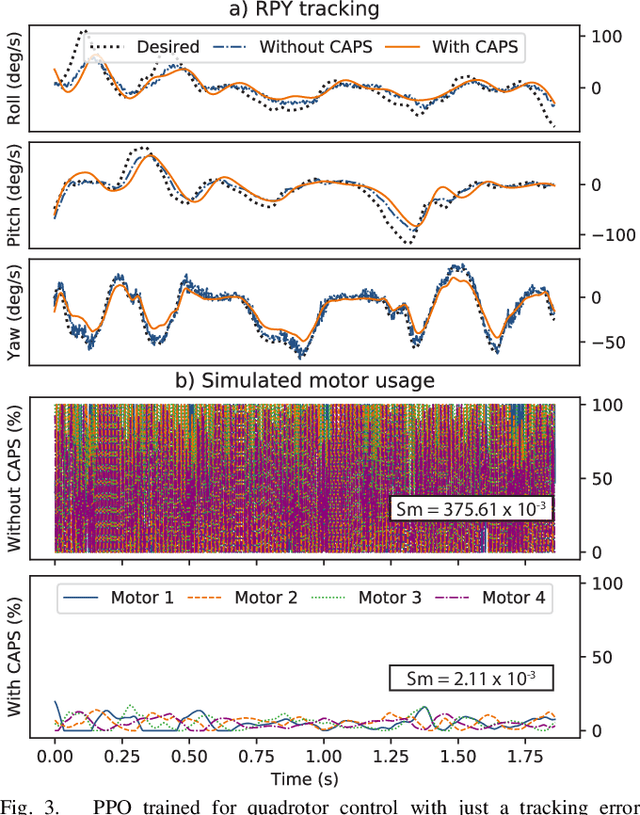

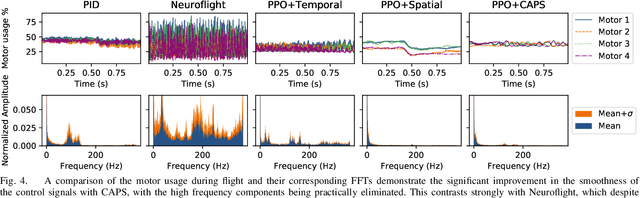

Abstract: A critical problem with the practical utility of controllers trained with deep Reinforcement Learning (RL) is the notable lack of smoothness in the actions learned by the RL policies. This trend often presents itself in the form of control signal oscillation and can result in poor control, high power consumption, and undue system wear. We introduce Conditioning for Action Policy Smoothness (CAPS), an effective yet intuitive regularization on action policies, which offers consistent improvement in the smoothness of the learned state-to-action mappings of neural network controllers, reflected in the elimination of high-frequency components in the control signal. Tested on a real system, improvements in controller smoothness on a quadrotor drone resulted in an almost 80% reduction in power consumption while consistently training flight-worthy controllers. Project website: http://ai.bu.edu/caps
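The abstract does not spell out the form of the regularization, so the following is only a sketch of one way a smoothness penalty on a state-to-action mapping could be written: it penalizes the distance between actions on consecutive states and between actions on a state and a randomly perturbed copy of it. The function names, the Gaussian perturbation, the weights `lam_t` and `lam_s`, and the toy policy are all assumptions made for illustration and should not be read as the CAPS implementation.

```python
import math
import random


def l2(a, b):
    """Euclidean distance between two equal-length action vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def smoothness_penalty(policy, state, next_state,
                       sigma=0.05, lam_t=1.0, lam_s=1.0, rng=random):
    """Illustrative smoothness penalty (assumed form, not from the abstract).

    temporal term: how much the action changes between consecutive states
    spatial term:  how much the action changes for a nearby, perturbed state
    """
    action = policy(state)
    temporal = l2(action, policy(next_state))
    nearby = [s + rng.gauss(0.0, sigma) for s in state]
    spatial = l2(action, policy(nearby))
    return lam_t * temporal + lam_s * spatial


def toy_policy(state):
    """Hypothetical one-dimensional policy used only to exercise the penalty."""
    return [math.tanh(0.5 * state[0] - 0.2 * state[1])]


penalty = smoothness_penalty(toy_policy, [0.1, 0.3], [0.12, 0.28],
                             rng=random.Random(0))
print(f"smoothness penalty: {penalty:.4f}")
```

In a training loop, a term like this would be added to the policy loss so that the optimizer trades task reward against action smoothness; a policy whose output does not depend on the state receives a penalty of zero.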
