Baptiste Abélès

Linear Bandits with Non-i.i.d. Noise

May 26, 2025

Abstract: We study the linear stochastic bandit problem, relaxing the standard i.i.d. assumption on the observation noise. As an alternative to this restrictive assumption, we allow the noise terms across rounds to be sub-Gaussian but interdependent, with dependencies that decay over time. To address this setting, we develop new confidence sequences using a recently introduced reduction scheme to sequential probability assignment, and use these to derive a bandit algorithm based on the principle of optimism in the face of uncertainty. We provide regret bounds for the resulting algorithm, expressed in terms of the decay rate of the strength of dependence between observations. Among other results, we show that our bounds recover the standard rates up to a factor of the mixing time for geometrically mixing observation noise.

Online-to-PAC generalization bounds under graph-mixing dependencies

Oct 11, 2024

Abstract: Traditional generalization results in statistical learning require a training data set made of independently drawn examples. Most of the recent efforts to relax this independence assumption have considered either purely temporal (mixing) dependencies, or graph-dependencies, where non-adjacent vertices correspond to independent random variables. Both approaches have their own limitations, the former requiring a temporally ordered structure, and the latter lacking a way to quantify the strength of inter-dependencies. In this work, we bridge these two lines of work by proposing a framework where dependencies decay with graph distance. We derive generalization bounds within the online-to-PAC framework by establishing a concentration result and introducing an online learning framework that incorporates the graph structure. The resulting high-probability generalization guarantees depend on both the mixing rate and the graph's chromatic number.

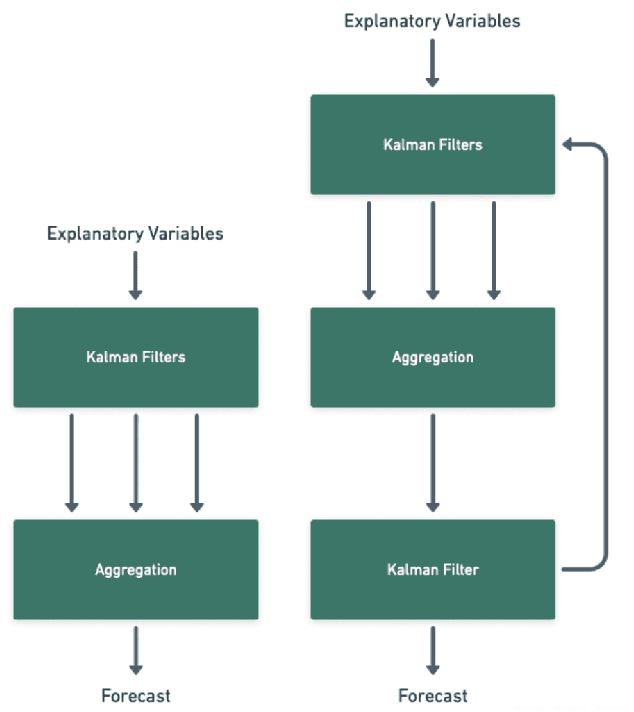

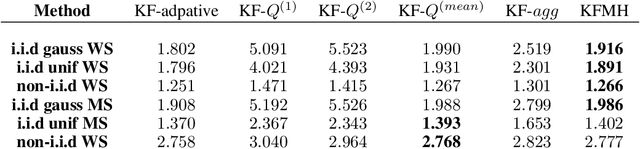

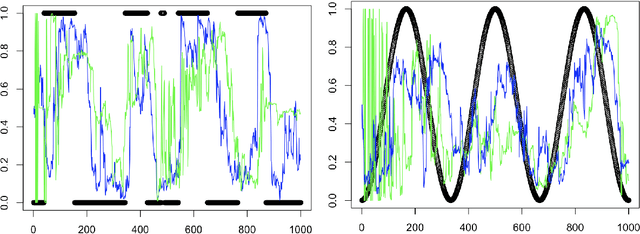

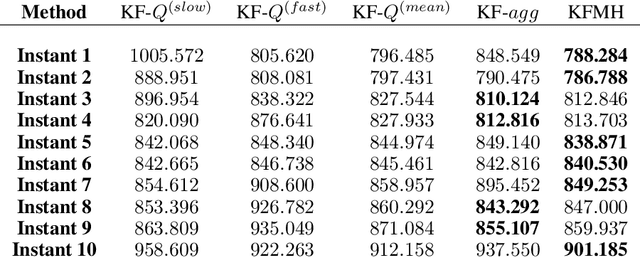

Adaptive time series forecasting with markovian variance switching

Feb 22, 2024

Abstract: Adaptive time series forecasting is essential for prediction under regime changes. Several classical methods assume a linear Gaussian state-space model (LGSSM) with variances that are constant in time. However, many real-world processes cannot be captured by such models. We consider a state-space model with Markov-switching variances. Such dynamical systems are usually intractable because their computational complexity grows exponentially with time; Variational Bayes (VB) techniques have been applied to this problem. In this paper, we propose a new way of estimating variances based on online learning theory: we adapt expert aggregation methods to learn the variances over time. We apply the proposed method to synthetic data and to the problem of electricity load forecasting. We show that this method is robust to misspecification and outperforms traditional expert aggregation.
