Baoli Li

A Unified Search and Recommendation Framework Based on Multi-Scenario Learning for Ranking in E-commerce

May 17, 2024

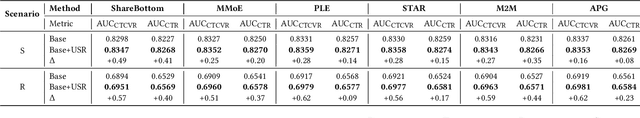

Abstract: Search and recommendation (S&R) are the two most important scenarios in e-commerce. The majority of users typically interact with products in S&R scenarios, indicating the need and potential for joint modeling. Traditional multi-scenario models use shared parameters to learn the similarity of multiple tasks, and task-specific parameters to learn the divergence of individual tasks. This coarse-grained modeling approach does not effectively capture the differences between S&R scenarios. Furthermore, this approach does not sufficiently exploit the information across the global label space. These issues can result in the suboptimal performance of multi-scenario models in handling both S&R scenarios. To address these issues, we propose an effective and universal framework for Unified Search and Recommendation (USR), designed with S&R Views User Interest Extractor Layer (IE) and S&R Views Feature Generator Layer (FG) to separately generate user interests and scenario-agnostic feature representations for S&R. Next, we introduce a Global Label Space Multi-Task Layer (GLMT) that uses global labels as supervised signals of auxiliary tasks and jointly models the main task and auxiliary tasks using conditional probability. Extensive experimental evaluations on real-world industrial datasets show that USR can be applied to various multi-scenario models and significantly improve their performance. Online A/B testing also indicates substantial performance gains across multiple metrics. Currently, USR has been successfully deployed in the 7Fresh App.

An Improved k-Nearest Neighbor Algorithm for Text Categorization

Jun 16, 2003

Abstract: k is the most important parameter in a text categorization system based on the k-Nearest Neighbor algorithm (kNN). In the classification process, the k documents in the training set nearest to the test document are determined first. Then, the prediction can be made according to the category distribution among these k nearest neighbors. Generally speaking, the class distribution in the training set is uneven. Some classes may have more samples than others. Therefore, the system performance is very sensitive to the choice of the parameter k, and it is very likely that a fixed k value will result in a bias toward large categories. To deal with these problems, we propose an improved kNN algorithm, which uses different numbers of nearest neighbors for different categories, rather than a fixed number across all categories. More samples (nearest neighbors) will be used for deciding whether a test document should be classified into a category that has more samples in the training set. Preliminary experiments on Chinese text categorization show that our method is less sensitive to the parameter k than the traditional one, and it can properly classify documents belonging to smaller classes with a large k. The method is promising for cases where estimating the parameter k via cross-validation is not allowed.
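The idea of using a different number of neighbors per category can be illustrated with a minimal Python sketch. The abstract does not give the exact rule, so everything specific here is an assumption: the helper names (`cosine`, `per_category_k`, `classify`), the choice to scale k by each category's size relative to the largest category, and the similarity-weighted score are illustrative only and may differ from the paper's formulation.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two sparse term-weight dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def per_category_k(labels, k):
    """Assumed rule: shrink k in proportion to category size (at least 1)."""
    sizes = Counter(labels)
    largest = max(sizes.values())
    return {c: max(1, math.ceil(k * n / largest)) for c, n in sizes.items()}


def classify(train_docs, train_labels, test_doc, k):
    """Score each category using only its own top-k_c nearest neighbors."""
    # Rank all training documents by similarity to the test document.
    ranked = sorted(
        ((cosine(test_doc, d), y) for d, y in zip(train_docs, train_labels)),
        key=lambda pair: pair[0],
        reverse=True,
    )
    scores = {}
    for cat, k_c in per_category_k(train_labels, k).items():
        top = ranked[:k_c]
        total = sum(s for s, _ in top)
        hits = sum(s for s, y in top if y == cat)
        # Share of similarity mass in the top-k_c list that belongs to cat.
        scores[cat] = hits / total if total else 0.0
    return max(scores, key=scores.get), scores
```

With six training documents in a large category and two in a small one, a test document identical to the small-category documents is outvoted under a fixed k = 8, whereas this sketch judges the small category on its three nearest neighbors only, so it can still win.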
