Avraam Bardos

Local Interpretability of Random Forests for Multi-Target Regression

Mar 29, 2023

Abstract: Multi-target regression is useful in a plethora of applications. Although random forest models perform well in these tasks, they are often difficult to interpret. Interpretability is crucial in machine learning, especially when it can directly impact human well-being. Although model-agnostic techniques exist for multi-target regression, specific techniques tailored to random forest models are not available. To address this issue, we propose a technique that provides rule-based interpretations for instances made by a random forest model for multi-target regression, influenced by a recent model-specific technique for random forest interpretability. The proposed technique was evaluated through extensive experiments and shown to offer competitive interpretations compared to state-of-the-art techniques.

Local Explanation of Dimensionality Reduction

Apr 29, 2022

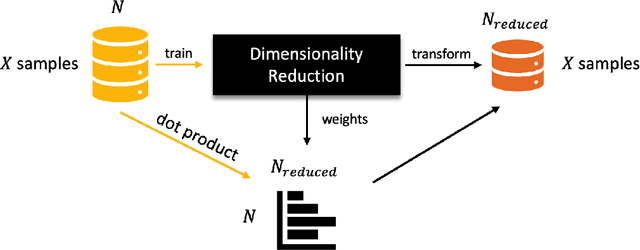

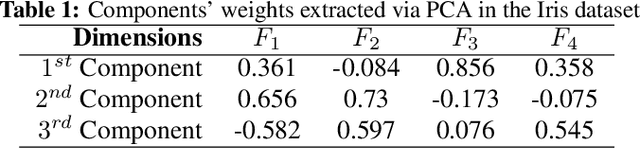

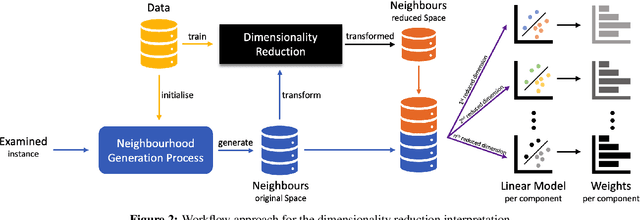

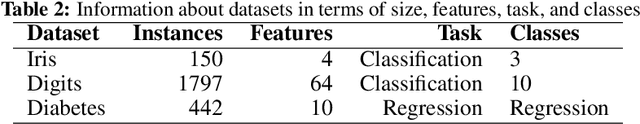

Abstract: Dimensionality reduction (DR) is a popular method for preparing and analyzing high-dimensional data. Reduced data representations are less computationally intensive and easier to manage and visualize, while retaining a significant percentage of their original information. Despite these advantages, reduced representations can be difficult or impossible to interpret in most circumstances, especially when the DR approach does not indicate which features of the original space led to their construction. This problem falls within Interpretable Machine Learning, a subfield of Explainable Artificial Intelligence that addresses the opacity of machine learning models. However, current research on Interpretable Machine Learning has focused on supervised tasks, leaving unsupervised tasks such as dimensionality reduction unexplored. In this paper, we introduce LXDR, a technique capable of providing local interpretations of the output of DR techniques. Experimental results and two LXDR use case examples are presented to evaluate its usefulness.
