Atta Norouzian

A new approach for fine-tuning sentence transformers for intent classification and out-of-scope detection tasks

Oct 17, 2024

Abstract: In virtual assistant (VA) systems, it is important to reject or redirect user queries that fall outside the scope of the system. One of the most accurate approaches for out-of-scope (OOS) rejection is to combine it with the task of intent classification on in-scope queries, and to use methods based on the similarity of embeddings produced by transformer-based sentence encoders. Typically, such encoders are fine-tuned for the intent-classification task using cross-entropy loss. Recent work has shown that while this produces suitable embeddings for the intent-classification task, it also tends to disperse in-scope embeddings over the full sentence embedding space. As a result, the in-scope embeddings can overlap with OOS embeddings, which makes OOS rejection difficult. The problem is compounded when OOS data is unknown. To mitigate this issue, our work proposes to regularize the cross-entropy loss with an in-scope embedding reconstruction loss learned using an auto-encoder. Our method achieves a 1-4% improvement in the area under the precision-recall curve for rejecting out-of-scope (OOS) instances, without compromising intent classification performance.
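
The regularized objective described in the abstract can be sketched roughly as follows. This is only an illustration under stated assumptions: the abstract does not give the exact form of the reconstruction loss or how it is weighted, so the mean-squared-error penalty, the additive combination, and the `weight` parameter below are all hypothetical choices, as are the function names.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Cross-entropy for one example, computed from raw intent logits."""
    # Subtract the max logit before exponentiating for numerical stability.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def reconstruction_loss(embedding: Sequence[float],
                        reconstruction: Sequence[float]) -> float:
    """Mean squared error between an embedding and its auto-encoder output.

    Assumption: MSE is used here for illustration; the source does not say
    which reconstruction loss the authors use.
    """
    squared = [(e - r) ** 2 for e, r in zip(embedding, reconstruction)]
    return sum(squared) / len(embedding)


def regularized_loss(logits: Sequence[float], label: int,
                     embedding: Sequence[float],
                     reconstruction: Sequence[float],
                     weight: float = 0.1) -> float:
    """Cross-entropy plus a weighted reconstruction penalty.

    Assumption: `weight` and the additive form are hypothetical.
    """
    return (cross_entropy(logits, label)
            + weight * reconstruction_loss(embedding, reconstruction))
```

In this sketch, an in-scope embedding that the auto-encoder reconstructs poorly raises the total loss, so the encoder is nudged toward embeddings that stay within the region the auto-encoder has learned.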

Exploring attention mechanism for acoustic-based classification of speech utterances into system-directed and non-system-directed

Feb 01, 2019

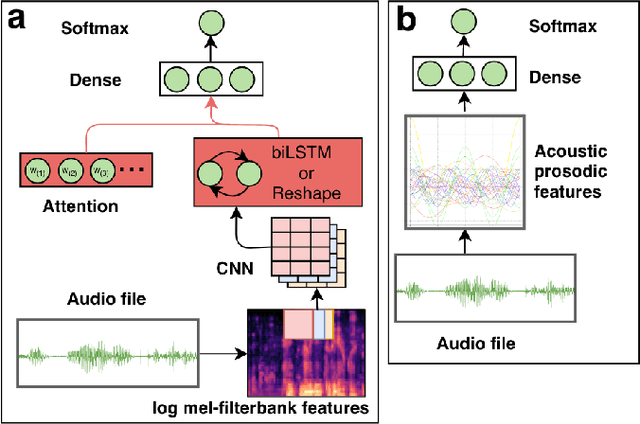

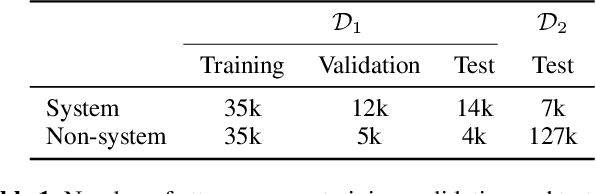

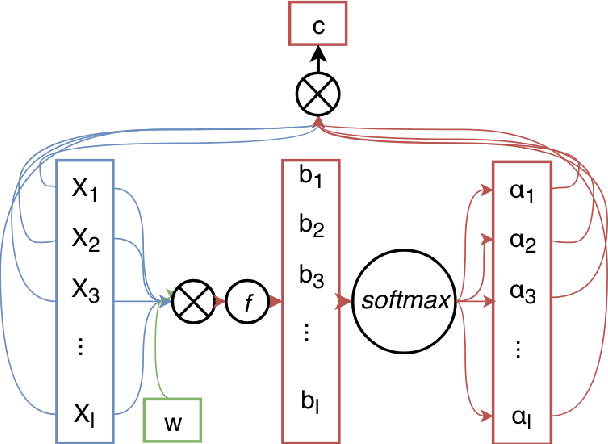

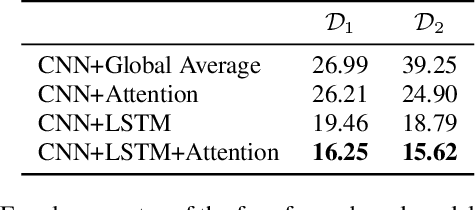

Abstract: Voice-controlled virtual assistants (VAs) are now available in smartphones, cars, and standalone devices in homes. In most cases, the user needs to first "wake up" the VA by saying a particular word or phrase every time he or she wants the VA to do something. Eliminating the need to say the wake-up word for every interaction could improve the user experience. This would require the VA to detect speech that is directed at it and respond accordingly. In other words, the challenge is to distinguish between system-directed and non-system-directed speech utterances. In this paper, we present a number of neural network architectures for tackling this classification problem using only acoustic features. These architectures are based on convolutional, recurrent, and feed-forward layers. In addition, we investigate the use of an attention mechanism applied to the output of the convolutional and the recurrent layers. It is shown that incorporating the proposed attention mechanism into the models always leads to significant improvement in classification accuracy. The best model achieved equal error rates of 16.25% and 15.62% on two distinct realistic datasets.
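
To make the idea of attention over layer outputs concrete, the sketch below pools a sequence of frame-level vectors into one utterance-level vector using softmax weights. It is a generic attention-pooling illustration, not the paper's specific mechanism: the dot-product scoring against a `query` vector (which would be learned in practice) and the names `softmax` and `attention_pool` are assumptions.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(frames: Sequence[Sequence[float]],
                   query: Sequence[float]) -> List[float]:
    """Weighted average of frame vectors.

    Assumption: each frame is scored by its dot product with `query`,
    a hypothetical stand-in for a learned parameter vector.
    """
    scores = [sum(f * q for f, q in zip(frame, query)) for frame in frames]
    weights = softmax(scores)
    dim = len(frames[0])
    return [sum(w * frame[d] for w, frame in zip(weights, frames))
            for d in range(dim)]
```

With a zero `query`, every frame gets equal weight and the result is a plain average; a `query` that aligns strongly with some frames lets those frames dominate the pooled vector that the classifier would then consume.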
