Ashish D. Deshpande

Human-Exoskeleton Kinematic Calibration to Improve Hand Tracking for Dexterous Teleoperation

Jul 31, 2025

Abstract: Hand exoskeletons are critical tools for dexterous teleoperation and immersive manipulation interfaces, but achieving accurate hand tracking remains a challenge due to user-specific anatomical variability and donning inconsistencies. These issues lead to kinematic misalignments that degrade tracking performance and limit applicability in precision tasks. We propose a subject-specific calibration framework for exoskeleton-based hand tracking that uses redundant joint sensing and a residual-weighted optimization strategy to estimate virtual link parameters. Implemented on the Maestro exoskeleton, our method improves joint angle and fingertip position estimation across users with varying hand geometries. We introduce a data-driven approach to empirically tune cost function weights using motion capture ground truth, enabling more accurate and consistent calibration across participants. Quantitative results from seven subjects show substantial reductions in joint and fingertip tracking errors compared to uncalibrated and evenly weighted models. Qualitative visualizations using a Unity-based virtual hand further confirm improvements in motion fidelity. The proposed framework generalizes across exoskeleton designs with closed-loop kinematics and minimal sensing, and lays the foundation for high-fidelity teleoperation and learning-from-demonstration applications.
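To make the idea of a weighted residual cost concrete, here is a minimal Python sketch. It is not the paper's method: it assumes a toy linear model in which each redundant sensor reading `y[i]` relates to a single unknown link parameter `p` through a known coefficient `a[i]`, and it minimizes the weighted sum of squared residuals in closed form. The function names, the linear model, and the weights are all hypothetical.

```python
def weighted_cost(p, a, y, w):
    """Weighted sum of squared residuals for a candidate parameter p.

    Toy model (an assumption, not the paper's kinematics): y[i] ~ a[i] * p.
    """
    return sum(wi * (yi - ai * p) ** 2 for ai, yi, wi in zip(a, y, w))


def fit_link_parameter(a, y, w):
    """Closed-form minimizer of weighted_cost for the toy linear model.

    Setting the derivative of the cost to zero gives
    p = sum(w*a*y) / sum(w*a*a).
    """
    num = sum(wi * ai * yi for ai, yi, wi in zip(a, y, w))
    den = sum(wi * ai * ai for ai, wi in zip(a, w))
    if den == 0:
        raise ValueError("weights and coefficients give no information about p")
    return num / den


if __name__ == "__main__":
    a = [1.0, 2.0, 3.0]   # hypothetical model coefficients
    y = [2.0, 4.0, 9.0]   # hypothetical readings; the third one is an outlier
    even = fit_link_parameter(a, y, [1.0, 1.0, 1.0])
    tuned = fit_link_parameter(a, y, [1.0, 1.0, 0.0])
    print(even, tuned)
```

Down-weighting the unreliable third reading pulls the estimate back toward the value implied by the other two, which is the intuition behind tuning cost weights rather than weighting every residual evenly.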

BiFlex: A Passive Bimodal Stiffness Flexible Wrist for Manipulation in Unstructured Environments

Apr 11, 2025

Abstract: Robotic manipulation in unstructured, human-centric environments poses a dual challenge: achieving the precision needed for delicate free-space operation while ensuring safety during unexpected contact events. Traditional wrists struggle to balance these demands, often relying on complex control schemes or complicated mechanical designs to mitigate potential damage from force overload. In response, we present BiFlex, a flexible robotic wrist that uses a soft buckling honeycomb structure to provide a natural bimodal stiffness response. The higher stiffness mode enables precise household object manipulation, while the lower stiffness mode provides the compliance needed to adapt to external forces. We design BiFlex to maintain a fingertip deflection of less than 1 cm while supporting loads up to 500 g, and we create BiFlex wrists for multiple grippers, including Panda, Robotiq, and BaRiFlex. We validate BiFlex in several real-world experimental evaluations, including surface wiping, precise pick-and-place, and grasping under environmental constraints. We demonstrate that BiFlex simplifies control while maintaining precise object manipulation and enhanced safety in real-world applications.
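A bimodal stiffness response can be pictured as a piecewise-linear force-deflection curve: stiff below a buckling threshold, compliant above it. The Python sketch below illustrates only that idea. The stiffness values, the buckling force, and the function name are hypothetical placeholders chosen for illustration, not measurements or models from the paper.

```python
def deflection(force_n, k_high=1000.0, k_low=100.0, buckling_force_n=5.0):
    """Deflection (m) of an idealized bimodal-stiffness element.

    Assumed toy model: a spring of stiffness k_high (N/m) up to the
    buckling force, then a softer spring of stiffness k_low (N/m) for
    any force beyond it. All default values are hypothetical.
    """
    if force_n < 0:
        raise ValueError("force must be non-negative in this sketch")
    if force_n <= buckling_force_n:
        # High-stiffness mode: small deflection, suited to precise motion.
        return force_n / k_high
    # Low-stiffness mode: the structure has buckled and yields to the load.
    return buckling_force_n / k_high + (force_n - buckling_force_n) / k_low


if __name__ == "__main__":
    for f in (2.0, 5.0, 7.0):
        print(f"{f:.1f} N -> {deflection(f) * 1000:.1f} mm")
```

With these placeholder values, loads below the threshold move the tip by only a few millimeters, while an overload produces a much larger deflection per newton, which is the passive safety behavior the abstract describes.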

BaRiFlex: A Robotic Gripper with Versatility and Collision Robustness for Robot Learning

Dec 08, 2023

Abstract: We present a new approach to robot hand design specifically suited to implementing robot learning methods for tasks in daily human environments. We introduce BaRiFlex, a gripper design that alleviates the issues caused by unexpected contact and collisions during robot learning, offering robustness, grasping versatility, task versatility, and simplicity to the learning process. This is enabled by low-inertia actuators, which provide high Back-drivability, and by a strategic combination of Rigid and Flexible materials, which enhances versatility and the gripper's resilience against unpredicted collisions. Furthermore, the integration of flexible Fin-Ray linkages and rigid linkages allows the gripper to execute both compliant grasping and precise pinching. We conducted performance tests to characterize the gripper's compliance, durability, grasping and task versatility, and precision. We also integrated BaRiFlex with a 7 degree-of-freedom (DoF) Franka Emika Panda robotic arm to evaluate its capacity to support a trial-and-error (reinforcement learning) training procedure. The results of our experimental study are compared to those obtained using the original rigid Franka Hand and a reference Fin-Ray soft gripper, demonstrating the advantages of the developed gripper system.

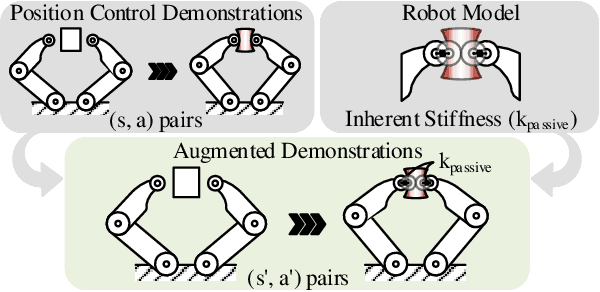

SCAPE: Learning Stiffness Control from Augmented Position Control Experiences

Feb 16, 2021

Abstract: We introduce a sample-efficient method for learning state-dependent stiffness control policies for dexterous manipulation. The ability to control stiffness facilitates safe and reliable manipulation by providing compliance and robustness to uncertainties. Most current reinforcement learning approaches to robotic manipulation have focused exclusively on position control, often due to the difficulty of learning high-dimensional stiffness control policies. This difficulty can be partially mitigated via policy guidance, such as in imitation learning. However, expert stiffness control demonstrations are often expensive or infeasible to record. Therefore, we present an approach to learn Stiffness Control from Augmented Position control Experiences (SCAPE) that bypasses this difficulty by transforming position control demonstrations into approximate, suboptimal stiffness control demonstrations. The suboptimality of the augmented demonstrations is then addressed by using complementary techniques that help the agent safely learn from both the demonstrations and reinforcement learning. Using simulation tools and experiments on a robotic testbed, we show that the proposed approach efficiently learns safe manipulation policies and outperforms learned position control policies and several other baseline learning algorithms.
