Asad Munir

Resolution based Feature Distillation for Cross Resolution Person Re-Identification

Sep 16, 2021

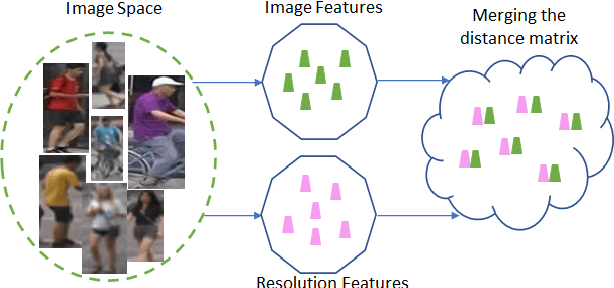

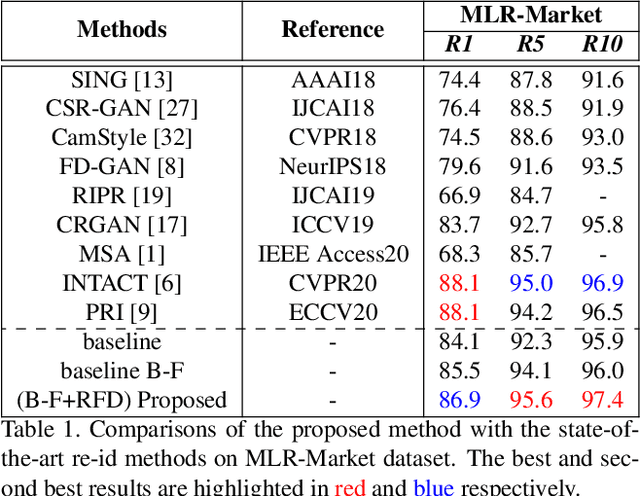

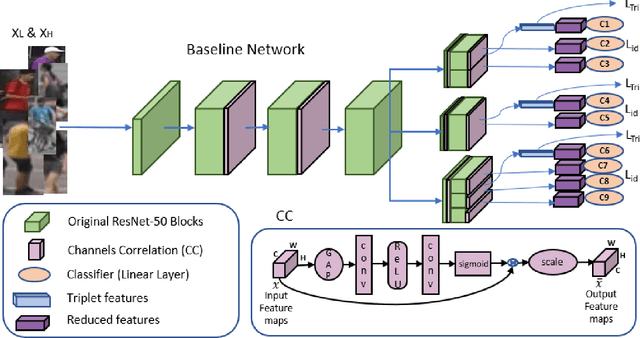

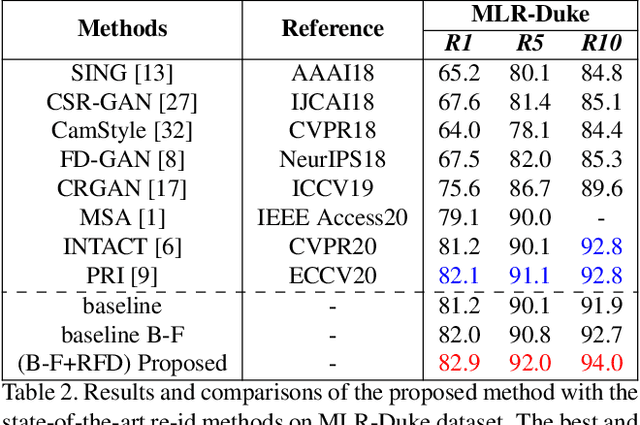

Abstract: Person re-identification (re-id) aims to retrieve images of the same identity across different camera views. Resolution mismatch occurs due to varying distances between the person of interest and the cameras, and it significantly degrades re-id performance in real-world scenarios. Most existing approaches treat re-id as a low-resolution problem in which a low-resolution query image is searched for in a gallery of high-resolution images. Several approaches apply image super-resolution techniques to produce high-resolution images but ignore the fact that gallery images may have multiple resolutions, which is a more realistic scenario. In this paper, we introduce channel correlations to improve the learning of features from degraded data. In addition, to overcome the problem of multiple resolutions, we propose a Resolution based Feature Distillation (RFD) approach. This approach learns resolution-invariant features by filtering resolution-related features out of the final feature vectors that are used to compute the distance matrix. We tested the proposed approach on two synthetically created datasets and on one original multi-resolution dataset with real degradation. Our approach improves performance when multiple resolutions occur in the gallery and achieves comparable results in the single-resolution case (low-resolution re-id).

A Deep Residual Star Generative Adversarial Network for multi-domain Image Super-Resolution

Jul 07, 2021

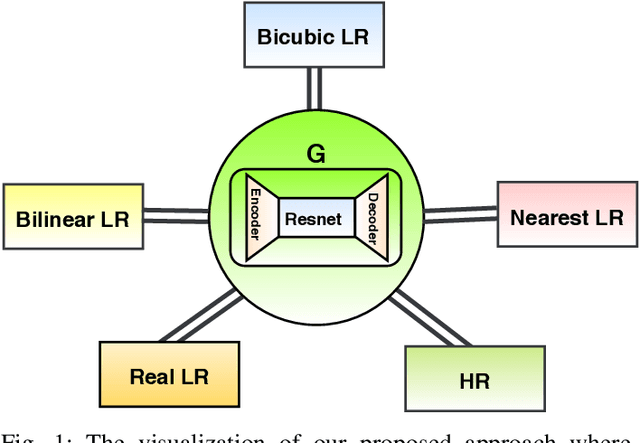

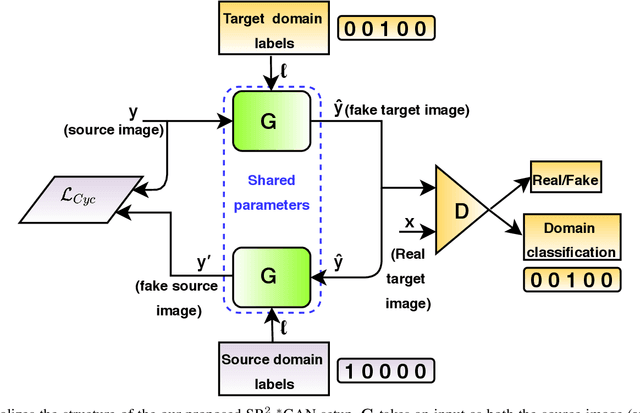

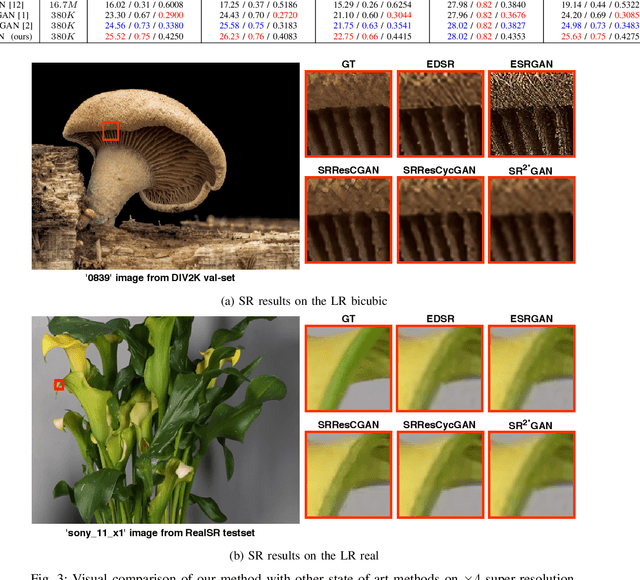

Abstract: Recently, most state-of-the-art single image super-resolution (SISR) methods have attained impressive performance by using deep convolutional neural networks (DCNNs). However, existing SR methods have limited performance because they assume a fixed degradation setting, usually bicubic downscaling of the low-resolution (LR) image. In real-world settings, the LR degradation process is unknown and can be bicubic LR, bilinear LR, nearest-neighbor LR, or real LR. Therefore, most SR methods are ineffective and inefficient at handling more than one degradation setting within a single network. To handle multiple degradations, i.e., multi-domain image super-resolution, we propose a deep Super-Resolution Residual StarGAN (SR2*GAN), a novel and scalable approach that super-resolves LR images from multiple LR domains using only a single model. The proposed scheme is trained in a StarGAN-like network topology with a single generator network and a single discriminator network. We demonstrate the effectiveness of our proposed approach in quantitative and qualitative experiments compared to other state-of-the-art methods.